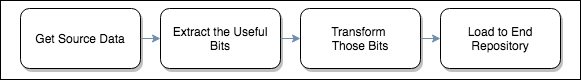

The aim of this chapter is to demonstrate how to load data from its raw format onto different schemas, thereby enabling a variety of downstream analytics to be run over the same data. When writing analytics or, even better, building libraries of reusable software, you generally have to work with interfaces that accept fixed input types. Flexibility in how you transition data between schemas, depending on the purpose, can therefore deliver considerable downstream value, both by widening the types of analysis possible and by enabling the reuse of existing code.

Our primary objective is to learn about the data format features that accompany Spark, although we will also delve into the finer points of data management by introducing proven methods that will improve your data handling and increase your productivity. After all, you will most likely be required to formalize your work at some point, and an introduction to how to avoid...