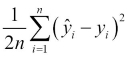

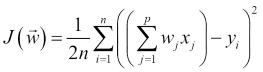

So far, we've looked at two of the best-known methods used for predictive modeling. Linear regression is probably the most typical starting point for problems where the goal is to predict a numerical quantity. The model is based on a linear combination of input features. Logistic regression applies a nonlinear transformation to this linear feature combination in order to restrict the output to the interval [0,1]. In so doing, it predicts the probability that the output belongs to one of two classes. Thus, it is a very well-known technique for classification.
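As a quick reminder of how the two models relate, here is a minimal sketch in plain Python. The weights, bias, and feature values are made up purely for illustration, and the function names `linear_combination` and `logistic` are our own rather than part of any library. The 0.5 cutoff used to pick a class is a common convention, assumed here.

```python
import math

def linear_combination(weights, bias, features):
    """Linear regression prediction: bias plus a weighted sum of the inputs."""
    return bias + sum(w * x for w, x in zip(weights, features))

def logistic(z):
    """Logistic (sigmoid) transformation: squashes any real number into the unit interval."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients and one observation with two input features.
weights = [0.8, -0.5]
bias = 0.1
features = [2.0, 1.0]

# Linear regression would output z directly as the numerical prediction.
z = linear_combination(weights, bias, features)

# Logistic regression passes the same linear combination through the
# logistic function and reads the result as a class probability.
p = logistic(z)

# Assumed decision rule: predict class 1 when the probability is at least 0.5.
label = 1 if p >= 0.5 else 0

print(f"linear output: {z:.3f}, probability: {p:.3f}, predicted class: {label}")
```

The only difference between the two predictions is the final transformation: the linear output is unbounded, whereas the transformed output can be interpreted as a probability.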

Both methods share the disadvantage that they are not robust when dealing with many input features. In addition, logistic regression is typically used for the binary classification problem. In this chapter, we will introduce the concept of neural networks, a nonlinear approach to solving both regression and classification problems. They are significantly more robust when dealing with a higher...