Chapter 13. Extending Splunk

While the core of Splunk is closed, there are a number of places where you can use scripts or external code to extend default behaviors. In this chapter, we will write a number of examples, covering most of the places where external code can be added. Most code samples are written in Python, so if you are not familiar with Python, a reference may be useful.

We will cover the following topics:

Writing scripts to create events

Using Splunk from the command line

Calling Splunk via REST

Writing custom search commands

Writing event type renderers

Writing custom search action scripts

The examples used in this chapter are included in the ImplementingSplunkExtendingExamples app, which can be downloaded from the support page of the Packt Publishing website at www.packtpub.com/support.

In addition, we will define Hunk and give an overview of it.

Writing a scripted input to gather data

Scripted inputs allow you to run a piece of code on a scheduled basis and capture the output as if it were simply being written to a file. It does not matter what language the script is written in or where it lives, as long as it is executable.

We touched on this topic in the Using scripts to gather data section in Chapter 12, Advanced Deployments. Let's write a few more examples.

Capturing script output with no date

One common problem with script output is the lack of a predictable date or date format. In this situation, the easiest thing to do is to tell Splunk not to try to parse a date at all and instead use the current date. Let's make a script that lists open network connections:
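As a minimal sketch of such a script in Python, the following assumes a `netstat` binary is on the PATH and that its rows follow the common Unix column layout; the function names are hypothetical. It prints one event per connection with no timestamp at all, which is the situation where telling Splunk to use the current time (for example, with `DATETIME_CONFIG = CURRENT` in props.conf) is the simplest choice.

```python
#!/usr/bin/env python3
"""Hypothetical scripted input: print open TCP connections, one event per line."""
import subprocess


def format_connections(netstat_output):
    """Turn raw `netstat -n` text into key=value lines with no timestamp."""
    events = []
    for line in netstat_output.splitlines():
        fields = line.split()
        # Keep only TCP rows, e.g. "tcp 0 0 10.0.0.5:22 10.0.0.9:51514 ESTABLISHED"
        if len(fields) >= 6 and fields[0].lower().startswith("tcp"):
            events.append(
                "proto=%s local=%s remote=%s state=%s"
                % (fields[0], fields[3], fields[4], fields[5])
            )
    return events


def main():
    # Assumes a netstat binary on the PATH; the column layout varies by platform.
    try:
        raw = subprocess.run(
            ["netstat", "-n"], capture_output=True, text=True, check=False
        ).stdout
    except OSError:
        raw = ""  # netstat is not available; emit no events
    for event in format_connections(raw):
        print(event)


if __name__ == "__main__":
    main()
```

Emitting key=value pairs is a design choice rather than a requirement: it lets Splunk extract the fields automatically at search time.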

Using Splunk from the command line

Almost everything that can be done via the web interface can also be accomplished via the command line. For an overview, see the output of /opt/splunk/bin/splunk help. For help on a specific command, use /opt/splunk/bin/splunk help [commandname].

The most common action performed on the command line is search. For example, have a look at the following code:

The things to note here are:

By default, searches are performed over All time. Protect yourself by including earliest=-1d or an appropriate time range in your query.

By default, Splunk will only output 100 lines of results. If you need more, use the -maxout flag.

Searches require authentication, so the user will be asked to authenticate unless -auth is included as an argument.
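The three points above can be sketched as a small Python wrapper around the command line. This is an illustration under stated assumptions, not a definitive tool: the helper names are hypothetical, the binary path is the one used in this section, and the credentials in the usage note are placeholders.

```python
import subprocess

SPLUNK_BIN = "/opt/splunk/bin/splunk"  # path used in the text above


def build_search_command(query, earliest="-1d", maxout=None, auth=None):
    """Build the argument list for `splunk search`."""
    # Put an explicit time range first so the search never runs over All time.
    command = [SPLUNK_BIN, "search", "earliest=%s %s" % (earliest, query)]
    if maxout is not None:
        # Raise the default cap on the number of result lines.
        command += ["-maxout", str(maxout)]
    if auth is not None:
        # auth is "username:password"; without it the CLI prompts interactively.
        command += ["-auth", auth]
    return command


def run_search(query, **options):
    """Run the search and return the CLI output as text."""
    result = subprocess.run(
        build_search_command(query, **options),
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

For example, `run_search("error | stats count by host", maxout=1000, auth="admin:changeme")` would run the search over the last day and return up to 1,000 lines of output.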

Most...

Splunk provides an extensive HTTP REST interface, which allows searching, adding data, adding inputs, managing users, and more. Documentation and SDKs are provided by Splunk at http://dev.splunk.com/.

To get an idea of how this REST interaction happens, let's walk through a sample conversation to run a query and retrieve the results. The steps are essentially as follows:

Start the query (POST).

Poll for status (GET).

Retrieve results (GET).

We will use the command-line program cURL to illustrate these steps. The SDKs make this interaction much simpler.
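The same three steps can also be sketched in Python. The helpers below only build the requests; they assume the management port is reachable at https://localhost:8089 and use the `/services/search/jobs` endpoints. The function names are hypothetical, and authentication and certificate handling are left out.

```python
import urllib.parse

BASE_URL = "https://localhost:8089"  # assumed management host and port


def start_job_request(query):
    """Step 1: URL and form-encoded body for the POST that starts a search."""
    url = BASE_URL + "/services/search/jobs"
    # The search string sent to the server must begin with the `search` command.
    body = urllib.parse.urlencode({"search": "search " + query})
    return url, body.encode("ascii")


def status_url(sid):
    """Step 2: URL to poll (GET) for the status of the job with this search ID."""
    return "%s/services/search/jobs/%s" % (
        BASE_URL, urllib.parse.quote(sid, safe="")
    )


def results_url(sid, output_mode="csv"):
    """Step 3: URL to fetch (GET) the results once the job is done."""
    return "%s/results?%s" % (
        status_url(sid), urllib.parse.urlencode({"output_mode": output_mode})
    )
```

The URL and body returned by `start_job_request` can be sent with any HTTP client; the response to that POST contains the search ID that the other two helpers expect.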

The command to start a query is as follows:

This essentially says to use POST on the search=search query. If you are familiar with HTTP, you might notice that this is a standard POST from an HTML form.

To run the query earliest=-1h index="_internal" warn | stats count by host, we need to URL-encode the query. The command then, is as...

To augment the built-in commands, Splunk provides the ability to write commands in Python and Perl. You can write commands to modify events, replace events, and even dynamically produce events.

When not to write a command

While external commands can be very useful, they should be considered a last resort if the number of events to be processed is large or if performance is a concern. You should make every effort to accomplish the task at hand using the search language built into Splunk or other built-in features. For instance, before writing a command for any of the following tasks, make sure you know the built-in alternative:

To use regular expressions, learn to use rex, regex, and extracted fields

To calculate a new field or modify an existing field, look into eval (search for splunk eval functions with your favorite search engine)

To augment your results with external data, learn to use lookups, which can also be a script if need be

To read external data that...

Writing a scripted lookup to enrich data

We covered CSV lookups fairly extensively in Chapter 7, Extending Search, then touched on them again in Chapter 10, Summary Indexes and CSV Files and Chapter 11, Configuring Splunk. The capabilities built into Splunk are usually sufficient, but sometimes it is necessary to use an external data source or dynamic logic to calculate values. Scripted lookups have the following advantages over commands and CSV lookups:

Scripted lookups are run only once per unique lookup value, whereas a command would run for every event.

The memory requirement of a CSV lookup increases with the size of the CSV file.

Rapidly changing values can be left in an external system and queried using the scripted lookup instead of being exported frequently.

In the Using a lookup with wildcards section in Chapter 10, Summary Indexes and CSV Files, we essentially created a case statement through configuration. Let's implement that use case as a script just to...
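A scripted lookup of this kind can be sketched in Python as follows. The script reads the lookup rows as CSV, fills in the empty output column, and writes CSV back. The field names `url` and `section` and the prefix rules are hypothetical stand-ins chosen for illustration, not the values used in Chapter 10.

```python
import csv

# Hypothetical prefix rules standing in for the wildcard lookup.
RULES = [
    ("/contact", "contact"),
    ("/about", "about"),
    ("/products", "products"),
]


def classify(url):
    """Return the section for the first matching prefix, like a case statement."""
    for prefix, section in RULES:
        if url.startswith(prefix):
            return section
    return "unknown"


def run_lookup(infile, outfile, in_field="url", out_field="section"):
    """Read lookup rows as CSV, fill in out_field, and write CSV back."""
    reader = csv.DictReader(infile)
    fieldnames = list(reader.fieldnames or [in_field])
    if out_field not in fieldnames:
        fieldnames.append(out_field)
    writer = csv.DictWriter(outfile, fieldnames=fieldnames)
    writer.writeheader()
    for row in reader:
        # Only the output column is changed; the input value is passed through.
        row[out_field] = classify(row.get(in_field) or "")
        writer.writerow(row)
```

When used as the lookup script itself, the entry point would call `run_lookup(sys.stdin, sys.stdout)`. Keeping the rules in a list means the first match wins, which mirrors the ordering of a case statement.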

Writing an event renderer

Event renderers give you the ability to make a specific template for a specific event type. To read more about creating event types, see Chapter 7, Extending Search.

Event renderers use Mako templates (http://www.makotemplates.org/).

An event renderer consists of the following:

A template stored at $SPLUNK_HOME/etc/apps/[yourapp]/appserver/event_renderers/[template].html

A configuration entry in event_renderers.conf

An optional event type definition in eventtypes.conf

Optional CSS classes in application.css

Let's create a few small examples. All the files referenced are included in $SPLUNK_HOME/etc/apps/ImplementingSplunkExtendingExamples. These examples are not shared outside this app, so to see them in action you will need to search from inside this app. Do this by pointing your browser at http://[yourserver]/app/ImplementingSplunkExtendingExamples/flashtimeline.

If you know the names of the fields you want to display in your output, your template...

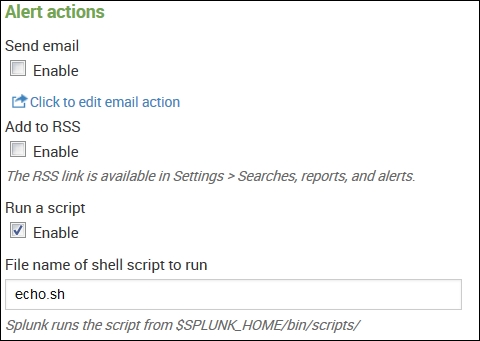

Writing a scripted alert action to process results

Another option to interface with an external system is to run a custom alert action using the results of a saved search. Splunk provides a simple example in $SPLUNK_HOME/bin/scripts/echo.sh. Let's try it out and see what we get using the following steps:

Create a saved search. For this test, let's do something simple, such as the following:

Schedule the search to run at a point in the future. I set it to run every five minutes just for this test.

Enable Run a script and type in echo.sh:

The script places the output into $SPLUNK_HOME/bin/scripts/echo_output.txt.

In my case, the output is as follows:
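A script of your own receives the same positional arguments that echo.sh writes out. The following Python sketch names those arguments and loads the results file. The argument order reflects my understanding of Splunk's scripted alert documentation and should be confirmed for your version; the names in `ARG_NAMES` and both helper functions are hypothetical.

```python
import csv
import gzip

# Assumed order of the positional arguments passed to the script (argv[1]..argv[8]).
ARG_NAMES = [
    "event_count", "search_terms", "query_string", "search_name",
    "trigger_reason", "browser_url", "unused", "results_file",
]


def parse_alert_args(argv):
    """Map the script's positional arguments to names; argv[0] is the script."""
    return dict(zip(ARG_NAMES, argv[1:]))


def read_results(results_file):
    """Load the gzipped CSV of search results as a list of dicts."""
    with gzip.open(results_file, "rt", newline="") as handle:
        return list(csv.DictReader(handle))
```

A script would typically call `parse_alert_args(sys.argv)` and then pass the `results_file` value to `read_results` before handing the rows to the external system.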

To further extend the power of Splunk, Hunk was developed to give a larger number of users the ability to access data stored within the Hadoop and NoSQL stores (and other similar ones) without needing custom development, costly data modeling, or lengthy batch iterations. For example, Hadoop can consume high volumes of data from many sources, refining the data into readable logs, which can then be exposed to Splunk users quickly and efficiently using Hunk.

Some interesting Hunk features include:

The ability to create a Splunk virtual index: This enables the seamless use of the Splunk Search Processing Language (SPL) for interactive exploration, analysis, and visualization of data as if it were stored in a Splunk index.

You can point and go: As with data indexed in Splunk, there is no need to understand the Hadoop data upfront; you can just point Hunk at the Hadoop source and start exploring.

Interactivity: You can use Hunk for analysis across terabytes and even petabytes of data and...

As we saw in this chapter, there are a number of ways in which Splunk can be extended to input, manipulate, and output events. The search engine at the heart of Splunk is truly just the beginning. With a little creativity, Splunk can be used to extend existing systems, both as a data source and as a way to trigger actions.