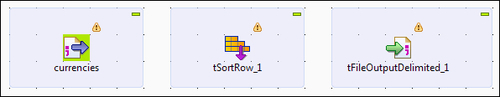

As we pass data from component to component and from system to system, we often need to modify it in some way. This chapter introduces the Studio's processing components, which will become your "Swiss Army Knife" as you develop integration jobs. Processing components act as intermediate data processing or transformation steps, intercepting the data flow between input and output components. For example, we might place a filtering component between a database read component and a database write component, or between an XML file input and a CSV output. Alternatively, we might use a data sorting component that takes sales order data from a file and sorts it by customer ID in ascending order.
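To make the idea of an intermediate processing step more concrete, here is a minimal plain-Java sketch of the sales order example. Rows come from an in-memory list (standing in for the input component), pass through a filter and a sort by customer ID in ascending order, and are printed to standard output (standing in for the output component). This is not the code the Studio generates. The `SalesOrder` record, the `process` method and the "positive amount" filter rule are hypothetical and chosen only for illustration. The sketch assumes Java 16 or later for records and `Stream.toList()`.

```java
import java.util.Comparator;
import java.util.List;

public class ProcessingSketch {

    // Hypothetical record standing in for one row of sales order data.
    public record SalesOrder(String orderId, int customerId, double amount) {}

    // Intermediate processing step that sits between an input and an output.
    // Filter: keep only rows with a positive amount (an illustrative rule).
    // Sort: order the remaining rows by customer ID, ascending.
    public static List<SalesOrder> process(List<SalesOrder> input) {
        return input.stream()
                .filter(order -> order.amount() > 0)
                .sorted(Comparator.comparingInt(SalesOrder::customerId))
                .toList();
    }

    public static void main(String[] args) {
        // Stand-in for an input component, such as a file or database read.
        List<SalesOrder> input = List.of(
                new SalesOrder("SO-3", 42, 120.00),
                new SalesOrder("SO-1", 7, 0.00),
                new SalesOrder("SO-2", 15, 75.50));

        // Stand-in for an output component, such as a CSV or database write.
        process(input).forEach(System.out::println);
    }
}
```

Filtering before sorting produces the same rows here as sorting first. Filtering first simply leaves fewer rows to sort, which is a common reason to place a filter ahead of a sort in a data flow.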

In this chapter, we will look at:

Filtering data: Removing or passing through specific records based on some attribute of the data

Sorting data: Alphabetic and numeric sorts (singly or in combination)

Summing and aggregating...