Chapter 9

Next Steps in Bayesian Deep Learning

Throughout this book, we’ve covered the fundamental concepts behind Bayesian deep learning (BDL), from understanding what uncertainty is and its role in developing robust machine learning systems, right through to learning how to implement and analyze the performance of several fundamental BDL methods. While what you’ve learned will equip you to start developing your own BDL solutions, the field is moving quickly, and there are many new techniques on the horizon.

To wrap up the book, this chapter takes a look at the current trends in BDL before diving into some of the latest developments in the field. We’ll conclude by introducing some alternatives to BDL and by suggesting additional resources you can use to continue your journey into Bayesian machine learning methods.

We’ll cover the following sections:

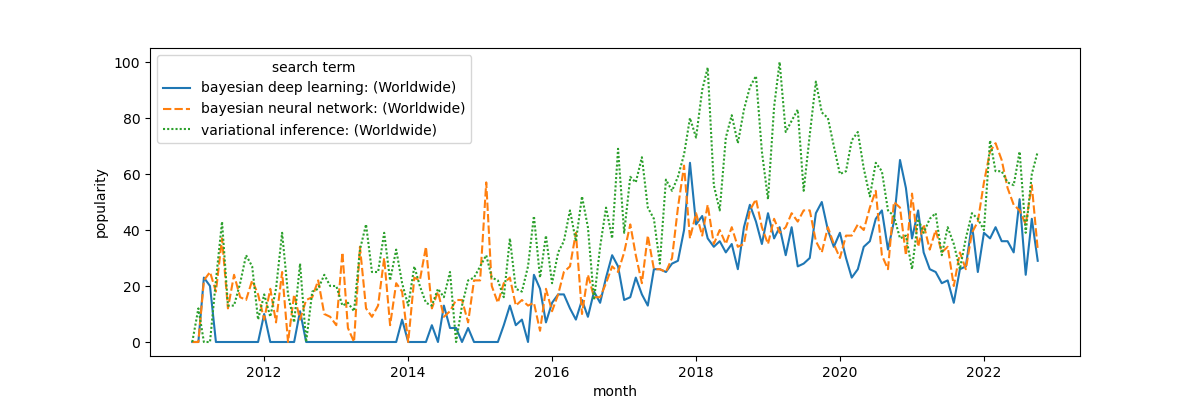

Current trends in BDL

How are BDL methods being applied to solve real-world problems...