This chapter walks the reader through installing Apache Spark in standalone mode, along with its dependencies. We will then begin interacting with Apache Spark through a couple of hands-on exercises using the Spark CLI, also known as the REPL.

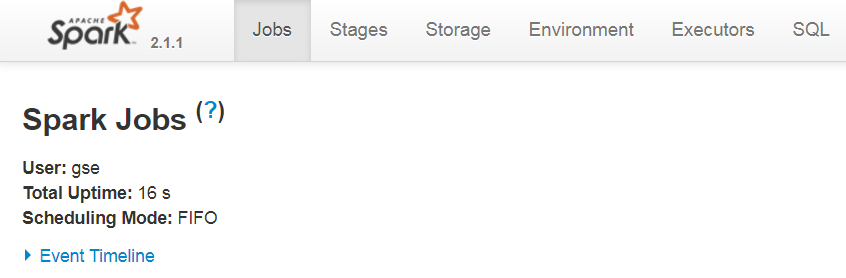

We will move on to discuss Spark components and the common terminology associated with Spark, and then walk through the life cycle of a Spark job in a clustered environment. Using the utilities provided in the Spark Web UI, we will also explore the execution of Spark jobs visually, from the creation of the DAG down to the execution of tasks, the smallest unit of work.

Finally, we will conclude the chapter by discussing different methods of configuring and submitting Spark jobs, using the spark-submit tool and REST APIs.