In the previous two chapters (Chapter 4, Advanced Convolutional Networks, and Chapter 5, Object Detection and Image Segmentation), we focused on supervised computer vision problems, such as classification and object detection. In this chapter, we'll discuss how to create new images with the help of unsupervised neural networks. After all, it helps a lot that we don't need labeled data. More specifically, we'll talk about generative models.

This chapter will cover the following topics:

- Intuition and justification of generative models

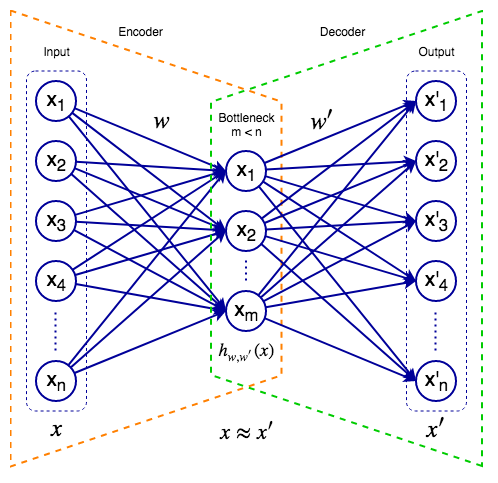

- Introduction to Variational Autoencoders (VAEs)

- Introduction to Generative Adversarial Networks (GANs)

- Types of GAN

- Introduction to artistic style transfer

... the probability of the class, given the input. In the case of MNIST, this is the probability of the digit when given the pixel intensities of the image.

..., given certain input features, it tries to predict the...