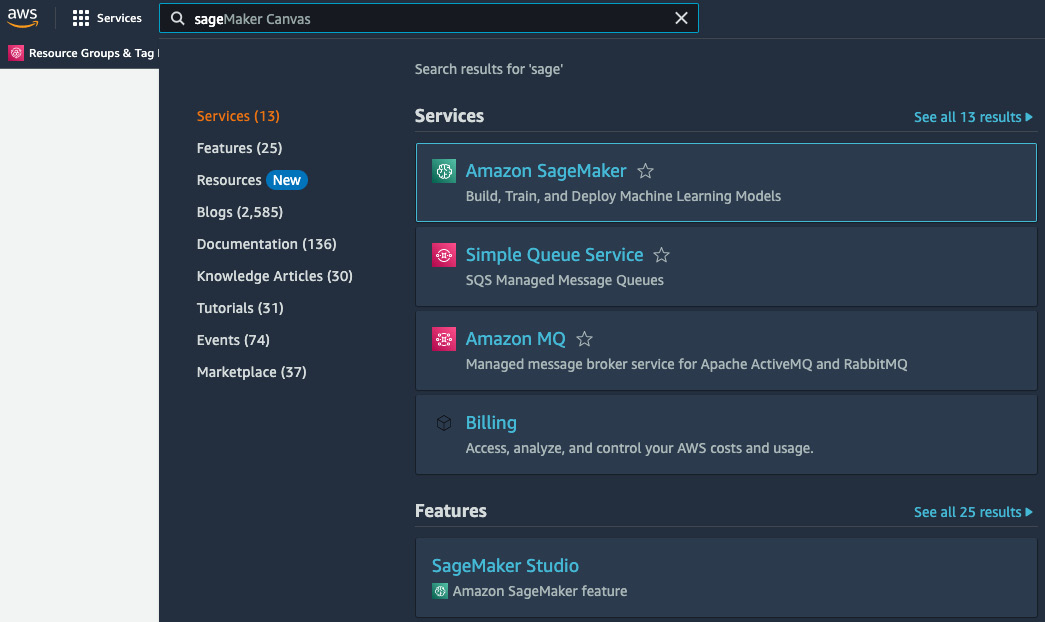

Data Processing for Machine Learning with SageMaker Data Wrangler

In Chapter 4, we introduced you to SageMaker Data Wrangler, a purpose-built tool for processing data for machine learning. We discussed why data processing is such a critical component of the overall machine learning pipeline and some of the risks of working with unclean or raw data. We also covered the core capabilities of SageMaker Data Wrangler and how it helps solve some of the key challenges involved in data processing for machine learning.

In this chapter, we will take things further by walking through a practical, step-by-step data flow that preprocesses an example dataset for machine learning. We will start with an example dataset that comes preloaded with SageMaker Data Wrangler and then perform some basic exploratory data analysis using Data Wrangler's built-in analyses. We will also add a couple of custom checks for imbalance and bias in the dataset. Feature engineering is a key step in the machine learning...
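To give a feel for what a custom imbalance or bias check might compute, here is a minimal, plain-Python sketch. It is not Data Wrangler's own implementation; the function names and the toy `groups`/`labels` data are hypothetical. It computes three simple measures: the ratio of the most to the least frequent label, a class imbalance score between two groups, CI = (n_a - n_d) / (n_a + n_d), and the difference in positive-label proportions between those groups, DPL = q_a - q_d. The last two follow the definitions of the CI and DPL pre-training bias metrics described in the SageMaker Clarify documentation. Inside a Data Wrangler custom transform you would more likely apply the same logic to columns of a pandas DataFrame.

```python
from collections import Counter
from typing import Hashable, Sequence


def class_imbalance_ratio(labels: Sequence[Hashable]) -> float:
    """Ratio of the most frequent to the least frequent label (1.0 means balanced)."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("labels must not be empty")
    return max(counts.values()) / min(counts.values())


def facet_class_imbalance(groups: Sequence[Hashable], a: Hashable, d: Hashable) -> float:
    """CI = (n_a - n_d) / (n_a + n_d); ranges from -1 to 1, 0 means equal group sizes."""
    counts = Counter(groups)
    n_a, n_d = counts[a], counts[d]
    if n_a + n_d == 0:
        raise ValueError("neither group value occurs in the data")
    return (n_a - n_d) / (n_a + n_d)


def diff_positive_label_proportions(
    groups: Sequence[Hashable],
    labels: Sequence[Hashable],
    a: Hashable,
    d: Hashable,
    positive: Hashable = 1,
) -> float:
    """DPL = q_a - q_d, where q_x is the share of positive labels within group x."""

    def positive_share(value: Hashable) -> float:
        group_labels = [y for g, y in zip(groups, labels) if g == value]
        if not group_labels:
            raise ValueError(f"group value {value!r} not found")
        return sum(1 for y in group_labels if y == positive) / len(group_labels)

    return positive_share(a) - positive_share(d)


# Hypothetical toy data: a sensitive attribute and a binary target label.
groups = ["M", "M", "M", "F", "F", "M"]
labels = [1, 0, 0, 0, 1, 0]

print(class_imbalance_ratio(labels))                            # 2.0 (four 0s vs. two 1s)
print(facet_class_imbalance(groups, "M", "F"))                  # about 0.333 (4 vs. 2 rows)
print(diff_positive_label_proportions(groups, labels, "M", "F"))  # -0.25 (0.25 - 0.5)
```

Values far from 1.0 for the ratio, or far from 0 for CI and DPL, are signals worth investigating before training. What counts as "too far" depends on the dataset and the use case.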