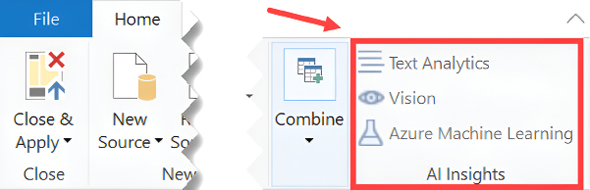

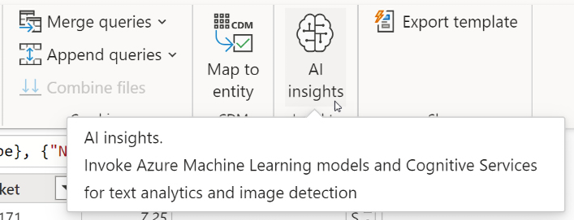

Using Machine Learning without Premium or Embedded Capacity

Advances in computing power have made data analysis faster and more capable. Machine learning (ML) models in particular can surface insights that enrich your analysis. Power BI includes several AI capabilities that integrate with ML models, so you can act on those insights directly. These tools are built into Power BI Desktop and Power BI dataflows. They let you use ML models that your data scientists have created in Azure Machine Learning, including models trained and deployed with automated machine learning (AutoML). You can also access Cognitive Services through a graphical interface.