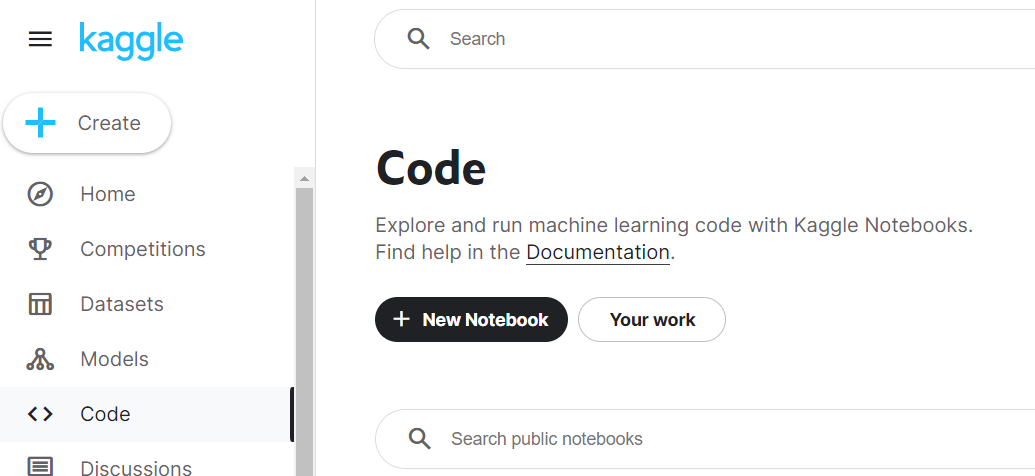

How to create a Notebooks?

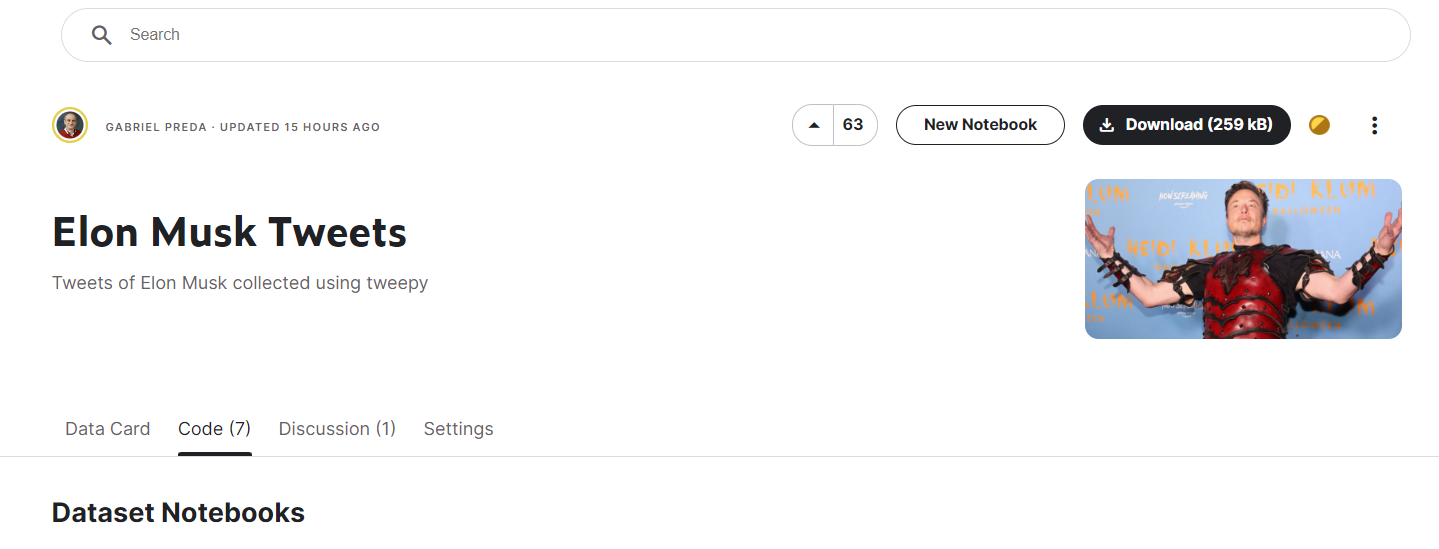

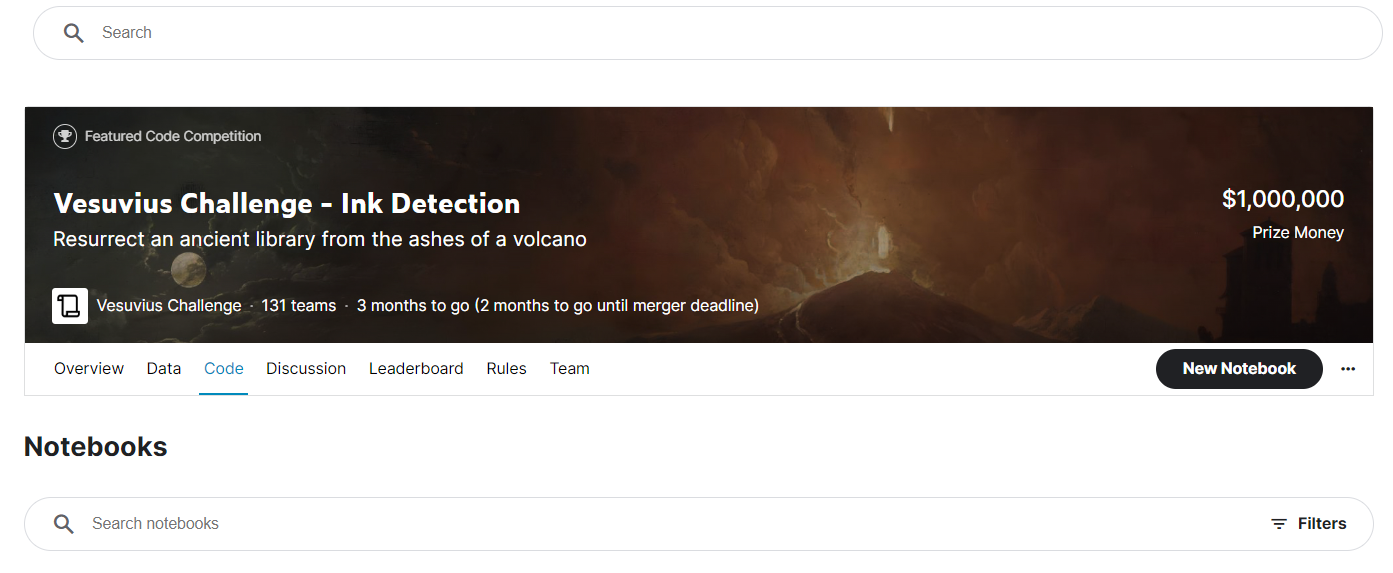

You have several modalities to start a Notebook. You can start it from the main menu “Code” (see Figure 2.1), from the context of a Dataset (see Figure 2.2), a Competition (see Figure 2.3), or by forking one existing Notebook, from your own or from another Notebook.

When you create a new Notebook from Code menu, this will appear in your list of Notebooks but will not be added to any Dataset or Competition context.If you choose to start it from a Dataset, the dataset will be already added in the list of data added to the notebook, and you will see it in the right-side panel when you edit it.

The same in the case of a competition, the dataset associated with it will be already present in the list of datasets when you initialize the notebook.

To fork a notebook, press...