Dimensions

… from a purely mathematical point of view it’s just as easy to think in 11 dimensions, as it is to think in three or four.

Stephen Hawking

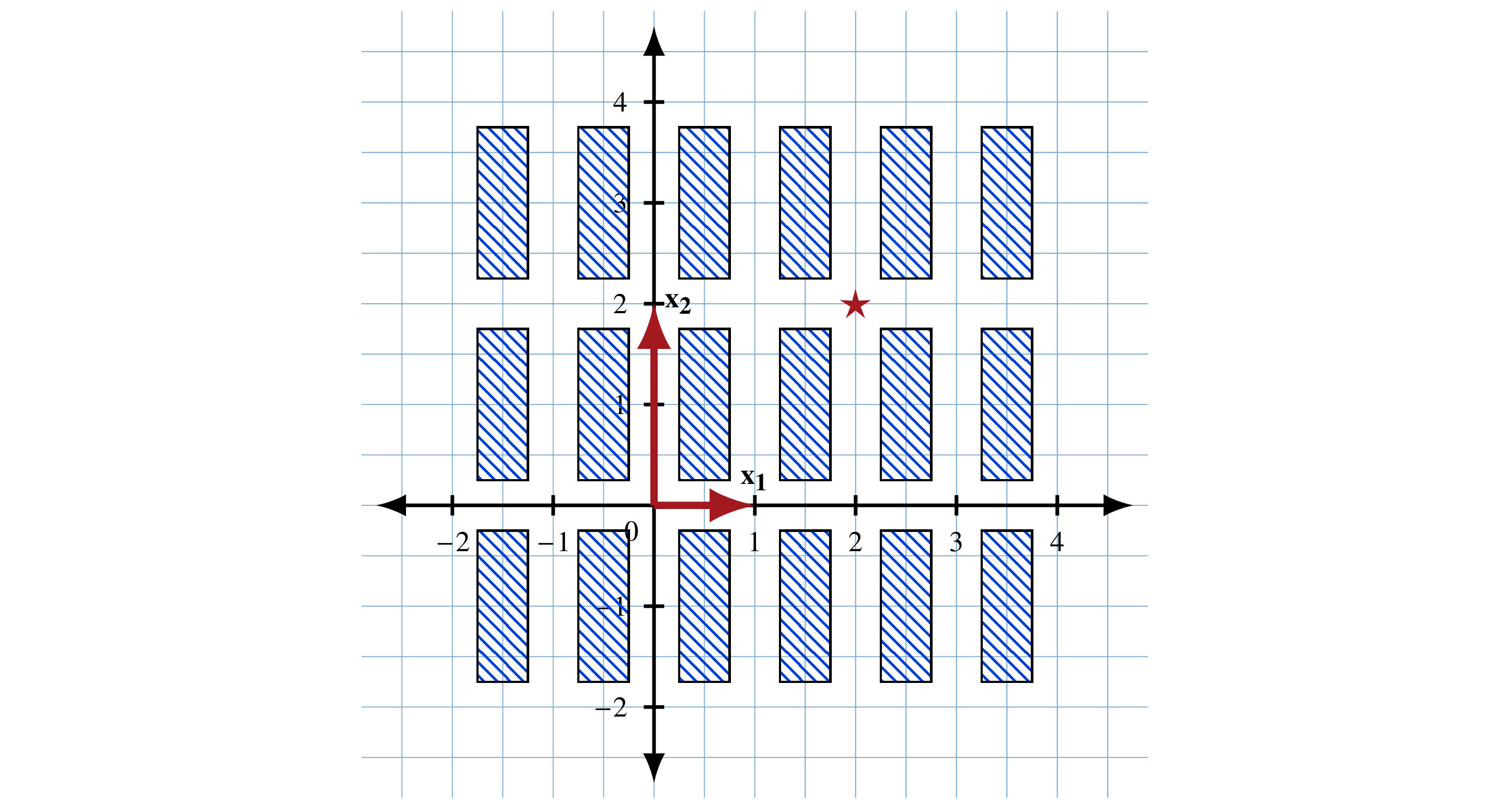

We are familiar with many objects and their properties, such as lines and circles in two dimensions and cubes and spheres in three dimensions. If I ask you how long something is, you might take out a ruler or a tape measure. When you take a photo, you rotate your camera or phone in three dimensions without thinking too much about it.

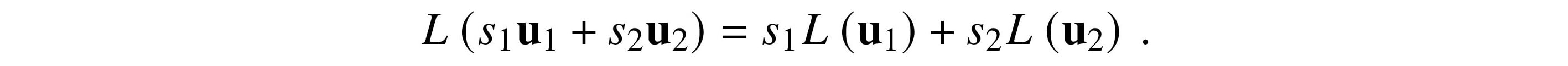

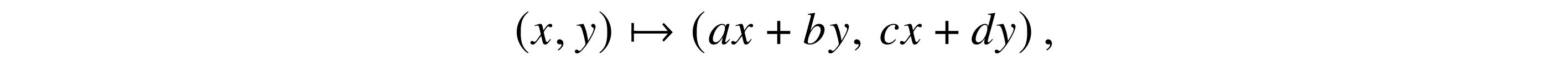

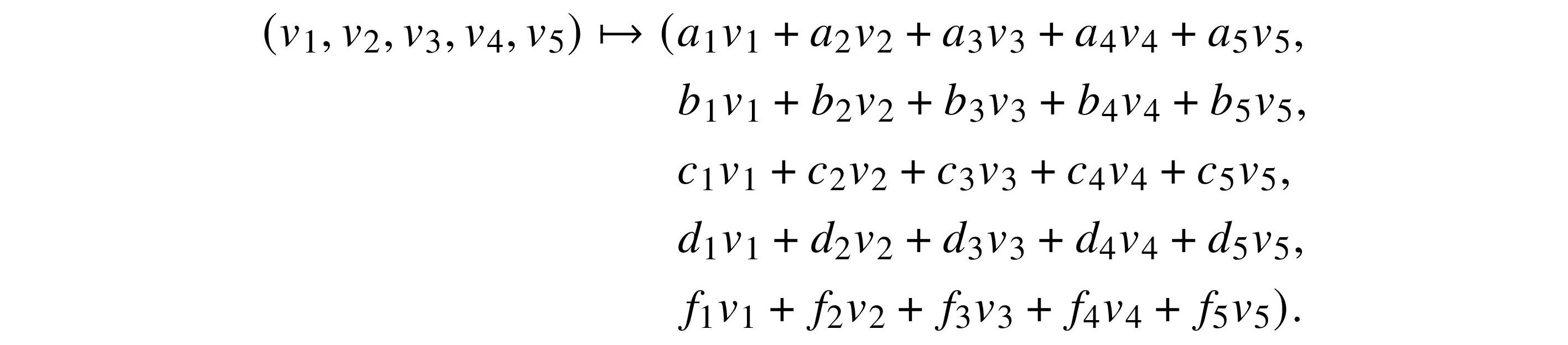

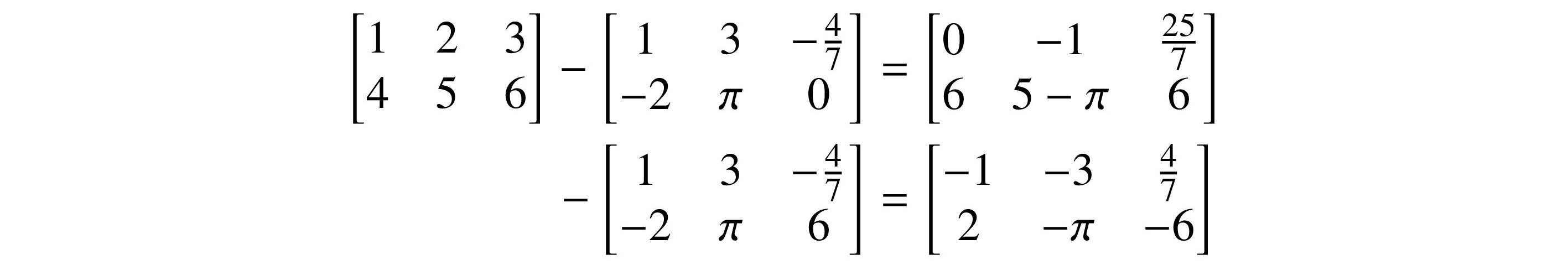

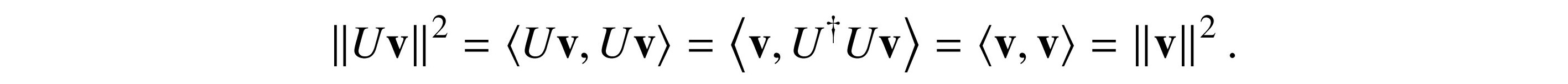

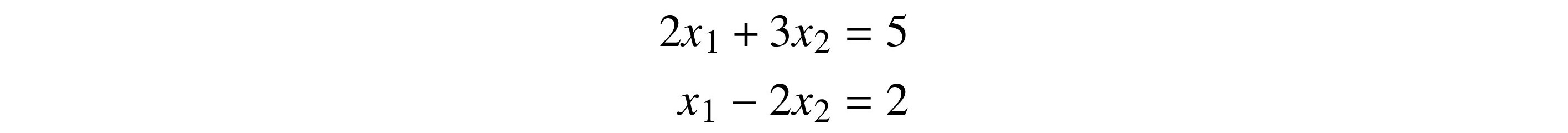

Alas, there is math behind all these actions. The notion of something existing in one or more dimensions, indeed even the idea of what a dimension is, must be made more formal if we are to perform calculations. That formalization is the concept of a vector space. The study of what vector spaces are and what you can do with them is linear algebra.

Linear algebra is essential to pure and applied mathematics, physics, engineering, and the parts of computer science and software engineering that deal with...