Previously, in Chapter 4, Detecting and Merging Faces of Mammals, we used Haar or LBP cascades to classify the faces of humans and cats. We had a very specific classification problem because we wanted to blend faces, and conveniently, OpenCV provided pretrained cascade files for human and cat faces. Now, in our final chapter, we will tackle the broader problem of classifying a variety of objects without a ready-made classifier. Perhaps we could train a Haar or LBP cascade for each kind of object, but this would be a long project, requiring a lot of training images. Instead, we will develop a detector that requires no training and a classifier that requires only a few training images. Along the way, we will practice the following tasks:

You're reading from iOS Application Development with OpenCV 3

A blob is a region that we can discern based on color. Perhaps the blob itself has a distinctive color, or perhaps the background does. Unlike the term "object", the term "blob" does not necessarily imply something with mass and volume. For example, surface variations such as stains can be blobs, even though they have negligible mass and volume. Optical effects can also be blobs. For example, a lens's aperture can produce bokeh balls or out-of-focus highlights that can make lights or shiny things appear strangely large and strangely similar to the aperture's shape. However, in BeanCounter, we tend to assume that a blob is a classifiable object.

Note

The term "bokeh" is commonly traced to the Japanese word boke, meaning "blur" or "haze", although it is sometimes associated with a Japanese word for bamboo. Different authors give different stories about the etymology, but perhaps someone thought bokeh balls resemble the bright rim of a chopped piece of bamboo.

Typically, a blob detector needs to solve the following sequence of problems:

Segmentation: Distinguish between...

A histogram is a count of how many times each color occurs in an image. Typically, we do not count all possible colors separately; rather, we group similar colors together into bins. With a smaller number of bins, the histogram occupies less memory and offers a coarser basis of comparison. Typically, we want to choose some middle ground between very many bins (in which case the histograms tend to be highly dissimilar) and very few bins (in which case the histograms tend to be highly similar). For BeanCounter, let's start with 32 bins per channel (or 32^3 = 32768 bins in total), but you may change this value in the code to experiment with its effect.
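
To make the binning arithmetic concrete, here is a minimal sketch in plain C++ that does not use OpenCV. The names `Pixel`, `channelBin`, `colorBin`, and `computeHistogram` are hypothetical and exist only for this illustration; the sketch also assumes 8-bit channels stored in blue, green, red order.

```cpp
#include <cstdint>
#include <vector>

// Illustrative sketch only; these names are hypothetical, not BeanCounter's code.
const int NUM_BINS_PER_CHANNEL = 32;
const int NUM_BINS_TOTAL =
    NUM_BINS_PER_CHANNEL * NUM_BINS_PER_CHANNEL * NUM_BINS_PER_CHANNEL;

// Assumption: an 8-bit, 3-channel pixel in blue, green, red order.
struct Pixel {
    std::uint8_t b, g, r;
};

// Map an 8-bit channel value (0..255) to a bin index (0..31).
// With 32 bins, each bin covers 256 / 32 = 8 consecutive values.
inline int channelBin(std::uint8_t value) {
    return value * NUM_BINS_PER_CHANNEL / 256;
}

// Flatten the three per-channel bin indices into a single index.
inline int colorBin(const Pixel &p) {
    return (channelBin(p.b) * NUM_BINS_PER_CHANNEL + channelBin(p.g))
        * NUM_BINS_PER_CHANNEL + channelBin(p.r);
}

// Count how many pixels fall into each color bin.
std::vector<int> computeHistogram(const std::vector<Pixel> &pixels) {
    std::vector<int> histogram(NUM_BINS_TOTAL, 0);
    for (const Pixel &p : pixels) {
        ++histogram[colorBin(p)];
    }
    return histogram;
}
```

If we change `NUM_BINS_PER_CHANNEL` to 8, each bin covers 32 consecutive values per channel and the total drops to 512 bins, which gives a much coarser basis of comparison.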

A comparison of histograms can tell us whether two images contain similar colors. This kind of similarity alone does not necessarily mean that the images contain matching objects. For example, a silver fork and silver coin could have similar histograms. OpenCV supports several popular comparison algorithms. We will use the Alternative...

Previously, in the Understanding detection with cascade classifiers section in Chapter 4, Detecting and Merging Faces of Mammals, we considered the problem of searching for a set of high-contrast features at various positions and various levels of magnification or scale. As we saw, Haar and LBP cascade classifiers solve this problem. Thus, we may say they are scale-invariant (robust to changes in scale). However, we also noted that these solutions are not rotation-invariant (robust to changes in rotation). Why? Consider the individual features. Haar-like features include edges, lines, and dots, which are all symmetric. LBP features are gradients, which may be symmetric, too. A symmetric feature cannot give us a clear indication of the object's rotation.

Now, let's consider solutions that are both scale-invariant and rotation-invariant. They must use asymmetric features called corners. A corner has brighter neighbors across one range of directions and darker...

At startup, BeanCounter loads a configuration file and set of images and trains the classifier. This may take several seconds. While loading, the app displays the text Training classifier…, along with a regal image of Queen Elizabeth II and eight dried beans:

Next, BeanCounter shows a live view from the rear-facing camera. A blob detection algorithm is applied to each frame and a green rectangle is drawn around each detected blob. Consider the following screenshot:

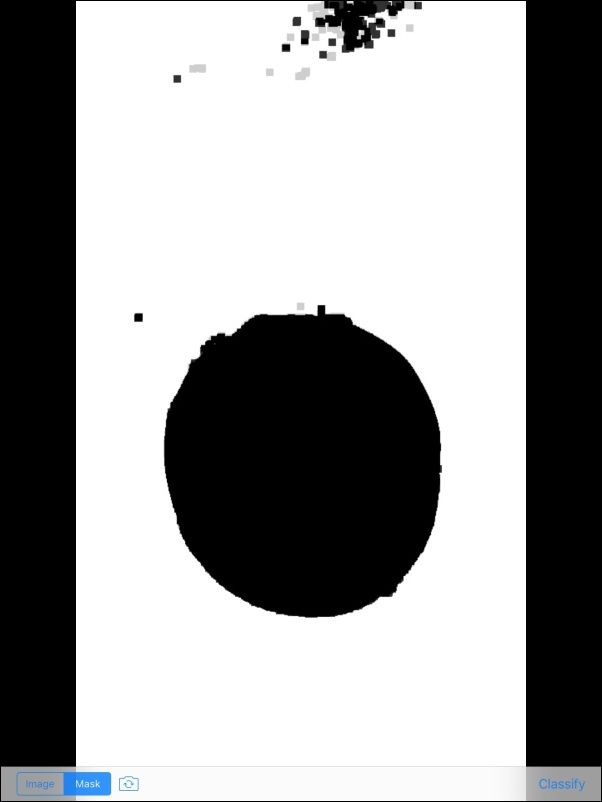

Note the controls in the toolbar below the camera view. The Image and Mask segmented controls enable the user to toggle between the preceding view of detection results in the image and the following view of the mask:

Note

The gray dots in the preceding image are just an artifact of the iOS screenshot function, which sometimes shows a faint ghost of a previous frame. The mask is really pure black and pure white.

The switch camera button has the usual effect of activating a different camera...

Create an Xcode project named BeanCounter. As usual, choose the Single View Application template. Follow the configuration instructions in Chapter 1, Setting Up Software and Hardware and Chapter 2, Capturing, Storing, and Sharing Photos. (See the Configuring the project section of each chapter.) BeanCounter depends on the same frameworks and device capabilities as LightWork.

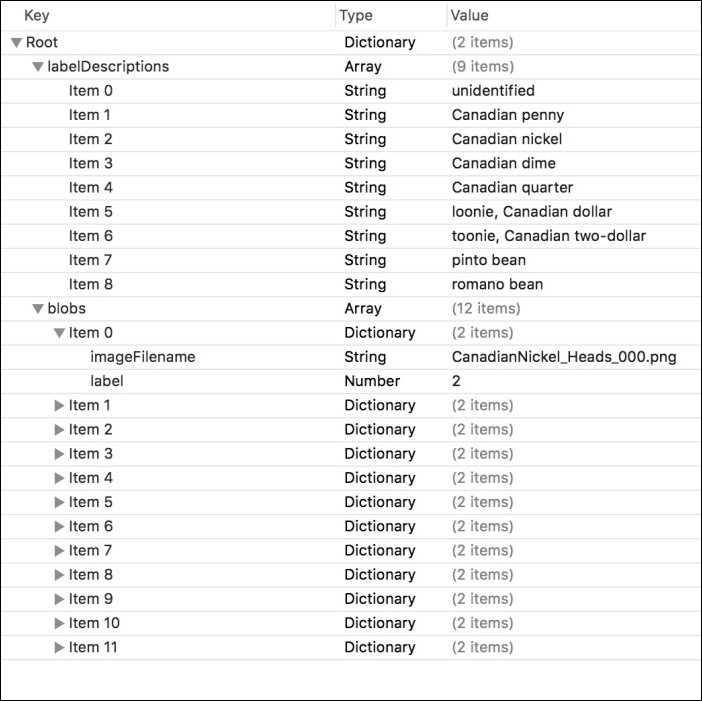

Our blob classifier will depend on a configuration file and set of training images that we provide. As a starting point, you may want to use the training set of beans and Canadian coins, as provided in the book's GitHub repository. Alternatively, under the Supporting Files folder, add your own training images and create a new file called BlobClassifierTraining.plist. Edit the PLIST file to define labels and training images according to the format in the following screenshot:

For example, Item 0 in blobs is a training image with the filename CanadianNickel_Heads_000.png and the label 2. We can look...

For our purposes, a blob simply has an image and label. The image is cv::Mat and the label is an unsigned integer. The label's default value is 0, which shall signify that the blob has not yet been classified. Create a new header file, Blob.h, and fill it with the following declaration of a Blob class:

    #ifndef BLOB_H
    #define BLOB_H

    #include <opencv2/core.hpp>

    class Blob
    {
    public:
        Blob(const cv::Mat &mat, uint32_t label = 0ul);

        /**
         * Construct an empty blob.
         */
        Blob();

        /**
         * Construct a blob by copying another blob.
         */
        Blob(const Blob &other);

        bool isEmpty() const;

        uint32_t getLabel() const;
        void setLabel(uint32_t value);

        const cv::Mat &getMat() const;
        int getWidth() const;
        int getHeight() const;

    private:
        uint32_t label;

        cv::Mat mat;
    };

    #endif // BLOB_H

The image of Blob does not change after construction, but the label may change as a result of our classification process. Note...

Earlier in this chapter, in the Understanding keypoint matching section, we introduced the concept that a keypoint has a descriptor or set of descriptive statistics. Similarly, we can define a custom descriptor for a blob. As our classifier relies on histogram comparison and keypoint matching, let's say that a blob's descriptor consists of a normalized histogram and a matrix of keypoint descriptors. The descriptor object is also a convenient place to put the label. Create a new header file, BlobDescriptor.h, and put the following declaration of a BlobDescriptor class in it:

    #ifndef BLOB_DESCRIPTOR_H
    #define BLOB_DESCRIPTOR_H

    #include <opencv2/core.hpp>

    class BlobDescriptor
    {
    public:
        BlobDescriptor(const cv::Mat &normalizedHistogram,
            const cv::Mat &keypointDescriptors, uint32_t label);

        const cv::Mat &getNormalizedHistogram() const;
        const cv::Mat &getKeypointDescriptors() const;
        uint32_t getLabel() const...

BeanCounter uses two view controllers. The first enables the user to capture and preview images of blobs. The second enables the user to review a blob's classification result and save and share the image of the blob. A segue enables the first view controller to instantiate the second and pass a blob and label to it. This is similar to how we divided the application logic in the project, ManyMasks, in Chapter 4, Detecting and Merging Faces of Mammals, so we are able to reuse some code.

Import copies of the VideoCamera.h and VideoCamera.m files that we created in Chapter 2, Capturing, Storing, and Sharing Photos. These files contain our VideoCamera class, which extends OpenCV's CvVideoCamera to fix bugs and add new functionality.

Also import copies of the CaptureViewController.h and CaptureViewController.m files that we created in Chapter 4, Detecting and Merging Faces of Mammals. These files contain our CaptureViewController...

Let's assume that the background has a distinctive color range, such as "cream to snow white". Our blob detector will calculate the image's dominant color range and search for large regions whose colors differ from this range. These anomalous regions will constitute the detected blobs.
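
The idea of estimating a dominant range and flagging everything outside it can be sketched in plain C++ as follows. This is a simplified illustration under several assumptions that do not come from BeanCounter's source: the image is grayscale rather than color, the background range is taken to be the mean plus or minus `k` standard deviations, and the names `Range`, `estimateBackgroundRange`, and `makeForegroundMask` are hypothetical. The sketch stops at the mask; smoothing the mask and keeping only the large regions are separate steps that it does not show.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative sketch only; these names are hypothetical.
struct Range {
    double low;
    double high;
};

// Assumption: the background dominates the frame, so the mean +/- k
// standard deviations of all pixel values approximates its range.
Range estimateBackgroundRange(const std::vector<std::uint8_t> &gray,
                              double k) {
    if (gray.empty()) {
        return {0.0, 0.0};
    }
    double sum = 0.0;
    double sumOfSquares = 0.0;
    for (std::uint8_t v : gray) {
        sum += v;
        sumOfSquares += static_cast<double>(v) * v;
    }
    const double n = static_cast<double>(gray.size());
    const double mean = sum / n;
    // Clamp at 0 to guard against tiny negative values from rounding.
    const double variance = std::max(0.0, sumOfSquares / n - mean * mean);
    const double stdDev = std::sqrt(variance);
    return {mean - k * stdDev, mean + k * stdDev};
}

// Build a mask that is 255 where a pixel lies outside the background
// range (a candidate blob pixel) and 0 where it lies inside.
std::vector<std::uint8_t> makeForegroundMask(
        const std::vector<std::uint8_t> &gray, const Range &range) {
    std::vector<std::uint8_t> mask(gray.size(), 0);
    for (std::size_t i = 0; i < gray.size(); ++i) {
        if (gray[i] < range.low || gray[i] > range.high) {
            mask[i] = 255;
        }
    }
    return mask;
}
```

A larger `k` widens the range that counts as background, so the mask tolerates more variation in the paper or table-top but may miss pale objects. A smaller `k` has the opposite effect.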

Tip

For small objects such as a bean or coin, a user can easily find a plain background such as a blank sheet of paper, plain table-top, plain piece of clothing, or even the palm of a hand. As our blob detector dynamically estimates the background color range, it can cope with various backgrounds and lighting conditions; it is not limited to a lab environment.

Create a new file, BlobDetector.cpp, for the implementation of our BlobDetector class. (To review the header, refer back to the Defining blobs and a blob detector section.) At the top of BlobDetector.cpp, we will define several constants that pertain to the breadth of the background color range, the size and smoothing...

Our classifier operates on the assumption that a blob contains distinctive colors, distinctive keypoints, or both. To conserve memory and precompute as much relevant information as possible, we do not store images of the reference blobs, but instead we store histograms and keypoint descriptors.

Create a new file, BlobClassifier.cpp, for the implementation of our BlobClassifier class. (To review the header, refer back to the Defining blob descriptors and a blob classifier section.) At the top of BlobClassifier.cpp, we will define several constants that pertain to the number of histogram bins, the histogram comparison method, and the relative importance of the histogram comparison versus the keypoint comparison. Here is the relevant code:

    #include <opencv2/imgproc.hpp>

    #include "BlobClassifier.h"

    #ifdef WITH_OPENCV_CONTRIB
    #include <opencv2/xfeatures2d.hpp>
    #endif

    const int HISTOGRAM_NUM_BINS_PER_CHANNEL = 32;
    const int HISTOGRAM_COMPARISON_METHOD...
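
One way to picture the relative importance of the two comparisons is as a single weight that blends two distances. The following plain C++ sketch assumes that each reference blob has already been compared to the query blob, yielding one histogram distance and one keypoint distance, and it picks the label with the smallest weighted sum. The `Candidate` struct, the function names, and the linear blend are assumptions made for illustration; they are not taken from BeanCounter's source.

```cpp
#include <cstdint>
#include <limits>
#include <vector>

// Illustrative sketch only; these names are hypothetical.
struct Candidate {
    std::uint32_t label;       // Label of the reference blob.
    double histogramDistance;  // Smaller means more similar colors.
    double keypointDistance;   // Smaller means more similar keypoints.
};

// Blend the two distances. A histogramWeight of 1 ignores keypoints;
// a histogramWeight of 0 ignores colors.
double combinedDistance(const Candidate &c, double histogramWeight) {
    return histogramWeight * c.histogramDistance
        + (1.0 - histogramWeight) * c.keypointDistance;
}

// Return the label of the nearest reference blob, or 0 (which we use
// to mean "not yet classified") if there are no candidates.
std::uint32_t classify(const std::vector<Candidate> &candidates,
                       double histogramWeight) {
    std::uint32_t bestLabel = 0;
    double bestDistance = std::numeric_limits<double>::max();
    for (const Candidate &c : candidates) {
        const double distance = combinedDistance(c, histogramWeight);
        if (distance < bestDistance) {
            bestDistance = distance;
            bestLabel = c.label;
        }
    }
    return bestLabel;
}
```

For objects that differ mainly in color, such as different kinds of beans, a higher histogram weight is likely to help. For objects that share a color but differ in engraved detail, such as two coins, a lower histogram weight lets the keypoints decide.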

Gather your collection of objects, run BeanCounter, and observe your classifier's successes and failures. Also, check whether the detector is doing a good job. For the best results, obey the following guidelines:

Work in a well-lit area, such as a sunny room.

Use a flat, white background, such as a clean sheet of paper.

View one object at a time.

Keep the iOS device stable. If necessary, use a tripod or other support.

Ensure that the object is in focus. If necessary, tap the screen to focus.

If the object is shiny, ensure that it does not catch reflections.

Under these ideal conditions, what is your classifier's accuracy? Use BeanCounter to save some images of objects, and then select a few of them to add to the Xcode project as reference images. Rebuild and repeat. By training the classifier, can you achieve an accuracy of 80%, 90%, or even 95%?

Now, break the rules! See how the detector and classifier perform under less-than-ideal conditions. The...

So far, you have learned several ways to control the camera, blend images, detect and classify objects, compare images, and apply geometric transformations. These skills can help you solve countless problems in your iOS applications.

Next, you might want to study a collection of advanced OpenCV projects. As of May 2016, there are no other books on OpenCV 3 for iOS. However, with your iOS skills, you can adapt code from any OpenCV 3 book that uses C++. Consider the following options from Packt Publishing:

OpenCV 3 Computer Vision Application Programming Cookbook, Third Edition: This new edition will be published later in 2016. The book provides extensive coverage of OpenCV's C++ API with more than 100 practical samples of reusable code.

Learning Image Processing with OpenCV: This book is a great choice if you are specifically interested in computational photography or videography. The projects use C++ and OpenCV 3.

OpenCV 3 Blueprints: This book...

This chapter has demonstrated a general-purpose approach to blob detection and classification. Specifically, we have applied OpenCV functionality to thresholding, morphology, contour analysis, histogram analysis, and keypoint matching.

You have also learned how to load and parse a PLIST file from an application's resource bundle. As Xcode provides a visual editor for PLIST files, they are a convenient way to configure an iOS app. Specifically, in our case, a configuration file lets us separate the classifier's training data from the application code.

We have seen that our detector and classifier work on different kinds of objects, namely beans and coins. We have also seen that the detector and classifier are somewhat robust with respect to variations in lighting, background, blur, reflections, and the presence of neighboring objects.

Finally, we have identified some further reading that may help you take your knowledge of computer vision and mobile app development to the next level...

© 2016 Packt Publishing Limited All Rights Reserved
