Chapter 5. Understanding and Responding to Natural Language

We've built bots that can play games, store data, and provide useful information. The next step isn't information gathering, it's processing. This chapter will introduce natural language processing (NLP) and show how we can use it to enhance our bots even further.

In this chapter, we will cover:

A brief introduction to natural language

A Node implementation

Natural language processing

Natural language generation

Displaying data in a natural way

A brief introduction to natural language

You should always strive to make your bot as helpful as possible. In all the bots we've made so far, we've awaited clear instructions via a key word from the user and then followed said instructions as far as the bot is capable. What if we could infer instructions from users without them actually providing a key word? Enter natural language processing (NLP).

NLP can be described as a field of computer science that strives to understand communication and interactions between computers and human (natural) languages.

In layman's terms, NLP is the process of a computer interpreting conversational language and responding by executing a command or replying to the user in an equally conversational tone.

Examples of NLP projects are digital assistants such as the iPhone's Siri. Users can ask questions or give commands and receive answers or confirmation in natural language, seemingly from a human.

One of the more famous projects using NLP is IBM's Watson system...

NLP, at its core, works by splitting a chunk of text (also referred to as a corpus) into individual segments or tokens and then analyzing them. These tokens might simply be individual words but might also be word contractions. Let's look at how a computer might interpret the phrase: I have watered the plants.

If we were to split this corpus into tokens, it would probably look something like this:

The word the in our corpus is unnecessary as it does not help to understand the phrase's intent— the same for the word have. We should therefore remove the surplus words:

Already, this is starting to look more usable. We have a personal pronoun in the form of an actor (I), an action or verb (watered), and a recipient or noun (plants). From this, we can deduce exactly which action is enacted to what and by whom. Furthermore, by conjugating the verb watered, we can establish that this action occurred in the past. Consider...

Start by creating a new project with npm init. Name your bot "weatherbot" (or something similar), and install the Slack and Natural APIs with the following command:

Copy our Bot class from the previous chapters and enter the following in index.js:

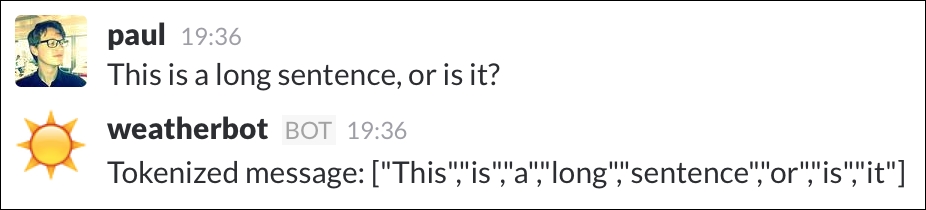

Start up your Node process and type a test phrase into Slack:

Through the use of tokenization, the bot has split the given phrase into short fragments...

Sometimes, it is useful to find the root or stem of a word. In the English language, irregular verb conjugations are not uncommon. By deducing the root of a verb, we can dramatically decrease the amount of calculations needed to find the action of the phrase. Take the verb searching for example; for the purpose of bots, it would be much easier to process the verb in its root form search. Here, a stemmer can help us determine said root. Replace the contents of index.js with the following to demonstrate stemmers:

A string distance measuring algorithm is a calculation of how similar two strings are to one another. The strings smell and bell can be defined as similar, as they share three characters. The strings bell and fell are even closer, as they share three characters and are only one character apart from one another. When calculating string distance, the string fell will receive a higher ranking than smell when the distance is measured between them and bell.

The NPM package natural provides three different algorithms for string distance calculation: Jaro-Winkler, the Dice coefficient, and the Levenshtein distance. Their main differences can be described as follows:

Dice coefficient: This calculates the difference between strings and represents the difference as a value between zero and one. Zero being completely different and one meaning identical.

Jaro-Winkler: This is similar to the Dice Coefficient, but gives greater weighting to similarities at the beginning of the string.

Levenshtein...

An inflector can be used to convert a noun back and forth from its singular and plural forms. This is useful when generating natural language, as the plural versions of nouns might not be obvious:

The preceding code will output viri and octopus, respectively.

Inflectors may also be used to transform numbers into their ordinal forms; for example, 1 becomes 1st, 2 becomes 2nd, and so on:

This outputs 25th, 42nd, and 111th, respectively.

Here's an example of the inflector used in a simple bot command:

Displaying data in a natural way

Let's build our bot's weather functionality. To do this, we will be using a third-party API called Open Weather Map. The API is free to use for up to 60 calls per minute, with further pricing options available. To obtain the API key, you will need to sign up here: https://home.openweathermap.org/users/sign_up.

Note

Remember that you can pass variables such as API keys into Node from the command line. To run the weather bot, you could use the following command:

Once you signed up and obtained your API key, copy and paste the following code into index.js, replacing process.env.WEATHER_API_KEY with your newly acquired Open Weather Map key:

It might be tempting to have weatherbot listen to and process all messages sent in the channel. This immediately poses some problems:

How do we know if the message sent is a query on the weather or is completely unrelated?

Which geographic location is the query about?

Is the message a question or a statement? For example, the difference between Is it cold in Amsterdam and It is cold in Amsterdam.

Although an NLP-powered solution to the preceding questions could probably be found, we have to face facts: it's likely that our bot will get at least one of the above points wrong when listening to generic messages. This will lead the bot to either provide bad information or provide unwanted information, thus becoming annoying. If there's one thing we need to avoid at all costs, it's a bot that sends too many wrong messages too often.

Here's an example of a bot using NLP and completely missing the point of the message sent:

If a bot were to often mistake...

To implement the second point, we need to revisit our Bot class and add mention functionality. In the Bot class' constructor, replace the RTM_CONNECTION_OPENED event listener block with the following:

The only change here is the addition of the bot's id to the this object. This will help us later. Now, replace the respondTo function with this:

Classification is the process of training your bot to recognize a phrase or pattern of words and to associate them with an identifier. To do this, we use a classification system built into natural. Let's start with a small example:

The first log prints:

The second log prints:

The classifier stems the string to be classified first, and then calculates which of the trained phrases it is the most similar to by assigning a weighting to each possibility...

Using trained classifiers

An example

classifier.json file that contains training data for weather is included with this book. For the rest of this chapter, we will assume that the file is present and that we are loading it in via the preceding method.

Replace your respondTo method call with the following snippet:

Natural language generation

Natural language can be defined as a conversational tone in a bot's response. The purpose here is not to hide the fact that the bot is not human, but to make the information easier to digest.

The flavorText variable from the previous snippet is an attempt to make the bot's responses sound more natural; in addition, it is a useful technique to cheat our way out of performing more complex processing to reach a conversational tone in our response.

Take the following example:

Notice how the first weather query is asking whether it's cold or not. Weatherbot gets around giving a yes or no answer by making a generic statement on the temperature to every question.

This might seem like a cheat, but it is important to remember a very important aspect of NLP. The more complex the generated language, the more likely it is to go wrong. Generic answers are better than outright wrong answers.

This particular problem could be solved by adding more...

When should we use natural language generation?

Sparingly, is the answer. Consider Slackbot, Slack's own in-house bot used for setting up new users, amongst other things. Here's the first thing Slackbot says to a new user:

Immediately, the bot's restrictions are outlined and no attempts to hide the fact that it is not human are made. Natural language generation is at its best when used to transform data-intensive constructs such as JSON objects into easy to comprehend phrases.

The Turing Test is a famous test developed in 1950 by Alan Turing to assess a machine's ability to make itself indistinguishable from a human in a text-only sense. Like Slackbot, you should not strive to make your bot Turing Test complete. Instead, focus on how your bot can be the most useful and use natural language generation to make your bot as easy to use as possible.

The uncanny valley is a term used to describe systems that act and sound like humans, but are somehow slightly off. This slight discrepancy actually leads to the bot feeling a lot more unnatural, and this is the exact opposite of what we are trying to accomplish with natural language generation. Instead, we should avoid trying to make the bot perfect in its natural language responses; the chances of finding ourselves in the uncanny valley get higher the more human-like we try to make a bot sound.

Instead, we should focus on making our bots useful and easy to use, over making its responses natural. A good principle to follow is to build your bot to be as smart as a puppy, a concept championed by Matt Jones (http://berglondon.com/blog/2010/09/04/b-a-s-a-a-p/):

"Making smart things that don't try to be too smart and fail, and indeed, by design, make endearing failures in their attempts to learn and improve. Like puppies."

Let's expand our weatherbot to make the generated response...

In this chapter, we discussed what NLP is and how it can be leveraged to make a bot seem far more complex than it really is. By using these techniques, natural language can be read, processed, and responded to in equally natural tones. We also covered the limitations of NLP and understood how to differentiate between good and bad uses of NLP.

In the next chapter, we will explore the creation of web-based bots, which can interact with Slack using webhooks and slash commands.