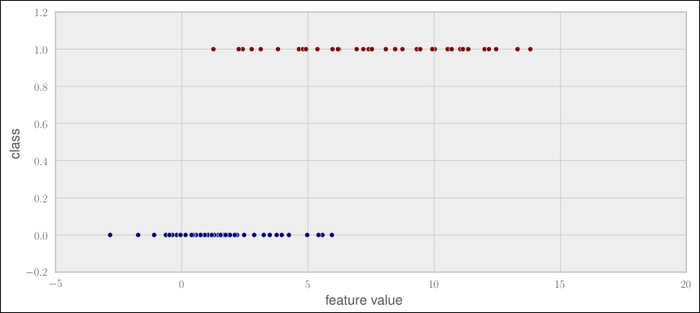

It is always worth looking at the actual contributions of the individual features. For logistic regression, we can directly take the learned coefficients (clf.coef_) to get an impression of the feature's impact. The higher the coefficient of a feature is, the more the feature plays a role in determining whether the post is good or not. Consequently, negative coefficients tell us that the higher values for the corresponding features indicate a stronger signal for the post to be classified as bad:

We see that LinkCount and NumExclams have the biggest impact on the overall classification decision, while NumImages and AvgSentLen play a rather minor role. While the feature importance overall makes sense intuitively, it is surprising that NumImages is basically ignored. Normally, answers containing images are always rated high. In reality, however, answers very rarely have images. So although in principal it is a very powerful feature, it is too sparse to be of any value...

Let's assume we want to integrate this classifier into our site. What we definitely do not want is to train the classifier each time we start the classification service. Instead, we can simply serialize the classifier after training and then deserialize it on that site:

Congratulations, the classifier is now ready to be used as if it had just been trained.

We made it! For a very noisy dataset, we built a classifier that suits part of our goal. Of course, we had to be pragmatic and adapt our initial goal to what was achievable. But on the way, we learned about the strengths and weaknesses of the nearest neighbor and logistic regression algorithms. We learned how to extract features, such as LinkCount, NumTextTokens, NumCodeLines, AvgSentLen, AvgWordLen, NumAllCaps, NumExclams, and NumImages, and how to analyze their impact on the classifier's performance.

But what is even more valuable is that we learned an informed way of how to debug badly performing classifiers. This will help us in the future to come up with usable systems much faster.

After having looked into the nearest neighbor and logistic regression algorithms, in the next chapter we will get familiar with yet another simple yet powerful classification algorithm: Naive Bayes. Along the way, we will also learn how to use some more convenient tools from Scikit-learn.