Evaluating and Optimizing Models

It is now time to learn how to evaluate and optimize machine learning models. During modeling, or even after a model is complete, you will want to understand how well it is performing. Each type of model has its own set of metrics for evaluating performance, and those metrics are what you are going to study in this chapter.

Apart from evaluating models, as a data scientist you might also need to improve a model's performance by tuning the hyperparameters of your algorithm. You will take a look at some nuances of this task.

In this chapter, the following topics will be covered:

- Introducing model evaluation

- Evaluating classification models

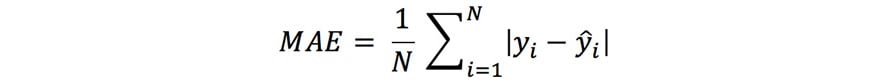

- Evaluating regression models

- Model optimization

Alright, time to rock it!