Getting Started with Scraping

In this chapter, we will cover the following topics:

- Setting up a Python development environment

- Scraping Python.org with Requests and Beautiful Soup

- Scraping Python.org with urllib3 and Beautiful Soup

- Scraping Python.org with Scrapy

- Scraping Python.org with Selenium and PhantomJS

Introduction

The amount of data available on the web is consistently growing both in quantity and in form. Businesses require this data to make decisions, particularly with the explosive growth of machine learning tools which require large amounts of data for training. Much of this data is available via Application Programming Interfaces, but at the same time a lot of valuable data is still only available through the process of web scraping.

This chapter will focus on several fundamentals of setting up a scraping environment and performing basic requests for data with several of the tools of the trade. Python is the programming language of choice for this book, as well as amongst many who build systems to perform scraping. It is an easy-to-use programming language with a very rich ecosystem of tools for many tasks. If you program in other languages, you will find it easy to pick up and you may never go back!

Setting up a Python development environment

If you have not used Python before, it is important to have a working development environment. The recipes in this book are all in Python. Some are interactive examples, but most are implemented as scripts to be run by the Python interpreter. This recipe will show you how to set up an isolated development environment with virtualenv and manage project dependencies with pip. We will also get the code for the book and install it into the Python virtual environment.

Getting ready

We will exclusively be using Python 3.x, and specifically in my case 3.6.1. Mac and Linux systems normally have Python version 2 installed, and Windows systems have no Python installed at all, so it is likely that Python 3 will need to be installed in any case. You can find references for Python installers at www.python.org.

You can check Python's version with python --version
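The same check can be made from inside Python, which is handy at the top of a script. The following is a minimal sketch; the function name is_python3 is illustrative and is not part of the book's code.

```python
import sys

def is_python3(version_info=sys.version_info):
    """Return True when the given version tuple belongs to Python 3.x."""
    return version_info[0] == 3

# Print the version of the running interpreter as major.minor.micro
print('.'.join(str(part) for part in sys.version_info[:3]))
print(is_python3())
```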

How to do it...

We will be installing a number of packages with pip. These packages are installed into a Python environment, where they can conflict with the versions of other packages that are already present. A good practice for following along with the recipes in the book is therefore to create a new virtual Python environment in which the packages we use are known to work together.

Virtual Python environments are managed with the virtualenv tool. This can be installed with the following command:

~ $ pip install virtualenv

Collecting virtualenv

Using cached virtualenv-15.1.0-py2.py3-none-any.whl

Installing collected packages: virtualenv

Successfully installed virtualenv-15.1.0

Now we can use virtualenv. But before that, let's briefly look at pip. This command installs Python packages from PyPI, a package repository with tens of thousands of packages. We just saw the install subcommand of pip, which ensures a package is installed. We can also see all currently installed packages with pip list:

~ $ pip list

alabaster (0.7.9)

amqp (1.4.9)

anaconda-client (1.6.0)

anaconda-navigator (1.5.3)

anaconda-project (0.4.1)

aniso8601 (1.3.0)

I've truncated to the first few lines as there are quite a few. For me there are 222 packages installed.
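The installed packages can also be listed from Python itself. The following is a minimal sketch that assumes Python 3.8 or later, where importlib.metadata is part of the standard library (this is newer than the 3.6.1 used in the book); the function name installed_packages is illustrative.

```python
from importlib import metadata

def installed_packages():
    """Return sorted (name, version) pairs for the distributions this interpreter can see."""
    pairs = set()
    for dist in metadata.distributions():
        name = dist.metadata['Name']
        if name:  # skip broken installs that lack a name
            pairs.add((name, dist.version))
    return sorted(pairs, key=lambda pair: pair[0].lower())

# Show the first few entries, in the same "name (version)" style as pip list above
for name, version in installed_packages()[:6]:
    print(f'{name} ({version})')
```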

Packages can also be uninstalled using pip uninstall followed by the package name. I'll leave it to you to give it a try.

Now back to virtualenv. Using virtualenv is very simple. Let's use it to create an environment and install the code from GitHub. Let's walk through the steps:

- Create a directory to represent the project and enter the directory.

~ $ mkdir pywscb

~ $ cd pywscb

- Initialize a virtual environment folder named env:

pywscb $ virtualenv env

Using base prefix '/Users/michaelheydt/anaconda'

New python executable in /Users/michaelheydt/pywscb/env/bin/python

copying /Users/michaelheydt/anaconda/bin/python => /Users/michaelheydt/pywscb/env/bin/python

copying /Users/michaelheydt/anaconda/bin/../lib/libpython3.6m.dylib => /Users/michaelheydt/pywscb/env/lib/libpython3.6m.dylib

Installing setuptools, pip, wheel...done.

- This creates an env folder. Let's take a look at what was installed.

pywscb $ ls -la env

total 8

drwxr-xr-x 6 michaelheydt staff 204 Jan 18 15:38 .

drwxr-xr-x 3 michaelheydt staff 102 Jan 18 15:35 ..

drwxr-xr-x 16 michaelheydt staff 544 Jan 18 15:38 bin

drwxr-xr-x 3 michaelheydt staff 102 Jan 18 15:35 include

drwxr-xr-x 4 michaelheydt staff 136 Jan 18 15:38 lib

-rw-r--r-- 1 michaelheydt staff 60 Jan 18 15:38 pip-selfcheck.json

- Now we activate the virtual environment. This command uses the content in the env folder to configure Python. After this, all Python activities are relative to this virtual environment.

pywscb $ source env/bin/activate

(env) pywscb $

- We can check that python is indeed using this virtual environment with the following command:

(env) pywscb $ which python

/Users/michaelheydt/pywscb/env/bin/python
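The same check can be made from within Python. This is a minimal sketch, assuming only the standard library; the function name in_virtual_environment is illustrative.

```python
import sys

def in_virtual_environment():
    """Return True if the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; the venv module and
    # newer virtualenv releases make sys.prefix differ from sys.base_prefix.
    if hasattr(sys, 'real_prefix'):
        return True
    return sys.prefix != getattr(sys, 'base_prefix', sys.prefix)

# sys.executable is the path that `which python` reports for this interpreter
print(sys.executable)
print(in_virtual_environment())
```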

With our virtual environment created, let's clone the book's sample code and take a look at its structure.

(env) pywscb $ git clone https://github.com/PacktBooks/PythonWebScrapingCookbook.git

Cloning into 'PythonWebScrapingCookbook'...

remote: Counting objects: 420, done.

remote: Compressing objects: 100% (316/316), done.

remote: Total 420 (delta 164), reused 344 (delta 88), pack-reused 0

Receiving objects: 100% (420/420), 1.15 MiB | 250.00 KiB/s, done.

Resolving deltas: 100% (164/164), done.

Checking connectivity... done.

This created a PythonWebScrapingCookbook directory.

(env) pywscb $ ls -l

total 0

drwxr-xr-x 9 michaelheydt staff 306 Jan 18 16:21 PythonWebScrapingCookbook

drwxr-xr-x 6 michaelheydt staff 204 Jan 18 15:38 env

Let's change into it and examine the content.

(env) PythonWebScrapingCookbook $ ls -l

total 0

drwxr-xr-x 15 michaelheydt staff 510 Jan 18 16:21 py

drwxr-xr-x 14 michaelheydt staff 476 Jan 18 16:21 www

There are two directories. Most of the Python code is in the py directory. The www directory contains some web content that we will use from time to time with a local web server. Let's look at the contents of the py directory:

(env) py $ ls -l

total 0

drwxr-xr-x 9 michaelheydt staff 306 Jan 18 16:21 01

drwxr-xr-x 25 michaelheydt staff 850 Jan 18 16:21 03

drwxr-xr-x 21 michaelheydt staff 714 Jan 18 16:21 04

drwxr-xr-x 10 michaelheydt staff 340 Jan 18 16:21 05

drwxr-xr-x 14 michaelheydt staff 476 Jan 18 16:21 06

drwxr-xr-x 25 michaelheydt staff 850 Jan 18 16:21 07

drwxr-xr-x 14 michaelheydt staff 476 Jan 18 16:21 08

drwxr-xr-x 7 michaelheydt staff 238 Jan 18 16:21 09

drwxr-xr-x 7 michaelheydt staff 238 Jan 18 16:21 10

drwxr-xr-x 9 michaelheydt staff 306 Jan 18 16:21 11

drwxr-xr-x 8 michaelheydt staff 272 Jan 18 16:21 modules

Code for each chapter is in the numbered folder matching the chapter (there is no code for chapter 2 as it is all interactive Python).

Note that there is a modules folder. Some of the recipes throughout the book use code in those modules. Make sure that your Python path points to this folder. On Mac and Linux you can set this in your .bash_profile file (and in the environment variables dialog on Windows):

export PYTHONPATH="/users/michaelheydt/dropbox/packt/books/pywebscrcookbook/code/py/modules"

export PYTHONPATH
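If you would rather not change your shell configuration, a script can extend its own module search path instead. The following is a minimal sketch; the function name add_modules_folder and the relative path are placeholders, so substitute the location of the py/modules folder in your own clone.

```python
import os
import sys

def add_modules_folder(path):
    """Put path at the front of sys.path (once) so imports can find modules in it."""
    path = os.path.abspath(path)
    if path not in sys.path:
        sys.path.insert(0, path)
    return path

# Hypothetical location; replace it with the py/modules folder of your clone.
modules_dir = add_modules_folder('PythonWebScrapingCookbook/py/modules')
print(modules_dir in sys.path)
```

This only affects the running process, so it needs to happen before any import that relies on the modules folder.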

The contents of each folder generally follow a numbering scheme matching the sequence of the recipes in the chapter. The following are the contents of the chapter 6 folder:

(env) py $ ls -la 06

total 96

drwxr-xr-x 14 michaelheydt staff 476 Jan 18 16:21 .

drwxr-xr-x 14 michaelheydt staff 476 Jan 18 16:26 ..

-rw-r--r-- 1 michaelheydt staff 902 Jan 18 16:21 01_scrapy_retry.py

-rw-r--r-- 1 michaelheydt staff 656 Jan 18 16:21 02_scrapy_redirects.py

-rw-r--r-- 1 michaelheydt staff 1129 Jan 18 16:21 03_scrapy_pagination.py

-rw-r--r-- 1 michaelheydt staff 488 Jan 18 16:21 04_press_and_wait.py

-rw-r--r-- 1 michaelheydt staff 580 Jan 18 16:21 05_allowed_domains.py

-rw-r--r-- 1 michaelheydt staff 826 Jan 18 16:21 06_scrapy_continuous.py

-rw-r--r-- 1 michaelheydt staff 704 Jan 18 16:21 07_scrape_continuous_twitter.py

-rw-r--r-- 1 michaelheydt staff 1409 Jan 18 16:21 08_limit_depth.py

-rw-r--r-- 1 michaelheydt staff 526 Jan 18 16:21 09_limit_length.py

-rw-r--r-- 1 michaelheydt staff 1537 Jan 18 16:21 10_forms_auth.py

-rw-r--r-- 1 michaelheydt staff 597 Jan 18 16:21 11_file_cache.py

-rw-r--r-- 1 michaelheydt staff 1279 Jan 18 16:21 12_parse_differently_based_on_rules.py

In the recipes I'll state that we'll be using the script in <chapter directory>/<recipe filename>.

Now, just to be complete, if you want to get out of the Python virtual environment, you can exit using the following command:

(env) py $ deactivate

py $

And checking which python we can see it has switched back:

py $ which python

/Users/michaelheydt/anaconda/bin/python

Now let's move onto doing some scraping.

Scraping Python.org with Requests and Beautiful Soup

In this recipe we will install Requests and Beautiful Soup and scrape some content from www.python.org. We'll install both of the libraries and get some basic familiarity with them. We'll come back to them both in subsequent chapters and dive deeper into each.

Getting ready...

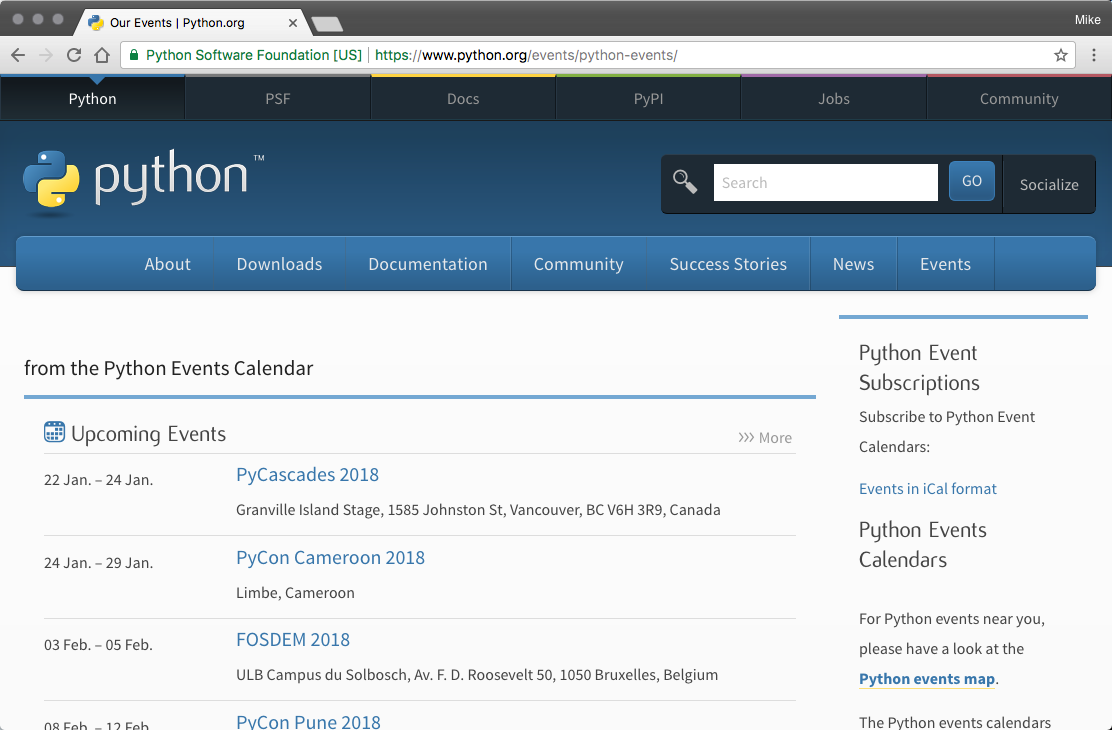

In this recipe, we will scrape the upcoming Python events from https://www.python.org/events/pythonevents. The following is an an example of The Python.org Events Page (it changes frequently, so your experience will differ):

We will need to ensure that Requests and Beautiful Soup are installed. We can do that with the following:

pywscb $ pip install requests

Downloading/unpacking requests

Downloading requests-2.18.4-py2.py3-none-any.whl (88kB): 88kB downloaded

Downloading/unpacking certifi>=2017.4.17 (from requests)

Downloading certifi-2018.1.18-py2.py3-none-any.whl (151kB): 151kB downloaded

Downloading/unpacking idna>=2.5,<2.7 (from requests)

Downloading idna-2.6-py2.py3-none-any.whl (56kB): 56kB downloaded

Downloading/unpacking chardet>=3.0.2,<3.1.0 (from requests)

Downloading chardet-3.0.4-py2.py3-none-any.whl (133kB): 133kB downloaded

Downloading/unpacking urllib3>=1.21.1,<1.23 (from requests)

Downloading urllib3-1.22-py2.py3-none-any.whl (132kB): 132kB downloaded

Installing collected packages: requests, certifi, idna, chardet, urllib3

Successfully installed requests certifi idna chardet urllib3

Cleaning up...

pywscb $ pip install bs4

Downloading/unpacking bs4

Downloading bs4-0.0.1.tar.gz

Running setup.py (path:/Users/michaelheydt/pywscb/env/build/bs4/setup.py) egg_info for package bs4

How to do it...

Now let's go and learn to scrape a couple of events. For this recipe we will start by using interactive Python.

- Start it with the ipython command:

$ ipython

Python 3.6.1 |Anaconda custom (x86_64)| (default, Mar 22 2017, 19:25:17)

Type "copyright", "credits" or "license" for more information.

IPython 5.1.0 -- An enhanced Interactive Python.

? -> Introduction and overview of IPython's features.

%quickref -> Quick reference.

help -> Python's own help system.

object? -> Details about 'object', use 'object??' for extra details.

In [1]:

- Next we import Requests

In [1]: import requests

- We now use Requests to make an HTTP GET request for the following URL: https://www.python.org/events/python-events/

In [2]: url = 'https://www.python.org/events/python-events/'

In [3]: req = requests.get(url)

- That downloaded the page content, which is stored in the response object that we named req. We can retrieve the content using the .text property. The following prints the first 200 characters:

In [4]: req.text[:200]

Out[4]: '<!doctype html>\n<!--[if lt IE 7]> <html class="no-js ie6 lt-ie7 lt-ie8 lt-ie9"> <![endif]-->\n<!--[if IE 7]> <html class="no-js ie7 lt-ie8 lt-ie9"> <![endif]-->\n<!--[if IE 8]> <h'

We now have the raw HTML of the page. We can now use Beautiful Soup to parse the HTML and retrieve the event data.

- First import Beautiful Soup

In [5]: from bs4 import BeautifulSoup

- Now we create a BeautifulSoup object and pass it the HTML.

In [6]: soup = BeautifulSoup(req.text, 'lxml')

- Now we tell Beautiful Soup to find the main <ul> tag for the recent events, and then to get all the <li> tags below it.

In [7]: events = soup.find('ul', {'class': 'list-recent-events'}).findAll('li')

- And finally we can loop through each of the <li> elements, extracting the event details, and print each to the console:

In [13]: for event in events:

...:     event_details = dict()

...:     event_details['name'] = event.find('h3').find("a").text

...:     event_details['location'] = event.find('span', {'class': 'event-location'}).text

...:     event_details['time'] = event.find('time').text

...:     print(event_details)

...:

{'name': 'PyCascades 2018', 'location': 'Granville Island Stage, 1585 Johnston St, Vancouver, BC V6H 3R9, Canada', 'time': '22 Jan. – 24 Jan. 2018'}

{'name': 'PyCon Cameroon 2018', 'location': 'Limbe, Cameroon', 'time': '24 Jan. – 29 Jan. 2018'}

{'name': 'FOSDEM 2018', 'location': 'ULB Campus du Solbosch, Av. F. D. Roosevelt 50, 1050 Bruxelles, Belgium', 'time': '03 Feb. – 05 Feb. 2018'}

{'name': 'PyCon Pune 2018', 'location': 'Pune, India', 'time': '08 Feb. – 12 Feb. 2018'}

{'name': 'PyCon Colombia 2018', 'location': 'Medellin, Colombia', 'time': '09 Feb. – 12 Feb. 2018'}

{'name': 'PyTennessee 2018', 'location': 'Nashville, TN, USA', 'time': '10 Feb. – 12 Feb. 2018'}

This entire example is available in the 01/01_events_with_requests.py script file. The following is its content and it pulls together all of what we just did step by step:

import requests

from bs4 import BeautifulSoup

def get_upcoming_events(url):

    req = requests.get(url)

    soup = BeautifulSoup(req.text, 'lxml')

    events = soup.find('ul', {'class': 'list-recent-events'}).findAll('li')

    for event in events:

        event_details = dict()

        event_details['name'] = event.find('h3').find("a").text

        event_details['location'] = event.find('span', {'class': 'event-location'}).text

        event_details['time'] = event.find('time').text

        print(event_details)

get_upcoming_events('https://www.python.org/events/python-events/')

You can run this using the following command from the terminal:

$ python 01_events_with_requests.py

{'name': 'PyCascades 2018', 'location': 'Granville Island Stage, 1585 Johnston St, Vancouver, BC V6H 3R9, Canada', 'time': '22 Jan. – 24 Jan. 2018'}

{'name': 'PyCon Cameroon 2018', 'location': 'Limbe, Cameroon', 'time': '24 Jan. – 29 Jan. 2018'}

{'name': 'FOSDEM 2018', 'location': 'ULB Campus du Solbosch, Av. F. D. Roosevelt 50, 1050 Bruxelles, Belgium', 'time': '03 Feb. – 05 Feb. 2018'}

{'name': 'PyCon Pune 2018', 'location': 'Pune, India', 'time': '08 Feb. – 12 Feb. 2018'}

{'name': 'PyCon Colombia 2018', 'location': 'Medellin, Colombia', 'time': '09 Feb. – 12 Feb. 2018'}

{'name': 'PyTennessee 2018', 'location': 'Nashville, TN, USA', 'time': '10 Feb. – 12 Feb. 2018'}

How it works...

We will dive into details of both Requests and Beautiful Soup in the next chapter, but for now let's just summarize a few key points about how this works. The following are important points about Requests:

- Requests is used to execute HTTP requests. We used it to make a GET request to the URL of the events page.

- The response object returned by Requests holds the results of the request. This is not only the page content, but also many other items about the result, such as the HTTP status code and headers.

- Requests is used only to get the page; it does not do any parsing.

We use Beautiful Soup to do the parsing of the HTML and also the finding of content within the HTML.

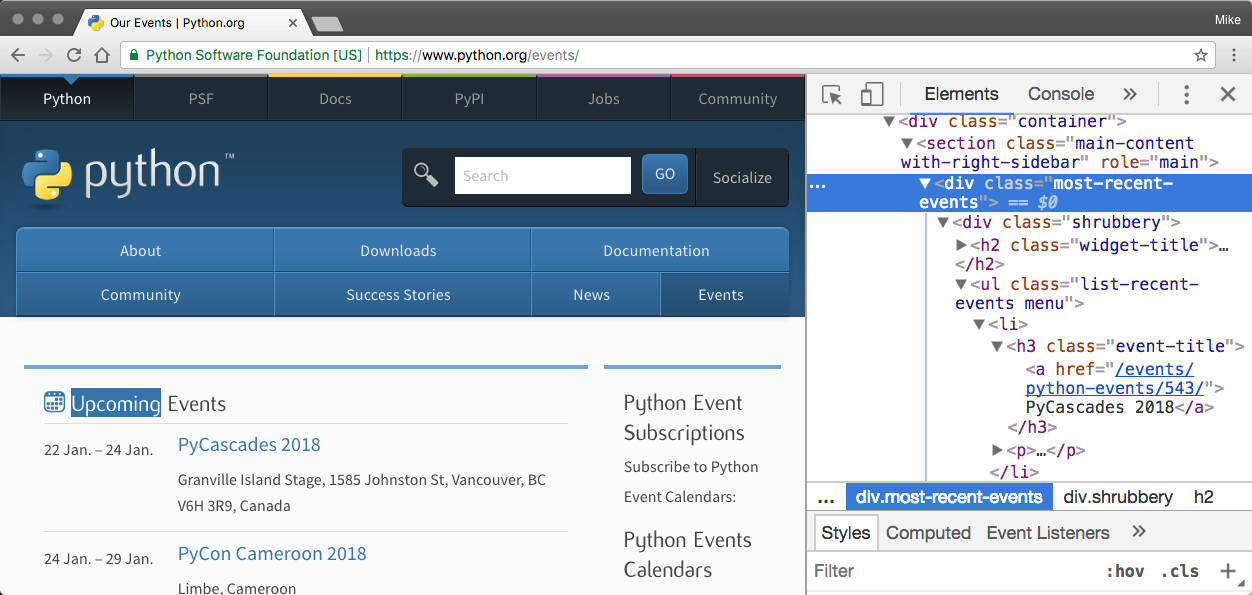

To understand how this worked, the content of the page has the following HTML to start the Upcoming Events section:

We used the power of Beautiful Soup to:

- Find the <ul> element representing the section, which is found by looking for a <ul> with a class attribute that has a value of list-recent-events.

- From that object, we find all the <li> elements.

Each of these <li> tags represents a different event. We iterate over each of them, making a dictionary from the event data found in child HTML tags:

- The name is extracted from the <a> tag that is a child of the <h3> tag

- The location is the text content of the <span> with a class of event-location

- The time is the text content of the <time> tag
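The extraction steps above can be tried offline against a small piece of markup. The following is a minimal sketch: the HTML is a hypothetical, trimmed-down stand-in for the events section (the real page contains much more), and it uses Python's built-in html.parser so that only Beautiful Soup needs to be installed.

```python
from bs4 import BeautifulSoup

# Hypothetical, trimmed-down markup; the real page contains much more.
html = """
<ul class="list-recent-events menu">
  <li>
    <h3 class="event-title"><a href="#">PyCascades 2018</a></h3>
    <p>
      <time datetime="2018-01-22T00:00:00+00:00">22 Jan. – 24 Jan. 2018</time>
      <span class="event-location">Vancouver, BC, Canada</span>
    </p>
  </li>
</ul>
"""

soup = BeautifulSoup(html, 'html.parser')

# Find the <ul> by one of its classes, then collect its <li> elements
events = soup.find('ul', {'class': 'list-recent-events'}).find_all('li')

for event in events:
    details = {
        'name': event.find('h3').find('a').text,
        'location': event.find('span', {'class': 'event-location'}).text,
        'time': event.find('time').text,
    }
    print(details)
```

Note that the time value comes from the text inside the <time> tag, not from its datetime attribute.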

Scraping Python.org with urllib3 and Beautiful Soup

In this recipe we swap out Requests for another library, urllib3. This is another common library for retrieving data from URLs and for other functions involving URLs, such as parsing the parts of a URL and handling various encodings.
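To make "parsing the parts of a URL" concrete, here is a minimal sketch. It uses urllib.parse from Python's standard library rather than urllib3, purely so that it runs without installing anything.

```python
from urllib.parse import urlsplit

# Split the events URL into its components
parts = urlsplit('https://www.python.org/events/python-events/')

print(parts.scheme)
print(parts.netloc)
print(parts.path)
```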

Getting ready...

This recipe requires urllib3 installed. So install it with pip:

$ pip install urllib3

Collecting urllib3

Using cached urllib3-1.22-py2.py3-none-any.whl

Installing collected packages: urllib3

Successfully installed urllib3-1.22

How to do it...

The recipe is implemented in 01/02_events_with_urllib3.py. The code is the following:

import urllib3

from bs4 import BeautifulSoup

def get_upcoming_events(url):

    req = urllib3.PoolManager()

    res = req.request('GET', url)

    soup = BeautifulSoup(res.data, 'html.parser')

    events = soup.find('ul', {'class': 'list-recent-events'}).findAll('li')

    for event in events:

        event_details = dict()

        event_details['name'] = event.find('h3').find("a").text

        event_details['location'] = event.find('span', {'class': 'event-location'}).text

        event_details['time'] = event.find('time').text

        print(event_details)

get_upcoming_events('https://www.python.org/events/python-events/')

Then run it with the Python interpreter. You will get identical output to the previous recipe.

How it works

The only difference in this recipe is how we fetch the resource:

req = urllib3.PoolManager()

res = req.request('GET', url)

Unlike Requests, urllib3 does not decode the response body for you: res.data holds raw bytes, and the character set declared in the response headers is not applied automatically. The code in the preceding example works because Beautiful Soup detects the encoding of the bytes it is given. Keep in mind, though, that encoding is an important part of scraping. If you decide to use your own framework or other libraries, make sure encoding is handled well.
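If you do need to decode the bytes yourself, one approach is to read the charset from the Content-Type header. The following is a minimal sketch using only the standard library; the helper names are illustrative, and the bytes and header value are stand-ins for what urllib3 would return in res.data and res.headers.

```python
from email.message import Message

def charset_from_content_type(content_type, default='utf-8'):
    """Extract the charset parameter from a Content-Type header value."""
    message = Message()
    message['Content-Type'] = content_type
    return message.get_content_charset(default)

def decode_body(raw_bytes, content_type):
    """Decode a response body using the charset named in its Content-Type header."""
    charset = charset_from_content_type(content_type)
    return raw_bytes.decode(charset, errors='replace')

# Stand-in for res.data: text encoded as ISO-8859-1 rather than UTF-8
body = 'Medellín'.encode('iso-8859-1')
print(decode_body(body, 'text/html; charset=ISO-8859-1'))
```

A header without a charset parameter falls back to the default, which is an assumption of this sketch and will not be right for every site.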

There's more...

Requests and urllib3 are very similar in terms of capabilities. It is generally recommended to use Requests when it comes to making HTTP requests. The following code example illustrates a few advanced features:

import json

import requests

# builds on top of urllib3's connection pooling

# session reuses the same TCP connection if

# requests are made to the same host

# see https://en.wikipedia.org/wiki/HTTP_persistent_connection for details

session = requests.Session()

# You may pass in custom cookies

r = session.get('http://httpbin.org/get', cookies={'my-cookie': 'browser'})

print(r.text)

# httpbin echoes the request, so the output includes the header "Cookie": "my-cookie=browser"

# Streaming is another nifty feature

# From http://docs.python-requests.org/en/master/user/advanced/#streaming-requests

# copyright belongs to the Requests project

r = requests.get('http://httpbin.org/stream/20', stream=True)

for line in r.iter_lines():

    # filter out keep-alive new lines

    if line:

        decoded_line = line.decode('utf-8')

        print(json.loads(decoded_line))
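The streaming loop can be exercised without a network connection. The following is a minimal sketch of the same logic; the function name parse_json_lines is illustrative, and the sample list is a hypothetical stand-in for the byte lines that r.iter_lines() yields.

```python
import json

def parse_json_lines(lines):
    """Yield one decoded JSON object per non-empty line of bytes."""
    for line in lines:
        if line:  # skip the empty keep-alive lines
            yield json.loads(line.decode('utf-8'))

# Hypothetical stand-in for r.iter_lines()
sample = [b'{"id": 0}', b'', b'{"id": 1}']
print(list(parse_json_lines(sample)))
```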

Scraping Python.org with Scrapy

Scrapy is a very popular open source Python scraping framework for extracting data. It was originally designed only for scraping, but it has also evolved into a powerful web crawling solution.

In our previous recipes, we used Requests and urllib3 to fetch data and Beautiful Soup to extract data. Scrapy offers all of these functionalities with many other built-in modules and extensions. It is also our tool of choice when it comes to scraping with Python.

Scrapy offers a number of powerful features that are worth mentioning:

- Built-in extensions to make HTTP requests and to handle compression, authentication, caching, user agents, and HTTP headers

- Built-in support for selecting and extracting data with selector languages such as CSS and XPath, as well as support for utilizing regular expressions for selection of content and links

- Encoding support to deal with languages and non-standard encoding declarations

- Flexible APIs to reuse and write custom middleware and pipelines, which provide a clean and easy way to implement tasks such as automatically downloading assets (for example, images or media) and storing data in storage such as file systems, S3, databases, and others

Getting ready...

There are several means of creating a scraper with Scrapy. One is a programmatic pattern where we create the crawler and spider in our code. It is also possible to configure a Scrapy project from templates or generators and then run the scraper from the command line using the scrapy command. This book will follow the programmatic pattern, as it keeps the code for each example in a single file. This will help when we are putting together specific, targeted recipes with Scrapy.

This isn't necessarily a better way of running a Scrapy scraper than using the command line execution, just one that is a design decision for this book. Ultimately this book is not about Scrapy (there are other books on just Scrapy), but more of an exposition on various things you may need to do when scraping, and in the ultimate creation of a functional scraper as a service in the cloud.

How to do it...

The script for this recipe is 01/03_events_with_scrapy.py. The following is the code:

import scrapy

from scrapy.crawler import CrawlerProcess

class PythonEventsSpider(scrapy.Spider):

    name = 'pythoneventsspider'

    start_urls = ['https://www.python.org/events/python-events/',]

    found_events = []

    def parse(self, response):

        for event in response.xpath('//ul[contains(@class, "list-recent-events")]/li'):

            event_details = dict()

            event_details['name'] = event.xpath('h3[@class="event-title"]/a/text()').extract_first()

            event_details['location'] = event.xpath('p/span[@class="event-location"]/text()').extract_first()

            event_details['time'] = event.xpath('p/time/text()').extract_first()

            self.found_events.append(event_details)

if __name__ == "__main__":

    process = CrawlerProcess({ 'LOG_LEVEL': 'ERROR'})

    process.crawl(PythonEventsSpider)

    spider = next(iter(process.crawlers)).spider

    process.start()

    for event in spider.found_events: print(event)

The following runs the script and shows the output:

~ $ python 03_events_with_scrapy.py

{'name': 'PyCascades 2018', 'location': 'Granville Island Stage, 1585 Johnston St, Vancouver, BC V6H 3R9, Canada', 'time': '22 Jan. – 24 Jan. '}

{'name': 'PyCon Cameroon 2018', 'location': 'Limbe, Cameroon', 'time': '24 Jan. – 29 Jan. '}

{'name': 'FOSDEM 2018', 'location': 'ULB Campus du Solbosch, Av. F. D. Roosevelt 50, 1050 Bruxelles, Belgium', 'time': '03 Feb. – 05 Feb. '}

{'name': 'PyCon Pune 2018', 'location': 'Pune, India', 'time': '08 Feb. – 12 Feb. '}

{'name': 'PyCon Colombia 2018', 'location': 'Medellin, Colombia', 'time': '09 Feb. – 12 Feb. '}

{'name': 'PyTennessee 2018', 'location': 'Nashville, TN, USA', 'time': '10 Feb. – 12 Feb. '}

{'name': 'PyCon Pakistan', 'location': 'Lahore, Pakistan', 'time': '16 Dec. – 17 Dec. '}

{'name': 'PyCon Indonesia 2017', 'location': 'Surabaya, Indonesia', 'time': '09 Dec. – 10 Dec. '}

The same result but with another tool. Let's take a quick look at how this works.

How it works

We will get into some details about Scrapy in later chapters, but let's just go through this code quickly to get a feel for how it accomplishes this scrape. Everything in Scrapy revolves around creating a spider. Spiders crawl through pages on the Internet based upon rules that we provide. This spider only processes one single page, so it's not really much of a spider. But it shows the pattern we will use throughout later Scrapy examples.

The spider is created with a class definition that derives from one of the Scrapy spider classes. Ours derives from the scrapy.Spider class.

class PythonEventsSpider(scrapy.Spider):

    name = 'pythoneventsspider'

    start_urls = ['https://www.python.org/events/python-events/',]

Every spider is given a name, and also one or more start_urls which tell it where to start the crawling.

This spider has a field to store all the events that we find:

found_events = []
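Because found_events is defined in the class body, it is a class attribute: a single list shared by the class and every instance. That is convenient here, but it is worth knowing if you ever create more than one spider. The following plain-Python sketch shows the behavior; EventCollector is a hypothetical stand-in, and no Scrapy is needed to run it.

```python
class EventCollector:
    # Class-level list: one list object shared by the class and all instances.
    found_events = []

    def add(self, event):
        # Appending mutates the shared list; it does not create a per-instance copy.
        self.found_events.append(event)

first = EventCollector()
second = EventCollector()
first.add({'name': 'PyCascades 2018'})

# The event added through the first instance is visible through the second
print(second.found_events)
print(EventCollector.found_events is first.found_events)
```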

The spider then has a method named parse, which will be called for every page the spider collects.

def parse(self, response):

    for event in response.xpath('//ul[contains(@class, "list-recent-events")]/li'):

        event_details = dict()

        event_details['name'] = event.xpath('h3[@class="event-title"]/a/text()').extract_first()

        event_details['location'] = event.xpath('p/span[@class="event-location"]/text()').extract_first()

        event_details['time'] = event.xpath('p/time/text()').extract_first()

        self.found_events.append(event_details)

The implementation of this method uses an XPath selection to get the events from the page (XPath is one of the built-in means of navigating HTML in Scrapy). It then builds the event_details dictionary object similarly to the other examples, and then adds it to the found_events list.
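The relative XPath expressions can be tried without Scrapy. The following is a minimal sketch using xml.etree.ElementTree from the standard library, which supports only a subset of XPath (for example, it has no contains() function), so it is an approximation of what Scrapy's selectors do. The markup is a hypothetical, well-formed stand-in for the events section.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for the events markup.
markup = """
<ul class="list-recent-events">
  <li>
    <h3 class="event-title"><a href="#">PyCon Pune 2018</a></h3>
    <p><time>08 Feb. – 12 Feb. 2018</time><span class="event-location">Pune, India</span></p>
  </li>
  <li>
    <h3 class="event-title"><a href="#">PyTennessee 2018</a></h3>
    <p><time>10 Feb. – 12 Feb. 2018</time><span class="event-location">Nashville, TN, USA</span></p>
  </li>
</ul>
"""

root = ET.fromstring(markup)
found = []

# Each path is evaluated relative to the current <li>, as in the parse method
for item in root.findall('li'):
    found.append({
        'name': item.find('h3[@class="event-title"]/a').text,
        'location': item.find('p/span[@class="event-location"]').text,
        'time': item.find('p/time').text,
    })

for event in found:
    print(event)
```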

The remaining code does the programmatic execution of the Scrapy crawler.

process = CrawlerProcess({ 'LOG_LEVEL': 'ERROR'})

process.crawl(PythonEventsSpider)

spider = next(iter(process.crawlers)).spider

process.start()

It starts with the creation of a CrawlerProcess which does the actual crawling and a lot of other tasks. We pass it a LOG_LEVEL of ERROR to prevent the voluminous Scrapy output. Change this to DEBUG and re-run it to see the difference.

Next we tell the crawler process to use our Spider implementation. We get the actual spider object from that crawler so that we can get the items when the crawl is complete. And then we kick off the whole thing by calling process.start().

When the crawl is completed we can then iterate and print out the items that were found.

for event in spider.found_events: print(event)

Scraping Python.org with Selenium and PhantomJS

This recipe will introduce Selenium and PhantomJS, two frameworks that are very different from the frameworks in the previous recipes. In fact, Selenium and PhantomJS are often used in functional/acceptance testing. We want to demonstrate these tools as they offer unique benefits from the scraping perspective. Several that we will look at later in the book are the ability to fill out forms, press buttons, and wait for dynamic JavaScript to be downloaded and executed.

Selenium itself is a programming language neutral framework. It offers a number of programming language bindings, such as Python, Java, C#, and PHP (amongst others). The framework also provides many components that focus on testing. Three commonly used components are:

- IDE for recording and replaying tests

- WebDriver, which launches a web browser (such as Firefox, Chrome, or Internet Explorer) and drives it by sending commands to the selected browser and returning the results

- A grid server executes tests with a web browser on a remote server. It can run multiple test cases in parallel.

Getting ready

First we need to install Selenium. We do this with our trusty pip:

~ $ pip install selenium

Collecting selenium

Downloading selenium-3.8.1-py2.py3-none-any.whl (942kB)

100% |████████████████████████████████| 952kB 236kB/s

Installing collected packages: selenium

Successfully installed selenium-3.8.1

This installs the Selenium Client Driver for Python (the language bindings). You can find more information on it at https://github.com/SeleniumHQ/selenium/blob/master/py/docs/source/index.rst if you want to in the future.

For this recipe we also need to have the driver for Firefox in the directory (it's named geckodriver). This file is operating system specific. I've included the file for Mac in the folder. To get other versions, visit https://github.com/mozilla/geckodriver/releases.

Still, when running this sample you may get the following error:

FileNotFoundError: [Errno 2] No such file or directory: 'geckodriver'

If you do, put the geckodriver file somewhere on your system's PATH, or add the 01 folder to your path. Oh, and you will need to have Firefox installed.

Finally, it is required to have PhantomJS installed. You can download and find installation instructions at: http://phantomjs.org/

How to do it...

The script for this recipe is 01/04_events_with_selenium.py.

- The following is the code:

from selenium import webdriver

def get_upcoming_events(url):

    driver = webdriver.Firefox()

    driver.get(url)

    events = driver.find_elements_by_xpath('//ul[contains(@class, "list-recent-events")]/li')

    for event in events:

        event_details = dict()

        event_details['name'] = event.find_element_by_xpath('h3[@class="event-title"]/a').text

        event_details['location'] = event.find_element_by_xpath('p/span[@class="event-location"]').text

        event_details['time'] = event.find_element_by_xpath('p/time').text

        print(event_details)

    driver.close()

get_upcoming_events('https://www.python.org/events/python-events/')

- And run the script with Python. You will see familiar output:

~ $ python 04_events_with_selenium.py

{'name': 'PyCascades 2018', 'location': 'Granville Island Stage, 1585 Johnston St, Vancouver, BC V6H 3R9, Canada', 'time': '22 Jan. – 24 Jan.'}

{'name': 'PyCon Cameroon 2018', 'location': 'Limbe, Cameroon', 'time': '24 Jan. – 29 Jan.'}

{'name': 'FOSDEM 2018', 'location': 'ULB Campus du Solbosch, Av. F. D. Roosevelt 50, 1050 Bruxelles, Belgium', 'time': '03 Feb. – 05 Feb.'}

{'name': 'PyCon Pune 2018', 'location': 'Pune, India', 'time': '08 Feb. – 12 Feb.'}

{'name': 'PyCon Colombia 2018', 'location': 'Medellin, Colombia', 'time': '09 Feb. – 12 Feb.'}

{'name': 'PyTennessee 2018', 'location': 'Nashville, TN, USA', 'time': '10 Feb. – 12 Feb.'}

During this process, Firefox will pop up and open the page. We have reused the previous recipe and adapted it to use Selenium.

How it works

The primary difference in this recipe is the following code:

driver = webdriver.Firefox()

driver.get(url)

This gets the Firefox driver and uses it to get the content of the specified URL. This works by starting Firefox and automating it to go to the page, and then Firefox returns the page content to our app. This is why Firefox popped up. The other difference is that to find things we need to call find_element_by_xpath to search the resulting HTML.

There's more...

PhantomJS, in many ways, is very similar to Selenium. It has fast and native support for various web standards, with features such as DOM handling, CSS selector, JSON, Canvas, and SVG. It is often used in web testing, page automation, screen capturing, and network monitoring.

There is one key difference between Selenium and PhantomJS: PhantomJS is headless and uses WebKit. As we saw, Selenium opens and automates a browser. This is not very good if we are in a continuous integration or testing environment where the browser is not installed, and where we also don't want thousands of browser windows or tabs being opened. Being headless makes this faster and more efficient.

The example for PhantomJS is in the 01/05_events_with_phantomjs.py file. There is a single one-line change:

driver = webdriver.PhantomJS('phantomjs')

Running the script results in similar output to the Selenium / Firefox example, but without a browser popping up, and it also takes less time to complete.

Download code from GitHub
