This chapter introduces the basic Amazon S3 operations of the AWS SDKs. Using the official AWS SDK sample applications, we create S3 buckets and upload, list, get, and download objects.

In this chapter, we will cover:

Learning AWS SDK for Java and basic S3 operations with sample code

Learning AWS SDK for Node.js and basic S3 operations with sample code

Learning AWS SDK for Python and basic S3 operations with sample code

Learning AWS SDK for Ruby and basic S3 operations with sample code

Learning AWS SDK for PHP and basic S3 operations with sample code

Amazon Simple Storage Service (Amazon S3) is a cloud object storage service provided by Amazon Web Services. Amazon S3 has no minimum fee, so we pay only for what we store. We can store and retrieve any amount of data, known as objects, in S3 buckets in different geographical regions through the API or the AWS SDKs. The AWS SDKs provide programmatic access to features such as multipart uploads, object versioning, and access control lists (ACLs).

Amazon Web Services provides the following SDKs at http://aws.amazon.com/developers/getting-started/:

AWS SDK for Android

AWS SDK for JavaScript (Browser)

AWS SDK for iOS

AWS SDK for Java

AWS SDK for .NET

AWS SDK for Node.js

AWS SDK for PHP

AWS SDK for Python

AWS SDK for Ruby

This section shows how to configure an IAM user to access S3 and how to install the AWS SDK for Java. It also shows how to create S3 buckets, put objects, and get objects using the sample code, and explains how the sample code works.

The AWS SDK for Java is a Java API for AWS that contains the AWS Java library, code samples, and Eclipse IDE support. With it, you can build scalable applications that work with Amazon S3, Amazon Glacier, and other services.

To get started with AWS SDK for Java, it is necessary to install the following on your development machine:

J2SE Development Kit 6.0 or later

Apache Maven

First, we set up an IAM user, create a user policy, and attach the policy to the IAM user in the IAM management console in order to securely allow the IAM user to access the S3 bucket. We can define the access control for the IAM user by configuring the IAM policy. Then, we install AWS SDK for Java by using Apache Maven and git.

Tip

For more information about AWS Identity and Access Management (IAM), refer to http://aws.amazon.com/iam/.

There are two ways to install the AWS SDK for Java: getting the source code from GitHub, or using Apache Maven. We use Maven because the official site recommends it and it is much easier.

Sign in to the AWS management console and move to the IAM console at https://console.aws.amazon.com/iam/home.

In the navigation panel, click on Users and then on Create New Users.

Enter the username and select Generate an access key for each user, then click on Create.

Click on Download Credentials and save the credentials. We will use the credentials composed of Access Key ID and Secret Access Key to access the S3 bucket.

Click on Select Policy Template and then click on Amazon S3 Full Access.

Click on Apply Policy.
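The Amazon S3 Full Access template allows every S3 action on every resource, which is convenient for the samples but broader than most applications need. As a hedged sketch, the following Python builds a narrower policy document that could be attached as a custom policy instead. It assumes that bucket names start with a chosen prefix (here the python-sdk-sample- prefix used by the Python sample later in this chapter); the function name and the exact action list are illustrative assumptions, not part of the sample applications.

```python
import json

# Assumption: all buckets the application manages start with this prefix.
SAMPLE_BUCKET_PREFIX = "python-sdk-sample-"


def sample_policy(prefix: str) -> dict:
    """Build an IAM policy limited to buckets whose names start with `prefix`."""
    bucket_arn = f"arn:aws:s3:::{prefix}*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Listing all buckets cannot be restricted to a name prefix.
                "Effect": "Allow",
                "Action": "s3:ListAllMyBuckets",
                "Resource": "*",
            },
            {   # Bucket-level operations: create, delete, and list objects.
                "Effect": "Allow",
                "Action": ["s3:CreateBucket", "s3:DeleteBucket", "s3:ListBucket"],
                "Resource": bucket_arn,
            },
            {   # Object-level operations on every key inside matching buckets.
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(sample_policy(SAMPLE_BUCKET_PREFIX), indent=2))
```

Bucket-level actions apply to the arn:aws:s3:::bucket form of the ARN, while object-level actions need the arn:aws:s3:::bucket/* form, which is why the two statements are kept separate.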

Next, we clone a repository for the S3 Java sample application and run the application by using the Maven command (mvn). First, we set up the AWS credentials to operate S3, clone the sample application repository from GitHub, and then build and run the sample application using the mvn command:

Create a credentials file and put your access key ID and secret access key in it. You can find both values in the CSV file you downloaded from the management console when you created the IAM user:

$ vim ~/.aws/credentials

[default]

aws_access_key_id = <YOUR_ACCESS_KEY_ID>

aws_secret_access_key = <YOUR_SECRET_ACCESS_KEY>
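The credentials file uses an INI-style layout with one section per profile. As a minimal sketch of how a client could read the [default] profile, the following Python uses the standard configparser module. The function names are hypothetical, and the real SDK credential provider chains also check other sources, such as environment variables, not just this file.

```python
import configparser
from pathlib import Path
from typing import Tuple


def load_profile(text: str, profile: str = "default") -> Tuple[str, str]:
    """Return (access_key_id, secret_access_key) for `profile` from credentials-file text."""
    # interpolation=None so that characters such as '%' in secrets are taken literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section(profile):
        raise KeyError(f"profile {profile!r} not found")
    section = parser[profile]
    return section["aws_access_key_id"], section["aws_secret_access_key"]


def load_default_file(profile: str = "default") -> Tuple[str, str]:
    """Read ~/.aws/credentials, the file created in the previous step."""
    path = Path.home() / ".aws" / "credentials"
    return load_profile(path.read_text(), profile)


sample = """
[default]
aws_access_key_id = <YOUR_ACCESS_KEY_ID>
aws_secret_access_key = <YOUR_SECRET_ACCESS_KEY>
"""
print(load_profile(sample))  # ('<YOUR_ACCESS_KEY_ID>', '<YOUR_SECRET_ACCESS_KEY>')
```

Additional profiles can be added as further sections, such as [dev], and selected by name.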

Tip

Downloading the example code

You can download the example code files from your account at http://www.packtpub.com for all the Packt Publishing books you have purchased. If you purchased this book elsewhere, you can visit http://www.packtpub.com/support and register to have the files e-mailed directly to you.

Download the sample SDK application:

$ git clone https://github.com/awslabs/aws-java-sample.git

$ cd aws-java-sample/

Run the sample application:

$ mvn clean compile exec:java

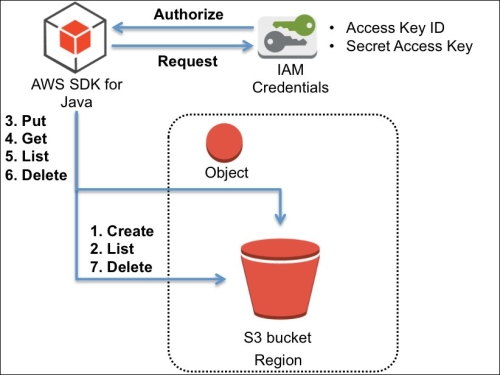

The sample code works as shown in the following diagram: it initializes the credentials that allow access to Amazon S3, creates a bucket in a region and lists the buckets, puts, gets, and lists objects in the bucket, and finally deletes the objects and then the bucket:

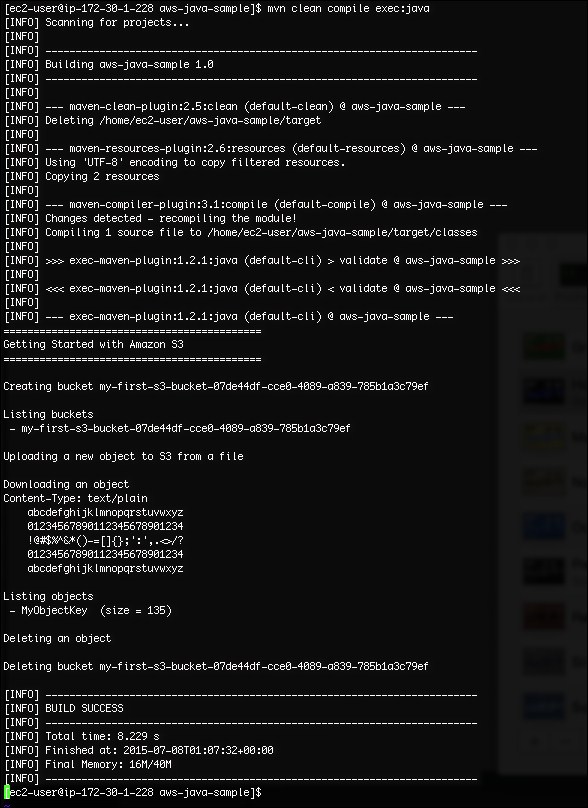

Now, let's run the sample application and see the output of the preceding command, as shown in the following screenshot, and then follow the source code step by step:

Then, let's examine the sample code at aws-java-sample/src/main/java/com/amazonaws/samples/S3Sample.java.

The AmazonS3Client constructor creates an S3 client that uses the default credential provider chain, which includes the ~/.aws/credentials file. Then, the Region.getRegion method retrieves the Region object for US West (Oregon), and setRegion configures the client to use it:

AmazonS3 s3 = new AmazonS3Client();

Region usWest2 = Region.getRegion(Regions.US_WEST_2);

s3.setRegion(usWest2);

Tip

Amazon S3 is available in several regions, and each bucket is created in the region you specify. For more information about S3 regions, refer to http://docs.aws.amazon.com/general/latest/gr/rande.html#s3_region.

The createBucket method creates an S3 bucket with the specified name in the specified region:

s3.createBucket(bucketName);
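The bucket name passed to createBucket must be unique across all AWS accounts and must follow the S3 naming rules. The following is a minimal Python sketch that checks a subset of those rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens only; a letter or digit at each end; no adjacent dots; not formatted like an IP address) before calling the service. The function name is hypothetical, and the S3 documentation has the full, current list of rules.

```python
import re

# Lowercase letters, digits, dots, and hyphens, with a letter or digit at each end.
_ALLOWED = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")
# Four dot-separated groups of 1-3 digits, such as 192.168.5.4.
_IP_LIKE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Check a bucket name against common S3 naming rules."""
    if not 3 <= len(name) <= 63:
        return False          # length must be between 3 and 63 characters
    if not _ALLOWED.fullmatch(name):
        return False          # disallowed character, or bad first/last character
    if ".." in name:
        return False          # two adjacent periods are not allowed
    if _IP_LIKE.fullmatch(name):
        return False          # must not look like an IPv4 address
    return True


for candidate in ("my-first-bucket", "My-Bucket", "192.168.5.4"):
    print(candidate, is_valid_bucket_name(candidate))
```

fullmatch is used instead of ^...$ anchors because $ in Python also matches before a trailing newline.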

The listBuckets method returns the buckets owned by the account, and the loop prints each bucket's name:

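for (Bucket bucket : s3.listBuckets()) {

System.out.println(" - " + bucket.getName());

}

The putObject method uploads an object into the specified bucket. The PutObjectRequest takes the bucket name, the object key, and the file to upload, which the sample creates with its createSampleFile helper: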

s3.putObject(new PutObjectRequest(bucketName, key, createSampleFile()));
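When an object is uploaded in a single PUT request, a client can send a Content-MD5 header (the Base64-encoded MD5 digest of the body) so that S3 rejects the upload if the data was corrupted in transit. For single-part uploads without SSE-KMS or SSE-C encryption, the ETag that S3 returns is typically the hex MD5 digest of the content in quotes. The following Python sketch only computes these two values locally with hashlib and base64; it does not call S3, and the helper name is hypothetical.

```python
import base64
import hashlib


def upload_digests(body: bytes) -> dict:
    """Return the Content-MD5 header value and the expected ETag for a single-part upload."""
    digest = hashlib.md5(body).digest()
    return {
        # Content-MD5 is the Base64 encoding of the raw 16-byte MD5 digest.
        "Content-MD5": base64.b64encode(digest).decode("ascii"),
        # For a simple PUT without SSE-KMS/SSE-C, the ETag is typically the quoted hex digest.
        "expected_etag": '"' + digest.hex() + '"',
    }


print(upload_digests(b"Hello World!"))
```

Comparing the returned ETag with expected_etag after an upload is a quick integrity check, but it does not hold for multipart uploads, whose ETags are computed differently.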

The getObject method gets the object stored in the specified bucket:

S3Object object = s3.getObject(new GetObjectRequest(bucketName, key));

The listObjects method returns summary information for the objects in the specified bucket:

ObjectListing objectListing = s3.listObjects(new ListObjectsRequest()

.withBucketName(bucketName));
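A single ListObjects call returns at most 1,000 keys. When more keys match, the response is marked as truncated, and the client requests the next page starting after the last key it received (in the Java SDK, through ObjectListing.isTruncated() and s3.listNextBatchOfObjects). The following Python is an in-memory sketch of that paging loop; the hypothetical list_page function stands in for the service call so that the logic runs offline.

```python
from typing import List, Optional, Tuple


def list_page(keys: List[str], prefix: str = "", marker: Optional[str] = None,
              max_keys: int = 1000) -> Tuple[List[str], bool]:
    """Hypothetical stand-in for one ListObjects call.

    Returns up to `max_keys` keys that start with `prefix` and sort after `marker`,
    plus a flag telling whether more matching keys remain (is_truncated).
    """
    if max_keys < 1:
        raise ValueError("max_keys must be at least 1")
    matching = sorted(k for k in keys
                      if k.startswith(prefix) and (marker is None or k > marker))
    return matching[:max_keys], len(matching) > max_keys


def list_all(keys: List[str], prefix: str = "", max_keys: int = 1000) -> List[str]:
    """Request pages until the listing is no longer truncated."""
    result: List[str] = []
    marker: Optional[str] = None
    while True:
        page, truncated = list_page(keys, prefix, marker, max_keys)
        result.extend(page)
        if not truncated:
            return result
        marker = page[-1]   # the next request starts after the last key received
```

For example, with 25 matching keys and max_keys=10, list_all makes three requests that return 10, 10, and 5 keys.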

The deleteObject method deletes the specified object in the specified bucket.

Objects must be removed before the bucket is deleted because S3 cannot delete a bucket that still contains objects. We therefore delete all the objects in the bucket first and then delete the bucket:

s3.deleteObject(bucketName, key);
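S3 rejects a DeleteBucket request for a non-empty bucket with a 409 Conflict response whose error code is BucketNotEmpty, which is why the sample deletes its objects first. The following in-memory Python model illustrates only that ordering rule; the FakeS3 class and the BucketNotEmpty exception are hypothetical and do not correspond to any SDK.

```python
from typing import Dict


class BucketNotEmpty(Exception):
    """Hypothetical error mirroring S3's 409 BucketNotEmpty response."""


class FakeS3:
    """Toy in-memory model of the bucket/object lifecycle used by the samples."""

    def __init__(self) -> None:
        self.buckets: Dict[str, Dict[str, bytes]] = {}

    def create_bucket(self, name: str) -> None:
        self.buckets.setdefault(name, {})

    def put_object(self, bucket: str, key: str, body: bytes) -> None:
        self.buckets[bucket][key] = body

    def delete_object(self, bucket: str, key: str) -> None:
        # Deleting a missing key is not an error, as in S3 without versioning.
        self.buckets[bucket].pop(key, None)

    def delete_bucket(self, name: str) -> None:
        if self.buckets[name]:
            raise BucketNotEmpty(f"{name} still contains {len(self.buckets[name])} object(s)")
        del self.buckets[name]


s3 = FakeS3()
s3.create_bucket("demo-bucket")
s3.put_object("demo-bucket", "hello.txt", b"Hello World!")
try:
    s3.delete_bucket("demo-bucket")      # refused: the bucket still holds hello.txt
except BucketNotEmpty as err:
    print("delete refused:", err)
s3.delete_object("demo-bucket", "hello.txt")
s3.delete_bucket("demo-bucket")          # succeeds once the bucket is empty
```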

The deleteBucket method deletes the specified bucket in the region.

An AmazonServiceException means that the request reached Amazon S3 but was rejected with an error response. It exposes details about the failed request, such as the error message, HTTP status code, AWS error code, error type, and request ID, that help you diagnose the problem. An AmazonClientException, on the other hand, means that the client could not make the service call or could not get a response from AWS, for example, because no network connection was available:

s3.deleteBucket(bucketName);

} catch (AmazonServiceException ase) {

System.out.println("Caught an AmazonServiceException, which means your request made it " + "to Amazon S3, but was rejected with an error response for some reason.");

System.out.println("Error Message: " + ase.getMessage());

System.out.println("HTTP Status Code: " + ase.getStatusCode());

System.out.println("AWS Error Code: " + ase.getErrorCode());

System.out.println("Error Type: " + ase.getErrorType());

System.out.println("Request ID: " + ase.getRequestId());

} catch (AmazonClientException ace) {

System.out.println("Caught an AmazonClientException, which means the client encountered " + "a serious internal problem while trying to communicate with S3, " + "such as not being able to access the network.");

System.out.println("Error Message: " + ace.getMessage());

}

AWS SDK for Java, available at https://github.com/aws/aws-sdk-java

Developer Guide available at http://docs.aws.amazon.com/AWSSdkDocsJava/latest/DeveloperGuide/

The API documentation available at http://docs.aws.amazon.com/AWSJavaSDK/latest/javadoc/

Creating the IAM user in your AWS account, available at http://docs.aws.amazon.com/IAM/latest/UserGuide/Using_SettingUpUser.html

This section shows how to install the AWS SDK for Node.js and how to create S3 buckets and put objects using the sample code, and explains how the sample code works.

The AWS SDK for JavaScript runs in browsers and on mobile devices, while the AWS SDK for Node.js is used on the backend. Each API operation is exposed as a method on a service object.

To get started with AWS SDK for Node.js, it is necessary to install the following on your development machine:

Node.js (http://nodejs.org/)

Proceed with the following steps to install the packages and run the sample application. The preferred way to install the SDK is to use npm, the package manager for Node.js.

Download the sample SDK application:

$ git clone https://github.com/awslabs/aws-nodejs-sample.git

$ cd aws-nodejs-sample/

Install the dependencies with npm, and then run the sample application:

$ npm install

$ node sample.js

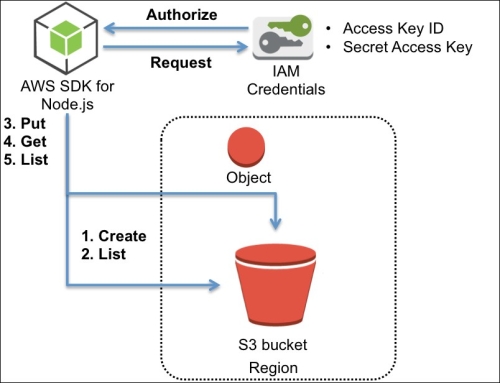

The sample code works as shown in the following diagram: it initializes the credentials that allow access to Amazon S3, creates a bucket in a region, puts an object into the bucket, and finally deletes the object and the bucket. However, this sample application does not perform the final deletion step, so make sure you delete the object and the bucket yourself after running it:

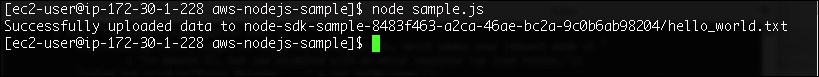

Now, let's run the sample application and see the output of the command, as shown in the following screenshot, and then follow the source code step by step:

Now, let's examine the sample code at aws-nodejs-sample/sample.js. The AWS.S3 constructor creates an S3 service object (client):

var s3 = new AWS.S3();

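The createBucket method creates an S3 bucket whose name is given by the bucketName variable; by default, the bucket is created in the US Standard region. The putObject method then uploads an object whose key is given by the keyName variable into the bucket. Because putObject is called from the createBucket callback, the upload starts only after the bucket creation request has completed (the sample ignores any error from createBucket):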

var bucketName = 'node-sdk-sample-' + uuid.v4();

var keyName = 'hello_world.txt';

s3.createBucket({Bucket: bucketName}, function() {

var params = {Bucket: bucketName, Key: keyName, Body: 'Hello World!'};

s3.putObject(params, function(err, data) {

if (err)

console.log(err)

else

console.log("Successfully uploaded data to " + bucketName + "/" + keyName);

});

});

The whole sample code is as follows:

var AWS = require('aws-sdk');

var uuid = require('node-uuid');

var s3 = new AWS.S3();

var bucketName = 'node-sdk-sample-' + uuid.v4();

var keyName = 'hello_world.txt';

s3.createBucket({Bucket: bucketName}, function() {

var params = {Bucket: bucketName, Key: keyName, Body: 'Hello World!'};

s3.putObject(params, function(err, data) {

if (err)

console.log(err)

else

console.log("Successfully uploaded data to " + bucketName + "/" + keyName);

});

});

AWS SDK for JavaScript, available at https://github.com/aws/aws-sdk-js

Developer Guide available at http://docs.aws.amazon.com/AWSJavaScriptSDK/guide/index.html

The API documentation available at http://docs.aws.amazon.com/AWSJavaScriptSDK/latest/index.html

This section shows how to install the AWS SDK for Python and how to create S3 buckets, put objects, and get objects using the sample code, and explains how the sample code works.

Boto, the Python interface to Amazon Web Services, supports all of its features on Python 2.6 and 2.7. The next major version, which will support Python 3.3, is under development.

To get started with the AWS SDK for Python (Boto), install the following on your development machine:

Python 2.6 or 2.7 (https://www.python.org/)

Boto (https://github.com/boto/boto)

Proceed with the following steps to install the packages and run the sample application:

Download the sample SDK application:

$ git clone https://github.com/awslabs/aws-python-sample.git

$ cd aws-python-sample/

Install Boto, and then run the sample application:

$ pip install boto

$ python s3_sample.py

The sample code works as shown in the following diagram; initiating the credentials to allow access to Amazon S3, creating a bucket in a region, putting and getting objects into the bucket, and then finally deleting the objects and the bucket.

Now, let's run the sample application and see the output of the preceding command, as shown in the following screenshot, and then follow the source code step by step:

Now, let's examine the sample code; the path is aws-python-sample/s3_sample.py.

The connect_s3 method creates a connection for accessing S3:

s3 = boto.connect_s3()

The create_bucket method creates an S3 bucket with the specified name defined as the bucket_name variable in the standard US region by default:

bucket_name = "python-sdk-sample-%s" % uuid.uuid4()

print "Creating new bucket with name: " + bucket_name

bucket = s3.create_bucket(bucket_name)
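Because bucket names are shared by all AWS accounts, the samples append a random UUID to a fixed prefix. The following is a hedged sketch of that pattern in Python 3 (the sample itself targets Python 2), with a length check added because a bucket name may be at most 63 characters; the helper name is hypothetical.

```python
import uuid

MAX_BUCKET_NAME_LENGTH = 63


def unique_bucket_name(prefix: str) -> str:
    """Append a random UUID4 (36 lowercase characters) to `prefix`, as the samples do."""
    name = f"{prefix}{uuid.uuid4()}"
    if len(name) > MAX_BUCKET_NAME_LENGTH:
        raise ValueError(f"prefix too long: {len(name)} > {MAX_BUCKET_NAME_LENGTH} characters")
    return name


for prefix in ("python-sdk-sample-", "node-sdk-sample-", "ruby-sdk-sample-"):
    print(unique_bucket_name(prefix))
```

With a 36-character UUID, a prefix can be at most 27 characters long.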

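A Key object represents an object in the bucket. Setting its key attribute names the object, and set_contents_from_string uploads the data:

from boto.s3.key import Key

k = Key(bucket)

k.key = 'python_sample_key.txt'

k.set_contents_from_string('Hello World!')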

The delete method of the Key object deletes that object from the bucket:

k.delete()

The delete_bucket method deletes the bucket:

s3.delete_bucket(bucket_name)

The whole sample code is as follows:

import boto

import uuid

s3 = boto.connect_s3()

bucket_name = "python-sdk-sample-%s" % uuid.uuid4()

print "Creating new bucket with name: " + bucket_name

bucket = s3.create_bucket(bucket_name)

from boto.s3.key import Key

k = Key(bucket)

k.key = 'python_sample_key.txt'

print "Uploading some data to " + bucket_name + " with key: " + k.key

k.set_contents_from_string('Hello World!')

expires_in_seconds = 1800

print "Generating a public URL for the object we just uploaded. This URL will be active for %d seconds" % expires_in_seconds

print

print k.generate_url(expires_in_seconds)

print

raw_input("Press enter to delete both the object and the bucket...")

print "Deleting the object."

k.delete()

print "Deleting the bucket."

s3.delete_bucket(bucket_name)

AWS SDK for Python (Boto), available at https://github.com/boto/boto

Developer Guide and the API documentation available at http://boto.readthedocs.org/en/latest/

This section shows how to install the AWS SDK for Ruby and how to create S3 buckets, put objects, and get objects using the sample code, and explains how the sample code works.

The AWS SDK for Ruby exposes AWS API operations through Ruby classes, which spares developers from writing complicated low-level code. As officially recommended, new users should start with AWS SDK for Ruby V2.

To get started with AWS SDK for Ruby, it is necessary to install the following on your development machine:

Ruby (https://www.ruby-lang.org/)

Bundler (http://bundler.io/)

Proceed with the following steps to install the packages and run the sample application. We install the stable AWS SDK for Ruby v2 and download the sample code.

Download the sample SDK application:

$ git clone https://github.com/awslabs/aws-ruby-sample.git

$ cd aws-ruby-sample/

Run the sample application:

$ bundle install

$ ruby s3_sample.rb

The sample code works as shown in the following diagram; initiating the credentials to allow access to Amazon S3, creating a bucket in a region, putting and getting objects into the bucket, and then finally deleting the objects and the bucket.

Now, let's run the sample application and see the output of the preceding command, which is shown in the following screenshot, and then follow the source code step by step:

Now, let's examine the sample code; the path is aws-ruby-sample/s3_sample.rb.

The Aws::S3::Resource.new method creates an S3 resource object for the US West (Oregon) region:

s3 = Aws::S3::Resource.new(region: 'us-west-2')

The s3.bucket method returns a reference to a bucket whose name is given by the bucket_name variable, and bucket.create creates that bucket in the resource's region (us-west-2):

uuid = UUID.new

bucket_name = "ruby-sdk-sample-#{uuid.generate}"

bucket = s3.bucket(bucket_name)

bucket.create

The bucket.object method returns a reference to an object with the given key, and the object.put method uploads the object's contents to the bucket:

object = bucket.object('ruby_sample_key.txt')

object.put(body: "Hello World!")

The object.public_url method returns the object's unsigned URL. Anonymous users can read the object through this URL only if its permissions allow public access:

puts object.public_url

The object.presigned_url(:get) method creates a presigned URL that grants temporary read access to the object without making it public:

puts object.presigned_url(:get)

The bucket.delete! method deletes all the objects in a bucket, and then deletes the bucket:

bucket.delete!

The whole sample code is as follows:

#!/usr/bin/env ruby

require 'rubygems'

require 'bundler/setup'

require 'aws-sdk'

require 'uuid'

s3 = Aws::S3::Resource.new(region: 'us-west-2')

uuid = UUID.new

bucket_name = "ruby-sdk-sample-#{uuid.generate}"

bucket = s3.bucket(bucket_name)

bucket.create

object = bucket.object('ruby_sample_key.txt')

object.put(body: "Hello World!")

puts "Created an object in S3 at:"

puts object.public_url

puts "\nUse this URL to download the file:"

puts object.presigned_url(:get)

puts "(press any key to delete both the bucket and the object)"

$stdin.getc

puts "Deleting bucket #{bucket_name}"

bucket.delete!

AWS SDK for Ruby, available at https://github.com/aws/aws-sdk-ruby

Developer Guide available at http://docs.aws.amazon.com/AWSSdkDocsRuby/latest/DeveloperGuide/

The API documentation available at http://docs.aws.amazon.com/sdkforruby/api/frames.html

This section shows how to install the AWS SDK for PHP and how to create S3 buckets, put objects, and get objects using the sample code, and explains how the sample code works.

The AWS SDK for PHP is a powerful tool that helps PHP developers quickly build stable applications.

To get started with AWS SDK for PHP, it is necessary to install the following on your development machine:

PHP 5.3.3 or later, compiled with the cURL extension (http://php.net/)

Composer (https://getcomposer.org/)

It is recommended to use Composer to install AWS SDK for PHP because it is much easier than getting the source code.

Proceed with the following steps to install the packages and run the sample application:

Download the sample SDK application:

$ git clone https://github.com/awslabs/aws-php-sample.git

$ cd aws-php-sample/

Install the dependencies with Composer, and then run the sample application:

$ composer install

$ php sample.php

The sample code works as shown in the following diagram; initiating the credentials to allow access to Amazon S3, creating a bucket in a region, putting and getting objects into the bucket, and then finally deleting the objects and the bucket:

Now, let's run the sample application and see the output of the preceding command, as shown in the following screenshot, and then follow the source code step by step:

Now, let's examine the sample code; the path is aws-php-sample/sample.php.

The S3Client::factory method creates an S3 client and is the easiest way to get up and running:

$client = S3Client::factory();

The createBucket method creates an S3 bucket with the specified name in a region defined in the credentials file:

$result = $client->createBucket(array(

'Bucket' => $bucket

));

The putObject method uploads an object into the bucket:

$key = 'hello_world.txt';

$result = $client->putObject(array(

'Bucket' => $bucket,

'Key' => $key,

'Body' => "Hello World!"

));

The getObject method retrieves the object from the bucket:

$result = $client->getObject(array(

'Bucket' => $bucket,

'Key' => $key

));

The deleteObject method removes the object from the bucket:

$result = $client->deleteObject(array(

'Bucket' => $bucket,

'Key' => $key

));

The deleteBucket method deletes the bucket:

$result = $client->deleteBucket(array(

'Bucket' => $bucket

));

The whole sample code is as follows:

<?php

require 'vendor/autoload.php';

use Aws\S3\S3Client;

$client = S3Client::factory();

$bucket = uniqid("php-sdk-sample-", true);

echo "Creating bucket named {$bucket}\n";

$result = $client->createBucket(array(

'Bucket' => $bucket

));

$client->waitUntilBucketExists(array('Bucket' => $bucket));

$key = 'hello_world.txt';

echo "Creating a new object with key {$key}\n";

$result = $client->putObject(array(

'Bucket' => $bucket,

'Key' => $key,

'Body' => "Hello World!"

));

echo "Downloading that same object:\n";

$result = $client->getObject(array(

'Bucket' => $bucket,

'Key' => $key

));

echo "\n---BEGIN---\n";

echo $result['Body'];

echo "\n---END---\n\n";

echo "Deleting object with key {$key}\n";

$result = $client->deleteObject(array(

'Bucket' => $bucket,

'Key' => $key

));

echo "Deleting bucket {$bucket}\n";

$result = $client->deleteBucket(array(

'Bucket' => $bucket

));

AWS SDK for PHP, available at https://github.com/aws/aws-sdk-php

Developer Guide available at http://docs.aws.amazon.com/aws-sdk-php/guide/latest/index.html

The API documentation available at http://docs.aws.amazon.com/aws-sdk-php/latest/