In the previous section of the book, we tried to get ourselves familiar with virtualization, server consolidation, and cloud computing concepts. These concepts play a vital role in today's virtualized infrastructure of every organization, whether it's a small to medium size organization or a multinational enterprise. We also saw how server consolidation helps organizations to tailor their needs to consolidate their widespread server farm from underutilized to a consolidated few physical servers (hypervisors). Server consolidation also provides another way of allowing legacy applications to be run on the newer hardware and efficiently migrating the legacy server to run as a virtual machine (VM), which is called physical-to-virtual (P2V) migration.

Cloud computing is a journey, or can better be called a practice of managing IT in an organization. The major players for an organization to build their own private cloud, or start offering public cloud services, are people, processes, and technology. Server virtualization and consolidation is one element of cloud computing and provides the base platform for computing requirements in an economic way.

To understand more about cloud computing, we will go deeper into it and see the definitions for different types of clouds and their services, in this chapter.

In this first chapter, we will elaborate the Microsoft Hyper-V as a hypervisor and a server virtualization platform. After completing this chapter, we will understand the following concepts:

Hyper-V deployment scenarios

Hyper-V architecture

Features of Hyper-V

Hyper-V system and hardware requirements

In the year 2008, Microsoft released the RTM version of Microsoft Windows Server 2008, which had Hyper-V as its first version free of cost; but this was not the first virtualization product Microsoft introduced for operating system or application-level virtualization. Prior to the release of Hyper-V, Microsoft provided Virtual PC, which was a desktop application for end users to install on the base operating system and run as a secondary operating system, to enjoy the concept of virtualization. Using this, a user was able to have two copies of the same or different operating systems running on his/her PC. Later, Microsoft went one step further and released Microsoft Virtual Server 2005 in the year 2006. Virtual Server was the first initiative where Microsoft jumped into server-side virtualization, and this journey later continued with the release of Microsoft Windows Server 2008. In this release of the Windows Server operating system, Microsoft introduced its first true x64 hardware-based hypervisor, known as Hyper-V. In this version of the Microsoft virtualization solution, Microsoft also introduced the flexibility to make virtual machines highly available, with the use of Microsoft Cluster Service (MSCS). This high-availability feature for virtual machines was called quick migration. In this, all the virtual machines are located on a shared cluster storage, and if one Hyper-V cluster node fails, all the virtual machines running on this failed Hyper-V cluster node get migrated to another available Hyper-V cluster node, with some downtime. We will learn more about quick migration and other virtual machine migration within Hyper-V failover cluster, in Chapter 8, Building Hyper-V High Availability and Virtual Machine Mobility, where we will cover Hyper-V and the virtual machine high availability feature in detail.

Later, in 2009, Microsoft released Windows Server 2008 R2, which was the second release of Windows Server 2008 as an operating system. In this release Microsoft fixed a couple of bugs found in the earlier release of Hyper-V, but most importantly added a few new enhancements to the product, such as live migration of virtual machines and Cluster Shared Volumes (CSV). These new features for virtual machines—High Availability and Mobility—gained huge appreciation from customers, and the industry in particular. The first version of Hyper-V gained a lot of attention from those Windows Server 2008 users who found Hyper-V interesting as a native Windows server feature available for a true 64-bit compatible hypervisor. This first version of the product provided all the generic and standard features for 64-bit OS virtualization. As an IT professional, when I started using Hyper-V within Windows Server 2008, I personally found it a handy way of running a virtual machine for virtualizing applications to utilize the hardware resources more effectively, and for building research and development environments. Initially, we had to install and manage virtual machines either with VMware or Microsoft Virtual Server 2005 for setting up the testing environment for real-world proof of concepts (POCs), and since both these solutions were not available as native features of the operating system, it was a bit time consuming.

In the second release of Windows Server 2008 (R2), Microsoft added a series of new features and functionalities to the Hyper-V role, which made it popular among companies rolling out a hypervisor for their server consolidation and application virtualization needs. At this time, Hyper-V really started gaining the confidence of its customers, and a series of Microsoft enterprise applications, such as Exchange Server and SQL Server, officially started supporting virtualization of their workloads. So, at this stage of the product, Hyper-V was not only hosting R&D virtual workloads, but had also started hosting first-tier and middle-tier applications in High Availability setups, where live migration was available in addition to quick migration. Live migration was another milestone for the product in becoming an enterprise hypervisor. It allowed a single virtual machine to be seamlessly migrated to another Hyper-V host as part of a planned migration. We will get to know more about different types of Hyper-V HA deployment scenarios in the upcoming chapters.

Let's take our journey with Hyper-V to the next level—when Microsoft released Windows Server 2008 R2 Service Pack 1. In Windows Server 2008 R2 Service Pack 1, Microsoft added a number of enhancements to Hyper-V; Dynamic Memory and RemoteFX were two of the major enhancements. These two value-added features changed how Hyper-V used to work earlier, and also helped Hyper-V as a hypervisor to provide a base platform for environments such as Dynamic Data Centers. With the Dynamic Memory feature, Hyper-V allowed its customers to configure memory settings dynamically for the workloads. With dynamic memory, we configure the virtual machine to have "startup RAM" along with a reserved buffer, and a "max RAM". The startup RAM plus reserved buffer will be a dedicated allocation of RAM to the virtual machine, while the remaining max threshold limit value will allow the virtual machine to grab more memory from the physical server's available memory pool on the fly, whenever it is needed by the virtual machine. With this new era, the process of assigning and configuring memory for virtual machines was changed completely, where the Dynamic Memory feature helped administrators to efficiently utilize the physical resources among multiple workloads running on the same Hyper-V host server.
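The relationship between startup RAM, the reserved buffer, and max RAM described above can be sketched as a toy model. This is only an illustration under stated assumptions, not how Hyper-V implements Dynamic Memory: the class names, the fixed-size buffer in MB, and the `request_more` method are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DynamicMemoryVM:
    """Toy model of a VM configured with Dynamic Memory (all values in MB)."""
    name: str
    startup_mb: int   # RAM dedicated to the VM when it starts
    buffer_mb: int    # reserved buffer on top of the startup RAM (assumed fixed size)
    maximum_mb: int   # upper limit the VM may grow to
    assigned_mb: int = 0

    def __post_init__(self) -> None:
        # The dedicated allocation is the startup RAM plus the reserved buffer.
        self.assigned_mb = self.startup_mb + self.buffer_mb
        if self.assigned_mb > self.maximum_mb:
            raise ValueError("startup + buffer must not exceed the maximum")


class Host:
    """Hypothetical host that hands out memory from a shared physical pool."""

    def __init__(self, physical_mb: int) -> None:
        self.free_mb = physical_mb
        self.vms = {}

    def start(self, vm: DynamicMemoryVM) -> None:
        if vm.assigned_mb > self.free_mb:
            raise MemoryError(f"not enough free memory to start {vm.name}")
        self.free_mb -= vm.assigned_mb
        self.vms[vm.name] = vm

    def request_more(self, name: str, wanted_mb: int) -> int:
        """Grant extra memory, capped by the VM's maximum and the free pool."""
        vm = self.vms[name]
        headroom = vm.maximum_mb - vm.assigned_mb
        granted = max(0, min(wanted_mb, headroom, self.free_mb))
        vm.assigned_mb += granted
        self.free_mb -= granted
        return granted


host = Host(physical_mb=8192)
web = DynamicMemoryVM("web01", startup_mb=1024, buffer_mb=256, maximum_mb=4096)
host.start(web)
granted = host.request_more("web01", 4000)  # asks for more than the maximum allows
print(web.assigned_mb, granted, host.free_mb)
```

In this sketch the VM asks for 4,000 MB but receives only what its max RAM setting permits, and whatever remains in the host pool stays available to other workloads.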

The second major enhancement introduced with Service Pack 1 was RemoteFX. Microsoft RemoteFX was a new feature that was included in Windows Server 2008 R2 Service Pack 1. It introduced a set of end user experience enhancements for Remote Desktop Protocol (RDP) that enable a rich desktop environment within your corporate network.

In this section we will discuss how customers can take advantage of Hyper-V as a base hypervisor for the virtualization stack and server consolidation. And in addition to this, we will see how and where Hyper-V can contribute as a Microsoft native Windows Server hypervisor product.

The following are the scenarios in which Microsoft Hyper-V can contribute efficiently as a hypervisor:

Server consolidation

Physical-to-virtual and virtual-to-virtual conversions

Research and development

Business continuity and disaster recovery

Cloud computing

Now, in the following section, we will discuss each Hyper-V deployment scenario in detail, which may be one of your server virtualization project's main requirements.

Over the past decade, computer hardware has kept shrinking while gaining processing power, memory, and storage capacity. These changes allow new computers to process more data in less time, with less overhead. A good example of these enhancements is the inclusion of multiple cores in a physical processor, where a single processor socket effectively holds multiple processors, and thus we can have more processor cycles and more physical RAM in a single box.

These fast machines can handle an immense amount of workload, so dedicating one of them to a single application role that is not resource hungry may leave the hardware box underutilized. The loss is not limited to the server itself, because it may also leave your other investments underutilized, which results in a poor return on investment (ROI). This is a situation where the customer is not making the most of his/her investment.

Virtualization allows a server administrator to consolidate server workloads in the form of virtual machines. This allows an organization to fully utilize its servers with multiple operating systems running on the same box.

On one side, server consolidation gives the benefit of utilizing hardware resources to their utmost capacity, and on the other side it also helps to reduce the power consumption and keeps the datacenter environment less occupied with issues of cooling and loaded racks. Imagine a system's infrastructure without virtualization and server consolidation concepts: where for each single application frontend tier, we have to keep a physical server in the rack; where combining power, cooling, and rack space management would result in high maintenance costs from all aspects of datacenter management. Thanks to virtualization technology, which helps to reduce the maintenance cost by consolidating these multiple applications boxes and running them on a single physical box as virtual machines, we produce more with less cost and overhead while equally using our underutilized resources across the infrastructure.
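To make the consolidation idea concrete, the following sketch packs a set of underutilized servers onto as few hosts as possible. It is a rough planning aid under simplifying assumptions: memory is the only resource considered, the workload sizes are invented, and the first-fit decreasing heuristic is an illustrative choice rather than anything Hyper-V does for you.

```python
def consolidate(workloads_gb, host_capacity_gb):
    """Place workloads on hosts using first-fit decreasing.

    Returns a list of hosts, each a list of the workload sizes placed on it.
    """
    hosts = []
    # Placing the largest workloads first tends to leave fewer awkward gaps.
    for size in sorted(workloads_gb, reverse=True):
        if size > host_capacity_gb:
            raise ValueError(f"a {size} GB workload does not fit on any host")
        for host in hosts:
            if sum(host) + size <= host_capacity_gb:
                host.append(size)   # first host with enough room wins
                break
        else:
            hosts.append([size])    # no existing host had room: add a new one
    return hosts


# Hypothetical memory demand (GB) of ten physical servers to be virtualized.
legacy_servers_gb = [4, 8, 2, 16, 4, 6, 12, 2, 8, 2]
placement = consolidate(legacy_servers_gb, host_capacity_gb=32)

for number, host in enumerate(placement, start=1):
    print(f"host {number}: {host} -> {sum(host)} GB used")
```

With these sample numbers, ten physical boxes fit on two 32 GB hosts. A real sizing exercise would also account for CPU, storage, network load, and failover headroom.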

Physical-to-virtual, also known as P2V, is one of the most in-demand features of any server consolidation and datacenter consolidation project, where the client requirement is to convert running physical boxes into VMs, regardless of the operating system or installed applications. There is also the opposite possibility, virtual-to-physical (V2P), but it is rarely a requirement for a hypervisor administrator.

P2V allows legacy application servers to be converted into virtual machines and run on newer hardware, which is one of the features of server consolidation. This helps an organization to get rid of the legacy hardware, which consumes space in the racks, generates a huge amount of heat, and most importantly consumes a lot of power. So you can imagine removing these physical application boxes and converting them into a virtual machine, which can save a lot of your money and datacenter resources. On one end, P2V benefits from server consolidation concepts, and on the other side it provides a flexible platform for migrated servers and applications, by allowing dynamic memory and flexibility to add additional processors and hard disk drives, which is very difficult to do if you run a physical server.

Okay, we talked about P2V, but what about virtual-to-virtual (V2V)? This is also a growing requirement, especially with the availability of native Windows Server hypervisor (Hyper-V), and its fast growth and high demand. Nowadays, most of the customers that are running their Microsoft platform want to migrate their virtual workloads from a third-party hypervisor to Microsoft Hyper-V. This move gives them a lot of flexibility and saves costs, and since the release of Windows Server 2012, where Hyper-V 3.0 provides a number of features and functionalities that no other third-party hypervisor product provides within the industry, many of them have this requirement of converting third-party virtual machines into Hyper-V virtual machines.

Microsoft has made this conversion easier for its customers by providing a handy way of converting these third-party virtual machines into Hyper-V virtual machines using Microsoft Virtual Machine Converter. For example, this allows Hyper-V customers to convert VMware virtual machines into Hyper-V virtual machines. Microsoft Virtual Machine Converter is a part of the Microsoft Solution Accelerator suite, which can be downloaded and used from the Microsoft Solution Accelerator website (http://technet.microsoft.com/en-us/library/hh967435.aspx).

Research and development is one of my favorite scenarios, where Hyper-V gives you immense flexibility in building R&D and testing environments with the luxury of many features that help you to test different product applications on different operating systems. Hyper-V admins can also script the creation of virtual machines based on their test cases, which expedites the process of building the R&D environment. We all know that during the testing phase, especially for developers, sometimes it is necessary to reformat the operating system. Hyper-V gives you the snapshot facility, where a Hyper-V administrator can take a snapshot of a VM at any given time and restore it later at any stage of the test cycle, which will take the virtual machine back to the exact state it was in when the snapshot was created. Running multiple OSs with a limited amount of physical RAM has always been a bottleneck. To give administrators a handy way to deal with such cases, Hyper-V provides the virtual machine state saving feature, where you can save a virtual machine's state, continue with other testing activities, and later resume the virtual machine in the same state.
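The snapshot-and-restore cycle described above can be illustrated with a small toy model. It only mimics the behavior at a conceptual level: the `LabVM` class and its dictionary-based state are hypothetical and do not reflect how Hyper-V stores snapshots on disk.

```python
import copy


class LabVM:
    """Toy stand-in for a test VM; 'state' represents what a snapshot captures."""

    def __init__(self, name):
        self.name = name
        self.state = {"os": "clean install", "installed": []}
        self._snapshots = {}

    def take_snapshot(self, label):
        # Deep copy so that later changes to the VM do not leak into the snapshot.
        self._snapshots[label] = copy.deepcopy(self.state)

    def apply_snapshot(self, label):
        # Return the VM to the state it had when the snapshot was taken.
        self.state = copy.deepcopy(self._snapshots[label])


vm = LabVM("test01")
vm.take_snapshot("baseline")

# Run a test case that changes (and damages) the guest.
vm.state["installed"].append("build-under-test")
vm.state["os"] = "broken by test"

# Roll back instead of reformatting the operating system.
vm.apply_snapshot("baseline")
print(vm.state)
```

The same baseline can be applied repeatedly, which is what makes snapshots convenient between test runs.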

Business continuity or business continuity planning (BCP) allows an organization to survive major catastrophic situations, and makes sure that business operations will not be affected if the primary facility is unavailable. Hyper-V provides sound business continuity support for an organization whose mission-critical application is running in a virtualized mode. Microsoft Windows Server 2012 Hyper-V provides BCP capabilities for virtualized workloads by allowing them to replicate to a disaster recovery site, where a Hyper-V server in the primary site acts as the primary server and a Hyper-V server in the disaster recovery site receives all the VM-related replication from its primary instance.

Hyper-V also allows customers to configure VSS backups for the virtual machines, where VSS writers for Hyper-V virtual machines make it possible for VSS-based software solutions to take virtual machine backups while the virtual machine is up and running.

So let's say you are taking regular backups of your Hyper-V VMs, and unfortunately your entire primary datacenter goes down. In this case, you can get your off-site backup tapes and restore the virtual machine to any backed-up point in time on the same or a different host. We will be covering Hyper-V backup and recovery concepts in detail in Chapter 10, Performing Hyper-V Backup and Recovery.

The other feature that Hyper-V supports for the BCP concept is VM migration. Hyper-V provides two flavors of VM migration, quick migration and live migration. In quick migration, which came into existence with the first release of Hyper-V in Windows Server 2008, a Windows failover cluster is configured with shared cluster storage, on which the virtual hard disks (VHDs) are stored. So, if a failure occurs and one Hyper-V host node goes offline, the cluster senses this and moves the VM's workload to another Hyper-V host. In quick migration, while the migration happens, the virtual machine's state (more importantly, the VM's storage state) is paused until the failover of the other resources is complete. Once all the resources are up and running on the second cluster node, the virtual machine is resumed on this node. Since quick migration was a cluster failover based migration feature, it introduced some delay for a few user-centric applications.

The other migration solution provided with Hyper-V was live migration, which came with Windows Server 2008 R2. Live migration was more mature than quick migration and, as the name suggests, it moved a live VM workload from one Hyper-V node to another. Live migration of virtual machines provides great flexibility for planned migration, where an administrator, while patching the physical Hyper-V hosts, can migrate a guest virtual machine to other available Hyper-V hosts without any disruption in the machine's availability on the network. While performing the migration, the Hyper-V server creates a secure session from the source Hyper-V host to the destination Hyper-V host where the virtual guest machine is intended to be migrated, as part of the migration plan. During the live migration process, the source Hyper-V server starts copying the memory pages to the destination Hyper-V server, and once all of the memory pages are copied to the destination Hyper-V server, the VM moves and starts on the secondary Hyper-V node. This process is network intensive, because the memory pages are copied to the destination Hyper-V server over the network. For this reason, it is advisable to have a dedicated NIC for the live migration process. We will go deeper into the Hyper-V migration strategies in the coming chapters, so stay tuned.
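The memory copy phase described above can be approximated with a short simulation that shows why live migration is heavy on the network. It is a simplified sketch: the function name, the fixed percentage of pages dirtied per round, and the stop threshold are illustrative assumptions, not Hyper-V parameters.

```python
def simulate_memory_copy(total_pages, dirty_percent, stop_threshold=50, max_rounds=10):
    """Simulate copying memory pages while the VM keeps running on the source.

    Returns (pages sent in each round, pages left for the final switchover copy).
    """
    rounds = []
    to_send = total_pages
    for _ in range(max_rounds):
        rounds.append(to_send)
        # While this round was on the wire, the running VM modified some pages,
        # and those pages have to be sent again in the next round.
        to_send = to_send * dirty_percent // 100
        if to_send <= stop_threshold:
            break
    # The few remaining pages are copied during the brief switchover,
    # after which the VM starts on the destination host.
    return rounds, to_send


rounds, final_pages = simulate_memory_copy(total_pages=100_000, dirty_percent=10)
total_sent = sum(rounds) + final_pages
print(rounds, final_pages, total_sent)
```

Even in this idealized run, more pages cross the network than the VM actually holds, which is one way to see why a dedicated NIC for live migration traffic is advisable.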

It is quite cloudy here today! In the beginning of the book, we gave an introduction about cloud computing after introducing virtualization. In the cloud services delivery model, the service provider provides computing capabilities, also called a pool of resources, such as network, servers, storage, and applications. These services are highly elastic and flexible with the users' needs. Let's see how a hypervisor works hand in hand with cloud computing. Hyper-V as a hypervisor provides the base virtualization layer of cloud computing, on which the cloud computing builds its underlying infrastructure and provides computing resources. Hyper-V as a base hypervisor in cloud computing solution delivery works with Microsoft System Center 2012 product suite, and covers end-to-end cloud delivery, where the Microsoft System Center 2012 product provides self-service portal, cloud service request, orchestration, operations monitoring, and virtual workload management as well.

After the extensive information gathering from virtualization as a technology, and server consolidation as a technique to take advantage of virtualization, let's move on to the next section of this chapter. Here we will discuss Hyper-V architecture, and will go deeper to understand how different Hyper-V architectural components work together to provide hardware-assisted virtualization.

Before we jump in to discuss the core elements of Hyper-V architecture, let's first quickly see the definition of a hypervisor, and its available types, to better understand the Hyper-V architecture as a hypervisor.

Hypervisor is a term used to describe the software stack, or sometimes operating system feature, that allows us to create virtual machines by utilizing the same physical server's resources. Based on the hypervisor type, some hypervisors run on the operating system layer, and some go underneath the operating system and directly interact with the hardware resources, such as processor, RAM, and NIC. We will understand these different types of hypervisors shortly—in the coming topics.

Hypervisor is not a new term that rose with VMware or Microsoft. If you see the history of this term, it takes you back to the year 1965, when IBM first upgraded the code for its 360 mainframe system's computing platform to support memory virtualization. By evolving this technique, they provided great enhancements to computing as a technology, by addressing different architectural limitations of mainframes.

Now let's discuss the various available hypervisor types, which may be categorized as shown next.

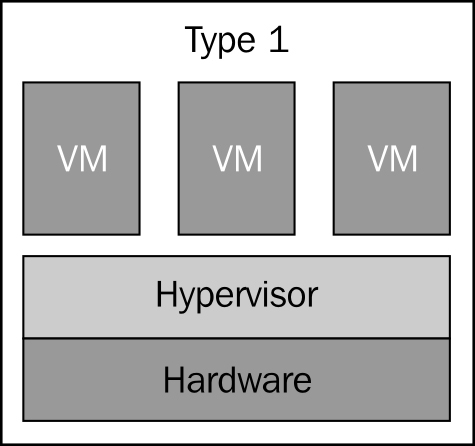

Type 1, or bare metal, hypervisors run on the server hardware. They get more control over the host hardware, thus providing better performance and security. And guest virtual machines run on top of the hypervisor layer. There are a couple of hypervisors available on the market that belong to this hypervisor family, for example, Microsoft Hyper-V, VMware vSphere ESXi Server, and Citrix XenServer.

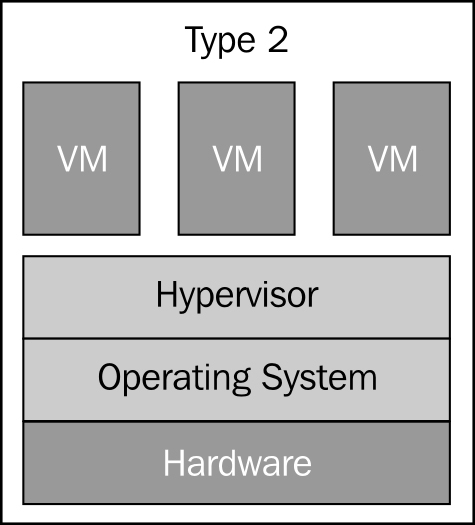

Type 2 hypervisors run on top of the operating system as an application installed on the server, which is why they are often referred to as hosted hypervisors. In type 2 hypervisor environments, the guest virtual machines run on top of the hypervisor layer.

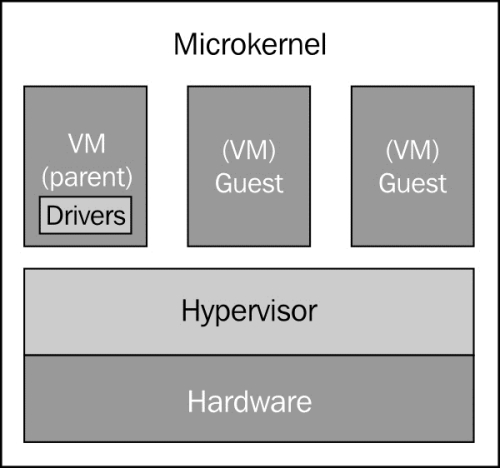

The preceding diagrams illustrate the difference between type 1 and type 2 hypervisors, where you can see that in the type 1 hypervisor architecture, the hypervisor is the first layer right after the base hardware. This allows the hypervisor to have more control and better access to the hardware resources.

Looking at the type 2 hypervisor architecture, we can see that the hypervisor is installed on top of the operating system layer, which doesn't allow the hypervisor to directly access the hardware. This lack of direct access to the host's hardware increases overhead for the hypervisor, and thus, on the same hardware, a type 1 hypervisor can run more workloads than a type 2 hypervisor.

The second major disadvantage of type 2 hypervisors is that the hypervisor runs as a Windows NT service; so if this service is killed, the virtualization platform is no longer available. Examples of type 2 hypervisors are Microsoft Virtual Server, Virtual PC, and VMware Player/VMware Workstation.

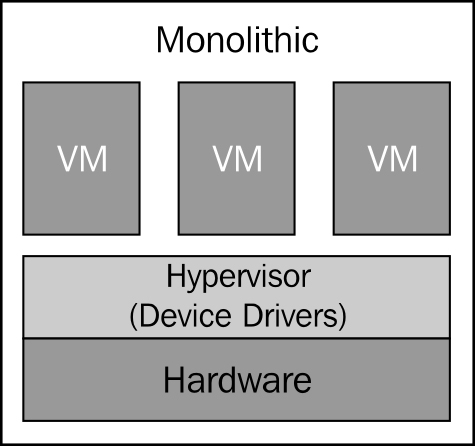

The monolithic hypervisor is a subtype of the type 1 hypervisors. This type of hypervisor holds hypervisor-aware device drivers for guest operating systems. There are some benefits of using monolithic hypervisors, but there are also a couple of disadvantages. The benefit is that they don't need a parent or controlling operating system, and thus they have direct control over the server hardware.

The first disadvantage of using monolithic hypervisors is that not every hardware vendor may have device drivers ready for these types of hypervisors. This is because there are a number of different motherboards and other devices. Therefore, finding a hardware vendor who supports a specific monolithic hypervisor can be a hard task when choosing the right hardware for your hypervisor. The same thing can also be counted as an advantage, because each of these drivers for the monolithic hypervisors is tested and verified by the hypervisor manufacturer.

The second disadvantage of a monolithic hypervisor is that it allows the hypervisor to get closer access to the kernel (Ring -1) and hardware resources, which may open the door to malicious activities that take advantage of this excessive privilege. The openness to this threat goes against the trusted computing base (TCB) concept. VMware vSphere ESXi Server is an example of this type of bare metal hypervisor.

In a microkernel hypervisor, you have device drivers in the kernel mode (Ring 0), and also in the user mode (Ring 3) of the trusted computing base (OS). Along with this, only the CPU and memory scheduling happens in the hypervisor itself (Ring -1).

The advantage of this type of hypervisor is that, since the majority of hardware manufacturers provide compatible device drivers for operating systems, finding compatible hardware for a microkernel hypervisor is not a problem.

A microkernel hypervisor requires device drivers to be installed for the physical hardware devices in the operating system that runs in the parent partition of the hypervisor. This means that we don't need to install the device drivers for each guest operating system running as a child partition, because when these guest operating systems need to access physical hardware resources on the host computer, they simply do this by communicating through the parent partition. One of the benefits of microkernel-based hypervisors is that they don't violate the concept of TCB; they work within limited, privileged boundaries. Hyper-V, Microsoft's hardware-assisted virtualization platform, is an example of a microkernel-based hypervisor.

Tip

What is a trusted computing base?

You can find information about TCB at http://csrc.nist.gov/publications/history/dod85.pdf.

Now let's take a look at the Hyper-V architecture diagram, as follows:

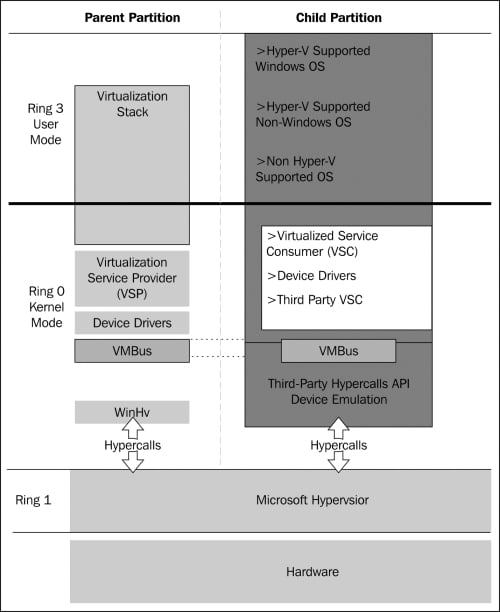

As you can see in the preceding diagram, Hyper-V behaves as a type 1 hypervisor, which runs on top of hardware. Running on top of the hypervisor are one parent partition and one or more child partitions. A partition is simply a unit of isolation within the hypervisor that is allocated physical memory address space and virtual processors. Now let's discuss the parent and child partitions.

The parent partition is the partition that has full access to the hardware devices, and control over the local virtualization stack. The parent partition has the rights to create child partitions and manage all the components related to them.

As we said in the preceding section, the child partition gets created by the parent partition, and all the guest virtual machine related components run under the child partition.

After seeing the two major elements of any hypervisor virtualization stack, we will now see some more elements related to Hyper-V virtual stack.

When we install the Hyper-V role on supported hardware and restart the server to complete the installation, the parent partition gets created and the hypervisor itself goes underneath the operating system layer, so that the Windows Server 2012 operating system now runs in the parent partition, on top of the hypervisor. From the basic definitions, we understood that the parent partition is the brain of Hyper-V virtual stack management, and that all the guest virtual machine related components run in child partitions. The parent partition also makes sure that the hypervisor has adequate access to all the hardware resources; and while accessing these hardware resources, the trusted computing base concept is used. In addition, when you start your Hyper-V server, the parent partition is the first partition to get created; and while running the virtual machines on the Hyper-V server, it is the parent partition that provisions the child partitions on the hypervisor. The parent partition also acts as the middleman between the virtual machines (child partitions) and the hardware for accessing the resources.

Virtual Machine Management Service is the management engine of Hyper-V. Virtual Machine Management Service (VMM Service or VMMS) is responsible for the management of the virtual machine state for the child partitions. This includes making the right decision to change the virtual machine state, handling the creation of snapshots, and managing the addition or removal of devices. When a virtual machine in a child partition is started, the VMMS spawns a new VM worker process, which is used to perform the management tasks for that virtual machine.
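The division of labor described above, where a management service tracks VM state and starts one worker process per running VM, can be sketched as a small state machine. Everything here is hypothetical: the class names, the set of states, the allowed transitions, and the choice to drop the worker when a VM is saved or turned off are modeling assumptions, not the real VMMS interface.

```python
from enum import Enum


class VMState(Enum):
    OFF = "off"
    RUNNING = "running"
    SAVED = "saved"


class WorkerProcess:
    """Placeholder for the per-VM worker process."""

    def __init__(self, vm_name):
        self.vm_name = vm_name


class ManagementService:
    """Toy management service that validates state changes for child partitions."""

    ALLOWED = {
        (VMState.OFF, VMState.RUNNING),
        (VMState.RUNNING, VMState.SAVED),
        (VMState.SAVED, VMState.RUNNING),
        (VMState.RUNNING, VMState.OFF),
    }

    def __init__(self):
        self.states = {}
        self.workers = {}

    def create(self, name):
        self.states[name] = VMState.OFF

    def change_state(self, name, target):
        current = self.states[name]
        if (current, target) not in self.ALLOWED:
            raise ValueError(f"{name}: cannot go from {current.value} to {target.value}")
        if target is VMState.RUNNING:
            # Starting (or resuming) a VM spawns a worker dedicated to it.
            self.workers[name] = WorkerProcess(name)
        else:
            # Assumption of this sketch: no worker is kept for a stopped or saved VM.
            self.workers.pop(name, None)
        self.states[name] = target


vmms = ManagementService()
vmms.create("app01")
vmms.change_state("app01", VMState.RUNNING)
had_worker = "app01" in vmms.workers
vmms.change_state("app01", VMState.SAVED)
print(vmms.states["app01"], had_worker, "app01" in vmms.workers)
```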

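Virtual devices (vDevices) are the application interfaces that provide control over devices to the VMs. There are two types of vDevices, core devices and plugin devices, and core devices are in turn either emulated or synthetic. The categories are as follows: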

Core devices

Emulated devices

Synthetic devices

Plugin devices

Let's see the difference between these devices.

Emulated devices emulate a specific hardware device, such as a VESA video card. Most core vDevices are emulated devices like this, and examples include BIOS, DMA, APIC, ISA bus, PCI bus, PIC device, PIT device, Power Management device, RTC device, Serial controller, Speaker device, 8042 PS/2 keyboard/mouse controller, Emulated Ethernet (DEC/Intel 21140), Floppy controller, IDE controller, and VGA/VESA video. Another aspect of emulated devices is that they run in the user mode of the TCB concept and have less ability to interact with the server hardware.

Synthetic devices do not model specific hardware devices. Examples of synthetic devices include a synthetic video controller, synthetic human interface device (HID) controller, synthetic network interface card (NIC), synthetic storage device, synthetic interrupt controller, and memory service routines. These synthetic devices are available only to guest operating systems that support Integration Services.

As we saw previously, emulated devices run in Ring 3 (which is a user ring) and therefore cannot directly interact with the hardware resources; they thus have limited reliability and approachability. On the other hand, synthetic devices run under the kernel mode, which allows them to run faster and be more reliable.

Plugin devices are different from other types of devices. These devices allow direct communications between the parent and the child partition. These devices can safely be removed and added whenever it is necessary. Examples of these types of devices are human interfaces and mass storage devices.

The virtual machine bus is the communication medium between the parent partition (hypervisor) and the child partition (VM). VMBus is the backbone of the overall management and data transmission from parent to child, and vice versa. All the instructions go from the parent partition to the child partition via VMBus. If the VM needs to access the DVD-ROM on the hypervisor server, this request from the child partition (VM) to the DVD-ROM will go through VMBus.
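The request path described above, where a child partition reaches a physical device only by going through VMBus to the parent partition, can be modeled in a few lines. The classes below are a conceptual sketch with invented names; they do not represent the actual VMBus protocol or any Hyper-V API.

```python
class ParentPartition:
    """Owns the physical devices and their drivers (simplified)."""

    def __init__(self):
        # Hypothetical inventory: device name -> what is currently attached.
        self.devices = {"dvd-rom": "Windows Server 2012 ISO"}

    def handle(self, device, operation):
        if device not in self.devices:
            raise LookupError(f"no such device on the host: {device}")
        return f"{operation} -> {device} ({self.devices[device]})"


class VMBus:
    """Carries requests from child partitions to the parent partition."""

    def __init__(self, parent):
        self.parent = parent
        self.traffic = []   # record of every request that crossed the bus

    def send(self, child_name, device, operation):
        self.traffic.append((child_name, device, operation))
        return self.parent.handle(device, operation)


class ChildPartition:
    def __init__(self, name, bus):
        self.name = name
        self.bus = bus

    def access(self, device, operation):
        # A child has no direct path to the hardware; it can only ask over the bus.
        return self.bus.send(self.name, device, operation)


bus = VMBus(ParentPartition())
vm1 = ChildPartition("vm1", bus)
reply = vm1.access("dvd-rom", "read")
print(reply)
print(bus.traffic)
```

Because every request passes through a single channel, the parent partition remains the only component that touches the physical device.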

After the introduction and architectural knowledge we gained from the previous topics, let's go forward and get some information about Windows Server 2012 Hyper-V features. We will first take a look at the general features Hyper-V provides as a hypervisor for your server virtualization needs, and then we will discover new features provided by Hyper-V 3.0 with Windows Server 2012.

All these general features were available in the previous version of Hyper-V; among these general features, a few of them have been overhauled and tweaked for better and more reliable service delivery.

Now let's take a look at the following general features, which were introduced with the first version of Hyper-V within Windows Server 2008:

64-bit native hypervisor-based virtualization

Ability to run 32-bit and 64-bit virtual machines concurrently

Uniprocessor and multiprocessor virtual machines

Virtual machine snapshots, which capture the state of a running virtual machine so that you can revert the virtual machine to a previous state

Virtual LAN support

Documented Windows® Management Instrumentation (WMI) interfaces for scripting and management

Integrated cluster support for quick migration of virtual machines

Virtual machine backups based on Volume Shadow Copy Service (VSS)

Fixed (pass-through) disk support

The following are the features added in Windows Server 2008 R2:

Cluster Shared Volumes (CSV): These were introduced as a special type of storage for the clustered virtual machine instance, with the support of live migration.

In addition to quick migration, live migration was added to the inventory of features of Hyper-V, where virtual machines can seamlessly migrate from one host to another host without any network downtime for the VM.

Dynamic virtual machine storage: Improvements to virtual machine storage include support for hot plug-in and hot removal of storage on a virtual machine's SCSI controller. By supporting the addition or removal of virtual hard disks and physical disks while a virtual machine is running, it is possible to quickly reconfigure virtual machines to meet changing requirements.

Enhanced processor support: Hyper-V now supports up to 64 physical processor cores. This increased support has made it possible to run even more demanding workloads on a single host. In addition to this, Hyper-V also provides support for Second Level Address Translation (SLAT) and CPU Core Parking. CPU Core Parking allows Windows and Hyper-V to consolidate processing onto the fewest possible number of processor cores, and suspend inactive processor cores. SLAT adds a second level of paging below the architectural x86/x64 paging tables in x86/x64 processors.

Enhanced networking support: Support for jumbo frames, previously available only in nonvirtual environments, has been extended to virtual machines. This feature enables VMs to use jumbo frames (up to 9,014 bytes in size) if the underlying physical network supports it.
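To make the SLAT description in the list above more concrete, the following toy sketch models the two translation stages: a guest page table maps guest virtual pages to guest physical pages, and a second table maps guest physical pages to host physical pages. The page size, the table contents, and the function name are illustrative assumptions; real SLAT is performed by the processor hardware, not by software like this.

```python
PAGE_SIZE = 4096  # assumed 4 KB pages, for illustration only

# Hypothetical tables mapping page number -> page number.
guest_page_table = {0: 5, 1: 9}   # guest virtual page -> guest physical page
slat_table = {5: 42, 9: 17}       # guest physical page -> host physical page


def translate(guest_virtual_addr: int) -> int:
    """Translate a guest virtual address to a host physical address in two stages."""
    page, offset = divmod(guest_virtual_addr, PAGE_SIZE)
    guest_physical_page = guest_page_table[page]           # first level (guest OS tables)
    host_physical_page = slat_table[guest_physical_page]   # second level (SLAT tables)
    return host_physical_page * PAGE_SIZE + offset


if __name__ == "__main__":
    print(hex(translate(0x1010)))
```

The offset within the page is carried through unchanged; only the page number is remapped at each level.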

Now let's take a look at the new features that are provided in Microsoft Windows Server 2012 Hyper-V.

The earlier versions of Windows Server, such as Windows Server 2008 / 2008 R2, allowed administrators to automate various tasks with respect to managing Hyper-V environments using WMI and a portion of Windows PowerShell. But the problem with WMI is that it is designed for developers, and for a server administrator it was a bit difficult to work with. On the other side, PowerShell didn't provide end-to-end automation and support for Hyper-V and virtual machine management related features.

In Windows Server 2012, Microsoft provided full support, through Windows PowerShell, for automating Hyper-V and VM-related management tasks. Windows Server 2012 includes 164 built-in Hyper-V PowerShell cmdlets for administrators to work with and customize as per their needs.

The Dynamic Memory feature was first introduced in Windows Server 2008 R2 SP1, and it changed the way Hyper-V virtual machines were assigned memory. It also gave administrators great flexibility to dynamically manage physical server memory between the various virtual workloads in the datacenter.

With the dynamic memory improvements introduced in Windows Server 2012 Hyper-V version 3.0, you can now attain a higher level of consolidation with improved reliability for virtual machine restart operations. There are two main areas of dynamic memory that are improved in Hyper-V version 3.0.

The first one is the availability of the minimum memory setting. Before version 3.0 of Hyper-V, only the startup memory and maximum memory settings were available. Without a minimum memory limit, administrators had to set the startup memory at a higher level, because most server-side applications require more memory to be available while they start. Once the virtual machine reaches a stable state and has loaded all its services, the part of this memory that exceeds the virtual machine's actual needs stays idle with no utilization.

Windows Server 2012 Hyper-V version 3.0 addressed the problem of giving a virtual machine extra memory just to supply the RAM required by the boot process. With the ability to set the minimum RAM to a lower value, we can have a separate startup RAM that is higher. This new feature keeps the extra memory from staying idle in the virtual machines.

The second major improvement around dynamic memory is second-level paging (which Microsoft calls Smart Paging), a remedy for memory shortage during virtual machine restarts. Second-level paging uses disk as a temporary substitute for memory when there is not enough physical memory available to give a restarting virtual machine its configured startup amount of RAM.
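The effect of a separate minimum setting can be sketched with a deliberately simplified model. The function name and the example values below are assumptions made for illustration, and the model ignores details such as memory buffers and host memory pressure.

```python
from typing import Optional


def settled_memory_mb(demand_mb: int, startup_mb: int, maximum_mb: int,
                      minimum_mb: Optional[int] = None) -> int:
    """Memory a running VM holds once its demand has stabilized (simplified model).

    When minimum_mb is None, the startup value acts as the floor, which is
    the behaviour described above for Hyper-V before version 3.0.
    """
    floor = startup_mb if minimum_mb is None else minimum_mb
    return max(floor, min(demand_mb, maximum_mb))


if __name__ == "__main__":
    # Hypothetical VM: needs 2048 MB to boot, but only 768 MB once it is stable.
    without_minimum = settled_memory_mb(768, startup_mb=2048, maximum_mb=8192)
    with_minimum = settled_memory_mb(768, startup_mb=2048, maximum_mb=8192, minimum_mb=512)
    print(without_minimum - with_minimum, "MB no longer idle")
```

In this model the difference between the two results is the memory that can be returned to the host for other virtual machines.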

Windows Server 2008 / 2008 R2 fulfilled virtual machine network isolation requirements with VLANs, but this solution had a number of limitations that made it difficult to provide true isolation between different machines in cloud and dynamic datacenters. These limitations were as follows:

Increased risk of an inadvertent outage due to cumbersome reconfiguration of production switches whenever virtual machines or isolation boundaries move in the dynamic datacenter.

Limited scalability, because typical switches support no more than a few thousand VLAN IDs (a maximum of 4,094).

VLANs cannot span multiple logical subnets, which limits the number of nodes within a single VLAN and restricts the placement of virtual machines based on physical location. Even though VLANs can be enhanced or stretched across physical intranet locations, the stretched VLANs must all be on the same subnet.
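The 4,094 figure in the list above follows from the size of the VLAN ID field. As a short worked example, assuming the standard IEEE 802.1Q tag with a 12-bit VLAN ID in which the values 0 and 4095 are reserved:

```python
VLAN_ID_BITS = 12  # width of the VLAN ID field in an 802.1Q tag


def usable_vlan_ids(bits: int = VLAN_ID_BITS) -> int:
    """Number of VLAN IDs left after excluding the two reserved values."""
    total_ids = 2 ** bits               # 4096 possible values
    reserved = {0, total_ids - 1}       # 0 and 4095 are reserved
    return total_ids - len(reserved)


if __name__ == "__main__":
    print(usable_vlan_ids())
```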

In addition to the preceding limitations, there were a series of drawbacks of using VLANs for VM isolation requirements, which are as follows:

Dynamic or cloud-based environments require readdressing virtual machines

Security management of virtual machines and policies are based on the IP address of the VM

Windows Server 2012 addressed all these problems, and provided a true virtualized network isolation for virtual workload. Customers who are running Hyper-V in dynamic and cloud-based environments can even use overlapping IP addressing schemes between different VMs. It's a big topic, so we will discuss that in detail in the coming chapters.

In Hyper-V on Windows Server 2008 / 2008 R2, copying data required the data to be read from one location and written to another, which can be a time-consuming process. Windows Server 2012 tackles this problem by supporting offloaded data transfer (ODX) operations, which can be passed from the guest operating system to the host hardware. This ensures that the workload can use storage enabled for offloaded data transfer, as it would if it were running in a nonvirtualized environment. The Hyper-V storage stack also issues offloaded data transfer operations during maintenance operations for virtual hard disks, such as merging disks and storage migration meta operations where large amounts of data are moved.

Hyper-V Replica gets me excited, as this feature sets Hyper-V apart from other hypervisors available on the market. It is a brand-new feature that came with Hyper-V version 3.0. It allows the Hyper-V administrator to set a virtual machine to be replicated to another Windows Server 2012 Hyper-V instance regardless of its location and IP subnet. This feature also supports business continuity and disaster-recovery solutions for critical virtual workloads in virtualized environments.

In the early days of Hyper-V Windows Server 2008 / 2008 R2, organizations had to implement chargeback mechanisms for Hyper-V virtual workloads using either a third-party or a self-developed solution, which would never be cheap or handy.

With Windows Server 2012, Microsoft introduced native functionalities in Hyper-V for chargeback calculation with historical information. This feature is called Hyper-V resource metering.

With recent improvements in storage, disks are now shipping with 4,096-byte sectors (also known as 4 KB sectors) instead of 512-byte sectors. The larger sector size enhances disk storage density and reliability. Earlier editions of Hyper-V and Windows Server were not compatible with this enhancement, because the VHD driver assumes a physical sector size of 512 bytes and issues 512-byte I/Os.

As a result, the earlier VHD driver cannot open VHD files on physical 4 KB sector disks. Windows Server 2012 Hyper-V makes it possible to store VHDs on 4 KB disks by implementing a software Read-Modify-Write (RMW) algorithm in the VHD layer, which converts each 512-byte access and update request to the VHD file into the corresponding 4 KB accesses and updates.
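The read-modify-write idea can be illustrated with a small sketch. This is not the actual VHD driver logic; the `Disk4K` class and the helper functions are hypothetical names for a toy model in which the disk accepts only whole 4 KB sector reads and writes.

```python
PHYSICAL_SECTOR = 4096  # bytes per physical sector on the toy disk
LOGICAL_SECTOR = 512    # bytes per logical sector as seen by the caller


class Disk4K:
    """Toy disk that can only read or write whole 4 KB physical sectors."""

    def __init__(self, sector_count: int) -> None:
        self._data = bytearray(sector_count * PHYSICAL_SECTOR)

    def read_sector(self, index: int) -> bytes:
        start = index * PHYSICAL_SECTOR
        return bytes(self._data[start:start + PHYSICAL_SECTOR])

    def write_sector(self, index: int, payload: bytes) -> None:
        if len(payload) != PHYSICAL_SECTOR:
            raise ValueError("writes must cover exactly one physical sector")
        start = index * PHYSICAL_SECTOR
        self._data[start:start + PHYSICAL_SECTOR] = payload


def write_logical_sector(disk: Disk4K, logical_index: int, payload: bytes) -> None:
    """Write one 512-byte logical sector using read-modify-write."""
    if len(payload) != LOGICAL_SECTOR:
        raise ValueError("payload must be exactly one logical sector")
    physical_index, offset = divmod(logical_index * LOGICAL_SECTOR, PHYSICAL_SECTOR)
    buffer = bytearray(disk.read_sector(physical_index))   # read the whole 4 KB sector
    buffer[offset:offset + LOGICAL_SECTOR] = payload       # modify 512 bytes in memory
    disk.write_sector(physical_index, bytes(buffer))       # write the whole sector back


def read_logical_sector(disk: Disk4K, logical_index: int) -> bytes:
    """Read one 512-byte logical sector out of its containing 4 KB sector."""
    physical_index, offset = divmod(logical_index * LOGICAL_SECTOR, PHYSICAL_SECTOR)
    return disk.read_sector(physical_index)[offset:offset + LOGICAL_SECTOR]
```

Every 512-byte write costs one full-sector read plus one full-sector write, which is why RMW keeps older 512-byte workloads working on 4 KB disks at some performance cost.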

Virtual Fibre Channel is one of the coolest features, and one of my favorites. In the earlier versions of Hyper-V, if you wanted to present a storage area network (SAN) logical unit number (LUN) to a virtual machine, the only methods available were to use an iSCSI SAN, or to connect the LUN to the Hyper-V host using Fibre Channel or iSCSI and from there pass the LUN to the VM as a pass-through disk. These methods don't allow us to assign a Fibre Channel LUN directly to the VM, so the Fibre Channel investment is not fully leveraged.

Windows Server 2012 Hyper-V addressed this problem with a new feature that lets an administrator connect the Fibre Channel SAN fabric directly to a virtual machine. You can configure as many as four virtual Fibre Channel adapters on a virtual machine and associate each one with a virtual SAN. Each virtual Fibre Channel adapter connects with one World Wide Name (WWN) address, or two WWN addresses to support live migration. You can set each WWN address automatically or manually.

In Windows Server 2008 R2 the maximum size of a VHD was 2 TB, but this size was not enough for mission-critical or media-related applications, which store huge amounts of data on a frequent basis. Windows Server 2012 solved this problem by introducing a new virtual hard disk format, VHDX for virtual machines; VHDX has a maximum permissible size of 64 TB.

Before the release of Windows Server 2012, administrators had to install third-party software for NIC teaming, which was supported only on physical servers. Most of the time, these third-party NIC teaming and NIC management suites caused compatibility problems with other Microsoft applications installed on the servers, and were difficult to manage.

Windows Server 2012 addressed this problem, and provided a native feature in the operating system to set up the team for the NICs, which is not only supported for the physical boxes but also provides out-of-the-box support for the guest operating systems. This new feature provides both NIC failover and link aggregation (combining the bandwidths) of the NICs.

The Hyper-V infrastructure based on Windows Server 2008 / 2008 R2 had limitations and issues with regards to Hyper-V vSwitch. Customers who were running virtual workloads in tenants and wanted to isolate their virtual machines' communications faced problems such as difficulty in performing QoS and network troubleshooting. Windows Server 2012 Hyper-V addressed all these problems, and came up with a series of new enhancements that catered to all of these requirements. Windows Server 2012 Hyper-V includes an extensible virtual switch feature, which is a layer-two virtual network switch and acts as a bridge between the virtual machine and the physical core network. This extensible virtual switch can also be used in enforcing policies for network-level security, network isolation, and multitenancy support.

This enhanced virtual network switch supports Network Device Interface Specification (NDIS) filter drivers and Windows Filtering Platform (WFP) callout drivers. This enhanced version of Hyper-V virtual network switch also provides the capabilities for non-Microsoft vendors to build and integrate APIs for rich-level integration with Hyper-V virtual network switches.

Hyper-V gained a lot of confidence from its customers after the first release in 2008. With Dynamic Memory and CSV-based live migration, Hyper-V became a critical component in datacenters, and administrators started running production virtual workloads on Hyper-V instead of keeping it only for test virtual machines. But Hyper-V in Windows Server 2008 / 2008 R2 had some caveats with regard to scaling up virtual workloads, especially for virtual machines that require extensive amounts of processing and memory. These critical applications are usually online transaction processing (OLTP) databases and online analytical processing (OLAP) applications. SQL Server is a good example of such a resource-hungry application, requiring a large number of processors and a large amount of RAM. For these types of applications, Hyper-V fell short because of its per-VM limits: a maximum of four virtual processors and up to 64 GB of memory.

Windows Server 2012 Hyper-V provides support for up to 320 logical processors and up to 4 TB of memory per host. This series of enhancements made Hyper-V a significant competitor among the hypervisors on the market.

Windows Server 2008 R2 provided a handy way of migrating virtual machines from one Hyper-V host to another, while the virtual machine storage stayed in the same place. This feature helped administrators to migrate workloads in a planned migration window. But when it came to storage migration, no solution was available, except that we shut down the virtual machine and manually copy and paste the virtual machine storage to the target location.

Windows Server 2012 Hyper-V version 3.0 addressed this problem. Together with the improved Microsoft Cluster Service (MSCS), it supports migrating virtual machine storage, including that of a clustered virtual machine running on a clustered Hyper-V node, from one location to another while the virtual machine is up and running. This feature doesn't stop or save the virtual machine while users are accessing it.

Before the release of Windows Server 2012 Hyper-V version 3.0, virtual machine storage was not supported on a network share (SMB), which was the most awaited feature of Hyper-V. With the release of Windows Server 2012, Microsoft made this available, and now customers can use SMB file sharing for hosting their virtual machine storage files.

We will learn more about this in the coming chapters. So stay tuned.

In this section on Hyper-V prerequisites, we will go through the hardware requirements. Some of these are hard requirements, meaning you must have these elements available before you start virtualizing your workload on Hyper-V.

The difference between the earlier Microsoft virtualization products and Hyper-V is mainly the processor requirements: where the earlier Microsoft virtualization products, such as Virtual PC and Virtual Server, could run on the 32-bit operating system, Hyper-V only works with 64-bit compatible processor hardware. Along with the 64-bit processor available, there are two other elements that are required by Hyper-V in order for the processor to operate; they are as follows:

Hardware-assisted virtualization: The processors that support hardware-assisted virtualization provide an additional privilege mode above Ring 0 (referred to as Ring -1) in which the hypervisor can operate, essentially leaving Ring 0 available for guest operating systems to run. In the market, each processor maker has given different names to its own hardware-assisted virtualization platform, for example, Intel calls it VT, and AMD uses the term AMD-V for it.

Hardware-based Data Execution Prevention: The second requirement of Hyper-V from the processor side is Data Execution Prevention (DEP). Hardware-based DEP allows the processor to mark sections of memory as nonexecutable. This feature is available in processors with AMD NX and Intel XD support.

We can summarize the processor requirements as follows:

The following are the requirements when working with an Intel processor:

x64 processor architecture

Support for Execute Disabled Bit

Intel® VT hardware virtualization

The following are the requirements when working with an AMD processor:

x64 processor architecture

Support for the NX bit

AMD-V hardware virtualization

All those storage designs that are supported and workable with Microsoft Windows Server for a particular operating system version are applicable for Hyper-V. But there is a catch—since usage of disk storage changes when it comes to Hyper-V, there are some recommendations that you should consider before you plan and buy hardware for your Hyper-V server.

There will be multiple concurrent sessions on the storage—where a number of virtual machines' virtual storage (VHD, VHDX) will be located—so disk storage with fewer disk spindles will provide poor disk I/O, which will eventually cause poor virtual machine performance. So it is always recommended to use storage with more disk spindles for Hyper-V, which provides better disk I/O, and thus better virtual machine performance.

Let me elaborate this: having more disk spindles means that on your storage side, either your local server storage or SAN storage, you would have more than one disk, and based on your performance and failover requirements you might want to choose an appropriate RAID model for this. So, in this type of setup, more disks would be added to your storage pool and the disk I/O for read/write would be spread across all these disks, which will provide a better resiliency and performance.

The type of hard drive added to the host server, or to the storage array for the host server, has a significant impact on the overall storage performance. One differentiating performance factor for hard disks is the interface architecture (for example, U320 SCSI, SAS, or SATA). Two other elements, the rotational speed of a hard disk (7200, 10k, or 15k RPM) and the average latency in milliseconds, play a vital part in the overall performance and availability of storage for a hypervisor server. Additional factors, such as the hard disk cache and support for advanced features, for example, Native Command Queuing (NCQ), can also be considered as performance improvement factors.

For sound storage planning of the hypervisor and virtual machines, there are a few important elements that should always be carefully evaluated before choosing the right storage for your virtualization stack. These elements are the type of storage, storage IOPS, acceptable storage latency, and of course storage size. Mostly, for the virtual machine, storage performance is not the first requirement but the storage size is. From a practical standpoint, I have seen many small, medium, and even large organizations where the hypervisor server has a good number of processors and a large amount of RAM, but due to the unavailability of storage these hardware boxes remain underutilized. Therefore, it is always recommended to go for larger hard disks instead of expensive ones chosen only for performance. Not all the virtual machines need to be placed on a high-speed disk; their OS virtual hard disk (VHD/VHDX) can be placed on a SATA disk, and their page file or application/data VHD/VHDX can be placed on a high-speed disk. Utilizing 15k RPM drives instead of 10k RPM drives can result in up to 35 percent more IOPS per drive.

Use the information given next to evaluate the cost/performance tradeoffs.

SATA could be your first choice when considering Hyper-V storage. Among all the storage types, SATA is the cheapest option, and provides average read/write speed for the data. It is always recommended to go for a higher speed disk if your intended data or workload needs higher performance. SATA disks primarily come with interface speeds of 1.5 Gbit/s and 3.0 Gbit/s.

SAS disks are the next fastest type of disks. If you want fast disks for your frequently accessed data or virtual machines, then SAS disks would be a standard choice. These disks provide faster I/O response for read/write activities. Usually, SAS disks come with a rotational speed of 10k to 15k RPM, with an average latency of 2 to 3 milliseconds.

Fibre Channel disks perform in a similar way to SAS disks, but have a different interface for connectivity. Since they contain the word "Fibre", their connectivity medium is a fiber cable, which usually connects to the server HBA card on one end, and to the SAN switch on the other. Fibre Channel disks come in both variations of 10k and 15k RPM.
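The 2 to 3 millisecond latency quoted above for 10k and 15k RPM disks can be checked with a small calculation. The sketch below uses the common approximation that average rotational latency is the time for half a revolution; the function name is illustrative, and seek time and transfer time are deliberately left out.

```python
def average_rotational_latency_ms(rpm: int) -> float:
    """Average rotational latency: the time for half a revolution, in milliseconds."""
    milliseconds_per_revolution = 60_000 / rpm
    return milliseconds_per_revolution / 2


if __name__ == "__main__":
    for rpm in (7200, 10_000, 15_000):
        print(f"{rpm} RPM: {average_rotational_latency_ms(rpm):.2f} ms")
```

A 10k RPM disk works out to 3 ms and a 15k RPM disk to 2 ms, while a 7200 RPM disk is a little over 4 ms.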

Tip

Recommendation

Instead of going for SATA disks, I would recommend going for SAS 10k RPM or 15k RPM disks, which provide better performance.

All of the previously specified storages can be used in the following ways for Hyper-V virtual workloads:

Pass-through disk

Fixed disk

Dynamic disk

Differencing disk

We will discuss all of the preceding storage types in more detail in Chapter 6, Insight into Hyper-V Storage.

When it comes to memory planning, it is always recommended to go for server hardware that provides enough memory expansion to accommodate your future memory needs. The Dynamic Memory feature of Windows Server 2008 R2 SP1 changed how memory is assigned to VM workloads compared with the legacy Hyper-V versions. Dynamic Memory gave administrators a way to set dynamic thresholds so that a VM workload starts from an initial memory assignment and bursts up to a certain limit. For Hyper-V server memory planning, RAM latency and speed are less important than size, so it is always recommended to go for a larger number of filled memory slots in the Hyper-V boxes. When buying server hardware for the Hyper-V role, focus on servers that support more memory in terms of quantity, because this is a one-time investment, and a server with limited support for RAM may deliver a lower ROI.

Networking is another crucial part to consider when choosing hardware components for a Hyper-V implementation. As we discussed previously, disk storage with more spindles is preferred because it gives a better and faster response under high I/O utilization. The recommendation for networking is similar: have multiple NICs available to the Hyper-V host.

Here is an example. We have a server, HY-SVR-0001, with one 1 Gbps NIC installed. After installing the Hyper-V role, we select this NIC and create an external virtual network on Hyper-V from the network manager. Now, this physical NIC is used by all the virtual machines created on this server. In the initial days, when only a few virtual machines were created on Hyper-V, network performance while accessing virtual machines didn't suffer. But with the passage of time, and with increasing demand for virtualization and growing support from vendors and applications, many more virtual machines were created. This increasing number of virtual machines needed more bandwidth and better network planning for the Hyper-V role, and network performance degraded. When investigating the root cause, the administrator found that the same physical NIC was being used for all the VMs, and on top of that, the file server virtual machine's backup along with all the other virtual machines' backups were also configured on the same physical NIC.
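The bandwidth squeeze in this example can be approximated with simple arithmetic. The sketch below assumes a worst case in which every virtual machine transmits at the same time and the NIC is shared evenly; the function name and the VM counts are illustrative, and protocol overhead is ignored.

```python
def per_vm_share_mbps(nic_speed_mbps: float, vm_count: int) -> float:
    """Even share of one NIC's bandwidth when all VMs are busy simultaneously."""
    if vm_count < 1:
        raise ValueError("vm_count must be at least 1")
    return nic_speed_mbps / vm_count


if __name__ == "__main__":
    for vm_count in (5, 20, 40):
        share = per_vm_share_mbps(1000, vm_count)
        print(f"{vm_count} VMs on a 1 Gbps NIC: about {share:.0f} Mbps each")
```

Backup traffic on the same NIC reduces these shares further, which is one reason to separate traffic types across multiple NICs.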

Hence, it is highly recommended to carefully plan your Hyper-V physical network connectivity to the server farm. Depending on your Hyper-V server configuration, you may need to have more than one network configured, among the following network options:

Cluster private network

Live migration network

Hyper-V replica traffic network

Hyper-V management network

iSCSI SAN connectivity network

As you can see in the example that we discussed previously, there is plenty of network traffic that our Hyper-V server will be part of; therefore, it is highly recommended to have multiple NICs available in your Hyper-V server so that it can individually cater to these different patterns of traffic. In addition to this, it would also be necessary for you to have teamed Hyper-V NICs for the client connectivity network, where the hosted application or VM may require more bandwidth due to the number of concurrent network connections from the client side.

After discussing the hardware requirements for Hyper-V implementation, we will now go through the software requirements and best practices for Hyper-V.

From Windows Server 2008 to Windows Server 2012, Microsoft changed its licensing scheme; Windows Server 2012 doesn't come in an Enterprise edition. There are only two editions of Windows Server 2012 available: Standard and Datacenter. These two editions provide the same features; the only difference is that the Standard edition is for environments that are lightly virtualized, and the Datacenter edition is for highly virtualized environments.

Windows Server 2012 Standard edition includes licenses for only two virtual machines, while Windows Server 2012 Datacenter edition provides unlimited virtual machine licenses.

The following table shows the list of Microsoft Windows Server operating systems and their versions that can run Hyper-V:

| Operating system | Version |
|---|---|
| Windows Server 2008 | Standard, Enterprise, Datacenter (x64) |
| Windows Server 2008 R2 | Standard, Enterprise, Datacenter (x64) |
| Windows Server 2012 | Standard, Datacenter (x64) |

The following are the memory requirements for the Hyper-V host and guest machines:

For Hyper-V to work properly, we need to have the same amount of minimum memory available as for Windows Server. A minimum of 2 GB memory is required for Hyper-V.

Maximum memory per system for Windows Server 2008 R2/R2 SP1 is 1 TB, and for Windows Server 2012 it is 4 TB.

Hyper-V guest machine:

The minimum memory for a guest would be the same as for the Hyper-V server from the operating system perspective, but depending on the workload you can increase the memory of the guest machine.

The maximum virtual RAM supported on the guest in Windows Server 2008 R2/R2 SP1 is 64 GB, and for Windows Server 2012 it is 1 TB per VM.
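The memory maximums listed above can be turned into a quick planning check. This is a minimal sketch: the dictionary simply restates the figures from the text (in GB), and the function name and example values are hypothetical.

```python
# Maximum memory in GB, restating the figures quoted in the text above.
MEMORY_LIMITS_GB = {
    "Windows Server 2008 R2": {"host": 1024, "guest": 64},
    "Windows Server 2012": {"host": 4096, "guest": 1024},
}


def check_memory_plan(host_os: str, host_ram_gb: int, vm_ram_gb: list) -> list:
    """Return a list of problems found in a planned host and VM memory layout."""
    limits = MEMORY_LIMITS_GB[host_os]
    problems = []
    if host_ram_gb > limits["host"]:
        problems.append(f"host RAM {host_ram_gb} GB exceeds the {limits['host']} GB limit")
    for index, ram in enumerate(vm_ram_gb):
        if ram > limits["guest"]:
            problems.append(f"VM {index} requests {ram} GB, above the {limits['guest']} GB per-VM limit")
    return problems


if __name__ == "__main__":
    # A 128 GB virtual machine fits on Windows Server 2012 but not on 2008 R2.
    print(check_memory_plan("Windows Server 2012", 512, [128, 32]))
    print(check_memory_plan("Windows Server 2008 R2", 512, [128, 32]))
```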

Installing Microsoft Hyper-V requires 10 GB of disk space on the server.

Note

A couple of times, I have seen bad Hyper-V designs in which people ignored the importance of correctly sizing the hard disk, either in the initial phase when the server was being sized or later when the server memory was upgraded. Adding a large amount of RAM afterwards then requires more disk space than the server has available.

We will now discuss the two major factors that may require sizing of disk storage with respect to paging file requirements.

It is a general recommendation and best practice for sizing to have a sufficient amount of server hard disk space for creating the page file for the server operating system. From my experience, I have seen people managing virtual memory (page file) divided into two parts or sometimes more, between various partitions, because they didn't have sufficient disk space in the system partition. Microsoft recommends keeping the paging file in the system partition for better performance. So if you have 32 GB of physical RAM in your server, make sure you allow that much free disk space on your server's local disk as well.

In addition to the page file requirement for the host, we should also consider the free space requirement for each virtual machine that will be running on the same Hyper-V server. When you give 10 GB of memory to a virtual machine and turn it on, it takes 10 GB of disk space from the same storage where the virtual machine configuration files are created. The same applies to virtual machine backup requirements: if you are taking a virtual machine's backups using any third-party VSS software solution, a certain percentage of disk space needs to be free for creating a snapshot of the data.

We saw the paging file's impact on the host server's hard disk space requirement; in the same way, when a guest operating system runs on the Hyper-V server, it takes hard disk space equal to the memory assigned to the VM. This is the second big mistake I have seen people make in their Hyper-V environments: virtual machine disk storage requirements never included the physical disk space that the VM reserves on the Hyper-V host for its memory.
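The sizing rules from this discussion can be combined into a rough estimate. The sketch below is a simplification under stated assumptions: everything is stored on the same disk, the host page file allowance equals physical RAM, each VM reserves disk space equal to its assigned memory, and backup snapshot headroom is ignored. The function name and example values are hypothetical.

```python
def required_host_disk_gb(host_ram_gb: int, vm_memory_gb: list, vm_disk_gb: list,
                          hyperv_install_gb: int = 10) -> int:
    """Rough disk space estimate for a Hyper-V host, in GB."""
    page_file_gb = host_ram_gb                # free space equal to physical RAM
    memory_reservation_gb = sum(vm_memory_gb) # disk space reserved per VM for its memory
    virtual_disks_gb = sum(vm_disk_gb)        # VHD/VHDX files
    return hyperv_install_gb + page_file_gb + memory_reservation_gb + virtual_disks_gb


if __name__ == "__main__":
    # Hypothetical host with 32 GB of RAM and three virtual machines.
    total = required_host_disk_gb(32, vm_memory_gb=[10, 4, 4], vm_disk_gb=[60, 40, 40])
    print(total, "GB")
```

In this example the virtual hard disks account for 140 GB, while the page file and per-VM memory reservations add another 50 GB that is easy to overlook.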

Everyone has been witnessing the ongoing improvements seen around Microsoft Server System's products, but when it came to Hyper-V (a new product a few years back), with each release of the product we saw a tremendous amount of improvements and new features to make the product fully equipped with all the features and functionalities required for enterprise-level virtualization management.

To see a complete comparison between Hyper-V version 3.0 and all the previous releases, visit the following URL:

In this section, we will cover the guest operating systems supported by Windows Server 2012 Hyper-V. We will divide these supported operating systems into two major categories: server operating systems and client operating systems.

All the following listed server operating systems are supported to be virtualized on Windows Server 2012:

Windows Server 2012

Windows Server 2008 R2 with Service Pack 1

Windows Server 2008 R2

Windows Server 2008 with Service Pack 2

Windows Server 2008

Windows Home Server 2011

Windows Small Business Server 2011

Windows Server 2003 R2 with Service Pack 2

Windows Server 2003 with Service Pack 2

CentOS 6.0 to 6.2

Red Hat Enterprise Linux 6.0 to 6.2

All the following client operating systems are compatible and supported to be virtualized on Windows Server 2012 Hyper-V:

As we mentioned, Microsoft changed the Windows Server licensing with the release of Windows Server 2012, where now only two editions, Standard and Datacenter, are available for Windows Server 2012. As you have read, Microsoft removed the enterprise edition from the Windows Server licensing catalog. These two available editions are exactly the same in the features provided. The only difference between these two editions is the scope for virtualization.

Windows Server 2012 Standard edition gives customers licenses for only two virtual machines. Standard edition would be the best choice for companies that are small in size and not yet very mature with respect to virtualization. On the other side, the Datacenter edition gives customers unlimited virtual machine licenses. This edition is a good choice for enterprises where virtualization is the main infrastructure requirement, and most of their servers and applications are virtualized.

For more information about the Windows Server 2012 licensing, visit the following URL:

http://www.microsoft.com/en-us/server-cloud/buy/pricing-licensing.aspx

In this first chapter, we started with the Hyper-V deployment scenarios to learn where and why to use Hyper-V. That section also showed how organizations can take advantage of the native hypervisor available in Windows Server to fulfill their virtualization needs, and how it can help to set up disaster recovery and business continuity environments.

In the next section, we moved straight to the Hyper-V architecture to get ourselves familiar with the different types of hypervisors, and see the difference between parent and child partition.

Then, after covering the Hyper-V architecture, we looked closely at the Hyper-V Virtual Machine Management Service (VMMS), which is the core engine of Hyper-V, for the management of both parent and child partitions. After discussing VMMS, we discussed the Hyper-V features, where we also compared the features of Windows Server 2012 Hyper-V with its older versions. And finally, we closed this chapter by discussing a few last topics, including Hyper-V hardware, software, and licensing information. With all of these important topics, we made ourselves familiar with what Hyper-V offers and are ready to take this journey to the next level, where we will examine each Hyper-V pillar and its major service parts individually in the upcoming chapters.

In the next chapter, we will cover the topics of Hyper-V planning, designing, and implementation. These topics will provide all the information needed for us to plan and design our Hyper-V environment. Once the planning and designing part is done, we will also see how to implement Hyper-V in our organization and take care of its basic configuration.