Chapter 1: The Origins of Agile and Lightweight Methodologies

This chapter briefly touches on why agile concepts, values, and principles evolved to address issues with traditional plan-driven and linear sequential software development models. Driven by software engineers, the goal of agile is to eliminate the complexities, inefficiencies, and inflexibility of the traditional software development models.

This chapter explains where the traditional waterfall approach often fails due to its emphasis on detailed planning and the execution of deterministic life cycle development processes. Not everything about the traditional software development model is bad, especially with its historical emphasis on developing and applying mature business analysis and engineering practices. In this chapter, you will learn how the values and principles of agile help address the many problems associated with the traditional software development model while understanding the importance of maintaining rigor in developing your business and engineering practices.

While this is a book about scaling Scrum across the enterprise, we first need to understand Scrum's agile underpinnings and why Scrum needs to be scaled.

In this chapter, we will cover the following topics:

- Lightweight software development methodologies

- Core agile implementation concepts

- The values and principles of agile

- Why engineers largely led this movement

With those objectives in mind, this chapter provides an introduction to the lightweight methodologies that preceded the development and promotion of "agile" concepts, values, and principles. Those early efforts addressed the limitations of the traditional development model and also helped refine the concepts that ultimately defined what it means to be agile. In this chapter, you will also learn why engineers largely led the initial movements to implement agile-based practices.

Understanding what's wrong with the traditional model

Since you are reading a book on mastering Scrum, I might assume you already know something about agile practices and how they evolved to address the issues of the traditional software development model, also known as the waterfall approach. Still, it's never safe to assume. So, I'm going to start this book with a quick overview of the traditional software development model, why and how it developed, and its shortcomings.

Modern computing became a reality between the early 1940s and the 1950s with the introduction of the first general-purpose electronic computers. The introduction of modern computing is particularly relevant to our discussions on the evolution of software development practices, as the high costs, specialized skills, and complexity of software development drove early software and Systems Development Life Cycle (SDLC) practices.

In the earliest days of computing, computers filled an entire room, were extremely expensive, often served only one purpose or business function, and took a team of highly skilled engineers to run and maintain. Each installation and the related software programming activities were highly unique, time-consuming, schedule-bound, complex, and expensive. In other words, early computing efforts had all the characteristics of project-based work – and development activities were managed accordingly.

It's only natural that executive decision-makers and paying customers want to manage their risks in such an environment, and the discipline of project management provided a model for managing unique and complex work in an uncertain development environment under approved constraints. Project constraints consist of the approved scope of work, budgets, schedules, resources, and quality. Specifically, project management implements detailed project planning, documentation, scheduling, and risk management techniques to guide and control complex development projects within the constraints approved by the executives or paying customers. In software development, such practices came to be known as the waterfall model.

The discipline of project management is as old as the Egyptian pyramids, dating back to roughly 2500 BC, and probably older than that. Project management evolved to manage work associated with building large, complex, risky, and expensive things. If you are the person financing such endeavors, you have a strong desire to control the scope of work to deliver what you want, in the time you want it, at a cost you can afford, and with certain expectations about the quality of the deliverables. This is the genesis of managing work under specific and approved project constraints.

Project management looks at work from the perspective of protecting the customer's investments in risky, expensive, and complex projects. In effect, customers accept a layer of management overhead with the goal of helping to ensure on-time and on-budget deliveries.

In a modern yet classical context, projects have the following characteristics:

- Have an authorized scope of work, no more and no less.

- Are temporary ventures with specific start and end dates.

- May use borrowed (for example, from functional departments) or contracted resources over the project's life cycle.

- Have specific deliverable items (for example, products, services, or expected outcomes).

- Produce deliverables and involve types of work that are relatively unique.

- The uniqueness implies that some important information is not available at the start of the project, potentially impacting the project in some way when discovered.

- The uniqueness of the product and the work also implies that there is some level of risk in achieving the objectives on time, on budget, and with the allotted resources.

- The uniqueness of the deliverables implies the customers probably don't have a complete understanding of what they want or need – as it turns out, this is especially true when it comes to building software applications and systems.

In the traditional project management paradigm, the objective is to manage work within pre-determined constraints. The constraints are the scope of work, budgets, schedules, resources allotted to the effort, deliverables, and quality. The goal of project management is to successfully deliver satisfactory products within the constraints approved by the paying customer. The philosophy behind project management is that the combination of rigorous planning, engineering, and coordinated life cycle development processes provides the best approach to managing uncertainty and risk.

The project management philosophy works well when building things that are difficult to change once construction begins. Imagine that the pharaohs decided they needed tunnels and rooms within their pyramids after construction. I suspect it would have been possible, but the effort, resources, time, and costs would have been far higher than if they had planned and designed for those requirements before starting construction. The same concept is true when building ships, high-rise buildings, energy and telecom utilities, roads, and other large, complex, and expensive physical products. The cost of change is far higher after construction starts.

Early computer scientists and IT managers faced many of the same constraints and issues those other industries faced. In other words, the characteristics of managing software development projects mirrored those faced in other industries that build large, complex, unique, and expensive things. It therefore seemed logical that software development projects also required rigorous planning, architectural design, engineering, and coordinated life cycle development processes.

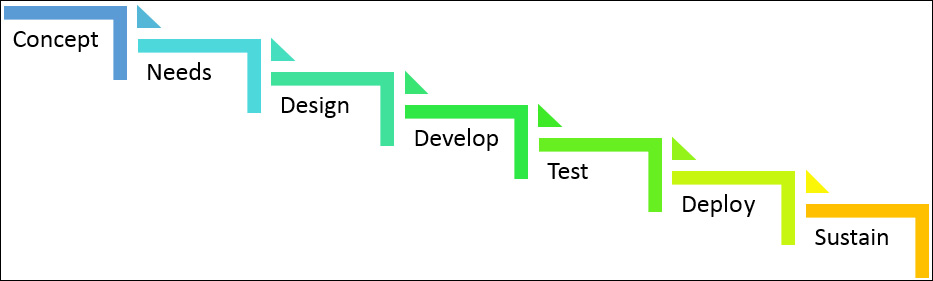

All software products and IT-based systems have a life cycle. The traditional model conceptually breaks up a product's life cycle into a series of phases, with each phase encompassing a type of related work. Phase descriptions expose work through a series of decompositions, consisting of the following:

- Processes provide a step-by-step set of instructions describing work within the phase. Phases typically have multiple processes, each describing a different type of work or approach to work.

- Activities (a.k.a. summary tasks) are artificial placeholders used to aggregate information about the completion of work in the underlying tasks. In other words, activities help roll up both the original estimates and the final measures of costs, time, and resources spent on the underlying work tasks.

- Tasks are the lowest practical level of describing work where a deliverable outcome is definable. Project managers often use the 8-80 rule to limit task durations to a range between 8 hours and 80 hours.
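The decomposition above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class names `Task` and `Activity`, the method names, and the sample work items are hypothetical and do not come from any project management tool. It shows an activity rolling up the estimates and actuals of its tasks, and flagging tasks whose estimates fall outside the 8-80 rule.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """Lowest level of work with a definable deliverable (hypothetical model)."""
    name: str
    estimated_hours: float
    actual_hours: float = 0.0

    def within_8_80_rule(self) -> bool:
        # The 8-80 rule keeps each task estimate between 8 and 80 hours.
        return 8 <= self.estimated_hours <= 80


@dataclass
class Activity:
    """Summary task: a placeholder that aggregates its underlying tasks."""
    name: str
    tasks: list[Task] = field(default_factory=list)

    def estimated_hours(self) -> float:
        # Roll up the original estimates of the underlying tasks.
        return sum(t.estimated_hours for t in self.tasks)

    def actual_hours(self) -> float:
        # Roll up the time actually spent on the underlying tasks.
        return sum(t.actual_hours for t in self.tasks)

    def tasks_outside_8_80(self) -> list[str]:
        return [t.name for t in self.tasks if not t.within_8_80_rule()]


# Illustrative sample data only.
design = Activity(
    name="Design the login screen",
    tasks=[
        Task("Sketch wireframes", estimated_hours=16, actual_hours=20),
        Task("Review with users", estimated_hours=4, actual_hours=6),
        Task("Build prototype", estimated_hours=40, actual_hours=36),
    ],
)

print(design.estimated_hours())     # 60
print(design.actual_hours())        # 62
print(design.tasks_outside_8_80())  # ['Review with users']
```

In this sketch, the 4-hour review task would be a candidate for merging into a neighboring task, since it falls below the lower bound of the rule.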

Product life cycle processes follow a common pattern of work activities. Products are conceived; requirements or needs are defined; architectures and designs are established; the products are constructed and tested. Then, they are delivered, supported, maintained, and perhaps enhanced until, one day, the decision is made to retire the products.

In the traditional plan-driven and linear-sequential software development model, the life cycle processes are laid out to show work and information flowing from one phase of work to another, as shown in the following figure:

Figure 1.1 – Traditional plan-driven, linear-sequential "waterfall" software development model

The figure implies this approach to development is a straightforward process from beginning to end. Still, even in the traditional model, this is not entirely true. There tends to be some overlap of work among the phases. The traditional waterfall model is more of a conceptual framework than a mandated work structure.

But the biggest point I want to make here is that the more a team front-loads requirements, the more protracted the overall work becomes. The added work in process (WIP) adds complexity, extends the development life cycle, and limits customer input. Moreover, adding WIP delays the testing that would otherwise help the team find problems, determine their causes, and quickly resolve them at the point they arise. In short, it is this front-loading of work that creates many of the problems in the traditional model.
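One way to see why added WIP stretches the life cycle is Little's Law, a standard result from queueing theory. It is not part of the traditional model itself, and it is offered here only as an illustration that assumes a reasonably stable flow of work:

```latex
\text{average lead time} = \frac{\text{average WIP}}{\text{average throughput}}
```

As a hypothetical example, a team that completes 5 requirements per week with 20 requirements in process has an average lead time of 20 / 5 = 4 weeks. If the same team carries 60 requirements in process at the same throughput, the average lead time grows to 60 / 5 = 12 weeks.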

There is strong evidence that increasing project size under the traditional waterfall-based development model is highly correlated with project failure, while agile-based projects outperform traditionally managed projects at every scale. For example, the Standish Group's 2015 Chaos Report (available at https://standishgroup.com/sample_research_files/CHAOSReport2015-Final.pdf), which summarized data across 50,000 projects, shows that agile-based projects outperformed waterfall-based projects in every category of project size (small, medium, large, and combined), with the largest projects having the greatest percentage of reported failures, at 42%.

Clearly, there are other factors at work besides the front-loading of requirements that lead to higher failure rates in larger projects. Let's take a moment to see how these other factors only make things worse.

It's nearly impossible to capture all the requirements for a new product concept at the start of a project. First off, customers and end-users don't yet know what they don't know. It's not unusual for a customer to get their hands on a product and quickly discover new insights into how the tool can help them in ways not previously discerned. Second, customers and end-users have other jobs and work to do, and it's difficult to get them to sit through a requirement gathering session for protracted periods. And, even if they make the time, they may initially forget about things that later turn out to be very important.

Under the traditional model, astute development teams know they can start assessing architecture and design requirements in parallel with requirement gathering. But if we accept the premise that the requirements are not likely to be completely or correctly defined all at once at the start of the project, then we also have to believe the product designers will make wrong assumptions, leading to future reworks of the architecture, designs, and code to fix errors.

Under the traditional model, the developers follow the plan. If a customer comes in with a new or revised requirement, that change request impacts the baseline plan. The scope of work can change, which in turn can change the planned schedule, resource allocations, and budgets. If the customer insists on the change but won't change the constraints of the project, the project team will likely fail to meet the obligations defined in their project's charter or contract agreements. In other words, under the traditional model, when a client proposes a new change request, something has to give in one or more of the previously defined scope of work, budgets, schedules, requirements, and quality.

The term scope creep refers to an unexpected and unauthorized change to the project's planned work. Scope creep is a major cause of project failures in the traditional model, because scope creep may impact the project's authorized resources, schedule plan, and the type and amount of work approved under the project's charter or contract agreements. Changes to both functional and non-functional requirements can generate scope creep. Functional requirements are the business and user requirements, whereas the non-functional requirements deal with the quality, security, and performance of the product.

To some degree, software development and testing have always gone hand in hand. But under the traditional development model, only unit and integration testing occur in parallel with coding. System testing, end-to-end testing, acceptance testing, and performance testing tend to be put off until the completion of coding for the entire product release. By that time, the complexity and size of the code base make it much more difficult to assess the root cause of any problems and fix any bugs that crop up in the tests.

The delivery/deployment processes, within the traditional model, span most of the other life cycle development phases. The deliverables of deployment processes are not just the application code but also training aids, wikis, help functionality, sysadmin documents, support documentation, user guides, and other artifacts necessary to support and maintain the product over its operational life span. Also, the development team must coordinate with the operations group to work through hardware, network, software, backup, storage, and security provisioning needs and related procurement acquisitions. Waiting to address these issues until the later stages of the project will almost assuredly delay the scheduled release date for the product.

Collectively, these issues lead to overly complex projects, lengthy development times, cost and schedule overruns, potential scope creep, poor quality, misalignment with customer priorities, and intractable bugs. Moreover, the "soft" nature of software development made it challenging for customers to wrap their heads around the causes and effects that kept leading to so many project failures. It wasn't like they could watch a building go up and see problems as they arose. Instead, a customer's or end user's first real view into the capabilities of the software is delayed until user acceptance testing, which occurs just before the software is supposed to be delivered.

As you might imagine, the folks who take the most heat for failed deliveries under the traditional software development model are the project managers and software development engineers. An astute project manager works with the development team to identify the causes and effects of their project's failures. After all, it's the engineers who are in the best position to understand the impact of unexpected changes and other impediments that prevented delivery within the approved constraints of the project.

Starting in the 1990s, a number of software engineers came to understand that the overly prescriptive practices of the traditional plan-driven and linear-sequential development model were not well suited to software development. The traditional model implements a deterministic view that everything can be known and therefore preplanned, scheduled, and controlled over the life of a project. The reality is that there are too many random or unknowable events that make it impossible to predict client needs, market influences, priorities, risks, and the scope of work required to deliver a viable product. The real world of software development is not deterministic; it is highly stochastic.

As a result, these engineers began to experiment with lighter-weight software development methodologies that provided more flexibility to address changing requirements and information on a near real-time basis. They also developed techniques to eliminate the inefficiencies and quality issues associated with a protracted software development life cycle. In the next section, we will learn about some of the better-known and more successful lightweight software development methodologies.

Moving away from the traditional model

The movement away from the traditional plan-driven and linear-sequential development model was gradual and spearheaded by a relatively small group of like-minded individuals. Later in this chapter, I am going to discuss how the agile movement was led by a group of engineers, and not by management specialists. The fact that engineers led this movement has ramifications for why many agile practices did not address enterprise scalability issues from the start. But for now, I want to get into a description of some of the so-called lightweight approaches that set the stage for modern agile development concepts.

There were several lightweight methodologies available to the software development community well before the values and principles of agile were outlined in the Agile Manifesto in 2001. What these lightweight methodologies had in common were a reduction in governing rules and processes, more collaborative development strategies, responsiveness to customer needs and priorities, and the use of prototyping and iterative and incremental development techniques. In short, lightweight software development methodologies evolved to eliminate the complexities of the traditional software development model.

In this section, I'll briefly introduce some of the better-known or more impactful lightweight methodologies. These include the following:

- Adaptive Software Development, defined by Jim Highsmith and Sam Bayer in the early 1990s [Highsmith, J. (1999) Adaptive Software Development: A Collaborative Approach to Managing Complex Systems. Dorset House Publishing Company. New York, NY].

- Crystal Clear, evolved from the Crystal family in the 1990s, fully described by Alistair Cockburn in 2004 [Cockburn, A. (2005) Crystal Clear: A Human-Powered Methodology for Small Teams. Pearson Education, Inc. Upper Saddle River, NJ].

- Extreme Programming (XP), by Kent Beck in 1996 [Beck, K. (1999) eXtreme Programming eXplained. Embrace Change. Addison-Wesley Professional. Boston, MA].

- Feature-Driven Development (FDD), developed in 1999 by Jeff De Luca and Peter Coad [Coad, P., Lefebvre, E. & De Luca, J. (1999). Java Modelling In Color With UML: Enterprise Components and Process. Prentice-Hall International. (ISBN 0-13-011510-X)], [Palmer, S.R., & Felsing, J.M. (2002). A Practical Guide to Feature-Driven Development. Prentice-Hall. (ISBN 0-13-067615-2)].

- ICONIX, developed by Doug Rosenberg and Matt Stephens, was formally described in 2005 [Rosenberg, D., Stephens, M. & Collins-Cope, M. (2005). Agile Development with ICONIX Process. Apress. ISBN 1-59059-464-9].

- Rapid Application Development (RAD), developed by James Martin, described in 1991 [Martin, J. (1991) Rapid Application Development. Macmillan Publishing Company, New York, NY].

- Scrum, initially defined as a formal process by Jeff Sutherland and Ken Schwaber in 1995 [Sutherland, Jeffrey Victor; Schwaber, Ken (1995). Business Object Design And Implementation: OOPSLA '95 Workshop Proceedings. The University of Michigan. p. 118. ISBN 978-3-540-76096-2].

Adaptive Software Development (ASD)

ASD starts with the premise that large information systems can be built relatively quickly, at lower costs, and with fewer failures through the adoption of prototyping and incremental development concepts. Large projects often fail due to self-inflicted wounds associated with unnecessary complexity and lengthy development cycles.

Some organizations address those problems by breaking up the pieces of a large and complex project into smaller component parts and reassigning the work to multiple teams, who work on their assignments independently and in parallel. However, that approach creates new issues in terms of providing clarity on what the components should be and do, what the final solution must be and do, and how it will all come together. In contrast, Jim Highsmith's ASD approach employed a combination of focus groups, version control, prototyping, and time-boxed development cycles to incrementally evolve new product functionality, on demand.

Highsmith was an early proponent of rapid application development. With an emphasis on speed, RAD reduced cycle times and improved quality in smaller projects with low to moderate change requirements. However, RAD was not an adaptive strategy, and it proved not to scale well in large and more complex environments that have a great deal of uncertainty associated with them.

Highsmith's approach drew from complex adaptive systems theory as a model of how small, self-organizing, and adaptive teams, with only a few rules, can find a path to construct the most complex systems. He argued that imposing order to optimize conditions in uncertain environments has the opposite effect, making the organization less efficient and less adaptive. Plan-driven development projects continuously operate out of step with, and behind, current organizational and market needs.

ASD implements a series of speculate, collaborate, and learn cycles to force constant learning, adaptations, and improvements. Instead of focusing on the completion of predefined work tasks, ASD focuses on developing defined features that provide capabilities desired by customers.

The term speculate replaces the term plan in the ASD approach. The basic idea behind ASD is that a team cannot rely on a plan built on a deterministic view, in which the world follows only one set of rules and leads to only one foreseeable and desired outcome. Instead, empirical evidence observed over the course of a project quickly reveals that the underlying assumptions of the plan are flawed.

Planning is still an acknowledged requirement in an ASD-based project, but the ASD developers and project managers are aware they live in a world of uncertainty, and that plans must change. Therefore, the astute development teams know that they must be willing to experiment, learn, and adapt, and do so over short cycles so that they do not get locked up in a plan that cannot work.

The ability of a project team to be adaptive is largely contingent upon their ability to leverage the strength of their combined knowledge and collaborate on finding effective solutions to complex problems. Again, overly prescriptive plans get in the way of understanding, problem-solving, and innovations. In ASD, the focus is on delivering results, and not on completing predefined tasks.

Learning is the final part of the ASD cycle. The development team obtains input from three types of assessments: technical reviews, iterative retrospections, and customer focus groups. Learning reviews occur at the end of each iterative development cycle. Iterations are kept short to limit the potential damage from making errors that do not support the common purpose of the team and the organization it supports. Customer focus groups provide necessary and ongoing input, feedback, and validation.

Crystal Clear

Over a period spanning about a decade, Alistair Cockburn observed numerous development teams to determine what set of practices separated successful teams from unsuccessful ones. His study began in 1991 when an IBM consulting group asked him to write a methodology for object-oriented projects. Alistair determined that the best way forward was to interview project teams to uncover their best practices and techniques.

What he found was that success was not at all determined by the methods and tools the teams used to support object-oriented programming. Instead, the success criteria were much softer, more structural, and not so much based on the particular methods and tools used by developers to create their code.

After 10 years of continuing these interviews of successful software development teams, Alistair Cockburn wrote the book Crystal Clear: A Human-Powered Methodology for Small Teams. In that book, Alistair cites the following factors as being most critical to project success:

- Co-location and a collaborative, friendly working environment.

- Eliminate bureaucracy and let the team do their work.

- Encourage end-users to work directly with development team members.

- Automate regression testing.

- Produce potentially shippable products frequently.

So, in other words, it wasn't mature software development practices or tools that made the difference in building and deploying high-quality software products. Rather, it was the decluttering of bureaucratic and overly complex organizational structures that made the most difference in team effectiveness.

In a Crystal Clear environment, the development team consists of anywhere from two to seven developers, and they work in a large room or adjacent offices. The team works collaboratively, often holding discussions around whiteboards and flipcharts to work through complex problems. The developers, and not specialist business analysts, work directly with end-users and customers to discern the functional requirements and capabilities needed by the product.

The team does not follow the directions of a plan or manager. Instead, they focus on the requirements they uncover during their direct communications with customers, and then they build, test, and deliver incremental slices of functionality every month or so. The most effective teams also periodically review their work to uncover better ways to build software and operate in their upcoming iterations.

Alistair believes different types of projects need different approaches and that a single methodology does not support all project requirements. Thus, he developed the Crystal family of software development methodologies to support varying project needs. For example, larger projects require more coordination and communication. Projects that are governed by legislative mandates or have life or death consequences require more stringent methodologies with repeated verification and validation testing to confirm the designs and suitability of the products for their intended environments. Smaller development teams working on non-mission-critical applications need less oversight and bureaucracy.

Alistair classified his Crystal methodologies by the number of development team members that must be coordinated on a project, and by the degree of safety required by a product. There are five Crystal colors used to define project team size. These five Crystal colors are as follows:

- Clear for teams of 2 to 8 people

- Yellow for teams of up to 20 people

- Orange for teams of up to 40 people

- Red for teams of up to 80 people

- Maroon for teams of up to 200 people

The Crystal Sapphire and Crystal Diamond methodologies provide additional rigor through verification and validation testing to confirm the viability of mission-critical, life-sustaining, and safety-critical applications.
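The five size bands listed earlier can be sketched as a simple lookup. The function name `crystal_color`, the rule of picking the smallest band that fits, and the error handling for sizes outside the listed bands are assumptions made for illustration. The sketch covers team size only and ignores the safety dimension that also shapes Cockburn's classification.

```python
# Upper team-size bound for each Crystal color, taken from the list above.
CRYSTAL_COLORS = [
    (8, "Clear"),
    (20, "Yellow"),
    (40, "Orange"),
    (80, "Red"),
    (200, "Maroon"),
]


def crystal_color(team_size: int) -> str:
    """Return the first (smallest) Crystal color band that covers team_size."""
    if team_size < 2:
        # Assumption: the Clear band starts at 2 people, as listed above.
        raise ValueError("Crystal Clear starts at teams of 2 people")
    for upper_bound, color in CRYSTAL_COLORS:
        if team_size <= upper_bound:
            return color
    raise ValueError("no Crystal color is listed for teams above 200 people")


print(crystal_color(6))    # Clear
print(crystal_color(35))   # Orange
print(crystal_color(150))  # Maroon
```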

The Crystal Clear methodology incorporates three properties of the most effective small teams. These properties are as follows:

- Frequent delivery

- Reflective improvement

- Osmotic communication, for example, co-location of team members to improve collaboration

Alistair cites four other properties displayed by the most effective small teams. These are as follows:

- Personal safety

- Focus, for example, knowing what to work on and having the ability to do so

- Easy access to expert users

- Automated and integrated development environments

Extreme Programming

Kent Beck is another software engineer who came to realize that great software can be developed at a lower cost, with fewer defects, more productive teams, and greater returns on investment than the industry was producing under the traditional software development model. He wrote eXtreme Programming eXplained, now in its second edition, to detail his approach to achieving those goals [Beck, K., Andres, C. (2004-2005) eXtreme Programming eXplained. Embrace Change. Second Edition. Addison-Wesley Professional. Boston, MA].

XP places value on programming techniques and skills, clear and concise communications, and collaborative teamwork. Kent explains the methodology in terms of values, principles, and practices. Values sit at our core and form the basis of our likes and dislikes. Our values often come from life experiences, and they also serve as the basis of the expectations we have of ourselves and others. Practices are the things we should do in support of our values. Conversely, practices performed without direction or purpose work against our stated values. Finally, principles help define our values and guide our practices in support of those values.

The values defined in XP encourage communication, simplicity, feedback, courage, respect, safety, security, predictability, and quality of life. Values are abstractions that describe what good looks like in an XP environment.

There are quite a number of principles defined in XP, and simply listing them here would not provide much enlightenment. I've summarized some of the most important principles here:

- Treat people with respect, offering a safe participatory environment that values their contributions, gives them a sense of belonging, and the ability to grow.

- The economics of software development, and the impact on our customers, is just as important as the technical considerations.

- Win-win scenarios are always preferred.

- Look for repeatable patterns that work, while understanding that the same pattern may not apply in every similar context.

- Get into the habit of designing tests demonstrating compliance before developing the code.

- Seek excellence through ongoing and honest retrospective assessments and improvements.

- Seek diversity in experiences, skills, approaches, and viewpoints when building your teams.

- Focus on sustaining weekly production flows of incremental yet high-priority slices of functionality.

- Problems or issues will arise and represent opportunities to make positive changes.

- Make use of redundancy in practices, approaches, testing, and reviews as checks and balances to improve the quality of released products.

- Don't be afraid to fail; there is something to be learned from every failure.

- Improvements in quality have a net positive impact on the overall productivity and performance of the team.

- Implement changes toward XP in small steps, as rapid change is stressful for people.

- Responsibility must come with authority.

- Team responsibility spans the entire development cycle, from the assignment of a story through analysis, design, implementation, and testing.

Principles provide the development team with a better understanding of what the practices are meant to accomplish. As with the principles, there are many practices listed in XP. Since practices are at the core of XP, we need to take a quick look at them:

- Teams work together in an open space, big enough to support the entire team.

- Ensure that teams have all the skills and resources necessary for success.

- Keep key information out in the open and visible through whiteboards, story cards, and burn-up/burn-down and velocity charts.

- Maintain team vitality by producing work at a sustainable and healthy rate.

- Implement pair programming, where two developers work and collaborate on one computer.

- Use story formats to understand the capabilities customers and users need, and their priorities.

- Plan work 1 week ahead through the refinement of identified high-priority stories.

- Plan work ahead quarterly to evaluate ways to get better, identify areas to aggregate related work (for example, themes), and assign stories to the themes.

- Build in slack and don't overcommit.

- Install build automation capabilities and make sure product builds never take more than 10 minutes to complete.

- Implement continuous integration practices so that the developers merge their working copies of software into a source control management system throughout the day.

- Implement a test-driven development approach where tests are written before writing the code.

- Make time to incrementally improve the design every day.
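The test-first practice in the list above can be illustrated with a minimal Python sketch. The function name `story_points_remaining` and the story data are hypothetical, chosen only for illustration. The point is the ordering: the tests are written first and describe the desired behavior, and only then is just enough code written to make them pass.

```python
import unittest


# Step 1: write the tests first. They describe the behavior we want
# before any production code exists, so they fail until step 2 is done.
class StoryPointsRemainingTest(unittest.TestCase):
    def test_sums_only_unfinished_stories(self):
        stories = [
            {"points": 3, "done": True},
            {"points": 5, "done": False},
            {"points": 2, "done": False},
        ]
        self.assertEqual(story_points_remaining(stories), 7)

    def test_empty_list_has_nothing_remaining(self):
        self.assertEqual(story_points_remaining([]), 0)


# Step 2: write just enough code to make the tests pass.
def story_points_remaining(stories):
    """Return the total points of stories that are not yet done."""
    return sum(story["points"] for story in stories if not story["done"])
```

Saved as a module, the tests can be run with Python's built-in runner, `python -m unittest`, followed by the module name.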

As this is a book on scaling agile practices, it's interesting to note here that Kent Beck addresses the issue of scaling XP. First, add teams, but keep the basic small team model when scaling XP. Next, break down any large-scale problems into smaller elements and have the teams address each issue as simply as possible. For larger and more intractable problems, do whatever you can to stop making the situation worse, and then work to fix the underlying problems while continuing to deliver useful products.

Feature-Driven Development (FDD)

As the name implies, the feature-driven approach emphasizes the implementation of new or enhanced features at a rapid rate. Developed by Jeff De Luca and influenced by Peter Coad, the FDD approach leverages object-oriented concepts with the Unified Modeling Language (UML) to define a system as a series of interacting objects. UML provides a method to describe interactions between agents and systems. An agent can be an end user or another system.

At its core, FDD is another iterative and incremental development approach that stresses customer focus and the timely delivery of useful functionality. The FDD approach integrates concepts from XP and Scrum, and, like RAD, takes advantage of modeling techniques and tools – including Domain-Driven Design (DDD) by Eric Evans [Evans, E. (2003) Domain-Driven Design: Tackling Complexity in the Heart of Software. Addison-Wesley. Hoboken, NJ], and object modeling in color by Peter Coad [Peter Coad, Eric Lefebvre, Jeff De Luca (1999). Java Modeling In Color With UML: Enterprise Components and Process, Prentice-Hall. Upper Saddle River, N.J.].

Peter Coad, et al., came to realize that, model after model, they built the same four general types of object classes over and over again. These four repeating object class patterns are called archetypes, which means a common example of a certain person or thing. The four common classes and their color classifications include the following:

- Moment-interval (Pink): Representing a specific moment, event, or interval of time

- Roles (Yellow): Defining the way something participates in an activity

- Descriptions (Blue): Methods to describe, classify, or label other things

- Party, Place, or Thing (Green): Tangible and uniquely identifiable things
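To make the four archetypes more concrete, here is a small Python sketch that tags a few classes with their archetype and color. The class names (`Person`, `Cashier`, `ProductDescription`, `Sale`) and the retail scenario are hypothetical examples of mine, not taken from Coad's book, and the `archetype` attribute is simply one possible way to record the classification.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import Enum


class Archetype(Enum):
    """The four archetypes and their colors, as listed above."""
    MOMENT_INTERVAL = "pink"
    ROLE = "yellow"
    DESCRIPTION = "blue"
    PARTY_PLACE_THING = "green"


@dataclass
class Person:
    """Green: a tangible, uniquely identifiable party."""
    name: str
    archetype = Archetype.PARTY_PLACE_THING  # class attribute, not a field


@dataclass
class Cashier:
    """Yellow: the way a person participates in an activity."""
    person: Person
    archetype = Archetype.ROLE


@dataclass
class ProductDescription:
    """Blue: a catalog-like entry that labels or classifies other things."""
    sku: str
    label: str
    archetype = Archetype.DESCRIPTION


@dataclass
class Sale:
    """Pink: something that happens at a specific moment in time."""
    cashier: Cashier
    product: ProductDescription
    occurred_at: datetime
    archetype = Archetype.MOMENT_INTERVAL


def example_sale():
    """Build one hypothetical sale that links all four archetypes."""
    cashier = Cashier(Person("Ada"))
    product = ProductDescription("SKU-1", "Notebook")
    return Sale(cashier, product, datetime(2020, 5, 21, 9, 30))
```

In this sketch, the moment-interval (the sale) ties the other three archetypes together, which is typically how such a model is read: who took part, in what role, and what was involved.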

Many agile development approaches use stories as the structure to capture the capabilities that customers and end-users need the product to provide. The UML modeling approach describes requirements from the standpoint of client-valued interactions and functionality (a.k.a. features).

DDD has a focus on modeling domains/domain logic. Instead of working directly with customers and end-users, the DDD approach encourages collaborations between developers and domain experts to define models that accurately reflect the business domain and their problems.

The feature-driven development approach encompasses five standard processes:

- Develop a model – feature-driven, time-boxed, and collaborative.

- Build the feature list – from the models, categorized and prioritized, and time-boxed.

- Plan by feature – the assignment of features to developers.

- Design by feature – establish design packages, each containing up to two weeks of work on features that logically go together.

- Build by feature – keeping the development focus on developing and delivering useful features.

While FDD is considered an agile approach and often included as a lightweight methodology, the approach, by design, supports larger projects involving 50 developers or more.

ICONIX

The ICONIX approach to software development is another use-case-driven practice. However, it is a lighter-weight implementation of UML than the more widely known and practiced Rational Unified Process (RUP). Though RUP incorporates an iterative software development approach, it is a heavyweight approach that is highly prescriptive and implements a comprehensive application life cycle management approach.

Described by Doug Rosenberg, Matt Stephens, and Mark Collins-Cope, the ICONIX approach predates XP and RUP. It dates back to when Rosenberg and his team at ICONIX developed an object-oriented analysis and design (OOAD) tool called ObjectModeler. Their ObjectModeler tool supported the object-oriented design methods of Grady Booch, Jim Rumbaugh (OMT), and Ivar Jacobson (Objectory). The ICONIX process was formally published in 2005 in their book titled Agile Development with ICONIX Process: People, Process, and Pragmatism [Rosenberg, D., Stephens, M. & Collins-Cope, M. (2005). Agile Development with ICONIX Process. Apress. New York NY.]. The authors provided further elaboration on the ICONIX approach in their 2007 book titled Use Case Driven Object Modeling with UML: Theory and Practice [Rosenberg, D. & Stephens, M. (2007). Use Case Driven Object Modeling with UML: Theory and Practice. Apress. New York, NY]. In its modern form, the authors promote the ICONIX process as an open, free-to-use, agile, and lightweight object modeling process that is use-case-driven.

ICONIX implements a robust and relatively document-intensive process – when compared to XP – for requirements analysis and design modeling. In addition, ICONIX implements an intermediary process, called robustness modeling, to help developers think through the process of converting what is known about the requirements, into how to design in the desired capabilities. ICONIX implements a modeling technique called robustness diagrams, which depicts the object classes (for example, use case descriptions of classes and behaviors) and the flow of how the defined objects communicate with each other.

From a logical perspective, there is a different level of abstraction when modeling and analyzing requirements versus analyzing software and systems design requirements. They are two different worlds – physical versus logical. The robustness modeling approach helps designers visualize the translation of information from the physical requirements into a logical implementation.

The ICONIX approach breaks out into four delivery milestones, which include a requirements review, a preliminary design review, a detailed design review, and deployment.

Requirements analysis informs the requirements review milestone. The output of the requirements analysis includes use cases, domain models, and prototype GUIs.

A robustness analysis informs the preliminary design review milestone. The outputs of the robustness analysis include both textual and graphical descriptions of user and system interactions, both of which serve as aids to help the development team validate the requirements and designs with their customers and end-users.

A detailed design informs the detailed design review milestone. The domain model and use case text are the primary inputs to the design document.

The code and unit tests inform the deployment milestone. The term deployment is a bit confusing as this milestone serves as the completion marker for developing the code to support the requirements, and not a physical deployment of the application to the customer.

In summary, the ICONIX process is a lightweight, agile, open, and free-to-use object modeling process that employs use case modeling techniques. Its strength lies in the techniques the methodology implements to transition use case models of requirements into useful design elements.

Rapid application development (RAD)

James Martin wrote Rapid Application Development (RAD) to address the issue that the speed of software development activities always seems to lag behind the speed at which businesses operate. Given that computers and software were fast becoming the backbone of business operations and competitive responses, it was untenable to have 2- or 3-year development life cycles with an even larger backlog of requirements.

James Martin notes in his book that there are three critical success factors that information systems departments face:

- Speed of development – to implement new business capabilities

- Speed of application change – to respond to new needs and priorities

- Speed of cutover – to quickly replace legacy systems and operations

Martin believed the key to sustained business success was the installment of rapid application development capabilities.

Martin was an early proponent of the use of computer-aided systems engineering (CASE) tools, and he was the founder of KnowledgeWare – the company that produced the IEW (Information Engineering Workbench) CASE tools. (IEW was sold to Sterling Software in 1994, which in turn was subsequently acquired by Computer Associates.) Ultimately, IEW combined personal computer-based information and data modeling tools with a code generator that expedited the process of building software applications that run on mainframe computers.

Martin's concept of RAD was to use tools and techniques to lower development costs, reduce development times, and build high-quality products. Manual processes often lead to reduced quality when attempts are made to expedite the process. However, the methods and tools implemented within CASE tools enforce technical rigor while simultaneously improving quality, consistency, and efficiencies in software development.

The modern concept of RAD lives on through Integrated Development Environments (IDEs), such as Android Studio, Atom, Eclipse, Microsoft's Visual Studio, NetBeans, VIM, and many, many others.

Scrum

This book is about mastering Scrum for enterprise, and the next chapter provides much greater detail on the methodology. For now, I want to focus on introducing Scrum as an example of a lightweight software development methodology and how and why it came about.

Both the initial concepts behind Scrum and the name Scrum came from the works published by Hirotaka Takeuchi and Ikujiro Nonaka, in their 1986 Harvard Business Review article titled The New New Product Development Game [Takeuchi, H., Nonaka, I. (1986) The New New Product Development Game Harvard Business Review. Retrieved May 21, 2020.]. Nonaka later elaborated on these concepts in a 1991 article titled The Knowledge Creating Company. [The Knowledge Creating Company. Oxford University Press. 1995. p. 3. ISBN 9780199762330. Retrieved March 12, 2013.] The concepts behind Scrum are built on Takeuchi and Nonaka's findings that organizational knowledge creation drives innovation.

Takeuchi and Nonaka note that the traditional linear-sequential development methods are not competitive in the fast-paced, fiercely competitive world of commercial new product development. Takeuchi and Nonaka cite six differentiating factors that provide the speed and flexibility necessary for competitive success: built-in instability, self-organizing project teams, overlapping development phases, multilearning, subtle control, and organizational transfer of learning.

An important insight, highlighted by the two authors, is that knowledge creation leads to innovation, which in turn leads to competitive advantage. Moreover, they found that learning from direct experiences, and from trial and error, are essential elements of knowledge acquisition.

Jeff Sutherland and Ken Schwaber collaborated to develop the Scrum methodology. In their book titled Software in 30 Days, Sutherland notes that the writings of Takeuchi and Nonaka profoundly influenced his early works [Schwaber, K., Sutherland, J. (2012). Software in Thirty Days. How Agile Managers Beat the Odds, Delight Their Customers, and Leave Competitors in the Dust. John Wiley & Sons. Hoboken, N.J.]. Ken Schwaber says he was influenced by the works of Babatunde A. Ogunnaike in the areas of industrial process control, and the applicability of complexity theory and empiricism in software development.

Sutherland developed his views on Scrum, initially in 1993, as an alternative to the highly prescriptive and top-down project management-based methodologies typified in the traditional waterfall-based software development model. Later, in 1995, Jeff Sutherland teamed with Ken Schwaber to formalize and present the Scrum process at the OOPSLA'95 (Object-Oriented Programming, Systems, Languages & Applications) conference. Jeff and Ken continue to maintain the Scrum processes in the form of the Scrum Guide. [Schwaber, K., Sutherland, J. (2017) The Scrum Guide™ The Definitive Guide to Scrum: The Rules of the Game. ScrumGuides.org. https://www.scrumguides.org/]

In his book titled Scrum – The Art of Doing Twice the Work in Half the Time, Sutherland notes that detailed project plans and schedules of the traditional waterfall model gave a false sense of security and control [Sutherland, J. Scrum The Art of Doing Twice the Work in Half the Time. Currency (An imprint of Crown Publishing and a division of Penguin Random House). New York, N.Y.]. He also came to understand that project plans and schedules are a red herring that hinder truthful assessments of the project's real health.

Project managers know they are working with incomplete information and uncertainty when they develop their project plans. What many may not have understood is that the strung-out life cycle of the traditional model prevented discovery of most critical issues until very late into the project schedule. Moreover, by then, the issues were often numerous and cumulative, adding further complexity and challenges to identify and fix the root causes of the problems. In effect, the plans and schedules locked in requirements, deliverables, priorities, and work activities built on incomplete and dated information and wrong assumptions. Consequently, too many traditionally managed projects failed miserably.

Sutherland realized the alleged benefits of the traditional model – control and predictability through detailed planning – were at odds with reality. The traditional model attempts to force a deterministic approach to management – based on the idea that future events are predictable and controllable. In reality, the world is full of uncertainty, subject to stochastic and interlocking processes and events.

Bull riders wouldn't have a job if bulls didn't buck. However, cowboys wouldn't think that they could sit quietly on a bull's back, with a predefined plan, and not have to respond to the bull's every movement. At least, not if they have any desire to stay on the bull for the full 8 seconds. Instead, cowboys use their senses to take in real-time information about the bull's movements, and they constantly adjust their weight and position to stay in proper alignment with the bull's actions.

Bull riding may sound like an extreme analogy, but businesses are no less prone to being thrown off their game by the constant flux in their competitive surroundings. So why do we think we can ride out those fluctuations in business with a predefined strategy that is not responsive to new information and change?

Sutherland and Schwaber both came to realize that software development teams must embrace uncertainty with creativity and responsive movements. They also realized that an entirely different approach to product definition and development was necessary to resolve the self-inflicted wounds of attempting to control chaos. As a result, both turned to the philosophy of empiricism, which espouses a belief that real knowledge comes from information gained through sensory experiences and empirical evidence (for example, knowledge gained through observation and experimentation).

Empirical processes support knowledge acquisition through evidence-based strategies that employ the use of human senses to observe and document patterns and behaviors. Empiricism also promotes the use of trial and error practices to reveal the effectiveness of different strategies through experimentation and analysis.

Sutherland's and Schwaber's approach to empiricism is encapsulated in the three pillars of empirical process control: transparency, inspection, and adaptation. Transparency means process information must be fully available to those who are responsible for its execution. Frequent inspections help the team observe their progress toward the desired goals. And when deviations from expectations are noted, the team responds by adjusting the process to achieve positive outcomes through adaptation.
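One way to picture the inspect-and-adapt cycle is as a forecast that is recalculated from observed results after every iteration, rather than fixed up front. The following Python sketch is only an illustration under my own assumptions: the function names, the use of story points, and the simple averaging of past throughput are hypothetical and are not prescribed by Scrum.

```python
import math


def forecast_iterations_left(remaining_points, completed_per_iteration):
    """Re-forecast from evidence: average the throughput observed so far."""
    if not completed_per_iteration:
        raise ValueError("no observations yet; nothing to inspect")
    velocity = sum(completed_per_iteration) / len(completed_per_iteration)
    if velocity <= 0:
        raise ValueError("no completed work observed; forecast undefined")
    return math.ceil(remaining_points / velocity)


def inspect_and_adapt(total_points, observed):
    """Yield (iteration, remaining, forecast) once each iteration's result is known."""
    remaining = total_points
    history = []
    for iteration, completed in enumerate(observed, start=1):
        history.append(completed)                   # transparency: results are recorded
        remaining = max(remaining - completed, 0)   # inspection: progress toward the goal
        # adaptation: the forecast is revised using what was actually observed
        forecast = forecast_iterations_left(remaining, history) if remaining else 0
        yield iteration, remaining, forecast
```

For example, with 100 points of work and observed results of 20, 10, and 30 points, the forecast of iterations still needed moves from 4 to 5 and then down to 2 as evidence accumulates, which is the kind of adjustment a fixed up-front plan cannot make.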

It's foolish to wait until all the requirements are fully defined, and the product is fully designed, coded, and tested, to see whether it works, and only then determine whether the product still supports the customer's needs. Instead, it makes more sense for the responsible team to frequently evaluate their progress against their goals, and their continued alignment with the customer's current needs and priorities.

Scrum is lightweight because it is not overly prescriptive. Scrum is a framework and not a methodology that might otherwise impose a lot of rules, constraints, processes, and documentation requirements. Within the Scrum framework, developers are free to use any number of tools and techniques to accomplish their work. But the Scrum framework enforces minimalism, offers an open and safe environment to try different things, and implements a frequent inspect and adapt cycle of events.

This book focuses on scaling Scrum concepts because Scrum has largely won the agile methodology wars in terms of wide-scale acceptance. Nevertheless, there are different views on how to scale the implementation of Scrum capabilities across a large enterprise or a large project. This book addresses why there is not a single method that works in all situations.

In this book, we will explore this Scrum scaling issue together in three parts. First, in the next chapter, you will learn the basics of Scrum. Then, in Part 2 of this book, you will learn about the different approaches to scaling Scrum. Finally, in Part 3, you will learn how to apply Scrum in different environments and situations.

But, before we get to the next chapter, we'll review some of the core concepts implemented by the lightweight agile methodologies. Next, we need to understand how the core concepts support Agile's values and principles. Finally, I'll discuss how the agile movement was initially powered by software engineers and not by MBAs or management specialists. The development of the values and principles of agile, along with the development of lightweight software development methodologies by software engineers, had profound ramifications on adoption and scalability in both large enterprise environments and large, complex projects.

Defining Agile's core implementation concepts

You have probably already surmised that the lightweight methodologies introduced in previous sections have more in common than not. This section consolidates and elaborates on the common principles and practices that came from the lightweight software development methodologies.

I have ordered the concepts alphabetically, to avoid an inaccurate view that one concept has more or less importance than any of the others. All of these principles and practices have found wide-scale acceptance. The order of implementation within an organization is situational and usually best implemented at the team level – for reasons I'll explain in Part Two of this book.

Backlogs

In the traditional model, business analysts document business and user requirements in some form of business or requirements specification, while members of the development team document functional and nonfunctional requirements and develop related technical specifications. All of that goes away on agile-based software development projects. Instead, a product owner maintains a backlog of identified requirements, typically documented in the form of epics, stories, and features. While the development team members help the product owner refine the product backlog, only the product owner has the authority to set priorities within the backlog.
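The division of responsibility described above, where anyone on the team may help refine the backlog but only the product owner may reorder it, can be sketched as a small data structure. This is a minimal Python illustration; the class names, method names, and the idea of identifying people by a plain string are all hypothetical simplifications.

```python
from dataclasses import dataclass, field


@dataclass
class BacklogItem:
    title: str
    kind: str  # "epic", "story", or "feature"
    notes: list = field(default_factory=list)


class ProductBacklog:
    def __init__(self, product_owner):
        self.product_owner = product_owner
        self.items = []  # index 0 is the highest priority

    def add(self, item):
        self.items.append(item)

    def refine(self, title, note):
        """Any team member may help refine an item."""
        self._find(title).notes.append(note)

    def reprioritize(self, actor, title, new_position):
        """Only the product owner may change the order of the backlog."""
        if actor != self.product_owner:
            raise PermissionError(f"{actor} cannot set backlog priorities")
        item = self._find(title)
        self.items.remove(item)
        self.items.insert(new_position, item)

    def _find(self, title):
        for item in self.items:
            if item.title == title:
                return item
        raise KeyError(title)
```

In this sketch, a call such as `backlog.reprioritize("dev", "Login", 0)` raises `PermissionError` unless `"dev"` is the product owner, while `backlog.refine("Login", "needs acceptance criteria")` is open to everyone.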

Co-location

Modern electronic communication, collaboration, and source control management tools allow software development teams to work in virtual environments and across multiple locations. I've managed more than my fair share of virtual software development projects, both large and small, and I've been able to make them work.

However, an ideal situation is to locate all the development team members in a single location, working together in one room large enough to accommodate the entire team. If the organization has the resources, a common practice is to place a central table large enough to seat all the team members with their computers, so that they can face each other and talk comfortably while they're working. Also, the room's walls should all have whiteboards on them so that team members can conduct multiple collaborative working sessions at the boards simultaneously. The room should be dedicated to the team so they can keep the information on their whiteboards and charts out in the open at all times.

The team members also need time and space to work independently. So, again, if the organization has the resources, the ideal situation is to have individual work cubes in or adjacent to the room. The main point is that the teams can come together to work through technical implementation concerns or address problems that crop up during the project.

In Part Two of this book, you'll discover that having multiple Scrum teams associated with the development of a single product is not uncommon. Each team works as an independent group, and they may work at other facilities. However, the members of each team should work together as a single unit, co-located in a single facility with a dedicated room in which to work and collaborate.

CI/CD pipelines

The acronym CI/CD or CICD refers to the combination of continuous integration and either continuous delivery or continuous deployment practices. Continuous integration preceded the concepts of continuous delivery and continuous deployment. The term Continuous Integration was originally described by Kent Beck as one of his 12 Extreme Programming practices. Continuous delivery is the automation of provisioning development and test environments on-demand and with proper configurations. Continuous deployment takes continuous delivery one step further to include automated provisioning and configuration in a production deployment environment. The next two subsections provide more details about these two specific areas of automation, CI and CD.

Continuous Integration

Bugs in software code are pretty much a given. The longer a development team waits to integrate and test their source, the more bugs accumulate. Also, the accumulation of both code and bugs makes the task of debugging more complex, difficult, and time-consuming. More frequent integration and testing make debugging less difficult. The causes of bugs are more easily found and more easily fixed.

Continuous Integration is the practice of frequently integrating code into a shared repository – at a minimum, several times a day. More frequent check-ins are better than less frequent check-ins. For larger sections of code, a good practice is to use stubs and API placeholders (a.k.a. skeletons) so that your code can be tested and checked into the source code repository more frequently. This allows other programmers on the team to see what you are doing and even assist with the development of the functionality delivered to the stubs and APIs.
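As a rough illustration of the stub technique, the Python sketch below shows an unfinished function checked in with its agreed signature and a placeholder result, so that code depending on it can already be integrated and tested. The function names and the flat shipping rate are hypothetical.

```python
def calculate_shipping(order_total, destination):
    """Stub: the agreed signature with placeholder behavior.

    A hypothetical flat rate is returned until real rate rules are written,
    so callers can integrate and test against this function today.
    """
    return 5.00


def checkout_total(order_total, destination):
    """Finished code that already depends on the stubbed function."""
    return round(order_total + calculate_shipping(order_total, destination), 2)
```

Because the stub keeps the interface stable, a teammate can later replace its body with the real calculation without changing `checkout_total` or its tests, other than the expected amounts.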

The development team should always work from a single source code repository. Automating the build process is an important component of CI. The automation process includes compiling source code files, packaging compiled files, producing application installers, and defining the database schemas. The more repeatable, self-testing, and automated the build process, the less likely it will be that the process will introduce bugs.

Ideally, developers initiate the build process every time they check their code into the SCM system, and the build process should take less than 10 minutes, including the execution of automated tests. If the build process is manually intensive and time-consuming, developers won't do it frequently, leading to the previously mentioned bug accumulation issues.
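The idea of an automated build with a time budget can be sketched in a few lines of Python. This is not how any particular CI server works; the function name, the step callables, and the result dictionary are assumptions made for illustration. Each step is a callable that returns `True` on success, and the clock is injectable so the budget check can be tested without waiting.

```python
import time

BUILD_BUDGET_SECONDS = 10 * 60  # the 10-minute target mentioned above


def run_build(steps, budget_seconds=BUILD_BUDGET_SECONDS, clock=time.monotonic):
    """Run (name, callable) build steps in order and stop at the first failure.

    Returns a dict describing the outcome. A build that succeeds but
    exceeds the time budget is reported as not ok.
    """
    started = clock()
    for name, step in steps:
        if not step():
            elapsed = clock() - started
            return {
                "ok": False,
                "failed_step": name,
                "seconds": elapsed,
                "over_budget": elapsed > budget_seconds,
            }
    elapsed = clock() - started
    over_budget = elapsed > budget_seconds
    return {
        "ok": not over_budget,
        "failed_step": None,
        "seconds": elapsed,
        "over_budget": over_budget,
    }
```

A team might pass steps such as compile, package, and run automated tests, and treat an over-budget result as a signal to speed up the build before developers start avoiding it.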

Another good practice is to have every commit build on a dedicated integration server. Cloud-based platforms and virtualized development environments make this strategy increasingly practical. The dedicated integration system offers better visibility to available code and provides a staging environment for subsequent testing.

Continuous delivery/deployment

There are several important elements of the concepts and practices behind the implementation of continuous delivery and deployment capabilities. As a component of agility, iterative development practices generate frequent releases of potentially shippable products that can be made available to customers in a predictable cycle. The frequent availability of useful functional software impacts the organization's delivery capabilities.

Specifically, frequent releases create potential issues in the areas of provisioning. Each new release of software has the potential to impact the organization's infrastructure with new demands for computing equipment, networks, database management systems, backup and storage capabilities, security applications, and other software applications.

Continuous Delivery is the term used to define an IT organization's ability to implement changes on demand. Such changes include the introduction of new features, bug fixes, software, and system configuration changes. Provisioning concerns span the development, testing, and production environments, encompassing all software and related infrastructure requirements.

The goal of Continuous Delivery is to implement changes to the development, test, and production environments safely, rapidly, and sustainably. As an example, continuous delivery capabilities make it practical to release software at extremely short intervals, even multiple times a day.

Cloud-based Platform as a Service (PaaS) offerings make continuous delivery both economical and practical for many organizations. PaaS providers offer their customers a virtualized application development and hosting platform as a cost-effective alternative to building and maintaining dedicated, in-house IT infrastructures. But the same concepts and capabilities are possible within an organization's data center with the correct technologies and practices.

Continuous delivery concepts extend the automated build and integration test capabilities out into the test environments. They also generally include the ability to provision and configure necessary infrastructure resources on demand. Conceptually, continuous delivery supports the development and testing environments. After the completion of testing, the development team manually pushes the released software into the production environments. The customer's IT operations team then provisions resources and configures the hardware and software for the new release.

Continuous deployment takes the automated provisioning and configuration capabilities of continuous delivery out into the production environments. Humans are not involved, and only a failure in the automated process stops the delivery of the release. With the implementation of both continuous delivery and deployment capabilities, developers have the potential to release new product functionality within minutes of pushing their code into the test environments.

Continuous deployment capabilities create a new set of potential problems by releasing software updates to customers who don't value, or understand, or are not yet willing to adopt the changes. Also, the functionality may not apply to every type of user. Therefore, it's not unusual to set up rules that limit the deployment of automated production releases to stages or to specific communities of users.
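One common way to implement such rules is a staged rollout, in which each user is mapped to a stable bucket and a release is enabled only for a chosen percentage of buckets or for named groups. The Python sketch below is a simplified illustration under my own assumptions; the function names, parameters, and group labels are hypothetical and do not correspond to any particular deployment tool.

```python
import hashlib


def rollout_bucket(user_id, feature):
    """Map a user to a stable bucket in the range 0-99 for a given feature."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id, feature, percent, allowed_groups=None, user_group=None):
    """Return True if this user should receive the new release.

    `percent` is the share of users (0-100) included in the current stage.
    If `allowed_groups` is given, users outside those groups are excluded.
    """
    if allowed_groups is not None and user_group not in allowed_groups:
        return False  # limit the release to specific communities of users
    return rollout_bucket(user_id, feature) < percent  # staged percentage
```

Because the bucket is derived from a hash rather than a random draw, a given user sees consistent behavior as the percentage is raised from one stage to the next.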

Cross-functional

The implementation of iterative development releases by small teams is only possible if the teams have the resources and skills required to develop and test the product's features. As the teams are stood up, they must collectively have the skills to work on the product. Over time, individuals should strive to cross-train on the skills they don't have but that are necessary for the development of the product. This concept is called "multilearning" and is key to the team's self-sufficiency.

Customer-centric

The third value in the Agile Manifesto places customer collaboration over contract negotiation [Kent Beck, et al. © 2001. Manifesto for Agile Software Development. http://agilemanifesto.org/. Accessed 10 November 2019]. A contract scenario creates situations where the customer defines high-level requirements and then, except for brief requirement gathering activities, disappears for the remaining duration of the project. Moreover, contract negotiations set up adversarial relationships, and not the collaborative relationships that support the iterative and evolutionary development aspects of agile.

Incremental and iterative development (IID) practices help simplify development by reducing the size and complexity of code, making it easier to find and debug any errors in the application. However, the IID practices also allow several very important customer alignment and collaboration opportunities.

First, the customers and end-users can look at the product frequently to ensure the implementation of features that meet their needs. Invariably, customers get new insights into how a software product can better support their needs only after they have a chance to work with a prototype or new release. The frequent releases of agile provide more opportunities for customers to get these insights.

Second, if the customer judges the software features as insufficient in some manner, the customer's priorities have changed, or their insights after viewing the software lead to new requirements, the development team can quickly adjust to meet the new requirements. In the traditional model, change requests were not desirable as they had a direct impact on the authorized constraints of the project. In other words, changes in scope cause negative impacts on project budgets, resource allocations, and schedules.

Iterative and Incremental Development (IID)

The phrase iterative and incremental development describes the agile approach practitioners take to deliver frequent releases of potentially shippable products. I have found there is often confusion about what the terms iterative and incremental mean.

The term iterative means development is broken up into limited time-boxed durations, typically of 4 weeks or less. In a modern DevOps environment with continuous delivery and deployment capabilities, iterations are measured in hours or minutes. Iterative development reduces complexity and work in progress, which are important elements in implementing lean software development concepts.

The term incremental refers to the concept that the product evolves incrementally with each development iteration. In other words, the term increment implies the release of a new slice of functionality with each iteration.

Iterative and incremental go together because the sole purpose of development, regardless of the methodology employed, is to create something that customers ultimately value. In that context, new increments of product functionality are the deliverables of the iterative development process.

Pair programming

There are several important elements associated with agile practices that allow the development team to operate rapidly, efficiently, and productively. Some of the most important elements include transparency, safety, perspective, and inspection.

An effective strategy to address all four of these elements is pair programming. In pair programming, two developers sit side by side and work together. One programmer has the role of driver, while the other takes on the role of an observer, sometimes called the navigator.

As the driver develops the code, they are heads-down and operating at a tactical, implementation level. The observer inspects the driver's code in real time to help resolve any bugs and consider more efficient development approaches. The observer also evaluates the code in terms of addressing the strategic goals of the feature within the application. The developers change roles frequently to stay fresh and engaged.

Potentially shippable products

The first principle stated in the Agile Manifesto is "Our highest priority is to satisfy the customer through early and continuous delivery of valuable software" [Kent Beck, et al. © 2001. Manifesto for Agile Software Development. http://agilemanifesto.org/. Accessed 10 November 2019]. Agile practitioners employ IID practices in support of this principle.

However, it does not matter how frequently the development team completes new updates if the output has no functional value to the customer. Therefore, a key component of most agile practices is the concept of providing a potentially shippable product with every development iteration.

That does not mean the product owner must release the product of an iteration to customers and end-users. There may be practical reasons not to do so until full business process functionality is available, or until the customer is in a position to accept the product into production.

Prototyping

I noted previously that, often, customers don't know what they want until they see the product and have a chance to work with it. This is the point of having frequent customer reviews, as often as every iterative release. At the beginning of a project, there may not be much to show. Moreover, the team may want to show potential product concepts before devoting much time to developing the features.

Prototyping is an approach to quickly and cost-effectively build a mock-up of a particular product or feature. The mock-up could be as simple as a screen or user interface design, or more complex with data fields, data, and algorithms that support the proposed application functionality. The prototypes are shown to customers before the feature is implemented in a production release of the product.

An important concept of prototyping is that all prototypes are, by definition, throwaway activities. In other words, there should never be an expectation that a prototype must be deployed to a customer. Such an expectation gets in the way of the concept that failures are not bad, or to be avoided, as they provide opportunities for learning. If nothing else, the developers now know what not to do in the future.

Retrospectives

The twelfth and final principle of the Agile Manifesto states: "At regular intervals, the team reflects on how to become more effective, then tunes and adjusts its behavior accordingly" [Kent Beck, et al. © 2001. Manifesto for Agile Software Development. http://agilemanifesto.org/. Accessed 10 November 2019]. Retrospectives support this principle.

During a retrospective, the development team looks back at the past iteration to assess what worked well, what didn't work out so well, and what they could do differently in the future, starting with the very next iteration. Retrospectives only work and have value when the development team members feel free to speak out. In other words, they must work in an environment where team members are respectful, open, and honest, with complete transparency about all customer and product information.

Safety

Pair programming, prototyping, and retrospectives all create situations for potential tension and conflict. Development team members must be open to alternative views, know that failure is not going to get them in trouble, and feel free to speak out during retrospectives and other team collaboration activities. That requires the development of an environment that stresses respect and safety for its team members.

The concept of safety is the opposite of the command-and-control environments dictated by the traditional development methodologies. The plan-driven and linear-sequential approach to development in the traditional model created cultures where team members followed edicts driven from above. In contrast, agile encourages a bottom-up approach to planning and executing work that puts the development team and the product owner in the driver's seat for decision-making.

Self-organizing

Having team members with multiple useful skills gives the team more flexibility in the assignment of work. Development teams can expect that each iteration of work has different task requirements. If the team employs specialists and does not cross-train, both to develop missing skills and to provide more flexibility in individual work assignments, it has limited ability to assign work on demand. Instead, the team finds itself having to wait to take on work until the required specialist is available.

From the context of the product backlog, having teams filled with specialists causes delays in the assignment of work and bottlenecks that, in turn, create too much work in progress. Accumulated work in progress is a form of waste and is the antithesis of lean development organizations.

Small teams

Effective software development teams must have the resources they need to deliver incremental slices of new product functionality every iteration. If a team is too small, they likely won't have the skills and resources to deliver. If the team is too large, the coordination of work grows significantly larger and more complex.

Ken Schwaber and Jeff Sutherland, in the Scrum Guide, state that teams should have no fewer than three members and no more than nine. Jeff Bezos, the CEO of Amazon.com, is famous for noting that no matter the size of the company, individual teams should never get large enough to consume more than two pizzas in a sitting (for example, five to seven people) [Deutschman, A. (2004) Inside the Mind of Jeff Bezos. Pizza Teams and Terabytes. https://www.fastcompany.com/50106/inside-mind-jeff-bezos-5. Accessed November 8, 2019]. UK anthropology professor R.I.M. Dunbar, in his paper titled Coevolution of Neocortical Size, Group Size and Language in Humans, suggests that the maximum useful team size is no more than 10 to 12 people [Dunbar, R. I. M. (1993). Coevolution of neocortical size, group size and language in humans. Behavioral and Brain Sciences 16 (4): 681-735], [Buys, C.J. & Larsen, K.L. (1979). Human sympathy groups. Psychological Reports. 45: 547-553]. The Scaled Agile Framework (SAFe™), another scaled Scrum approach discussed later in this book, recommends that development teams stay in the range of 5 to 11 people [Corporate (2019) Scaled Agile. Agile Teams. https://www.scaledagileframework.com/agile-teams/. Accessed November 8, 2019].
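
One common way to illustrate why coordination grows so quickly with team size is to count the possible one-to-one communication paths between team members. The short sketch below is an illustration only and does not come from the sources cited above; the function name communication_paths is hypothetical, and counting pairs is just one rough proxy for coordination effort.

```python
def communication_paths(team_size: int) -> int:
    """Count the distinct person-to-person pairs in a team.

    Assumption for illustration: every pair of members is one
    potential communication path, so the count is n * (n - 1) / 2.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


# Compare a few of the team sizes mentioned in this section.
for size in (3, 5, 9, 11):
    print(f"{size} people: {communication_paths(size)} paths")
```

Under this simple model, growing a team from 3 to 9 people triples the headcount but increases the number of paths twelvefold, which is one way to see why the recommendations above all cap team size at roughly a dozen people or fewer.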

Source Code Management (SCM)

Developers work on code as individuals and in groups as part of a larger team. The developers implement changes to their code incrementally, not always sure whether each change will deliver the intended result. When a change does not work out, they may need to retrieve the original code and start over. That is the primary purpose of an SCM tool: to serve as a repository for all source code and related artifacts, and to automate version control over each of the stored artifacts so that developers can roll back to whatever versions they require.

The SCM tool also makes it possible for developers to find and check out the latest version of the code under development. SCM is an important capability as the developers must have confidence that they are working from the most current code set. If they pick up an old code set, there are likely to be numerous changes that can negatively impact the code they are developing. SCM tools also make it easy for the members of the development team to see the completed work to date, work in the queue, and work in progress.

Stories

There are many approaches to documenting business and user requirements. Under traditional development practices, requirements documentation tends to have a focus on analyzing what a product must do to fulfill business and user needs. In other words, the focus of requirements analysis is on the product. In contrast, story-based formats provide a brief elaboration on what customers and end-users want to do. In other words, what are the capabilities they need to do the work of their organizations?

It's a subtle distinction, but think of it this way: customers don't care as much about the list of product features as they care about what they can do with the product's features.

The basic format of a story is as follows:

As a <type of user> I want to <desired capability>, so that <expected result or benefit>.
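
To make the three parts of the format concrete, here is a minimal sketch that captures a story as a small data structure and renders it in the format shown above. The class name Story, its field names, and the sample story are all hypothetical and chosen only for illustration; teams record stories in many different ways.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Story:
    """One story, split into the three parts of the basic format."""

    user_type: str   # who wants the capability
    capability: str  # what they want to do
    benefit: str     # the expected result or benefit

    def render(self) -> str:
        # Follows the basic story format given in the text.
        return (
            f"As a {self.user_type} I want to {self.capability}, "
            f"so that {self.benefit}."
        )


# Hypothetical example story.
story = Story(
    user_type="frequent traveler",
    capability="save my payment details",
    benefit="I can book trips faster",
)
print(story.render())
```

Keeping the user, the capability, and the benefit as separate fields keeps the focus on what the customer wants to accomplish rather than on a list of product features.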

Sustainable workflows

The eighth principle of the Agile Manifesto states: "Agile processes promote sustainable development. The sponsors, developers, and users should be able to maintain a constant pace indefinitely" [Kent Beck, et al. © 2001. Manifesto for Agile Software Development. http://agilemanifesto.org/. Accessed 10 November 2019]. Unfortunately, this objective is not always achieved, but it is the desired goal.

There are several keys to sustainability in iterative development environments. First, the team should never overcommit. They need the skills and resources to do the work required to support the development of the product. They need direct access to customers and users so they can fully assess the feature requirements. The team needs to develop heuristics for estimating the amount of work they can complete in a development iteration. Finally, the team must be in control of stating how much work they can accomplish in any development iteration.
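
As one example of such an estimating heuristic, a team might average the amount of work it actually completed over its last few iterations and use that figure as a ceiling for its next commitment. The sketch below assumes work is sized in some numeric unit (called "points" here); the function name forecast_capacity, the three-iteration window, and the sample history are assumptions made for illustration, not a prescribed method.

```python
from statistics import mean
from typing import Sequence


def forecast_capacity(completed_points: Sequence[int], window: int = 3) -> float:
    """Average the work completed in the most recent iterations.

    completed_points lists the work finished in each past iteration,
    oldest first. Only the last `window` iterations are averaged.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if not completed_points:
        raise ValueError("need at least one completed iteration")
    recent = completed_points[-window:]
    return mean(recent)


# Hypothetical history of completed work, oldest iteration first.
history = [30, 21, 18, 24]
print(forecast_capacity(history))
```

Because the forecast is based on what the team actually finished rather than on what it was asked to do, it supports the point that the team itself should state how much work it can accomplish in an iteration.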

Testing (test-driven and automated)

Testing is an important component of the overall software development life cycle process regardless of whether the development team is following the traditional approach or an agile-based development approach. Testing is a set of quality assurance (QA) activities that confirms the product features meet the business, user, functional, and nonfunctional requirements.

The primary difference between testing practices in the traditional model and those found in agile is the amount of work taken on before testing. As noted in previous sections, the more the development team attempts to implement new functionality before testing, the more likely there is to be an accumulation of bugs and defects that are difficult to locate and resolve. The iterative development nature of agile practices helps reduce the complexity of debugging a software product by limiting the amount of functionality implemented between test runs.

Another important aspect of modern agile practices is to develop a test for each piece of code before writing the code. This practice is referred to as test-driven development.

Traditionally, the code is written first and then tested. In the traditional approach, the challenge is to develop a test, after the fact, that accurately supports the original requirement, as opposed to writing a test that simply verifies that the code does what the developer wrote it to do.

Test-driven development puts the focus on initially creating a test that fails unless the code properly implements the underlying functional requirement. It's not uncommon to have a developer run the test before they write the code to make sure it does fail, thus ensuring there is not a conflict with other code or that other code already fulfills the requirement. Next, the developer creates the code and runs the test again. If the test fails, the developer has not properly implemented the requirement. If the test passes, the developer has confidence they have properly implemented the requirements and that their code works.
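
The cycle described above can be sketched with Python's built-in unittest module. The requirement here is hypothetical: "apply a percentage discount to a price held in whole cents, and reject discounts outside 0 to 100." The function name apply_discount and the test names are assumptions made for illustration. In practice, the test class would be written and run first, failing because apply_discount does not yet exist, and only then would the function be added.

```python
import unittest


# Step 2: written only after the tests below were seen to fail.
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the discounted price in whole cents (rounded down)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


# Step 1: the tests encode the requirement, not the implementation.
class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(20000, 10), 18000)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(20000, 0), 20000)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(20000, -5)


# Step 3: run the tests again; they should now pass.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Note that each test states an expected outcome taken from the requirement, so a passing run gives the developer evidence that the requirement is met, not merely that the code runs.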

Testing environments are another critical success factor in software development regardless of the software development methodology used. Sometimes, organizations take shortcuts due to costs and do not stand up dedicated test environments, forcing the development team to run their tests on their development environments or in the production environments. The problem with running tests on the development environments is that those computing systems likely do not replicate the exact conditions the software must operate in on the production environments. The problem with running tests on the production environments is that a bug could potentially bring the system down.

The only way around the problems associated with testing in the production environment is to create a clone of the production environment. Again, modern PaaS offerings make it easier and less costly to stand up a development or test environment that mirrors the conditions found in production environments. Likewise, virtualization makes it possible to set up independent testing environments within a set of clustered servers. Performance testing in test environments helps duplicate the demand loads expected in production environments.

Tools

The introduction of software programming tools came along almost concurrently with the introduction of electronic computing equipment. The primary focus of programming tools is to convert human-readable instructions into machine-readable instructions. The modern instantiation of software programming tools includes text editors and IDEs.