In this chapter, we will cover the following recipes:

Setting up Anaconda

Installing the Data Science Toolbox

Creating a virtual environment with virtualenv and virtualenvwrapper

Sandboxing Python applications with Docker images

Keeping track of package versions and history in IPython Notebooks

Configuring IPython

Learning to log for robust error checking

Unit testing your code

Configuring pandas

Configuring matplotlib

Seeding random number generators and NumPy print options

Standardizing reports, code style, and data access

Reproducible data analysis is a cornerstone of good science. In today's rapidly evolving world of science and technology, reproducibility is a hot topic. Reproducibility is about lowering barriers for other people. It may seem strange or unnecessary, but reproducible analysis is essential to get your work acknowledged by others. If a lot of people confirm your results, it will have a positive effect on your career. However, reproducible analysis is hard. A lack of reproducibility also has important economic consequences, as you can read in Freedman LP, Cockburn IM, Simcoe TS (2015) The Economics of Reproducibility in Preclinical Research. PLoS Biol 13(6): e1002165. doi:10.1371/journal.pbio.1002165.

So reproducibility is important for society and for you, but how does it apply to Python users? Well, we want to lower barriers for others by:

Giving information about the software and hardware we used, including versions.

Sharing virtual environments.

Logging program behavior.

Unit testing the code. This also serves as documentation of sorts.

Sharing configuration files.

Seeding random generators and making sure program behavior is as deterministic as possible.

Standardizing reporting, data access, and code style.
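As a small illustration of the seeding item in the list above, the following sketch uses only the standard library random module. The draw_sample helper is a hypothetical name introduced here for the example; it is not part of dautil.

```python
import random


def draw_sample(seed, n=5):
    """Return n pseudo-random floats from a generator seeded with `seed`."""
    # A dedicated Random instance avoids touching the global generator state.
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


first = draw_sample(42)
second = draw_sample(42)
other = draw_sample(43)

# The same seed reproduces the same sequence; a different seed does not.
print(first == second)
print(first == other)
```

Using a separate generator object per task, rather than the module-level functions, keeps one part of a program from silently changing the random stream of another.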

I created the dautil package for this book, which you can install with pip or from the source archive provided in this book's code bundle. If you are in a hurry, run $ python install_ch1.py to install most of the software for this chapter, including dautil. I created a test Docker image, which you can use if you don't want to install anything except Docker (see the recipe, Sandboxing Python applications with Docker images).

Anaconda is a free Python distribution for data analysis and scientific computing. It has its own package manager, conda. The distribution includes more than 200 Python packages, which makes it very convenient. For casual users, the Miniconda distribution may be the better choice. Miniconda contains the conda package manager and Python. The technical editors use Anaconda, and so do I. But don't worry, I will describe alternative installation instructions in this book for readers who are not using Anaconda. In this recipe, we will install Anaconda and Miniconda and create a virtual environment.

The procedures to install Anaconda and Miniconda are similar. Obviously, Anaconda requires more disk space. Follow the instructions on the Anaconda website at http://conda.pydata.org/docs/install/quick.html (retrieved Mar 2016). First, you have to download the appropriate installer for your operating system and Python version. Sometimes, you can choose between a GUI and a command-line installer. I used the Python 3.4 installer, although my system Python version is v2.7. This is possible because Anaconda comes with its own Python. On my machine, the Anaconda installer created an anaconda directory in my home directory and required about 900 MB. The Miniconda installer installs a miniconda directory in your home directory.

Now that Anaconda or Miniconda is installed, list the packages with the following command:

$ conda list

For reproducibility, it is good to know that we can export packages:

$ conda list --export

The preceding command prints packages and versions on the screen, which you can save in a file. You can install these packages with the following command:

$ conda create -n ch1env --file <export file>

This command also creates an environment named ch1env.

The following command creates a simple testenv environment:

$ conda create --name testenv python=3

On Linux and Mac OS X, switch to this environment with the following command:

$ source activate testenv

On Windows, we don't need source. The syntax to switch back is similar:

$ [source] deactivate

The following command prints export information for the environment in the YAML (explained in the following section) format:

$ conda env export -n testenv

To remove the environment, type the following (note that even after removing, the name of the environment still exists in ~/.conda/environments.txt):

$ conda remove -n testenv --all

Search for a package as follows:

$ conda search numpy

In this example, we searched for the NumPy package. If NumPy is already present, Anaconda shows an asterisk in the output at the corresponding entry.

Update the distribution as follows:

$ conda update conda
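To compare two package lists saved with the conda list --export command from earlier in this recipe, it can help to parse them into a dictionary. The sketch below assumes that the export consists of comment lines starting with # and entries of the form name=version=build. The sample text and the parse_export helper are made up for illustration.

```python
def parse_export(text):
    """Map package names to versions from `conda list --export` style text."""
    versions = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the comment header.
        if not line or line.startswith('#'):
            continue
        parts = line.split('=')
        if len(parts) >= 2:
            versions[parts[0]] = parts[1]
    return versions


# Hypothetical sample, not the output of a real installation.
sample = """# platform: linux-64
numpy=1.9.2=py34_0
pandas=0.16.2=np19py34_0
"""

packages = parse_export(sample)
print(packages)
```

Two such dictionaries can then be compared key by key to spot version differences between machines.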

The .condarc configuration file follows the YAML syntax.

Note

YAML is a human-readable configuration file format with the extension .yaml or .yml. YAML was initially released in 2001, with the latest release in 2009. The YAML homepage is at http://yaml.org/ (retrieved July 2015).

You can find a sample configuration file at http://conda.pydata.org/docs/install/sample-condarc.html (retrieved July 2015). The related documentation is at http://conda.pydata.org/docs/install/config.html (retrieved July 2015).

Martins, L. Felipe (November 2014). IPython Notebook Essentials (1st Edition.). Packt Publishing. p. 190. ISBN 1783988347

The conda user cheat sheet at http://conda.pydata.org/docs/_downloads/conda-cheatsheet.pdf (retrieved July 2015)

The Data Science Toolbox (DST) is a virtual environment based on Ubuntu for data analysis using Python and R. Since DST is a virtual environment, we can install it on various operating systems. We will install DST locally, which requires VirtualBox and Vagrant. VirtualBox is a virtual machine application originally created by Innotek GmbH in 2007. Vagrant is a wrapper around virtual machine applications such as VirtualBox created by Mitchell Hashimoto.

You need on the order of 2 to 3 GB of free disk space for VirtualBox, Vagrant, and DST itself. This may vary by operating system.

Installing DST requires the following steps:

Install VirtualBox by downloading an installer for your operating system and architecture from https://www.virtualbox.org/wiki/Downloads (retrieved July 2015) and running it. I installed VirtualBox 4.3.28-100309 myself, but you can just install whatever the most recent VirtualBox version at the time is.

Install Vagrant by downloading an installer for your operating system and architecture from https://www.vagrantup.com/downloads.html (retrieved July 2015). I installed Vagrant 1.7.2 and again you can install a more recent version if available.

Create a directory to hold the DST and navigate to it with a terminal. Run the following command:

$ vagrant init data-science-toolbox/dst
$ vagrant up

The first command creates a Vagrantfile configuration file. Most of the content is commented out, but the file does contain links to documentation that might be useful. The second command creates the DST and initiates a download that could take a couple of minutes.

Connect to the virtual environment as follows (on Windows use PuTTY):

$ vagrant ssh

View the preinstalled Python packages with the following command:

vagrant@data-science-toolbox:~$ pip freeze

The list is quite long; in my case it contained 32 packages. The DST Python version as of July 2015 was 2.7.6.

When you are done with the DST, log out and suspend (you can also halt it completely) the VM:

vagrant@data-science-toolbox:~$ logout
Connection to 127.0.0.1 closed.
$ vagrant suspend
==> default: Saving VM state and suspending execution...

Virtual machines (VMs) emulate computers in software. VirtualBox is an application that creates and manages VMs. VirtualBox stores its VMs in your home folder, and this particular VM takes about 2.2 GB of storage.

Ubuntu is an open source Linux operating system, and we are allowed by its license to create virtual machines. Ubuntu has several versions; we can get more info with the lsb_release command:

vagrant@data-science-toolbox:~$ lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description: Ubuntu 14.04 LTS
Release: 14.04
Codename: trusty

Vagrant used to only work with VirtualBox, but currently it also supports VMware, KVM, Docker, and Amazon EC2. Vagrant calls virtual machines boxes. Some of these boxes are available for everyone at http://www.vagrantbox.es/ (retrieved July 2015).

Run Ubuntu Linux Within Windows Using VirtualBox at http://linux.about.com/od/howtos/ss/Run-Ubuntu-Linux-Within-Windows-Using-VirtualBox.htm#step11 (retrieved July 2015)

VirtualBox manual chapter 10 Technical Information at https://www.virtualbox.org/manual/ch10.html (retrieved July 2015)

Virtual environments provide dependency isolation for small projects. They also keep your site-packages directory small. Since Python 3.3, the venv module, which provides the core functionality of virtualenv, has been part of the standard Python distribution. The virtualenvwrapper Python project has some extra convenient features for virtual environment management. I will demonstrate virtualenv and virtualenvwrapper functionality in this recipe.

You need Python 3.3 or later. You can install virtualenvwrapper with the pip command as follows:

$ [sudo] pip install virtualenvwrapper

On Linux and Mac, it's necessary to do some extra work—specifying a directory for the virtual environments and sourcing a script:

$ export WORKON_HOME=/tmp/envs
$ source /usr/local/bin/virtualenvwrapper.sh

Windows has a separate version, which you can install with the following command:

$ pip install virtualenvwrapper-win

Create a virtual environment for a given directory with the pyvenv script part of your Python distribution:

$ pyvenv /tmp/testenv
$ ls
bin include lib pyvenv.cfg

In this example, we created a testenv directory in the /tmp directory with several directories and a configuration file. The configuration file pyvenv.cfg contains the Python version and the home directory of the Python distribution.

Activate the environment on Linux or Mac by sourcing the activate script, for example, with the following command:

$ source bin/activate

On Windows, use the activate.bat file.

You can now install packages in this environment in isolation. When you are done with the environment, switch back on Linux or Mac with the following command:

$ deactivate

On Windows, use the deactivate.bat file.

Alternatively, you could use virtualenvwrapper. Create and switch to a virtual environment with the following command:

vagrant@data-science-toolbox:~$ mkvirtualenv env2

Deactivate the environment with the deactivate command:

(env2)vagrant@data-science-toolbox:~$ deactivate

Delete the environment with the rmvirtualenv command:

vagrant@data-science-toolbox:~$ rmvirtualenv env2
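Environments can also be created from Python code with the standard library venv module. The following is a minimal sketch rather than a replacement for the commands in this recipe. It assumes a throwaway temporary directory, and it skips pip installation to keep the example fast.

```python
import os
import tempfile
import venv

# Create the environment in a throwaway directory (path chosen for the demo).
env_dir = os.path.join(tempfile.mkdtemp(), 'testenv')

# with_pip=False keeps the example fast and avoids needing ensurepip.
venv.create(env_dir, with_pip=False)

cfg_path = os.path.join(env_dir, 'pyvenv.cfg')
with open(cfg_path) as cfg:
    settings = dict(
        (key.strip(), value.strip())
        for key, _, value in (line.partition('=') for line in cfg)
        if key.strip()
    )

# pyvenv.cfg records the home directory and version of the base Python.
print(settings.get('home'))
print(settings.get('version'))
```

Reading pyvenv.cfg this way is a quick check of which Python installation an environment was built from.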

The Python standard library documentation for virtual environments at https://docs.python.org/3/library/venv.html#creating-virtual-environments (retrieved July 2015)

The virtualenvwrapper documentation is at https://virtualenvwrapper.readthedocs.org/en/latest/index.html (retrieved July 2015)

Docker uses Linux kernel features to provide an extra virtualization layer. Docker was created in 2013 by Solomon Hykes. Boot2Docker allows us to install Docker on Windows and Mac OS X too. Boot2Docker uses a VirtualBox VM that contains a Linux environment with Docker. In this recipe, we will set up Docker and download the continuumio/miniconda3 Docker image.

The Docker installation docs are saved at https://docs.docker.com/index.html (retrieved July 2015). I installed Docker 1.7.0 with Boot2Docker. The installer requires about 133 MB. However, if you want to follow the whole recipe, you will need several gigabytes.

Once Boot2Docker is installed, you need to initialize the environment. This is only necessary once, and Linux users don't need this step:

$ boot2docker init
Latest release for github.com/boot2docker/boot2docker is v1.7.0
Downloading boot2docker ISO image...
Success: downloaded https://github.com/boot2docker/boot2docker/releases/download/v1.7.0/boot2docker.iso

In the preceding step, you downloaded a VirtualBox VM to a directory such as /VirtualBox\ VMs/boot2docker-vm/.

The next step for Mac OS X and Windows users is to start the VM:

$ boot2docker start

Check the Docker environment by starting a sample container:

$ docker run hello-world

Docker images can be made public. We can search for such images and download them. In Setting up Anaconda, we installed Anaconda; however, Anaconda and Miniconda Docker images also exist. Use the following command:

$ docker search continuumio

The preceding command shows a list of Docker images from Continuum Analytics, the company that developed Anaconda and Miniconda. Download the Miniconda 3 Docker image as follows (if you prefer using my container, skip this):

$ docker pull continuumio/miniconda3

Start the image with the following command:

$ docker run -t -i continuumio/miniconda3 /bin/bash

We start out as root in the image.

The command $ docker images should list the continuumio/miniconda3 image as well. If you prefer not to install too much software (possibly only Docker and Boot2Docker) for this book, you should use the image I created. It uses the continuumio/miniconda3 image as template. This image allows you to execute Python scripts in the current working directory on your computer, while using installed software from the Docker image:

$ docker run -it -p 8888:8888 -v $(pwd):/usr/data -w /usr/data "ivanidris/pydacbk:latest" python <somefile>.py

You can also run an IPython notebook in your current working directory with the following command:

$ docker run -it -p 8888:8888 -v $(pwd):/usr/data -w /usr/data "ivanidris/pydacbk:latest" sh -c "ipython notebook --ip=0.0.0.0 --no-browser"

Then, go to either http://192.168.59.103:8888 or http://localhost:8888 to view the IPython home screen. You might have noticed that the command lines are quite long, so I will post additional tips and tricks to make life easier on https://pythonhosted.org/dautil (work in progress).

The Boot2Docker VM shares the /Users directory on Mac OS X and the C:\Users directory on Windows. In general and on other operating systems, we can mount directories and copy files from the container as described in https://docs.docker.com/userguide/dockervolumes/ (retrieved July 2015).

Shut down the VM (unless you are on Linux, where you use the docker command instead) with the following command:

$ boot2docker down

Docker Hub acts as a central registry for public and private Docker images. In this recipe, we downloaded images via this registry. To push an image to Docker Hub, we need a Docker Hub account and a repository to push to. The way Docker Hub works is in many ways comparable to the way source code repositories such as GitHub work. You can commit changes as well as push, pull, and tag images. The continuumio/miniconda3 image is configured with a special file, which you can find at https://github.com/ContinuumIO/docker-images/blob/master/miniconda3/Dockerfile (retrieved July 2015). In this file, you can read which image was used as base, the name of the maintainer, and the commands used to build the image.

The Docker user guide at http://docs.docker.com/userguide/ (retrieved July 2015)

The IPython Notebook was added to IPython 0.12 in December 2011. Many Pythonistas feel that the IPython Notebook is essential for reproducible data analysis. The IPython Notebook is comparable to commercial products such as Mathematica, MATLAB, and Maple. It is an interactive web browser-based environment. In this recipe, we will see how to keep track of package versions and store IPython sessions in the context of reproducible data analysis. By the way, the IPython Notebook has been renamed Jupyter Notebook.

For this recipe, you will need a recent IPython installation. The instructions to install IPython are at http://ipython.org/install.html (retrieved July 2015). Install it using the pip command:

$ [sudo] pip install ipython/jupyter

If you have installed IPython via Anaconda already, check for updates with the following commands:

$ conda update conda
$ conda update ipython ipython-notebook ipython-qtconsole

I have IPython 3.2.0 as part of the Anaconda distribution.

We will log a Python session and use the watermark extension to track package versions and other information. Start an IPython shell or notebook. When we start a session, we can use the command line switch --logfile=<file name>.py. In this recipe, we use the %logstart magic (IPython terminology) function:

In [1]: %logstart cookbook_log.py rotate
Activating auto-logging. Current session state plus future input saved.
Filename       : cookbook_log.py
Mode           : rotate
Output logging : False
Raw input log  : False
Timestamping   : False
State          : active

This example invocation started logging to a file in rotate mode. Both the filename and mode are optional. Turn logging off and back on again as follows:

In [2]: %logoff
Switching logging OFF
In [3]: %logon
Switching logging ON

Install the watermark magic from GitHub with the following command:

In [4]: %install_ext https://raw.githubusercontent.com/rasbt/watermark/master/watermark.py

The preceding line downloads a Python file, in my case, to ~/.ipython/extensions/watermark.py. Load the extension by typing the following line:

%load_ext watermark

The extension can place timestamps as well as software and hardware information. Get additional usage documentation and version (I installed watermark 1.2.2) with the following command:

%watermark?

For example, call watermark without any arguments:

In [7]: %watermark
… Omitting time stamp …
CPython 3.4.3
IPython 3.2.0
compiler : Omitting
system : Omitting
release : 14.3.0
machine : x86_64
processor : i386
CPU cores : 8
interpreter: 64bit

I omitted the timestamp and other information for personal reasons. A more complete example follows with author name (-a), versions of packages specified as a comma-separated string (-p), and custom time (-c) in a strftime() based format:

In [8]: %watermark -a "Ivan Idris" -v -p numpy,scipy,matplotlib -c '%b %Y' -w
Ivan Idris 'Jul 2015'
CPython 3.4.3
IPython 3.2.0
numpy 1.9.2
scipy 0.15.1
matplotlib 1.4.3
watermark v. 1.2.2
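Outside IPython, similar information can be gathered with the standard library. The following is only a rough stand-in for the watermark extension, not its implementation. The environment_summary function and its dictionary keys are names chosen for this sketch.

```python
import os
import platform
import time


def environment_summary():
    """Collect interpreter and machine details similar to a watermark."""
    return {
        'timestamp': time.strftime('%b %Y'),
        'implementation': platform.python_implementation(),
        'python': platform.python_version(),
        'system': platform.system(),
        'release': platform.release(),
        'machine': platform.machine(),
        'cpu_cores': os.cpu_count(),
        'interpreter': platform.architecture()[0],
    }


summary = environment_summary()
for key, value in summary.items():
    print('%-15s: %s' % (key, value))
```

A plain script could write such a summary at the top of its output so that results can be traced back to the environment that produced them.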

The IPython logger writes commands you type to a Python file. Most of the lines are in the following format:

get_ipython().magic('STRING_YOU_TYPED')

You can replay the session with %load <log file>. The logging modes are described in the following table:

| Mode | Description |
|---|---|
| over | This mode overwrites existing log files. |
| backup | If a log file exists with the same name, the old file is renamed. |
| append | This mode appends lines to already existing files. |
| rotate | This mode rotates log files by incrementing numbers, so that log files don't get too big. |

We used a custom magic function available on the Internet. The code for the function is in a single Python file and it should be easy for you to follow. If you want different behavior, you just need to modify the file.

The custom magics documentation at http://ipython.org/ipython-doc/dev/config/custommagics.html (retrieved July 2015)

Helen Shen (2014). Interactive notebooks: Sharing the code. Nature 515 (7525): 151–152. doi:10.1038/515151a

IPython reference documentation at https://ipython.org/ipython-doc/dev/interactive/reference.html (retrieved July 2015)

IPython has an elaborate configuration and customization system. The components of the system are as follows:

IPython provides default profiles, but we can create our own profiles

Various settable options for the shell, kernel, Qt console, and notebook

Customization of prompts and colors

Extensions we saw in Keeping track of package versions and history in IPython notebooks

Startup files per profile

I will demonstrate some of these components in this recipe.

You need IPython for this recipe, so (if necessary) have a look at the Getting ready section of Keeping track of package versions and history in IPython notebooks.

Let's start with a startup file. I have a directory in my home directory at .ipython/profile_default/startup, which belongs to the default profile. This directory is meant for startup files. IPython detects Python files in this directory and executes them in lexical order of filenames. Because of the lexical order, it is convenient to name the startup files with a combination of digits and strings, for example, 0000-watermark.py. Put the following code in the startup file:

get_ipython().magic('%load_ext watermark')
get_ipython().magic('watermark -a "Ivan Idris" -v -p numpy,scipy,matplotlib -c \'%b %Y\' -w')

This startup file loads the extension we used in Keeping track of package versions and history in IPython notebooks and shows information about package versions. Other use cases include importing modules and defining functions. IPython stores commands in a SQLite database, so you could gather statistics to find common usage patterns. The following script prints source lines and associated counts from the database for the default profile sorted by counts (the code is in the ipython_history.py file in this book's code bundle):

import sqlite3
from IPython.utils.path import get_ipython_dir
import pprint
import os


def print_history(file):
    with sqlite3.connect(file) as con:
        c = con.cursor()
        c.execute("SELECT count(source_raw) as csr,\
                  source_raw FROM history\
                  GROUP BY source_raw\
                  ORDER BY csr")
        result = c.fetchall()
        pprint.pprint(result)
        c.close()

hist_file = '%s/profile_default/history.sqlite' % get_ipython_dir()

if os.path.exists(hist_file):
    print_history(hist_file)
else:
    print("%s doesn't exist" % hist_file)

The highlighted SQL query does the bulk of the work. The code is self-explanatory. If it is not clear, I recommend reading Chapter 8, Text Mining and Social Network, of my book Python Data Analysis, Packt Publishing.
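To try the counting query without touching a real IPython history file, here is a minimal sketch against an in-memory SQLite database. The single-column table is a simplified, assumed schema and the sample commands are invented; the real history database contains more columns.

```python
import sqlite3

# In-memory database with a simplified, assumed schema: only source_raw.
con = sqlite3.connect(':memory:')
con.execute('CREATE TABLE history (source_raw TEXT)')
commands = ['import numpy as np', '%watermark', 'import numpy as np',
            'df.head()', 'import numpy as np', '%watermark']
con.executemany('INSERT INTO history VALUES (?)',
                [(cmd,) for cmd in commands])

# Same shape of query as in print_history(): count per distinct line,
# ordered from least to most frequent.
rows = con.execute('SELECT count(source_raw) AS csr, source_raw '
                   'FROM history GROUP BY source_raw '
                   'ORDER BY csr').fetchall()
con.close()

for count, source in rows:
    print(count, source)
```

Each result row pairs a count with the command text, so the most frequently typed commands end up at the bottom of the list.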

The other configuration option I mentioned is profiles. We can use the default profiles or create our own profiles on a per project or functionality basis. Profiles act as sandboxes and you can configure them separately. Here's the command to create a profile:

$ ipython profile create [newprofile]

The configuration files are Python files and their names end with _config.py. In these files, you can set various IPython options. Set the option to automatically log the IPython session as follows:

c = get_config()
c.TerminalInteractiveShell.logstart=True

The first line is usually included in configuration files and gets the root IPython configuration object. The last line tells IPython that we want to start logging immediately on startup so you don't have to type %logstart.

Alternatively, you can also set the log file name with the following command:

c.TerminalInteractiveShell.logfile='mylog_file.py'

You can also use the following configuration line that ensures logging in append mode:

c.TerminalInteractiveShell.logappend='mylog_file.py'

Introduction to IPython configuration at http://ipython.org/ipython-doc/dev/config/intro.html#profiles (retrieved July 2015)

Terminal IPython options documentation at http://ipython.org/ipython-doc/dev/config/options/terminal.html (retrieved July 2015)

Notebooks are useful to keep track of what you did and what went wrong. Logging works in a similar fashion, and we can log errors and other useful information with the standard Python logging library.

For reproducible data analysis, it is good to know the modules our Python scripts import. In this recipe, I will introduce a minimal API from dautil that logs package versions of imported modules in a best effort manner.

In this recipe, we import NumPy and pandas, so you may need to install them. See the Configuring pandas recipe for pandas installation instructions. Installation instructions for NumPy can be found at http://docs.scipy.org/doc/numpy/user/install.html (retrieved July 2015). Alternatively, install NumPy with pip using the following command:

$ [sudo] pip install numpy

The command for Anaconda users is as follows:

$ conda install numpy

I have installed NumPy 1.9.2 via Anaconda. We also require AppDirs to find the appropriate directory to store logs. Install it with the following command:

$ [sudo] pip install appdirs

I have AppDirs 1.4.0 on my system.

To log, we need to create and set up loggers. We can either set up the loggers with code or use a configuration file. Configuring loggers with code is the more flexible option, but configuration files tend to be more readable. I use the log.conf configuration file from dautil:

[loggers]
keys=root

[handlers]
keys=consoleHandler,fileHandler

[formatters]
keys=simpleFormatter

[logger_root]
level=DEBUG
handlers=consoleHandler,fileHandler

[handler_consoleHandler]
class=StreamHandler
level=INFO
formatter=simpleFormatter
args=(sys.stdout,)

[handler_fileHandler]
class=dautil.log_api.VersionsLogFileHandler
formatter=simpleFormatter
args=('versions.log',)

[formatter_simpleFormatter]
format=%(asctime)s - %(name)s - %(levelname)s - %(message)s
datefmt=%d-%b-%Y

The file configures a logger to log to a file with the DEBUG level and to the screen with the INFO level. So, the logger logs more to the file than to the screen. The file also specifies the format of the log messages. I created a tiny API in dautil, which creates a logger with its get_logger() function and uses it to log the package versions of a client program with its log() function. The code is in the log_api.py file of dautil:

from pkg_resources import DistributionNotFound
from pkg_resources import get_distribution
from pkg_resources import resource_filename
import logging
import logging.config
import pprint
from appdirs import AppDirs
import os


def get_logger(name):
    log_config = resource_filename(__name__, 'log.conf')
    logging.config.fileConfig(log_config)
    logger = logging.getLogger(name)

    return logger


def shorten(module_name):
    dot_i = module_name.find('.')

    return module_name[:dot_i]


def log(modules, name):
    skiplist = ['pkg_resources', 'distutils']

    logger = get_logger(name)
    logger.debug('Inside the log function')

    for k in modules.keys():
        str_k = str(k)

        if '.version' in str_k:
            short = shorten(str_k)

            if short in skiplist:
                continue

            try:
                logger.info('%s=%s' % (short,
                            get_distribution(short).version))
            except (ImportError, DistributionNotFound):
                # get_distribution() raises DistributionNotFound for
                # unknown packages; pass the name as a logging argument
                logger.warning('Could not import %s', short)


class VersionsLogFileHandler(logging.FileHandler):
    def __init__(self, fName):
        dirs = AppDirs("PythonDataAnalysisCookbook",
                       "Ivan Idris")
        path = dirs.user_log_dir
        print(path)

        if not os.path.exists(path):
            os.mkdir(path)

        super(VersionsLogFileHandler, self).__init__(
            os.path.join(path, fName))

The program that uses the API is in the log_demo.py file in this book's code bundle:

import sys
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd
from dautil import log_api

log_api.log(sys.modules, sys.argv[0])

We configured a handler (VersionsLogFileHandler) that writes to file and a handler (StreamHandler) that displays messages on the screen. StreamHandler is a class in the Python standard library. To configure the format of the log messages, we used a formatter named simpleFormatter, which by default is an instance of the Formatter class from the Python standard library.
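For comparison, the same kind of setup can be written in code instead of a configuration file. The following is a sketch that mirrors the levels and format of log.conf but is not the dautil implementation: it uses a plain FileHandler, a hypothetical build_logger helper, and a temporary directory as the log location.

```python
import logging
import os
import sys
import tempfile


def build_logger(name, log_path):
    """Create a logger that sends INFO+ to the screen and DEBUG+ to a file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False

    formatter = logging.Formatter(
        '%(asctime)s - %(name)s - %(levelname)s - %(message)s',
        datefmt='%d-%b-%Y')

    console = logging.StreamHandler(sys.stdout)
    console.setLevel(logging.INFO)
    console.setFormatter(formatter)

    to_file = logging.FileHandler(log_path)
    to_file.setLevel(logging.DEBUG)
    to_file.setFormatter(formatter)

    logger.addHandler(console)
    logger.addHandler(to_file)
    return logger


# Hypothetical log location in a temporary directory.
log_path = os.path.join(tempfile.mkdtemp(), 'versions.log')
demo_logger = build_logger('demo', log_path)
demo_logger.debug('Only written to the file')
demo_logger.info('Written to the file and the screen')

# Flush and release the handlers before reading the file back.
for handler in list(demo_logger.handlers):
    handler.close()
    demo_logger.removeHandler(handler)

with open(log_path) as log_file:
    file_lines = log_file.read().splitlines()
print(len(file_lines))
```

The handler levels do the filtering here: the logger itself accepts everything from DEBUG upward, and each handler decides what it passes on.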

The API I made goes through modules listed in the sys.modules variable and tries to get the versions of the modules. Some of the modules are not relevant for data analysis, so we skip them. The log() function of the API logs a DEBUG level message with the debug() method. The info() method logs the package version at INFO level.
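On Python 3.8 and later, the standard library importlib.metadata module offers a comparable version lookup. This is a swapped-in technique for illustration and not what dautil uses; installed_version is a hypothetical helper, and the package names are only examples.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version of `package`, or None if it is unknown."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# 'no-such-package-xyz' is a made-up name used to show the fallback.
for candidate in ['numpy', 'pandas', 'no-such-package-xyz']:
    print('%s=%s' % (candidate, installed_version(candidate)))
```

Returning None for unknown packages keeps the best effort character of the API: a missing version should not stop the analysis itself.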

The logging tutorial at https://docs.python.org/3.5/howto/logging.html (retrieved July 2015)

The logging cookbook at https://docs.python.org/3.5/howto/logging-cookbook.html#logging-cookbook (retrieved July 2015)

If code doesn't do what you want, it's hard to do reproducible data analysis. One way to gain control of your code is to test it. If you have tested code manually, you know it is repetitive and boring. When a task is boring and repetitive, you should automate it.

Unit testing automates testing and I hope you are familiar with it. When you learn unit testing for the first time, you start with simple tests such as comparing strings or numbers. However, you hit a wall when file I/O or other resources come into the picture. It turns out that in Python we can mock resources or external APIs easily. The packages needed are even part of the standard Python library. In the Learning to log for robust error checking recipe, we logged messages to a file. If we unit test this code, we don't want to trigger logging from the test code. In this recipe, I will show you how to mock the logger and other software components we need.

The code for this recipe is in the test_log_api.py file of dautil. We start by importing the module under test and the Python functionality we need for unit testing:

from dautil import log_api
import unittest
from unittest.mock import create_autospec
from unittest.mock import patch

Define a class that contains the test code:

class TestLogApi(unittest.TestCase):

Make the unit tests executable with the following lines:

if __name__ == '__main__':
    unittest.main()

If we call Python functions with the wrong number of arguments, we expect to get a TypeError. The following tests check for that:

    def test_get_logger_args(self):
        mock_get_logger = create_autospec(log_api.get_logger, return_value=None)
        mock_get_logger('test')
        mock_get_logger.assert_called_once_with('test')

    def test_log_args(self):
        mock_log = create_autospec(log_api.log, return_value=None)
        mock_log([], 'test')
        mock_log.assert_called_once_with([], 'test')

        with self.assertRaises(TypeError):
            mock_log()

        with self.assertRaises(TypeError):
            mock_log('test')

We used the unittest.mock.create_autospec() function to mock the functions under test. Mock the Python logging package as follows:

    @patch('dautil.log_api.logging')
    def test_get_logger_fileConfig(self, mock_logging):
        log_api.get_logger('test')
        self.assertTrue(mock_logging.config.fileConfig.called)

The @patch decorator replaces logging with a mock. We can also patch with similarly named functions. The patching trick is quite useful. Test our log() function, with get_logger() replaced by a mock, using the following method:

    @patch('dautil.log_api.get_logger')
    def test_log_debug(self, amock):
        log_api.log({}, 'test')
        self.assertTrue(amock.return_value.debug.called)
        amock.return_value.debug.assert_called_once_with(
            'Inside the log function')

The previous lines check whether debug() was called and with which arguments. The following two test methods demonstrate how to use multiple @patch decorators:

    @patch('dautil.log_api.get_distribution')
    @patch('dautil.log_api.get_logger')
    def test_numpy(self, m_get_logger, m_get_distribution):
        log_api.log({'numpy.version': ''}, 'test')
        m_get_distribution.assert_called_once_with('numpy')
        self.assertTrue(m_get_logger.return_value.info.called)

    @patch('dautil.log_api.get_distribution')
    @patch('dautil.log_api.get_logger')
    def test_distutils(self, amock, m_get_distribution):
        log_api.log({'distutils.version': ''}, 'test')
        self.assertFalse(m_get_distribution.called)

Mocking is a technique to spy on objects and functions. We substitute them with our own spies, which we give just enough information to avoid detection. The spies report to us who contacted them and any useful information they received.
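The spying idea can be tried without dautil installed. The sketch below is self-contained and uses a hypothetical greet() function as the code under test; it is meant as an illustration of patch() and create_autospec(), not as part of the book's test suite.

```python
from unittest.mock import create_autospec
from unittest.mock import patch
import logging


def greet(name):
    """Hypothetical function under test that logs and returns a greeting."""
    logging.getLogger('demo').info('Greeting %s', name)
    return 'Hello, %s' % name


# Patch getLogger so that no real logging happens during the check.
with patch('logging.getLogger') as mock_get_logger:
    result = greet('Ivan')

# The mock recorded how it was called.
mock_get_logger.assert_called_once_with('demo')
mock_get_logger.return_value.info.assert_called_once_with(
    'Greeting %s', 'Ivan')

# An autospec mock enforces the signature of the original function.
mock_greet = create_autospec(greet, return_value=None)
mock_greet('test')
try:
    mock_greet()
    signature_checked = False
except TypeError:
    signature_checked = True

print(result, signature_checked)
```

The patch is active only inside the with block, so code that runs afterwards gets the real getLogger() back.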

The unittest.mock library documentation at https://docs.python.org/3/library/unittest.mock.html#patch-object (retrieved July 2015)

The unittest documentation at https://docs.python.org/3/library/unittest.html (retrieved July 2015)

The pandas library has more than a dozen configuration options, as described in http://pandas.pydata.org/pandas-docs/dev/options.html (retrieved July 2015).

Note

The pandas library is Python open source software originally created for econometric data analysis. It uses data structures inspired by the R programming language.

You can set and get properties using dotted notation or via functions. It is also possible to reset options to defaults and get information about them. The option_context() function allows you to limit the scope of the option to a context using the Python with statement. In this recipe, I will demonstrate pandas configuration and a simple API to set and reset options I find useful. The two options are precision and max_rows. The first option specifies floating point precision of output. The second option specifies the maximum rows of a pandas DataFrame to print on the screen.
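The scoping behavior of option_context() can be sketched as follows. The example assumes that pandas is installed and uses the fully qualified option names display.max_rows and display.precision, which should avoid any ambiguity in how short names are matched.

```python
import pandas as pd

before = pd.get_option('display.max_rows')

# Inside the with block the option is temporarily overridden.
with pd.option_context('display.max_rows', 5, 'display.precision', 4):
    inside = pd.get_option('display.max_rows')

# On leaving the block, pandas restores the previous value.
after = pd.get_option('display.max_rows')
print(before, inside, after)
```

Compared with calling set_option() and reset_option() by hand, the with statement restores the old values even if the code inside the block raises an exception.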

You need pandas and NumPy for this recipe. Instructions to install NumPy are given in Learning to log for robust error checking. The pandas installation documentation can be found at http://pandas.pydata.org/pandas-docs/dev/install.html (retrieved July 2015). The recommended way to install pandas is via Anaconda. I have installed pandas 0.16.2 via Anaconda. You can update your Anaconda pandas with the following command:

$ conda update pandas

The following code from the options.py file in dautil defines a simple API to set and reset options:

import pandas as pd


def set_pd_options():
    pd.set_option('precision', 4)
    pd.set_option('max_rows', 5)


def reset_pd_options():
    pd.reset_option('precision')
    pd.reset_option('max_rows')

The script in configure_pd.py in this book's code bundle uses this API as follows:

from dautil import options
import pandas as pd
import numpy as np
from dautil import log_api

printer = log_api.Printer()
print(pd.describe_option('precision'))
print(pd.describe_option('max_rows'))

printer.print('Initial precision', pd.get_option('precision'))
printer.print('Initial max_rows', pd.get_option('max_rows'))

# Random pi's, should use random state if possible
np.random.seed(42)
df = pd.DataFrame(np.pi * np.random.rand(6, 2))
printer.print('Initial df', df)

options.set_pd_options()
printer.print('df with different options', df)

options.reset_pd_options()
printer.print('df after reset', df)

If you run the script, you get descriptions for the options that are a bit too long to display here. The getter gives the following output:

'Initial precision'
7
'Initial max_rows'
60

Then, we create a pandas DataFrame table with random data. The initial printout looks like this:

'Initial df'
          0         1
0  1.176652  2.986757
1  2.299627  1.880741
2  0.490147  0.490071
3  0.182475  2.721173
4  1.888459  2.224476
5  0.064668  3.047062

The printout comes from the following class in log_api.py:

class Printer():
    def __init__(self, modules=None, name=None):
        if modules and name:
            log(modules, name)

    def print(self, *args):
        for arg in args:
            pprint.pprint(arg)

After setting the options with the dautil API, pandas hides some of the rows and the floating point numbers look different too:

'df with different options'
        0      1
0   1.177  2.987
1   2.300  1.881
..    ...    ...
4   1.888  2.224
5   0.065  3.047

[6 rows x 2 columns]

Because of the truncated rows, pandas tells us how many rows and columns the DataFrame table has. After we reset the options, we get the original printout back.

The matplotlib library allows configuration via the matplotlibrc files and Python code. The latter is what we are going to use in this recipe. Small configuration tweaks should not matter if your data analysis is strong. However, it doesn't hurt to have consistent and attractive plots. Another option is to apply stylesheets, which are files comparable to the matplotlibrc files. However, in my opinion, the best option is to use Seaborn on top of matplotlib. I will discuss Seaborn and matplotlib in more detail in Chapter 2, Creating Attractive Data Visualizations.

You need to install matplotlib for this recipe. Visit http://matplotlib.org/users/installing.html (retrieved July 2015) for more information. I have matplotlib 1.4.3 via Anaconda. Install Seaborn using Anaconda:

$ conda install seaborn

I have installed Seaborn 0.6.0 via Anaconda.

We can set options via a dictionary-like variable. The following function from the options.py file in dautil sets three options:

def set_mpl_options():
    mpl.rcParams['legend.fancybox'] = True
    mpl.rcParams['legend.shadow'] = True
    mpl.rcParams['legend.framealpha'] = 0.7

All three options have to do with legends. The first option specifies rounded corners for the legend, the second option enables a shadow, and the third option makes the legend slightly transparent. The matplotlib rcdefaults() function resets the configuration.
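If you only want the legend options for part of a script, matplotlib also provides the rc_context() context manager, which restores the previous settings on exit. The following is a minimal sketch rather than part of dautil, and the legend_options name is made up for this example:

```python
import matplotlib as mpl

# The same three legend options, collected in a plain dictionary.
legend_options = {
    'legend.fancybox': True,
    'legend.shadow': True,
    'legend.framealpha': 0.7,
}

shadow_before = mpl.rcParams['legend.shadow']

# The options apply only while the with block is active.
with mpl.rc_context(rc=legend_options):
    shadow_inside = mpl.rcParams['legend.shadow']
    alpha_inside = mpl.rcParams['legend.framealpha']

# After the block, rcParams holds the earlier value again.
shadow_after = mpl.rcParams['legend.shadow']
```

Compared to calling rcdefaults(), this restores whatever configuration was active before the block, which may itself differ from the matplotlib defaults.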

To demonstrate these options, let's use sample data made available by matplotlib. The imports are as follows:

import matplotlib.cbook as cbook
import pandas as pd
import matplotlib.pyplot as plt
from dautil import options
import matplotlib as mpl
from dautil import plotting
import seaborn as sns

The data is in a CSV file and contains stock price data for AAPL. Use the following commands to read the data and store it in a pandas DataFrame:

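data = cbook.get_sample_data('aapl.csv', asfileobj=True)
df = pd.read_csv(data, parse_dates=True, index_col=0)

Resample the data to average monthly values as follows (in the pandas version used here, resample() averages by default; later pandas releases require an explicit .mean() call on the result):

df = df.resample('M')

The full code is in the configure_matplotlib.ipynb file in this book's code bundle: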

import matplotlib.cbook as cbook
import pandas as pd
import matplotlib.pyplot as plt
from dautil import options
import matplotlib as mpl
from dautil import plotting
import seaborn as sns

data = cbook.get_sample_data('aapl.csv', asfileobj=True)
df = pd.read_csv(data, parse_dates=True, index_col=0)
df = df.resample('M')
close = df['Close'].values
dates = df.index.values
fig, axes = plt.subplots(4)


def plot(title, ax):
    ax.set_title(title)
    ax.set_xlabel('Date')
    plotter = plotting.CyclePlotter(ax)
    plotter.plot(dates, close, label='Close')
    plotter.plot(dates, 0.75 * close, label='0.75 * Close')
    plotter.plot(dates, 1.25 * close, label='1.25 * Close')
    ax.set_ylabel('Price ($)')
    ax.legend(loc='best')

plot('Initial', axes[0])
sns.reset_orig()
options.set_mpl_options()
plot('After setting options', axes[1])
sns.reset_defaults()
plot('After resetting options', axes[2])

with plt.style.context(('dark_background')):
    plot('With dark_background stylesheet', axes[3])

fig.autofmt_xdate()
plt.show()

The program plots the data and arbitrary upper and lower bands with the default options, custom options, and after a reset of the options. I used the following helper class from the plotting.py file of dautil:

from itertools import cycle


class CyclePlotter():
    def __init__(self, ax):
        self.STYLES = cycle(["-", "--", "-.", ":"])
        self.LW = cycle([1, 2])
        self.ax = ax

    def plot(self, x, y, *args, **kwargs):
        self.ax.plot(x, y, next(self.STYLES),
                     lw=next(self.LW), *args, **kwargs)

The class cycles through different line styles and line widths. Refer to the following plot for the end result:

Importing Seaborn dramatically changes the look and feel of matplotlib plots. Just temporarily comment the seaborn lines out to convince yourself. However, Seaborn doesn't seem to play nicely with the matplotlib options we set, unless we use the Seaborn functions reset_orig() and reset_defaults().
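To see which style and width combinations the cycling in CyclePlotter produces, here is a small sketch that uses only itertools and needs no plotting. The styles, widths, and pairs names are made up for this illustration:

```python
from itertools import cycle

# The same two cycles that CyclePlotter keeps as attributes.
styles = cycle(["-", "--", "-.", ":"])
widths = cycle([1, 2])

# Draw one (style, width) pair per simulated plot() call.
pairs = [(next(styles), next(widths)) for _ in range(6)]
# pairs == [('-', 1), ('--', 2), ('-.', 1), (':', 2), ('-', 1), ('--', 2)]
```

Because the number of widths divides the number of styles, each line style always gets the same line width, so there are four distinct combinations before the sequence repeats.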

The matplotlib customization documentation at http://matplotlib.org/users/customizing.html (retrieved July 2015)

The matplotlib documentation about stylesheets at http://matplotlib.org/users/style_sheets.html (retrieved July 2015)

For reproducible data analysis, we should prefer deterministic algorithms. Some algorithms use random numbers, but in practice we rarely use perfectly random numbers. The algorithms provided in numpy.random allow us to specify a seed value. For reproducibility, it is important to always provide a seed value but it is easy to forget. A utility function in sklearn.utils provides a solution for this issue.
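To make the idea concrete, here is a minimal sketch of a seed-checking helper built with NumPy only. It is not the scikit-learn implementation: the as_random_state() name is hypothetical, and unlike the scikit-learn utility it deliberately refuses a missing seed, which is an assumption made for this example.

```python
import numpy as np


def as_random_state(seed):
    """Hypothetical helper: turn a seed into a RandomState instance."""
    # An existing generator is passed through unchanged.
    if isinstance(seed, np.random.RandomState):
        return seed

    # Refuse to fall back on an unseeded generator.
    if seed is None:
        raise ValueError('Pass an explicit seed for reproducible results')

    return np.random.RandomState(seed)


# The same seed and the same procedure give the same numbers.
a = as_random_state(42).randn(5)
b = as_random_state(42).randn(5)
```

Functions that accept either a seed or a generator through such a helper make it harder to forget seeding, because the caller has to pass something explicit.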

NumPy has a set_printoptions() function, which controls how NumPy prints arrays. Obviously, printing should not influence the quality of your analysis too much. However, readability is important if you want people to understand and reproduce your results.

Install NumPy using the instructions in the Learning to log for robust error checking recipe. We will need scikit-learn, so have a look at http://scikit-learn.org/dev/install.html (retrieved July 2015). I have installed scikit-learn 0.16.1 via Anaconda.

The code for this example is in the configure_numpy.py file in this book's code bundle:

from sklearn.utils import check_random_state
import numpy as np
from dautil import options
from dautil import log_api

random_state = check_random_state(42)
a = random_state.randn(5)

random_state = check_random_state(42)
b = random_state.randn(5)

np.testing.assert_array_equal(a, b)

printer = log_api.Printer()
printer.print("Default options", np.get_printoptions())

pi_array = np.pi * np.ones(30)
options.set_np_options()
print(pi_array)

# Reset
options.reset_np_options()
print(pi_array)

The check_random_state(42) calls show how to get a NumPy RandomState object with 42 as the seed. In this example, the arrays a and b are equal, because we used the same seed and the same procedure to draw the numbers. The second part of the preceding program uses the following functions I defined in options.py:

def set_np_options():
    np.set_printoptions(precision=4, threshold=5,
                        linewidth=65)


def reset_np_options():
    np.set_printoptions(precision=8, threshold=1000,
                        linewidth=75)

Here's the output after setting the options:

[ 3.1416 3.1416 3.1416 ..., 3.1416 3.1416 3.1416]

As you can see, NumPy replaces some of the values with an ellipsis and shows only four digits after the decimal point. The NumPy defaults are as follows:

'Default options'
{'edgeitems': 3,
 'formatter': None,
 'infstr': 'inf',
 'linewidth': 75,
 'nanstr': 'nan',
 'precision': 8,
 'suppress': False,
 'threshold': 1000}
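Hardcoding the defaults in a reset function works, but the values can differ between NumPy releases. As an alternative sketch, and assuming a NumPy version that ships the printoptions() context manager (it is not available in older releases), you can scope the print options to a with block instead:

```python
import numpy as np

pi_array = np.pi * np.ones(30)
before = np.get_printoptions()

# The options are active only inside the with block.
with np.printoptions(precision=4, threshold=5, linewidth=65):
    inside = np.get_printoptions()
    short_text = str(pi_array)

# Whatever was configured before the block is restored on exit.
after = np.get_printoptions()
```

Here short_text holds the abbreviated representation with an ellipsis, while printing pi_array after the block uses the earlier settings again.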

The scikit-learn documentation at http://scikit-learn.org/stable/developers/utilities.html (retrieved July 2015)

The NumPy set_printoptions() documentation at http://docs.scipy.org/doc/numpy/reference/generated/numpy.set_printoptions.html (retrieved July 2015)

The NumPy RandomState documentation at http://docs.scipy.org/doc/numpy/reference/generated/numpy.random.RandomState.html (retrieved July 2015)

Following a code style guide helps improve code quality. Having high-quality code is important if you want people to easily reproduce your analysis. One way to adhere to a coding standard is to scan your code with static code analyzers. You can use many code analyzers. In this recipe, we will use the pep8 analyzer. In general, code analyzers complement or maybe slightly overlap each other, so you are not limited to pep8.

Convenient data access is crucial for reproducible analysis. In my opinion, the best type of data access is with a specialized API and local data. I will introduce a dautil module I created to load weather data provided by the Dutch KNMI.

Reporting is often the last phase of a data analysis project. We can report our findings using various formats. In this recipe, we will focus on tabulating our report with the tabulate module. The landslide tool creates slide shows from various formats such as reStructuredText.

You will need pep8 and tabulate. A quick guide to pep8 is available at https://pep8.readthedocs.org/en/latest/intro.html (retrieved July 2015). I have installed pep8 1.6.2 via Anaconda. You can install joblib, tabulate, and landslide with the pip command.

I have tabulate 0.7.5 and landslide 1.1.3.

Here's an example pep8 session:

$ pep8 --first log_api.py
log_api.py:21:1: E302 expected 2 blank lines, found 1
log_api.py:44:33: W291 trailing whitespace
log_api.py:50:60: E225 missing whitespace around operator

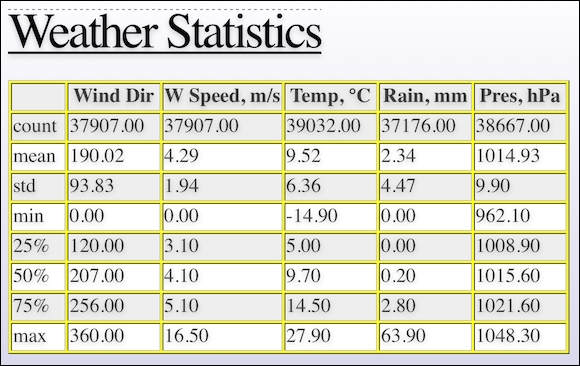

The --first switch shows only the first occurrence of each error. In the previous example, pep8 reports the line number where the error occurred, an error code, and a short description of the error.

I prepared a module dedicated to data access of datasets we will use in several chapters. We start with access to a pandas DataFrame stored in a pickle, which contains selected weather data from the De Bilt weather station in the Netherlands. I created the pickle by downloading a zip file, extracting the data file, and loading the data in a pandas DataFrame table. I applied minimal data transformation, including multiplication of values and converting empty fields to NaNs. The code is in the data.py file in dautil. I will not discuss this code in detail, because we only need to load data from the pickle. However, if you want to download the data yourself, you can use the static method I defined in data.py. Downloading the data will of course give you more recent data, but you will get slightly different results if you substitute my pickle.

The following code shows the basic descriptive statistics with the pandas.DataFrame.describe() method in the report_weather.py file in this book's code bundle:

from dautil import data
from dautil import report
import pandas as pd
import numpy as np
from tabulate import tabulate

df = data.Weather.load()
headers = [data.Weather.get_header(header)
           for header in df.columns.values.tolist()]
df = df.describe()

Then, the code creates a slides.rst file in the reStructuredText format with dautil.report.RSTWriter. This is just a matter of simple string concatenation and writing to a file. The tabulate() calls in the following code create table grids from the pandas.DataFrame objects:

writer = report.RSTWriter()
writer.h1('Weather Statistics')
writer.add(tabulate(df, headers=headers,
                    tablefmt='grid', floatfmt='.2f'))
writer.divider()
headers = [data.Weather.get_header(header)
           for header in df.columns.values.tolist()]

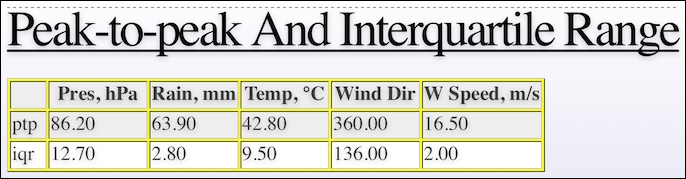

builder = report.DFBuilder(df.columns)
builder.row(df.iloc[7].values - df.iloc[3].values)
builder.row(df.iloc[6].values - df.iloc[4].values)
df = builder.build(['ptp', 'iqr'])

writer.h1('Peak-to-peak and Interquartile Range')
writer.add(tabulate(df, headers=headers,
                    tablefmt='grid', floatfmt='.2f'))
writer.write('slides.rst')
generator = report.Generator('slides.rst', 'weather_report.html')
generator.generate()

I use the dautil.report.DFBuilder class to create the pandas.DataFrame objects incrementally using a dictionary where the keys are the columns of the final DataFrame table and the values are lists that collect each row's entry for that column:

import pandas as pd


class DFBuilder():
    def __init__(self, cols, *args):
        self.columns = cols
        self.df = {}

        for col in self.columns:
            self.df.update({col: []})

        for arg in args:
            self.row(arg)

    def row(self, row):
        assert len(row) == len(self.columns)

        for col, val in zip(self.columns, row):
            self.df[col].append(val)

        return self.df

    def build(self, index=None):
        self.df = pd.DataFrame(self.df)

        if index:
            self.df.index = index

        return self.df

I eventually generate an HTML file using landslide and my own custom CSS. If you open weather_report.html, you will see the first slide with basic descriptive statistics:

The second slide looks like this and contains the peak-to-peak (difference between minimum and maximum values) and the interquartile range (difference between the third and first quartile):
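The two statistics can also be read off the describe() output by row label instead of by position, which may be easier to follow than the iloc indices. The following is a minimal sketch with made-up toy data (the temp and rain columns are not the weather data from this recipe) that does not use dautil:

```python
import pandas as pd

# Toy data, invented for this illustration only.
df = pd.DataFrame({'temp': [1.0, 2.0, 3.0, 4.0, 5.0],
                   'rain': [0.0, 0.0, 10.0, 20.0, 40.0]})
stats = df.describe()

# Peak-to-peak is max minus min; the interquartile range is the
# third quartile (75%) minus the first quartile (25%).
summary = pd.DataFrame({
    'ptp': stats.loc['max'] - stats.loc['min'],
    'iqr': stats.loc['75%'] - stats.loc['25%'],
}).T
```

The resulting summary table has one row per statistic and one column per variable, the same layout that the DFBuilder calls produce.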

The tabulate PyPI page at https://pypi.python.org/pypi/tabulate (retrieved July 2015)

The landslide GitHub page at https://github.com/adamzap/landslide (retrieved July 2015)