When starting with Go, one of the most common things you'll hear being said is that it's a systems language.

Indeed, one of the earlier descriptions of Go, by the Go team itself, was that the language was built to be a modern systems language. It was constructed to combine the speed and power of languages, such as C with the syntactical elegance and thrift of modern interpreted languages, such as Python. You can see that goal realized when you look at just a few snippets of Go code.

From the Go FAQ on why Go was created:

"Go was born out of frustration with existing languages and environments for systems programming."

Perhaps the largest part of present-day Systems programming is designing backend servers. Obviously, the Web comprises a huge, but not exclusive, percentage of that world.

Go hasn't been considered a web language until recently. Unsurprisingly, it took a few years of developers dabbling, experimenting, and finally embracing the language to start taking it to new avenues.

While Go is web-ready out of the box, it lacks a lot of the critical frameworks and tools people so often take for granted with web development now. As the community around Go grew, the scaffolding began to manifest in a lot of new and exciting ways. Combined with existing ancillary tools, Go is now a wholly viable option for end-to-end web development. But back to that primary question: Why Go? To be fair, it's not right for every web project, but any application that can benefit from high-performance, secure web-serving out of the box with the added benefits of a beautiful concurrency model would make for a good candidate.

In this book, we're going to explore those aspects and others to outline what can make Go the right language for your web architecture and applications.

We're not going to deal with a lot of the low-level aspects of the Go language. For example, we assume you're familiar with variable and constant declaration. We assume you understand control structures.

In this chapter we will cover the following topics:

- Installing Go

- Structuring a project

- Importing packages

- Introducing the net package

- Hello, Web

The most critical first step is, of course, making sure that Go is available and ready to start our first web server.

Note

While one of Go's biggest selling points is its cross-platform support (both building and using locally while targeting other operating systems), your life will be much easier on a Nix compatible platform.

If you're on Windows, don't fear. Natively, you may run into incompatible packages, firewall issues when running using go run command and some other quirks, but 95% of the Go ecosystem will be available to you. You can also, very easily, run a virtual machine, and in fact that is a great way to simulate a potential production environment.

In-depth installation instructions are available at https://golang.org/doc/install, but we'll talk about a few quirky points here before moving on.

For OS X and Windows, Go is provided as a part of a binary installation package. For any Linux platform with a package manager, things can be pretty easy.

On both OS X and Linux, you'll need to add a couple of lines to your path—the GOPATH and PATH. First, you'll want to find the location of your Go binary's installation. This varies from distribution to distribution. Once you've found that, you can configure the PATH and GOPATH, as follows:

export PATH=$PATH:/usr/local/go/bin export GOPATH="/usr/share/go"

While the path to be used is not defined rigidly, some convention has coalesced around starting at a subdirectory directly under your user's home directory, such as $HOME/go or ~Home/go. As long as this location is set perpetually and doesn't change, you won't run into issues with conflicts or missing packages.

You can test the impact of these changes by running the go env command. If you see any issues with this, it means that your directories are not correct.

Note that this may not prevent Go from running—depending on whether the GOBIN directory is properly set—but will prevent you from installing packages globally across your system.

To test the installation, you can grab any Go package by a go get command and create a Go file somewhere. As a quick example, first get a package at random, we'll use a package from the Gorilla framework, as we'll use this quite a bit throughout this book.

go get github.com/gorilla/mux

If this runs without any issue, Go is finding your GOPATH correctly. To make sure that Go is able to access your downloaded packages, draw up a very quick package that will attempt to utilize Gorilla's mux package and run it to verify whether the packages are found.

package main

import (

"fmt"

"github.com/gorilla/mux"

"net/http"

)

func TestHandler(w http.ResponseWriter, r *http.Request) {

}

func main() {

router := mux.NewRouter()

router.HandleFunc("/test", TestHandler)

http.Handle("/", router)

fmt.Println("Everything is set up!")

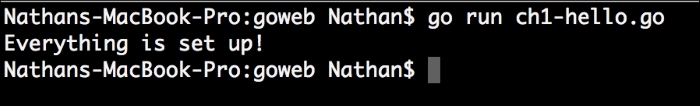

}Run go run test.go in the command line. It won't do much, but it will deliver the good news as shown in the following screenshot:

When you're first getting started and mostly playing around, there's no real problem with setting your application lazily.

For example, to get started as quickly as possible, you can create a simple hello.go file anywhere you like and compile without issue.

But when you get into environments that require multiple or distinct packages (more on that shortly) or have more explicit cross-platform requirements, it makes sense to design your project in a way that will facilitate the use of the go build tool.

The value of setting up your code in this manner lies in the way that the go build tool works. If you have local (to your project) packages, the build tool will look in the src directory first and then your GOPATH. When you're building for other platforms, go build will utilize the local bin folder to organize the binaries.

When building packages that are intended for mass use, you may also find that either starting your application under your GOPATH directory and then symbolically linking it to another directory, or doing the opposite, will allow you to develop without the need to subsequently go get your own code.

As with any language, being a part of the Go community means perpetual consideration of the way others create their code. Particularly if you're going to work in open source repositories, you'll want to generate your code the way that others do, in order to reduce the amount of friction when people get or include your code.

One incredibly helpful piece of tooling that the Go team has included is go fmt. fmt here, of course, means format and that's exactly what this tool does, it automatically formats your code according to the designed conventions.

By enforcing style conventions, the Go team has helped to mitigate one of the most common and pervasive debates that exist among a lot of other languages.

While the language communities tend to drive coding conventions, there are always little idiosyncrasies in the way individuals write programs. Let's use one of the most common examples around—where to put the opening bracket.

Some programmers like it on the same line as the statement:

for (int i = 0; i < 100; i++) {

// do something

}While others prefer it on the subsequent line:

for (int i = 0; i < 100; i++)

{

// do something

}These types of minor differences spark major, near-religious debates. The Gofmt tool helps alleviate this by allowing you to yield to Go's directive.

Now, Go bypasses this obvious source of contention at the compiler, by formatting your code similar to the latter example discussed earlier. The compiler will complain and all you'll get is a fatal error. But the other style choices have some flexibility, which are enforced when you use the tool to format.

Here, for example, is a piece of code in Go before go fmt:

func Double(n int) int {

if (n == 0) {

return 0

} else {

return n * 2

}

}Arbitrary whitespace can be the bane of a team's existence when it comes to sharing and reading code, particularly when every team member is not on the same IDE.

By running go fmt, we clean this up, thereby translating our whitespace according to Go's conventions:

func Double(n int) int {

if n == 0 {

return 0

} else {

return n * 2

}

}Long story short: always run go fmt before shipping or pushing your code.

Code conventions

As with any language, being a part of the Go community means perpetual consideration of the way others create their code. Particularly if you're going to work in open source repositories, you'll want to generate your code the way that others do, in order to reduce the amount of friction when people get or include your code.

One incredibly helpful piece of tooling that the Go team has included is go fmt. fmt here, of course, means format and that's exactly what this tool does, it automatically formats your code according to the designed conventions.

By enforcing style conventions, the Go team has helped to mitigate one of the most common and pervasive debates that exist among a lot of other languages.

While the language communities tend to drive coding conventions, there are always little idiosyncrasies in the way individuals write programs. Let's use one of the most common examples around—where to put the opening bracket.

Some programmers like it on the same line as the statement:

for (int i = 0; i < 100; i++) {

// do something

}While others prefer it on the subsequent line:

for (int i = 0; i < 100; i++)

{

// do something

}These types of minor differences spark major, near-religious debates. The Gofmt tool helps alleviate this by allowing you to yield to Go's directive.

Now, Go bypasses this obvious source of contention at the compiler, by formatting your code similar to the latter example discussed earlier. The compiler will complain and all you'll get is a fatal error. But the other style choices have some flexibility, which are enforced when you use the tool to format.

Here, for example, is a piece of code in Go before go fmt:

func Double(n int) int {

if (n == 0) {

return 0

} else {

return n * 2

}

}Arbitrary whitespace can be the bane of a team's existence when it comes to sharing and reading code, particularly when every team member is not on the same IDE.

By running go fmt, we clean this up, thereby translating our whitespace according to Go's conventions:

func Double(n int) int {

if n == 0 {

return 0

} else {

return n * 2

}

}Long story short: always run go fmt before shipping or pushing your code.

Beyond the absolute and the most trivial application—one that cannot even produce a Hello World output—you must have some imported package in a Go application.

To say Hello World, for example, we'd need some sort of a way to generate an output. Unlike in many other languages, even the core language library is accessible by a namespaced package. In Go, namespaces are handled by a repository endpoint URL, which is github.com/nkozyra/gotest, which can be opened directly on GitHub (or any other public location) for the review.

The go get tool easily handles packages hosted at the repositories, such as GitHub, Bitbucket, and Google Code (as well as a few others). You can also host your own projects, ideally a git project, elsewhere, although it might introduce some dependencies and sources for errors, which you'd probably like to avoid.

But what about the private repos? While go get is a wonderful tool, you'll find yourself looking at an error without some additional configuration, SSH agent forwarding, and so on.

You can work around this in a couple of ways, but one very simple method is to clone the repository locally, using your version control software directly.

You may have paused when you read about the way namespaces are defined and imported in a Go application. What happens if you're using version 1 of the application but would like to bring in version 2? In most cases, this has to be explicitly defined in the path of the import. For example:

import ( "github.com/foo/foo-v1" )

versus:

import ( "github.com/foo/foo-v2" )

As you might imagine, this can be a particularly sticky aspect of the way Go handles the remote packages.

Unlike a lot of other package managers, go get is decentralized—that is, nobody maintains a canonical reference library of packages and versions. This can sometimes be a sore spot for new developers.

For the most part, packages are always imported via the go get command, which reads the master branch of the remote repository. This means that maintaining multiple versions of a package at the same endpoint is, for the most part, impossible.

It's the utilization of the URL endpoints as namespaces that allows the decentralization, but it's also what provides a lack of internal support for versioning.

Your best bet as a developer is to treat every package as the most up-to-date version when you perform a go get command. If you need a newer version, you can always follow whatever pattern the author has decided on, such as the preceding example.

As a creator of your own packages, make sure that you also adhere to this philosophy. Keeping your master branch HEAD as the most up-to-date will make sure your that the code fits with the conventions of other Go authors.

Handling private repositories

The go get tool easily handles packages hosted at the repositories, such as GitHub, Bitbucket, and Google Code (as well as a few others). You can also host your own projects, ideally a git project, elsewhere, although it might introduce some dependencies and sources for errors, which you'd probably like to avoid.

But what about the private repos? While go get is a wonderful tool, you'll find yourself looking at an error without some additional configuration, SSH agent forwarding, and so on.

You can work around this in a couple of ways, but one very simple method is to clone the repository locally, using your version control software directly.

You may have paused when you read about the way namespaces are defined and imported in a Go application. What happens if you're using version 1 of the application but would like to bring in version 2? In most cases, this has to be explicitly defined in the path of the import. For example:

import ( "github.com/foo/foo-v1" )

versus:

import ( "github.com/foo/foo-v2" )

As you might imagine, this can be a particularly sticky aspect of the way Go handles the remote packages.

Unlike a lot of other package managers, go get is decentralized—that is, nobody maintains a canonical reference library of packages and versions. This can sometimes be a sore spot for new developers.

For the most part, packages are always imported via the go get command, which reads the master branch of the remote repository. This means that maintaining multiple versions of a package at the same endpoint is, for the most part, impossible.

It's the utilization of the URL endpoints as namespaces that allows the decentralization, but it's also what provides a lack of internal support for versioning.

Your best bet as a developer is to treat every package as the most up-to-date version when you perform a go get command. If you need a newer version, you can always follow whatever pattern the author has decided on, such as the preceding example.

As a creator of your own packages, make sure that you also adhere to this philosophy. Keeping your master branch HEAD as the most up-to-date will make sure your that the code fits with the conventions of other Go authors.

Dealing with versioning

You may have paused when you read about the way namespaces are defined and imported in a Go application. What happens if you're using version 1 of the application but would like to bring in version 2? In most cases, this has to be explicitly defined in the path of the import. For example:

import ( "github.com/foo/foo-v1" )

versus:

import ( "github.com/foo/foo-v2" )

As you might imagine, this can be a particularly sticky aspect of the way Go handles the remote packages.

Unlike a lot of other package managers, go get is decentralized—that is, nobody maintains a canonical reference library of packages and versions. This can sometimes be a sore spot for new developers.

For the most part, packages are always imported via the go get command, which reads the master branch of the remote repository. This means that maintaining multiple versions of a package at the same endpoint is, for the most part, impossible.

It's the utilization of the URL endpoints as namespaces that allows the decentralization, but it's also what provides a lack of internal support for versioning.

Your best bet as a developer is to treat every package as the most up-to-date version when you perform a go get command. If you need a newer version, you can always follow whatever pattern the author has decided on, such as the preceding example.

As a creator of your own packages, make sure that you also adhere to this philosophy. Keeping your master branch HEAD as the most up-to-date will make sure your that the code fits with the conventions of other Go authors.

At the heart of all network communications in Go is the aptly-named net package, which contains subpackages not only for the very relevant HTTP operations, but also for other TCP/UDP servers, DNS, and IP tools.

In short, everything you need to create a robust server environment.

Of course, what we care about for the purpose of this book lies primarily in the net/http package, but we'll look at a few other functions that utilize the rest of the package, such as a TCP connection, as well as WebSockets.

Let's quickly take a look at just performing that Hello World (or Web, in this case) example we have been talking about.

The following application serves as a static file at the location /static, and a dynamic response at the location /dynamic:

package main

import (

"fmt"

"net/http"

"time"

)

const (

Port = ":8080"

)

func serveDynamic(w http.ResponseWriter, r *http.Request) {

response := "The time is now " + time.Now().String()

fmt.Fprintln(w,response)

}Just as fmt.Println will produce desired content at the console level, Fprintln allows you to direct output to any writer. We'll talk a bit more about the writers in Chapter 2, Serving and Routing, but they represent a fundamental, flexible interface that is utilized in many Go applications, not just for the Web:

func serveStatic(w http.ResponseWriter, r *http.Request) {

http.ServeFile(w, r, "static.html")

}Our serveStatic method just serves one file, but it's trivial to allow it to serve any file directly and use Go as an old-school web server that serves only static content:

func main() {

http.HandleFunc("/static",serveStatic)

http.HandleFunc("/",serveDynamic)

http.ListenAndServe(Port,nil)

}Feel free to choose the available port of your choice—higher ports will make it easier to bypass the built-in security functionality, particularly in Nix systems.

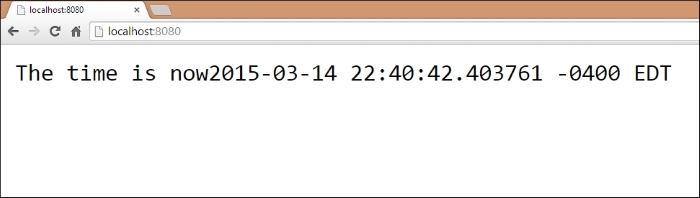

If we take the preceding example and visit the respective URLs—in this case the root at / and a static page at /static, we should see the intended output as shown:

At the root, / , the output is as follows:

At /static, the output is as follows:

As you can see, producing a very simple output for the Web is, well, very simple in Go. The built-in package allows us to create a basic, yet inordinately fast site in Go with just a few lines of code using native packages.

This may not be very exciting, but before we can run, we must walk. Producing the preceding output introduces a few key concepts.

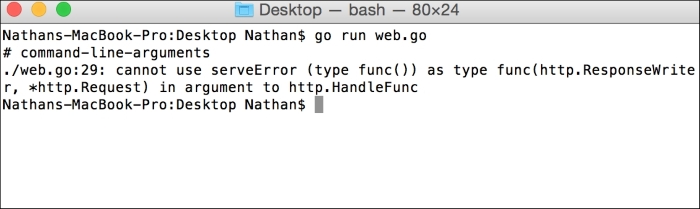

First, we've seen how net/http directs requests using a URI or URL endpoint to helper functions, which must implement the http.ResponseWriter and http.Request methods. If they do not implement it, we get a very clear error on that end.

The following is an example that attempts to implement it in this manner:

func serveError() {

fmt.Println("There's no way I'll work!")

}

func main() {

http.HandleFunc("/static", serveStatic)

http.HandleFunc("/", serveDynamic)

http.HandleFunc("/error",serveError)

http.ListenAndServe(Port, nil)

}The following screenshot shows the resulting error you'll get from Go:

You can see that serveError does not include the required parameters and thus results in a compilation error.

This chapter serves as an introduction to the most basic concepts of Go and producing for the Web in Go, but these points are critical foundational elements for being productive in the language and in the community.

We've looked at coding conventions and package design and organization, and we've produced our first program—the all-too-familiar Hello, World application—and accessed it via our localhost.

Obviously, we're a long way from a real, mature application for the Web, but the building blocks are essential to getting there.

In Chapter 2, Serving and Routing, we'll look at how to direct different requests to different application logic using the built-in routing functionality in Go's net/http package, as well as a couple of third party router packages.

The cornerstone of the Web as a commercial entity—the piece on which marketing and branding has relied on nearly exclusively—is the URL. While we're not yet looking at the top-level domain handling, we need to take up the reins of our URL and its paths (or endpoints).

In this chapter, we'll do just this by introducing multiple routes and corresponding handlers. First, we'll do this with a simple flat file serving and then we'll introduce complex mixers to do the routing with more flexibility by implementing a library that utilizes regular expressions in its routes.

By the end of this chapter, you should be able to create a site on localhost that can be accessed by any number of paths and return content relative to the requested path.

In this chapter, we will cover the following topics:

- Serving files directly

- Basic routing

- Using more complex routing with Gorilla

- Redirecting requests

- Serving basic errors

In the preceding chapter, we utilized the fmt.Fprintln function to output some generic Hello, World messaging in the browser.

This obviously has limited utility. In the earliest days of the Web and web servers, the entirety of the Web was served by directing requests to corresponding static files. In other words, if a user requested home.html, the web server would look for a file called home.html and return it to the user.

This might seem quaint today, as a vast majority of the Web is now served in some dynamic fashion, with content often being determined via database IDs, which allows for pages to be generated and regenerated without someone modifying the individual files.

Let's take a look at the simplest way in which we can serve files in a way similar to those olden days of the Web as shown:

package main

import (

"net/http"

)

const (

PORT = ":8080"

)

func main() {

http.ListenAndServe(PORT, http.FileServer(http.Dir("/var/www")))

}Pretty simple, huh? Any requests made to the site will attempt to find a corresponding file in our local /var/www directory. But while this has a more practical use compared to the example in Chapter 1, Introducing and Setting Up Go, it's still pretty limited. Let's take a look at expanding our options a bit.

In Chapter 1, Introducing and Setting Up, we produced a very basic URL endpoint that allowed static file serving.

The following are the simple routes we produced for that example:

func main() {

http.HandleFunc("/static",serveStatic)

http.HandleFunc("/",serveDynamic)

http.ListenAndServe(Port,nil)

}In review, you can see two endpoints, /static and /, which either serve a single static file or generate output to the http.ResponseWriter.

We can have any number of routers sitting side by side. However, consider a scenario where we have a basic website with about, contact, and staff pages, with each residing in /var/www/about/index.html, /var/www/contact.html, and /var/www/staff/home.html. While it's an intentionally obtuse example, it demonstrates the limitations of Go's built-in and unmodified routing system. We cannot route all requests to the same directory locally, we need something that provides more malleable URLs.

In the previous session, we looked at basic routing but that can only take us so far, we have to explicitly define our endpoints and then assign them to handlers. What happens if we have a wildcard or a variable in our URL? This is an absolutely essential part of the Web and any serious web server.

To invoke a very simple example, consider hosting a blog with unique identifiers for each blog entry. This could be a numeric ID representing a database ID entry or a text-based globally unique identifier, such as my-first-block-entry.

Note

In the preceding example, we want to route a URL like /pages/1 to a filename called 1.html. Alternately, in a database-based scenario, we'd want to use /pages/1 or /pages/hello-world to map to a database entry with a GUID of 1 or hello-world, respectively. To do this we either need to include an exhaustive list of possible endpoints, which is extremely wasteful, or implement wildcards, ideally through regular expressions.

In either case, we'd like to be able to utilize the value from the URL directly within our application. This is simple with URL parameters from GET or POST. We can extract those simply, but they aren't particularly elegant in terms of clean, hierarchical or descriptive URLs that are often necessary for search engine optimization purposes.

The built-in net/http routing system is, perhaps by design, relatively simple. To get anything more complicated out of the values in any given request, we either need to extend the routing capabilities or use a package that has done this.

In the few years that Go has been publicly available and the community has been growing, a number of web frameworks have popped up. We'll talk about these in a little more depth as we continue the book, but one in particular is well-received and very useful: the Gorilla web toolkit.

As the name implies, Gorilla is less of a framework and more of a set of very useful tools that are generally bundled in frameworks. Specifically, Gorilla contains:

gorilla/context: This is a package for creating a globally-accessible variable from the request. It's useful for sharing a value from the URL without repeating the code to access it across your application.gorilla/rpc: This implements RPC-JSON, which is a system for remote code services and communication without implementing specific protocols. This relies on the JSON format to define the intentions of any request.gorilla/schema: This is a package that allows simple packing of form variables into astruct, which is an otherwise cumbersome process.gorilla/securecookie: This, unsurprisingly, implements authenticated and encrypted cookies for your application.gorilla/sessions: Similar to cookies, this provides unique, long-term, and repeatable data stores by utilizing a file-based and/or cookie-based session system.gorilla/mux: This is intended to create flexible routes that allow regular expressions to dictate available variables for routers.- The last package is the one we're most interested in here, and it comes with a related package called

gorilla/reverse, which essentially allows you to reverse the process of creating regular expression-based muxes. We will cover that topic in detail in the later section.

Note

You can grab individual Gorilla packages by their GitHub location with a go get. For example, to get the mux package, going to github.com/gorilla/mux will suffice and bring the package into your GOPATH. For the locations of the other packages (they're fairly self-explanatory), visit http://www.gorillatoolkit.org/

Let's dive-in and take a look at how to create a route that's flexible and uses a regular expression to pass a parameter to our handler:

package main import ( "github.com/gorilla/mux" "net/http" ) const ( PORT = ":8080" )

This should look familiar to our last code with the exception of the Gorilla package import:

func pageHandler(w http.ResponseWriter, r *http.Request) {

vars := mux.Vars(r)

pageID := vars["id"]

fileName := "files/" + pageID + ".html"

http.ServeFile(w,r,fileName)

}Here, we've created a route handler to accept the response. The thing to be noted here is the use of mux.Vars, which is a method that will look for query string variables from the http.Request and parse them into a map. The values will then be accessible by referencing the result by key, in this case id, which we'll cover in the next section.

func main() {

rtr := mux.NewRouter()

rtr.HandleFunc("/pages/{id:[0-9]+}",pageHandler)

http.Handle("/",rtr)

http.ListenAndServe(PORT,nil)

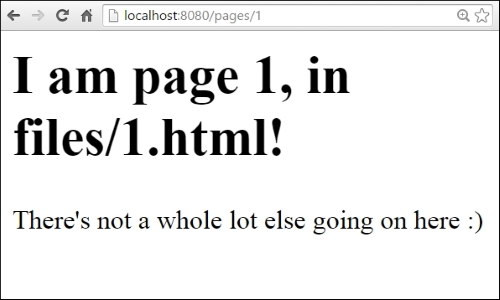

}Here, we can see a (very basic) regular expression in the handler. We're assigning any number of digits after /pages/ to a parameter named id in {id:[0-9]+}; this is the value we pluck out in pageHandler.

A simpler version that shows how this can be used to delineate separate pages can be seen by adding a couple of dummy endpoints:

func main() {

rtr := mux.NewRouter()

rtr.HandleFunc("/pages/{id:[0-9]+}", pageHandler)

rtr.HandleFunc("/homepage", pageHandler)

rtr.HandleFunc("/contact", pageHandler)

http.Handle("/", rtr)

http.ListenAndServe(PORT, nil)

}When we visit a URL that matches this pattern, our pageHandler attempts to find the page in the files/ subdirectory and returns that file directly.

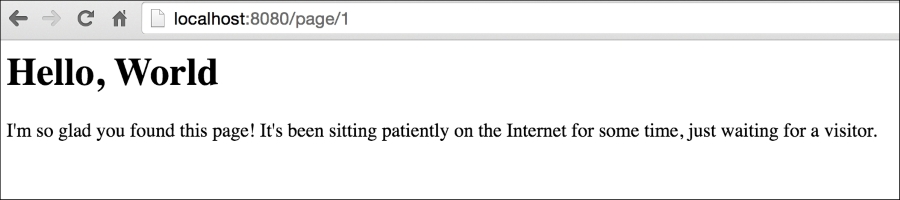

A response to /pages/1 would look like this:

At this point, you might already be asking, but what if we don't have the requested page? Or, what happens if we've moved that location? This brings us to two important mechanisms in web serving—returning error responses and, as part of that, potentially redirecting requests that have moved or have other interesting properties that need to be reported back to the end users.

Before we look at simple and incredibly common errors like 404s, let's address the idea of redirecting requests, something that's very common. Although not always for reasons that are evident or tangible for the average user.

So we might we want to redirect requests to another request? Well there are quite a few reasons, as defined by the HTTP specification that could lead us to implement automatic redirects on any given request. Here are a few of them with their corresponding HTTP status codes:

- A non-canonical address may need to be redirected to the canonical one for SEO purposes or for changes in site architecture. This is handled by 301 Moved Permanently or 302 Found.

- Redirecting after a successful or unsuccessful

POST. This helps us to prevent re-POSTing of the same form data accidentally. Typically, this is defined by 307 Temporary Redirect. - The page is not necessarily missing, but it now lives in another location. This is handled by the status code 301 Moved Permanently.

Executing any one of these is incredibly simple in basic Go with net/http, but as you might expect, it is facilitated and improved with more robust frameworks, such as Gorilla.

At this point, it makes some sense to talk a bit about errors. In all likelihood, you may have already encountered one as you played with our basic flat file serving server, particularly if you went beyond two or three pages.

Our example code includes four example HTML files for flat serving, numbered 1.html, 2.html, and so on. What happens when you hit the /pages/5 endpoint, though? Luckily, the http package will automatically handle the file not found errors, just like most common web servers.

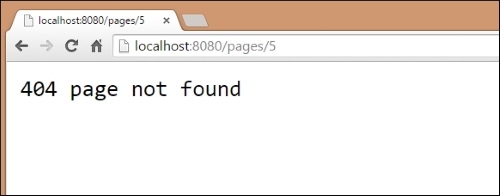

Also, similar to most common web servers, the error page itself is small, bland, and nondescript. In the following section, you can see the 404 page not found status response we get from Go:

As mentioned, it's a very basic and nondescript page. Often, that's a good thing—error pages that contain more information or flair than necessary can have a negative impact.

Consider this error—the 404—as an example. If we include references to images and stylesheets that exist on the same server, what happens if those assets are also missing?

In short, you can very quickly end up with recursive errors—each 404 page calls an image and stylesheet that triggers 404 responses and the cycle repeats. Even if the web server is smart enough to stop this, and many are, it will produce a nightmare scenario in the logs, rendering them so full of noise that they become useless.

Let's look at some code that we can use to implement a catch-all 404 page for any missing files in our /files directory:

package main

import (

"github.com/gorilla/mux"

"net/http"

"os"

)

const (

PORT = ":8080"

)

func pageHandler(w http.ResponseWriter, r *http.Request) {

vars := mux.Vars(r)

pageID := vars["id"]

fileName := "files/" + pageID + ".html"_,

err := os.Stat(fileName)

if err != nil {

fileName = "files/404.html"

}

http.ServeFile(w,r,fileName)

}Here, you can see that we first attempt to check the file with os.Stat (and its potential error) and output our own 404 response:

func main() {

rtr := mux.NewRouter()

rtr.HandleFunc("/pages/{id:[0-9]+}",pageHandler)

http.Handle("/",rtr)

http.ListenAndServe(PORT,nil)

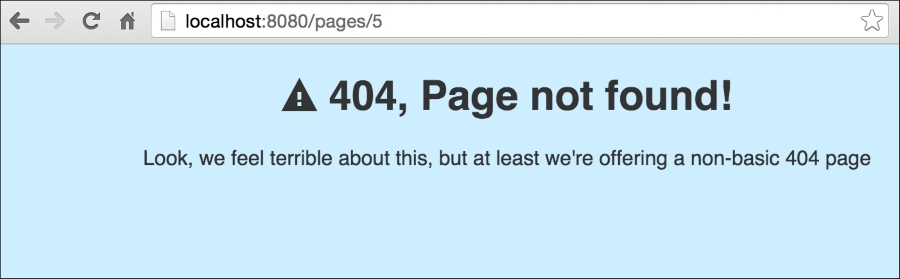

}Now if we take a look at the 404.html page, we will see that we've created a custom HTML file that produces something that is a little more user-friendly than the default Go Page Not Found message that we were invoking previously.

Let's take a look at what this looks like, but remember that it can look any way you'd like:

<!DOCTYPE html>

<html>

<head>

<title>Page not found!</title>

<style type="text/css">

body {

font-family: Helvetica, Arial;

background-color: #cceeff;

color: #333;

text-align: center;

}

</style>

<link rel="stylesheet" type="text/css" media="screen" href="http://code.ionicframework.com/ionicons/2.0.1/css/ionicons.min.css"></link>

</head>

<body>

<h1><i class="ion-android-warning"></i> 404, Page not found!</h1>

<div>Look, we feel terrible about this, but at least we're offering a non-basic 404 page</div>

</body>

</html>Also, note that while we keep the 404.html file in the same directory as the rest of our files, this is solely for the purposes of simplicity.

In reality, and in most production environments with custom error pages, we'd much rather have it exist in its own directory, which is ideally outside the publicly available part of our web site. After all, you can now access the error page in a way that is not actually an error by visiting http://localhost:8080/pages/404. This returns the error message, but the reality is that in this case the file was found, and we're simply returning it.

Let's take a look at our new, prettier 404 page by accessing http://localhost/pages/5, which specifies a static file that does not exist in our filesystem:

By showing a more user-friendly error message, we can provide more useful actions for users who encounter them. Consider some of the other common errors that might benefit from more expressive error pages.

We can now produce not only the basic routes from the net/http package but more complicated ones using the Gorilla toolkit. By utilizing Gorilla, we can now create regular expressions and implement pattern-based routing and allow much more flexibility to our routing patterns.

With this increased flexibility, we also have to be mindful of errors now, so we've looked at handling error-based redirects and messages, including a custom 404, Page not found message to produce more customized error messages.

Now that we have the basics down for creating endpoints, routes, and handlers; we need to start doing some non-trivial data serving.

In Chapter 3, Connecting to Data, we'll start getting dynamic information from databases, so we can manage data in a smarter and more reliable fashion. By connecting to a couple of different, commonly-used databases, we'll be able to build robust, dynamic, and scalable web applications.

In the previous chapter, we explored how to take URLs and translate them to different pages in our web application. In doing so, we built URLs that were dynamic and resulted in dynamic responses from our (very simple) net/http handlers.

By implementing an extended mux router from the Gorilla toolkit, we expanded the capabilities of the built-in router by allowing regular expressions, which gives our application a lot more flexibility.

This is something that's endemic to some of the most popular web servers. For example, both Apache and Nginx provide methods to utilize regular expressions in routes and staying at par with common solutions should be our minimal baseline for functionality.

But this is just an admittedly important stepping stone to build a robust web application with a lot of varied functionality. To go any further, we need to look at bringing in data.

Our examples in the previous chapter relied on hardcoded content grabbed from static files—this is obviously archaic and doesn't scale. Anyone who has worked in the pre-CGI early days of the Web could regale you with tales of site updates requiring total retooling of static files or explain the anachronism that was Server-Side Includes.

But luckily, the Web became largely dynamic in the late 1990s and databases began to rule the world. While APIs, microservices and NoSQL have in some places replaced that architecture, it still remains the bread and butter of the way the Web works today.

So without further ado, let's get some dynamic data.

In this chapter, we will cover the following topics:

- Connecting to a database

- Using GUID for prettier URLs

- Handling 404s

When it comes to accessing databases, Go's SQL interface provides a very simple and reliable way to connect to various database servers that have drivers.

At this point, most of the big names are covered—MySQL, Postgres, SQLite, MSSQL, and quite a few more have well-maintained drivers that utilize the database/sql interface provided by Go.

The best thing about the way Go handles this through a standardized SQL interface is that you won't have to learn custom Go libraries to interact with your database. This doesn't preclude needing to know the nuances of the database's SQL implementation or other functionality, but it does eliminate one potential area of confusion.

Before you go too much farther, you'll want to make sure that you have a library and a driver for your database of choice installed via go get command.

The Go project maintains a Wiki of all of the current SQLDrivers and is a good starting reference point when looking for an adapter at https://github.com/golang/go/wiki/SQLDrivers

MySQL can be downloaded from https://www.mysql.com/ and although there are a few drivers listed by Google, we recommend the Go-MySQL-Driver. Though you won't go wrong with the recommended alternatives from the Go project, the Go-MySQL-Driver is very clean and well-tested. You can get it at https://github.com/go-sql-driver/mysql/For Postgres, grab a binary or package manager command from http://www.postgresql.org/. The Postgres driver of choice here is pq, which can be installed via go get at github.com/lib/pq

You can choose to design any application you wish, but for these examples we'll look at a very simple blog concept.

Our goal here is to have as few blog entries in our database as possible, to be able to call those directly from our database by GUID and display an error if the particular requested blog entry does not exist.

To do this, we'll create a MySQL database that contains our pages. These will have an internal, automatically incrementing numeric ID, a textual globally unique identifier, or GUID, and some metadata around the blog entry itself.

To start simply, we'll create a title page_title, body text page_content and a Unix timestamp page_date. You can feel free to use one of MySQL's built-in date fields; using an integer field to store a timestamp is just a matter of preference and can allow for some more elaborate comparisons in your queries.

The following is the SQL in your MySQL console (or GUI application) to create the database cms and the requisite table pages:

CREATE TABLE `pages` ( `id` int(11) unsigned NOT NULL AUTO_INCREMENT, `page_guid` varchar(256) NOT NULL DEFAULT '', `page_title` varchar(256) DEFAULT NULL, `page_content` mediumtext, `page_date` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, PRIMARY KEY (`id`), UNIQUE KEY `page_guid` (`page_guid`) ) ENGINE=InnoDB AUTO_INCREMENT=2 DEFAULT CHARSET=latin1;

Note

As mentioned, you can execute this query through any number of interfaces. To connect to MySQL, select your database and try these queries, you can follow the command line documentation at http://dev.mysql.com/doc/refman/5.7/en/connecting.html.

Note the UNIQUE KEY on page_guid. This is pretty important, as if we happen to allow duplicate GUIDs, well, we have a problem. The idea of a globally unique key is that it cannot exist elsewhere, and since we'll rely on it for URL resolution, we want to make sure that there's only one entry per GUID.

As you can probably tell, this is a very basic content type of blog database. We have an auto-incrementing ID value, a title, a date and the page's content, and not a whole lot else going on.

While it's not a lot, it's enough to demonstrate dynamic pages in Go utilizing a database interface.

Just to make sure there's some data in the pages table, add the following query to fill this in a bit:

INSERT INTO `pages` (`id`, `page_guid`, `page_title`, `page_content`, `page_date`) VALUES (NULL, 'hello-world', 'Hello, World', 'I\'m so glad you found this page! It\'s been sitting patiently on the Internet for some time, just waiting for a visitor.', CURRENT_TIMESTAMP);

This will give us something to start with.

Now that we have our structure and some dummy data, let's take a look at how we can connect to MySQL, retrieve the data, and serve it dynamically based on URL requests and Gorilla's mux patterns.

To get started, let's create a shell of what we'll need to connect:

package main import ( "database/sql" "fmt" _ "github.com/go-sql-driver/mysql" "log" )

We're importing the MySQL driver package for what's known as side effects. By this, it's generally meant that the package is complementary to another and provides various interfaces that do not need to be referenced specifically.

You can note this through the underscore _ syntax that precedes the packages import. You're likely already familiar with this as a quick-and-dirty way to ignore the instantiation of a returned value from a method. For example x, _ := something()allows you to ignore the second returned value.

It's also often used when a developer plans to use a library, but hasn't yet. By prepending the package this way, it allows the import declaration to stay without causing a compiler error. While this is frowned upon, the use of the underscore—or blank identifier—in the preceding method, for side effects, is fairly common and often acceptable.

As always, though, this all depends on how and why you're using the identifier:

const ( DBHost = "127.0.0.1" DBPort = ":3306" DBUser = "root" DBPass = "password!" DBDbase = "cms" )

Make sure to replace these values with whatever happens to be relevant to your installation, of course:

var database *sql.DB

By keeping our database connection reference as a global variable, we can avoid a lot of duplicate code. For the sake of clarity, we'll define it fairly high up in the code. There's nothing preventing you from making this a constant instead, but we've left it mutable for any necessary future flexibility, such as adding multiple databases to a single application:

type Page struct {

Title string

Content string

Date string

}This struct, of course, matches our database schema rather closely, with Title, Content and Date representing the non-ID values in our table. As we'll see a bit later in this chapter (and more in the next), describing our data in a nicely-designed struct helps parlay the templating functions of Go. And on that note, make sure your struct fields are exportable or public by keeping them propercased. Any lowercased fields will not be exportable and therefore not available to templates. We will talk more on that later:

func main() {

dbConn := fmt.Sprintf("%s:%s@tcp(%s)/%s", DBUser, DBPass, DBHost, DBDbase)

db, err := sql.Open("mysql", dbConn)

if err != nil {

log.Println("Couldn't connect!")

log.Println(err.Error)

}

database = db

}As we mentioned earlier, this is largely scaffolding. All we want to do here is ensure that we're able to connect to our database. If you get an error, check your connection and the log entry output after Couldn't connect.

If, hopefully, you were able to connect with this script, we can move on to creating a generic route and outputting the relevant data from that particular request's GUID from our database.

To do this we need to reimplement Gorilla, create a single route, and then implement a handler that generates some very simple output that matches what we have in the database.

Let's take a look at the modifications and additions we'll need to make to allow this to happen:

package main import ( "database/sql" "fmt" _ "github.com/go-sql-driver/mysql" "github.com/gorilla/mux" "log" "net/http" )

The big change here is that we're bringing Gorilla and net/http back into the project. We'll obviously need these to serve pages:

const ( DBHost = "127.0.0.1" DBPort = ":3306" DBUser = "root" DBPass = "password!" DBDbase = "cms" PORT = ":8080" )

We've added a PORT constant, which refers to our HTTP server port.

Note that if your host is localhost/127.0.0.1, it's not necessary to specify a DBPort, but we've kept this line in the constants section. We don't use the host here in our MySQL connection:

var database *sql.DB

type Page struct {

Title string

Content string

Date string

}

func ServePage(w http.ResponseWriter, r *http.Request) {

vars := mux.Vars(r)

pageID := vars["id"]

thisPage := Page{}

fmt.Println(pageID)

err := database.QueryRow("SELECT page_title,page_content,page_date FROM pages WHERE id=?", pageID).Scan(&thisPage.Title, &thisPage.Content, &thisPage.Date)

if err != nil {

log.Println("Couldn't get page: +pageID")

log.Println(err.Error)

}

html := `<html><head><title>` + thisPage.Title + `</title></head><body><h1>` + thisPage.Title + `</h1><div>` + thisPage.Content + `</div></body></html>`

fmt.Fprintln(w, html)

}

ServePage is the function that takes an id from mux.Vars and queries our database for the blog entry ID. There's some nuance in the way we make a query that is worth noting; the simplest way to eliminate SQL injection vulnerabilities is to use prepared statements, such as Query, QueryRow, or Prepare. Utilizing any of these and including a variadic of variables to be injected into the prepared statement removes the inherent risk of constructing a query by hand.

The Scan method then takes the results of a query and translates them to a struct; you'll want to make sure the struct matches the order and number of requested fields in the query. In this case, we're mapping page_title, page_content and page_date to a Page struct's Title, Content and Date:

func main() {

dbConn := fmt.Sprintf("%s:%s@/%s", DBUser, DBPass, DBDbase)

fmt.Println(dbConn)

db, err := sql.Open("mysql", dbConn)

if err != nil {

log.Println("Couldn't connect to"+DBDbase)

log.Println(err.Error)

}

database = db

routes := mux.NewRouter()

routes.HandleFunc("/page/{id:[0-9]+}", ServePage)

http.Handle("/", routes)

http.ListenAndServe(PORT, nil)

}Note our regular expression here: it's just numeric, with one or more digits comprising what will be the id variable accessible from our handler.

Remember that we talked about using the built-in GUID? We'll get to that in a moment, but for now let's look at the output of local

host:8080/page/1:

In the preceding example, we can see the blog entry that we had in our database. This is good, but obviously lacking in quite a few ways.

Earlier in this chapter we talked about using the GUID to act as the URL identifier for all requests. Instead, we started by yielding to the numeric, thus automatically incrementing column in the table. That was for the sake of simplicity, but switching this to the alphanumeric GUID is trivial.

All we'll need to do is to switch our regular expression and change our resulting SQL query in our ServePage handler.

If we only change our regular expression, our last URL's page will still work:

routes.HandleFunc("/page/{id:[0-9a-zA\\-]+}", ServePage)The page will of course still pass through to our handler. To remove any ambiguity, let's assign a guid variable to the route:

routes.HandleFunc("/page/{guid:[0-9a-zA\\-]+}", ServePage)After that, we change our resulting call and SQL:

func ServePage(w http.ResponseWriter, r *http.Request) {

vars := mux.Vars(r)

pageGUID := vars["guid"]

thisPage := Page{}

fmt.Println(pageGUID)

err := database.QueryRow("SELECT page_title,page_content,page_date FROM pages WHERE page_guid=?", pageGUID).Scan(&thisPage.Title, &thisPage.Content, &thisPage.Date)After doing this, accessing our page by the /pages/hello-world URL will result in the same page content we got by accessing it through /pages/1. The only real advantage is cosmetic, it creates a prettier URL that is more human-readable and potentially more useful for search engines:

A very obvious problem with our preceding code is that it does not handle a scenario wherein an invalid ID (or GUID) is requested.

As it is, a request to, say, /page/999 will just result in a blank page for the user and in the background a Couldn't get page! message, as shown in the following screenshot:

Resolving this is pretty simple by passing proper errors. Now, in the previous chapter we explored custom 404 pages and you can certainly implement one of those here, but the easiest way is to just return an HTTP status code when a post cannot be found and allow the browser to handle the presentation.

In our preceding code, we have an error handler that doesn't do much except return the issue to our log file. Let's make that more specific:

err := database.QueryRow("SELECT page_title,page_content,page_date FROM pages WHERE page_guid=?", pageGUID).Scan(&thisPage.Title, &thisPage.Content, &thisPage.Date)

if err != nil {

http.Error(w, http.StatusText(404), http.StatusNotFound)

log.Println("Couldn't get page!")

}You will see the output in the following screenshot. Again, it would be trivial to replace this with a custom 404 page, but for now we want to make sure we're addressing the invalid requests by validating them against our database:

Providing good error messages helps improve usability for both developers and other users. In addition, it can be beneficial for SEO, so it makes sense to use HTTP status codes as defined in HTTP standards.

In this chapter, we've taken the leap from simply showing content to showing content that's maintained in a sustainable and maintainable way using a database. While this allows us to display dynamic data easily, it's just a core step toward a fully-functional application.

We've looked at creating a database and then retrieving the data from it to inject into route while keeping our query parameters sanitized to prevent SQL injections.

We also accounted for potential bad requests with invalid GUIDs, by returning 404 Not Found statuses for any requested GUID that does not exist in our database. We also looked at requesting data by ID as well as the alphanumeric GUID.

This is just the start of our application, though.

In Chapter 4, Using Templates, we'll take the data that we've grabbed from MySQL (and Postgres) and apply some of Go's template language to them to give us more frontend flexibility.

By the end of that chapter, we will have an application that allows for creation and deletion of pages directly from our application.

In Chapter 2, Serving and Routing, we explored how to take URLs and translate them to different pages in our web application. In doing so, we built URLs that were dynamic and resulted in dynamic responses from our (very simple) net/http handlers.

We've presented our data as real HTML, but we specifically hard-coded our HTML directly into our Go source. This is not ideal for production-level environments for a number of reasons.

Luckily, Go comes equipped with a robust but sometimes tricky template engine for both text templates, as well as HTML templates.

Unlike a lot of other template languages that eschew logic as a part of the presentation side, Go's template packages enable you to utilize some logic constructs, such as loops, variables, and function declarations in a template. This allows you to offset some of your logic to the template, which means that it's possible to write your application, but you need to allow the template side to provide some extensibility to your product without rewriting the source.

We say some logic constructs because Go templates are sold as logic-less. We will discuss more on this topic later.

In this chapter, we'll explore ways to not only present your data but also explore some of the more advanced possibilities in this chapter. By the end, we will be able to parlay our templates into advancing the separation of presentation and source code.

We will cover the following topics:

- Introducing templates, context, and visibility

- HTML templates and text templates

- Displaying variables and security

- Using logic and control structures

It's worth noting very early that while we're talking about taking our HTML part out of the source code, it's possible to use templates inside our Go application. Indeed, there's nothing wrong with declaring a template as shown:

tpl, err := template.New("mine").Parse(`<h1>{{.Title}}</h1>`)If we do this, however, we'll need to restart our application every time the template needs to change. This doesn't have to be the case if we use file-based templates; instead we can make changes to the presentation (and some logic) without restarting.

The first thing we need to do to move from in-application HTML strings to file-based templates is create a template file. Let's briefly look at an example template that somewhat approximates to what we'll end up with later in this chapter:

<!DOCTYPE html>

<html>

<head>

<title>{{.Title}}</title>

</head>

<body>

<h1>{{.Title}}</h1>

<div>{{.Date}}</div>

{{.Content}}

</body>

</html>Very straightforward, right? Variables are clearly expressed by a name within double curly brackets. So what's with all of the periods/dots? Not unlike a few other similarly-styled templating systems (Mustache, Angular, and so on), the dot signifies scope or context.

The easiest way to demonstrate this is in areas where the variables might otherwise overlap. Imagine that we have a page with a title of Blog Entries and we then list all of our published blog articles. We have a page title but we also have individual entry titles. Our template might look something similar to this:

{{.Title}}

{{range .Blogs}}

<li><a href="{{.Link}}">{{.Title}}</a></li>

{{end}}The dot here specifies the specific scope of, in this case, a loop through the range template operator syntax. This allows the template parser to correctly utilize {{.Title}} as a blog's title versus the page's title.

This is all noteworthy because the very first templates we'll be creating will utilize general scope variables, which are prefixed with the dot notation.

In our first example of displaying the values from our blog from our database to the Web, we produced a hardcoded string of HTML and injected our values directly.

Following are the two lines that we used in Chapter 3, Connecting to Data:

html := `<html><head><title>` + thisPage.Title + `</title></head><body><h1>` + thisPage.Title + `</h1><div>` + thisPage.Content + `</div></body></html> fmt.Fprintln(w, html)

It shouldn't be hard to realize why this isn't a sustainable system for outputting our content to the Web. The best way to do this is to translate this into a template, so we can separate our presentation from our application.

To do this as succinctly as possible, let's modify the method that called the preceding code, ServePage, to utilize a template instead of hardcoded HTML.

So we'll remove the HTML we placed earlier and instead reference a file that will encapsulate what we want to display. From your root directory, create a templates subdirectory and blog.html within it.

The following is the very basic HTML we included, feel free to add some flair:

<html>

<head>

<title>{{.Title}}</title>

</head>

<body>

<h1>{{.Title}}</h1>

<p>

{{.Content}}

</p>

<div>{{.Date}}</div>

</body>

</html>Back in our application, inside the ServePage handler, we'll change our output code slightly to leave an explicit string and instead parse and execute the HTML template we just created:

func ServePage(w http.ResponseWriter, r *http.Request) {

vars := mux.Vars(r)

pageGUID := vars["guid"]

thisPage := Page{}

fmt.Println(pageGUID)

err := database.QueryRow("SELECT page_title,page_content,page_date FROM pages WHERE page_guid=?", pageGUID).Scan(&thisPage.Title, &thisPage.Content, &thisPage.Date)

if err != nil {

http.Error(w, http.StatusText(404), http.StatusNotFound)

log.Println("Couldn't get page!")

return

}

// html := <html>...</html>

t, _ := template.ParseFiles("templates/blog.html")

t.Execute(w, thisPage)

}If, somehow, you failed to create the file or it is otherwise not accessible, the application will panic when it attempts to execute. You can also get panicked if you're referencing struct values that don't exist—we'll need to handle errors a bit better.

The benefits of moving away from a static string should be evident, but we now have the foundation for a much more extensible presentation layer.

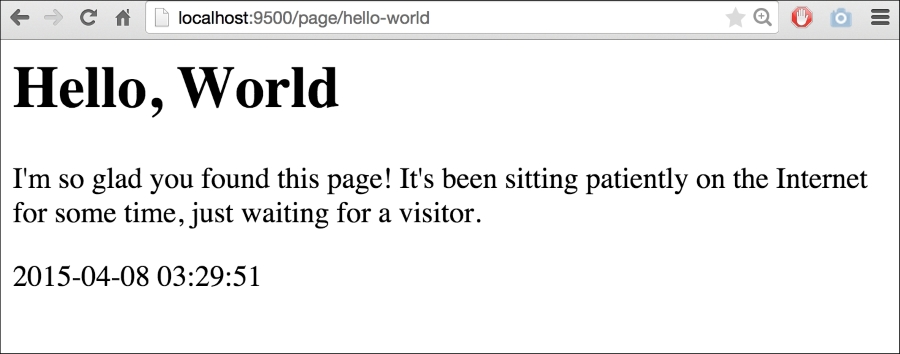

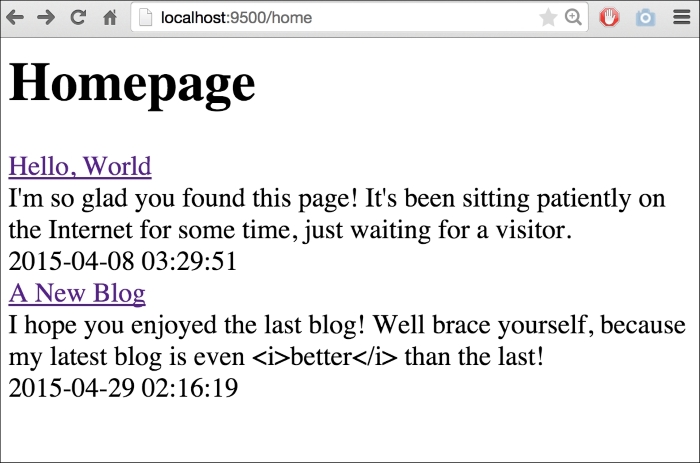

If we visit http://localhost:9500/page/hello-world we'll see something similar to this:

To demonstrate this, let's create a new blog entry by adding this SQL command to your MySQL command line:

INSERT INTO `pages` (`id`, `page_guid`, `page_title`, page_content`, `page_date`)

VALUES:

(2, 'a-new-blog', 'A New Blog', 'I hope you enjoyed the last blog! Well brace yourself, because my latest blog is even <i>better</i> than the last!', '2015-04-29 02:16:19');

Another thrilling piece of content, for sure. Note, however that we have some embedded HTML in this when we attempt to italicize the word better.

Debates about how formatting should be stored notwithstanding, this allows us to take a look at how Go's templates handle this by default. If we visit http://localhost:9500/page/a-new-blog we'll see something similar to this:

As you can see, Go automatically sanitizes our data for output. There are a lot of very, very wise reasons to do this, which is why it's the default behavior. The biggest one, of course, is to avoid XSS and code-injection attack vectors from untrusted sources of input, such as the general users of the site and so on.

But ostensibly we are creating this content and should be considered trusted. So in order to validate this as trusted HTML, we need to change the type of template.HTML:

type Page struct {

Title string

Content template.HTML

Date string

}If you attempt to simply scan the resulting SQL string value into a template.HTML you'll find the following error:

sql: Scan error on column index 1: unsupported driver -> Scan pair: []uint8 -> *template.HTML

The easiest way to work around this is to retain the string value in RawContent and assign it back to Content:

type Page struct {

Title string

RawContent string

Content template.HTML

Date string

}

err := database.QueryRow("SELECT page_title,page_content,page_date FROM pages WHERE page_guid=?", pageGUID).Scan(&thisPage.Title, &thisPage.RawContent, &thisPage.Date)

thisPage.Content = template.HTML(thisPage.RawContent)If we go run this again, we'll see our HTML as trusted:

Earlier in this chapter we looked at how we can use a range in our templates just as we would directly in our code. Take a look at the following code:

{{range .Blogs}}

<li><a href="{{.Link}}">{{.Title}}</a></li>

{{end}}You may recall that we said that Go's templates are without any logic, but this depends on how you define logic and whether shared logic lies exclusively in the application, the template, or a little of both. It's a minor point, but because Go's templates offer a lot of flexibility; it's the one worth thinking about.

Having a range feature in the preceding template, by itself, opens up a lot of possibilities for a new presentation of our blog. We can now show a list of blogs or break our blog up into paragraphs and allow each to exist as a separate entity. This can be used to allow relationships between comments and paragraphs, which have started to pop up as a feature in some publication systems in recent years.

But for now, let's use this opportunity to create a list of blogs in a new index page. To do this, we'll need to add a route. Since we have /page we could go with /pages, but since this will be an index, let's go with / and /home:

routes := mux.NewRouter()

routes.HandleFunc("/page/{guid:[0-9a-zA\\-]+}", ServePage)

routes.HandleFunc("/", RedirIndex)

routes.HandleFunc("/home", ServeIndex)

http.Handle("/", routes)We'll use RedirIndex to automatically redirect to our /home endpoint as a canonical home page.

Serving a simple 301 or Permanently Moved redirect requires very little code in our method, as shown:

func RedirIndex(w http.ResponseWriter, r *http.Request) {

http.Redirect(w, r, "/home", 301)

}This is enough to take any requests from / and bring the user to /home automatically. Now, let's look at looping through our blogs on our index page in the ServeIndex HTTP handler:

func ServeIndex(w http.ResponseWriter, r *http.Request) {

var Pages = []Page{}

pages, err := database.Query("SELECT page_title,page_content,page_date FROM pages ORDER BY ? DESC", "page_date")

if err != nil {

fmt.Fprintln(w, err.Error)

}

defer pages.Close()

for pages.Next() {

thisPage := Page{}

pages.Scan(&thisPage.Title, &thisPage.RawContent, &thisPage.Date)

thisPage.Content = template.HTML(thisPage.RawContent)

Pages = append(Pages, thisPage)

}

t, _ := template.ParseFiles("templates/index.html")

t.Execute(w, Pages)

}And here's templates/index.html:

<h1>Homepage</h1>

{{range .}}

<div><a href="!">{{.Title}}</a></div>

<div>{{.Content}}</div>

<div>{{.Date}}</div>

{{end}}

We've highlighted an issue with our Page struct here—we have no way to get the reference to the page's GUID. So, we need to modify our struct to include that as the exportable Page.GUID variable:

type Page struct {

Title string

Content template.HTML

RawContent string

Date string

GUID string

}Now, we can link our listings on our index page to their respective blog entries as shown:

var Pages = []Page{}

pages, err := database.Query("SELECT page_title,page_content,page_date,page_guid FROM pages ORDER BY ? DESC", "page_date")

if err != nil {

fmt.Fprintln(w, err.Error)

}

defer pages.Close()

for pages.Next() {

thisPage := Page{}

pages.Scan(&thisPage.Title, &thisPage.Content, &thisPage.Date, &thisPage.GUID)

Pages = append(Pages, thisPage)

}And we can update our HTML part with the following code:

<h1>Homepage</h1>

{{range .}}

<div><a href="/page/{{.GUID}}">{{.Title}}</a></div>

<div>{{.Content}}</div>

<div>{{.Date}}</div>

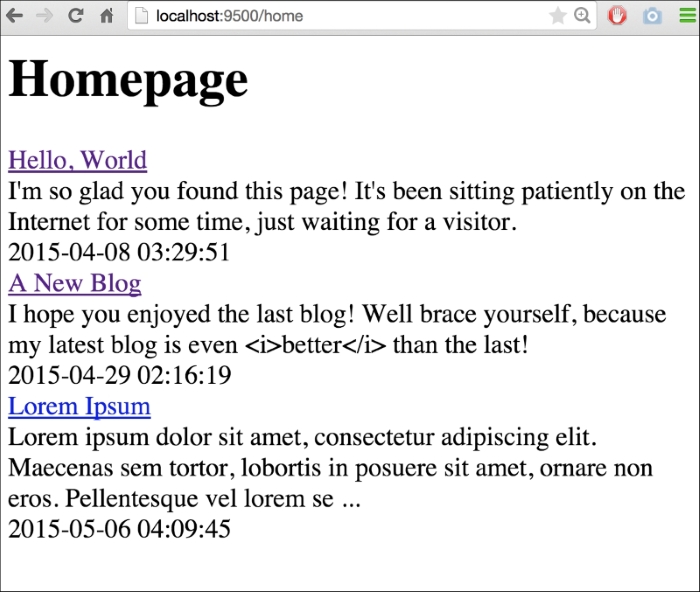

{{end}}But this is just the start of the power of the templates. What if we had a much longer piece of content and wanted to truncate its description?

We can create a new field within our Page struct and truncate that. But that's a little clunky; it requires the field to always exist within a struct, whether populated with data or not. It's much more efficient to expose methods to the template itself.

So let's do that.

First, create yet another blog entry, this time with a larger content value. Choose whatever you like or select the INSERT command as shown:

INSERT INTO `pages` (`id`, `page_guid`, `page_title`, `page_content`, `page_date`)

VALUES:

(3, 'lorem-ipsum', 'Lorem Ipsum', 'Lorem ipsum dolor sit amet, consectetur adipiscing elit. Maecenas sem tortor, lobortis in posuere sit amet, ornare non eros. Pellentesque vel lorem sed nisl dapibus fringilla. In pretium...', '2015-05-06 04:09:45');

Now, we need to represent our truncation as a method for the type Page. Let's create that method to return a string that represents the shortened text.

The cool thing here is that we can essentially share a method between the application and the template:

func (p Page) TruncatedText() string {

chars := 0

for i, _ := range p.Content {

chars++

if chars > 150 {

return p.Content[:i] + ` ...`

}

}

return p.Content

}This code will loop through the length of content and if the number of characters exceeds 150, it will return the slice up to that number in the index. If it doesn't ever exceed that number, TruncatedText will return the content as a whole.

Calling this in the template is simple, except that you might be expected to need a traditional function syntax call, such as TruncatedText(). Instead, it's referenced just as any variable within the scope:

<h1>Homepage</h1>

{{range .}}

<div><a href="/page/{{.GUID}}">{{.Title}}</a></div>

<div>{{.TruncatedText}}</div>

<div>{{.Date}}</div>

{{end}}By calling .TruncatedText, we essentially process the value inline through that method. The resulting page reflects our existing blogs and not the truncated ones and our new blog entry with truncated text and ellipsis appended:

I'm sure you can imagine how being able to reference embedded methods directly in your templates can open up a world of presentation possibilities.

We've just scratched the surface of what Go's templates can do and we'll explore further topics as we continue, but this chapter has hopefully introduced the core concepts necessary to start utilizing templates directly.

We've looked at simple variables, as well as implementing methods within the application, within the templates themselves. We've also explored how to bypass injection protection for trusted content.

In the next chapter, we'll integrate a backend API for accessing information in a RESTful way to read and manipulate our underlying data. This will allow us to do some more interesting and dynamic things on our templates with Ajax.

In Chapter 2, Serving and Routing, we explored how to route URLs to the different pages in our web application. In doing so, we built URLs that were dynamic and resulted in dynamic responses from our (very simple) net/http handlers.

We've just scratched the surface of what Go's templates can do, and we'll also explore further topics as we continue, but in this chapter we have tried to introduce the core concepts that are necessary to start utilizing the templates directly.

We've looked at simple variables as well as the implementing methods within the application using the templates themselves. We've also explored how to bypass injection protection for trusted content.

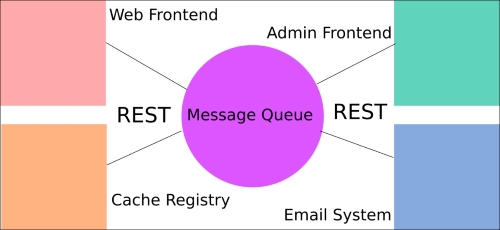

The presentation side of web development is important, but it's also the least engrained aspect. Almost any framework will present its own extension of built-in Go templating and routing syntaxes. What really takes our application to the next level is building and integrating an API for both general data access, as well as allowing our presentation layer to be more dynamically driven.

In this chapter, we'll develop a backend API for accessing information in a RESTful way and to read and manipulate our underlying data. This will allow us to do some more interesting and dynamic things in our templates with Ajax.

In this chapter, we will cover the following topics:

- Setting up the basic API endpoint

- RESTful architecture and best practices

- Creating our first API endpoint

- Implementing security

- Creating data with POST

- Modifying data with PUT

First, we'll set up a basic API endpoint for both pages and individual blog entries.

We'll create a Gorilla endpoint route for a GET request that will return information about our pages and an additional one that accepts a GUID, which matches alphanumeric characters and hyphens:

routes := mux.NewRouter()

routes.HandleFunc("/api/pages", APIPage).

Methods("GET").

Schemes("https")

routes.HandleFunc("/api/pages/{guid:[0-9a-zA\\-]+}", APIPage).

Methods("GET").

Schemes("https")

routes.HandleFunc("/page/{guid:[0-9a-zA\\-]+}", ServePage)

http.Handle("/", routes)

http.ListenAndServe(PORT, nil)Note here that we're capturing the GUID again, this time for our /api/pages/* endpoint, which will mirror the functionality of the web-side counterpart, returning all meta data associated with a single page.

func APIPage(w http.ResponseWriter, r *http.Request) {

vars := mux.Vars(r)

pageGUID := vars["guid"]

thisPage := Page{}

fmt.Println(pageGUID)

err := database.QueryRow("SELECT page_title,page_content,page_date FROM pages WHERE page_guid=?", pageGUID).Scan(&thisPage.Title, &thisPage.RawContent, &thisPage.Date)

thisPage.Content = template.HTML(thisPage.RawContent)

if err != nil {

http.Error(w, http.StatusText(404), http.StatusNotFound)

log.Println(err)

return

}

APIOutput, err := json.Marshal(thisPage)

fmt.Println(APIOutput)

if err != nil {

http.Error(w, err.Error(), http.StatusInternalServerError)

return

}

w.Header().Set("Content-Type", "application/json")

fmt.Fprintln(w, thisPage)

}The preceding code represents the simplest GET-based request, which returns a single record from our /pages

endpoint. Let's take a look at REST now, and see how we'll structure and implement other verbs and data manipulations from the API.

In the world of web API design, there has been an array of iterative, and sometimes competing, efforts to find a standard system and format to deliver information across multiple environments.

In recent years, the web development community at large seems to have—at least temporarily—settled on REST as the de facto approach. REST came after a few years of SOAP dominance and introduced a simpler method for sharing data.

REST APIs aren't bound to a format and are typically cacheable and delivered via HTTP or HTTPS.

The biggest takeaway to start with is an adherence to HTTP verbs; those initially specified for the Web are honored in their original intent. For example, HTTP verbs, such as DELETE and PATCH fell into years of disuse despite being very explicit about their purpose. REST has been the primary impetus for the use of the right method for the right purpose. Prior to REST, it was not uncommon to see GET and POST requests being used interchangeably to do myriad things that were otherwise built into the design of HTTP.

In REST, we follow a

Create-Read-Update-Delete (CRUD)-like approach to retrieve or modify data. POST is used majorly to create, PUT is used as an update (though it can also be used to create), the familiar GET is used to read and DELETE is used to delete, is well, just that.

Perhaps even more important is the fact that a RESTful API should be stateless. By that we mean that each request should exist on its own, without the server necessarily having any knowledge about prior or potential future requests. This means that the idea of a session would technically violate this ethos, as we'd be storing some sense of state on the server itself. Some people disagree; we'll look at this in detail later on.

One final note is on API URL structure, because the method is baked into the request itself as part of the header, we don't need to explicitly express that in our request.

In other words, we don't need something, such as /api/blogs/delete/1. Instead, we can simply make our request with the DELETE method to api/blogs/1.

There is no rigid format of the URL structure and you may quickly discover that some actions lack HTTP-specific verbs that make sense, but in short there are a few things we should aim for:

- The resources are expressed cleanly in the URL

- We properly utilize HTTP verbs

- We return appropriate responses based on the type of request

Our goal in this chapter is to hit the preceding three points with our API.

If there is a fourth point, it would say that we maintain backwards compatibility with our APIs. As you examine the URL structure here, you might wonder how versions are handled. This tends to vary from organization to organization, but a good policy is to keep the most recent URL canonical and deprecate to explicit version URLs.

For example, even though our comments will be accessible at /api/comments, the older versions will be found at /api/v2.0/comments, where 2 obviously represents our API, as it existed in version 2.0.

Note

Despite being relatively simple and well-defined in nature, REST is an oft-argued subject with enough ambiguity to start, most often for the better, a lot of debate. Remember that REST is not a standard; for example, the W3C has not and likely will not ever weigh in on what REST is and isn't. If you haven't already, you'll begin to develop some very strong opinions on what you feel is properly RESTful.

Given that we want to access data from the client-side as well as from server to server, we'll need to start making some of that accessible via an API.

The most reasonable thing for us to do is a simple read, since we don't yet have methods to create data outside of direct SQL queries. We did that at the beginning of the chapter with our APIPage method, routed through a /api/pages/{UUID} endpoint.

This is great for GET requests, where we're not manipulating data, but if we need to create or modify data, we'll need to utilize other HTTP verbs and REST methods. To do this effectively, it's time to investigate some authentication and security in our API.

When you think about creating data with an API like the one we've just designed, what's the first concern that comes to your mind? If it was security, then good for you. Accessing data is not always without a security risk, but it's when we allow for modification of data that we need to really start thinking about security.

In our case, read data is totally benign. If someone can access all of our blog entries via a GET request, who cares? Well, we may have a blog on embargo or accidentally exposed sensitive data on some resource.

Either way, security should always be a concern, even with a small personal project like a blogging platform, similar to the one we're building.

There are two big ways of separating these concerns:

- Are the requests to our APIs secure and private?

- Are we controlling access to data?

Lets tackle Step 2 first. If we want to allow users to create or delete information, we need to give them specific access to that.

There are a few ways to do this:

We can provide API tokens that will allow short-lived request windows, which can be validated by a shared secret. This is the essence of Oauth; it relies on a shared secret to validate cryptographically encoded requests. Without the shared secret, the request and its token will never match, and an API request can then be rejected.

The cond method is a simple API key, which leads us back to point number 1 in the preceding list.

If we allow cleartext API keys, then we might as well not have security at all. If our requests can be sniffed off the wire without much effort, there's little point in even requiring an API key.

So this means that no matter which method we choose, our servers should provide an API over HTTPS. Luckily, Go provides a very easy way to utilize either HTTP or HTTPS via Transport Layer Security (TLS); TLS is the successor of SSL. As a web developer, you must already be familiar with SSL and also be aware of its history of security issues, most recently its susceptibility to the POODLE vulnerability, which was exposed in 2014.

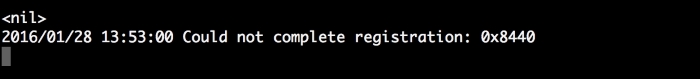

To allow either method, we need to have a user registration model so that we can have new users and they can have some sort of credentials to modify data. To invoke a TLS server, we'll need a secure certificate. Since this is a small project for experimentation, we won't worry too much about a real certificate with a high level of trust. Instead, we'll just generate our own.

Creating a self-signed certificate varies by OS and is beyond the scope of this book, so let's just look at the method for OS X.