"Computer language design is just like a stroll in the park. Jurassic Park, that is."

--Larry Wall

In this chapter, we will cover the following recipes:

- Adding a resource to a node

- Using Facter to describe a node

- Installing a package before starting a service

- Installing, configuring, and starting a service

- Using community Puppet style

- Creating a manifest

- Checking your manifests with Puppet-lint

- Using modules

- Using standard naming conventions

- Using inline templates

- Iterating over multiple items

- Writing powerful conditional statements

- Using regular expressions in if statements

- Using selectors and case statements

- Using the in operator

- Using regular expression substitutions

- Using the future parser

This recipe introduces the language and shows you the basics of writing Puppet code. Beginners may wish to consult Puppet 3 Beginner's Guide by John Arundel (Packt Publishing) in addition to this section. Puppet code files are called manifests, and manifests declare resources. A resource in Puppet may be a type, class, or node. A type is something like a file or package, or anything else that has a type declared in the language. The current list of standard types is available on the Puppet Labs website at https://docs.puppetlabs.com/references/latest/type.html. I find myself referencing this site very often.

You may define your own types in two ways: with a mechanism similar to a subroutine, called defined types, or by extending the language with a custom type. Types are the heart of the language. They describe the things that make up a node ("node" is the word Puppet uses for client computers and devices). Puppet uses resources to describe the state of a node. For example, we will declare the following package resource for a node using a site manifest (site.pp).

Create a site.pp file and place the following code in it:

Tip

You can download the example code files for all Packt books you have purchased from your account at http://www.packtpub.com. If you purchased this book elsewhere, you can visit http://www.packtpub.com/support and register to have the files e-mailed directly to you.


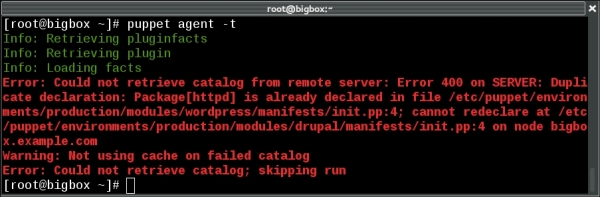
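
If the image does not render, the manifest it shows can probably be approximated as follows. This is a reconstruction based on the description in the next paragraph (a default node that installs a package named httpd), not a verbatim copy, so its layout may differ from the original:

```puppet
# site.pp: applies to any node without a more specific node definition
node default {
  # The RAL picks a suitable provider (yum, apt, ...) for this package
  package { 'httpd':
    ensure => 'installed',
  }
}
```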

This manifest ensures that any node it is applied to installs a package named 'httpd'. The default keyword is a wildcard to Puppet: anything within the node default definition applies to any node that does not match a more specific node definition. When Puppet applies the manifest to a node, it uses a Resource Abstraction Layer (RAL) to translate the package type into the package management system of the target node. This means we can use the same manifest to install the httpd package on any system for which Puppet has a provider for the package type. Providers are the pieces of code that do the real work of applying a manifest. When the previous code is applied to a node running a YUM-based distribution, the YUM provider installs the httpd RPM package. When the same code is applied to a node running an APT-based distribution, the APT provider installs the httpd DEB package. That package may not exist, because most Debian-based systems call this package apache2; we'll deal with this sort of naming problem later.
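
One common way to handle the naming problem described above is to choose the package name from a fact. The following sketch assumes that Facter's osfamily fact reports 'Debian' on Debian-based systems. It treats every other family as using httpd, which is a simplification. The selector syntax is covered in the "Using selectors and case statements" recipe, and $apache_package is a name chosen for illustration:

```puppet
node default {
  # Hypothetical variable: pick the Apache package name per OS family
  $apache_package = $::osfamily ? {
    'Debian' => 'apache2',  # Debian, Ubuntu and derivatives
    default  => 'httpd',    # assumption: RedHat family and everything else
  }

  package { $apache_package:
    ensure => 'installed',
  }
}
```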

Facter is a separate utility that Puppet depends on. Puppet uses it to gather information about the target system (node), and Facter calls these nuggets of information facts. You can run facter from the command line to obtain real-time information from the system.


Use facter to find facts about the node from the command line.


When facter is installed (as a dependency of puppet), several fact definitions are installed by default. You can reference each of these facts by name from the command line.


Running facter without any arguments prints all the facts known about the system. We will see in later chapters that facter can be extended with your own custom facts. All facts are available for you to use as variables; variables are discussed in the next section.

Variables in Puppet are marked with a dollar sign ($) character. When using variables within a manifest, it is preferred to enclose the variable within braces "${myvariable}" instead of "$myvariable". All of the facts from facter can be referenced as top scope variables (we will discuss scope in the next section). For example, the fully qualified domain name (FQDN) of the node may be referenced by "${::fqdn}". Variables can only contain alphabetic characters, numerals, and the underscore character (_). As a matter of style, variables should start with an alphabetic character. Never use dashes in variable names.
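
A small sketch of these rules in a manifest follows. The file path and the $example_file variable are hypothetical. The example interpolates the fqdn fact as a top-scope variable inside a double-quoted string, using braces:

```puppet
node default {
  # Variable names: letters, numbers and underscores only (never dashes)
  $example_file = '/tmp/fqdn-example'   # hypothetical path

  file { $example_file:
    ensure  => file,
    # Facts are top-scope variables; double quotes allow interpolation,
    # and the braces mark exactly where the variable name ends
    content => "This node is ${::fqdn}\n",
  }
}
```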

In the preceding variable example, the fully qualified domain name was referred to as ${::fqdn} rather than ${fqdn}; the double colons are how Puppet differentiates scope. The highest-level scope, called top scope or global scope, is referred to by two colons (::) at the beginning of a variable identifier. To reduce namespace collisions, always use fully scoped variable identifiers in your manifests. If you are a Unix user, think of top scope as the / (root) level: you refer to variables with the double colon syntax much as you would refer to a directory by its full path. If you are a developer, think of top scope variables as global variables. Unlike typical global variables, though, you should always refer to them with the double colon notation to guarantee that a local variable isn't obscuring the top scope variable.
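
The following sketch illustrates the shadowing problem that the double colon notation avoids. The $role variable and the scope_example class are hypothetical names chosen for illustration. Inside the class, a local variable hides the top-scope variable of the same name, so only ${::role} reliably reaches the top-scope value:

```puppet
# Hypothetical top-scope variable, set in site.pp outside any class or node
$role = 'webserver'

class scope_example {
  # A local variable with the same name hides the top-scope one
  $role = 'local'

  notify { "local role: ${role}": }       # resolves to 'local'
  notify { "top-scope role: ${::role}": } # resolves to 'webserver'
}

include scope_example
```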


To show how ordering works, we'll create a manifest that installs httpd and then ensures that the httpd service is running.


We start by defining the service:

```puppet
service {'httpd':
  ensure  => running,
  require => Package['httpd'],
}
```

This service requires a package resource named httpd, so we now need to define that resource:

```puppet
package {'httpd':
  ensure => 'installed',
}
```

In this example, the package will be installed before the service is started. Because require appears in the definition of the httpd service, the package is installed first, regardless of the order of the resources in the manifest file.

Capitalization is important in Puppet. In our previous example, we created a package named httpd. To refer to this package later, we capitalize its type (package), as in Package['httpd'].

The same rule applies to defined types: when you refer to an instance of a defined type, capitalize each namespace segment of the type's name.
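
The following sketch shows how a reference to a namespaced defined type is capitalized. The example::greeting type, its message parameter, and the resource titles are hypothetical:

```puppet
# A hypothetical defined type in the "example" namespace
define example::greeting ($message = 'Hello') {
  file { "/tmp/${title}":
    ensure  => file,
    content => "${message}\n",
  }
}

# Declaring an instance: the type name is written in lowercase
example::greeting { 'greeting1':
  message => 'Hello, world!',
}

# Referring to that instance: every segment of the type name is capitalized
notify { 'after-greeting':
  message => 'greeting1 has been applied',
  require => Example::Greeting['greeting1'],
}
```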

All the manifests that will be used to define a node are compiled into a catalog. A catalog is the compiled set of resources, and the relationships between them, that will be applied to configure a node. It is important to remember that manifests are not applied to nodes sequentially; there is no inherent order to the application of manifests. So how do we ensure that the httpd service starts only after the httpd package has been installed, as in the previous example? We use metaparameters such as require.

The before and require metaparameters specify a direct ordering. notify implies before, and subscribe implies require. The notify metaparameter is most often used with services. It tells a service to restart after the notifying resource (usually a package or file resource) has been changed on the node. In the case of files, once the file is created or modified on the node, a notify parameter restarts any services it names. The subscribe metaparameter has the same effect but is declared on the service, which subscribes to the file.
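
The following sketch expresses these relationships from the service's side using require and subscribe. The configuration file path and its content are hypothetical. Writing before on the package and notify on the file would produce the same ordering and refresh behaviour:

```puppet
package { 'httpd':
  ensure => installed,
}

# Hypothetical config file; this require is equivalent to
# before => File['/etc/httpd/conf.d/example.conf'] on the package
file { '/etc/httpd/conf.d/example.conf':
  ensure  => file,
  content => "# managed by Puppet\n",
  require => Package['httpd'],
}

# subscribe = require + "restart me when the file changes";
# notify => Service['httpd'] on the file would be equivalent
service { 'httpd':
  ensure    => running,
  require   => Package['httpd'],
  subscribe => File['/etc/httpd/conf.d/example.conf'],
}
```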

The relationship between package and service described previously is an important and powerful paradigm of Puppet. Adding one more resource type, file, creates what puppeteers call the trifecta. Almost all system administration tasks revolve around these three resource types: as a system administrator, you install a package, configure it with files, and then start the service.

A key concept of Puppet is that the state of the system before a catalog is applied should not affect the outcome of a Puppet run. In other words, at the end of a successful Puppet run, the system is in a known state, and applying the catalog again leaves the system in that same state. This property of Puppet is known as idempotency: no matter how many times you do something, the result is the same as the first time you did it. For instance, if you had a light switch and gave the instruction to turn it on, the light would turn on. If you gave the instruction again, the light would simply remain on.
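
Most built-in types, such as file, are idempotent by design. An exec resource, however, runs its command on every Puppet run unless it is told how to detect that the work is already done. The following sketch uses the exec type's creates parameter for that purpose. The paths and the exec title are hypothetical:

```puppet
# Idempotent by design: once the file has this content, later runs change nothing
file { '/tmp/idempotency-example':
  content => "Hello, world!\n",
}

# Without 'creates', this command would run on every Puppet run;
# with it, Puppet skips the exec once /tmp/marker exists
exec { 'create-marker':
  command => '/bin/touch /tmp/marker',
  creates => '/tmp/marker',
}
```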


We need the same definitions as in our last example, because the package must be installed and the service must be running. We also need two more things: the configuration file and an index page (index.html). To create them, we follow these steps:

- As in the previous example, we ensure the service is running and specify that the service requires the httpd package:

  ```puppet
  service {'httpd':
    ensure  => running,
    require => Package['httpd'],
  }
  ```

- We then define the package as follows:

  ```puppet
  package {'httpd':
    ensure => installed,
  }
  ```

- Now, we create the /etc/httpd/conf.d/cookbook.conf configuration file. The /etc/httpd/conf.d directory will not exist until the httpd package is installed. The require metaparameter tells Puppet that the httpd package must be installed before this file is created, and notify restarts the service whenever the file changes:

  ```puppet
  file {'/etc/httpd/conf.d/cookbook.conf':
    content => "<VirtualHost *:80>\nServerName cookbook\nDocumentRoot /var/www/cookbook\n</VirtualHost>\n",
    require => Package['httpd'],
    notify  => Service['httpd'],
  }
  ```

- We then create an index.html file for our virtual host in /var/www/cookbook. This directory won't exist yet, so we need to create it as well, using the following code:

  ```puppet
  file {'/var/www/cookbook':
    ensure  => directory,
    # make sure the parent /var/www exists first (assumed to come with httpd)
    require => Package['httpd'],
  }
  file {'/var/www/cookbook/index.html':
    content => "<html><h1>Hello World!</h1></html>\n",
    require => File['/var/www/cookbook'],
  }
  ```
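
The package, file, and service ordering in the steps above can also be written with Puppet's chaining arrows instead of metaparameters. This is a different syntax for the same relationships, shown here as an alternative sketch rather than as the recipe's method. In it, -> orders two resources, and ~> orders them and also sends a refresh:

```puppet
package { 'httpd':
  ensure => installed,
}

file { '/etc/httpd/conf.d/cookbook.conf':
  content => "<VirtualHost *:80>\nServerName cookbook\nDocumentRoot /var/www/cookbook\n</VirtualHost>\n",
}

service { 'httpd':
  ensure => running,
}

# -> behaves like before/require; ~> behaves like notify/subscribe
Package['httpd'] -> File['/etc/httpd/conf.d/cookbook.conf'] ~> Service['httpd']
```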


The require attributes on the file resources tell Puppet that the /var/www/cookbook directory must exist before the index.html file can be created. The important concept to remember is that we cannot assume anything about the target system (node). We need to define everything that the target depends on. Any time you create a file in a manifest, you have to ensure that the directory containing that file exists. Any time you specify that a service should be running, you have to ensure that the package providing that service is installed.

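Once Puppet has applied this manifest, httpd will be running and serving the cookbook VirtualHost defined in /etc/httpd/conf.d/cookbook.conf.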

If other people need to read or maintain your manifests, or if you want to share code with the community, it's a good idea to follow the existing style conventions as closely as possible. These conventions govern aspects of your code such as layout, spacing, quoting, alignment, and variable references. The official Puppet Labs style recommendations are available at http://docs.puppetlabs.com/guides/style_guide.html.

Always quote your resource names, and avoid leaving them as unquoted bare words.
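
The following sketch illustrates the quoting rule for resource names with the httpd package used earlier. As far as we know, Puppet 3 still parses a bare-word title, but puppet-lint flags it as an unquoted resource title:

```puppet
# Preferred: the resource title is a quoted string
package { 'httpd':
  ensure => installed,
}

# Avoid: a bare-word title such as
#   package { httpd: ensure => installed, }
# which goes against the community style guide
```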

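Use single quotes for all strings, except when the string contains variable references (such as "${::fqdn}") or character escape sequences (such as "\n").

Puppet doesn't process variable references or escape sequences unless they're inside double quotes.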
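
The following sketch shows both quoting styles side by side. The file path is hypothetical:

```puppet
file { '/tmp/quoting-example':   # plain string: single quotes
  ensure  => file,
  # Double quotes: the string contains a variable reference and an escape
  # sequence. In single quotes, '${::fqdn}\n' would be written out literally,
  # dollar sign, braces, backslash and all.
  content => "Managed on ${::fqdn}\n",
}
```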

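Always quote parameter values that are not reserved words. However, reserved words such as the Boolean values true and false are not quoted, and bare-word attribute values such as installed and running are commonly left unquoted as well.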
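
The following sketch illustrates which values are quoted and which are not. The user resource and its comment are hypothetical:

```puppet
service { 'httpd':
  ensure => running,   # bare-word value, commonly left unquoted
  enable => true,      # Boolean keyword: never quoted
}

# Hypothetical user, for illustration only
user { 'jdoe':
  ensure  => present,
  comment => 'Jane Doe',   # ordinary string value: quoted
}
```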

There is only one Boolean false value in Puppet: the word false without any quotes. The string "false" evaluates to true, and so does the string "true". In fact, almost everything other than the literal false evaluates to true when treated as a Boolean. The exceptions are undef and, in Puppet 3, the empty string ("").
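
The following sketch shows the "false" pitfall in conditionals. The notify titles are arbitrary. When this manifest is applied, the first two notify resources are declared because any non-empty string is true. The third is never declared:

```puppet
if "false" {
  notify { 'True': }        # a non-empty string is true
}

if 'false' {
  notify { 'Also true': }   # the quoting style makes no difference
}

if false {
  notify { 'Not true': }    # only the bare literal false is false
}
```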

Always include curly braces ({}) around variable names when referring to them in strings. For example, write "${::fqdn}" rather than "$::fqdn".
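
Braces matter most when a variable is followed directly by characters that are valid in variable names. The following sketch uses a hypothetical $brand variable and file path:

```puppet
$brand = 'acme'   # hypothetical value

file { '/tmp/brand-example.conf':
  # With braces, Puppet knows exactly where the variable name ends.
  # Without them, "$brand_default" would be read as a variable named
  # brand_default, not as $brand followed by the text "_default".
  content => "brand=${brand}_default\n",
}
```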


If you already have some Puppet code (known as a Puppet manifest), you can skip this section and go on to the next. If not, we'll see how to create and apply a simple manifest.

To create and apply a simple manifest, follow these steps:

- First, install Puppet locally on your machine, or create a virtual machine and install Puppet on it. For YUM-based systems, use https://yum.puppetlabs.com/, and for APT-based systems, use https://apt.puppetlabs.com/. You may also use gem to install Puppet. For our examples, we'll install Puppet using gem on a Debian Wheezy system (hostname: cookbook). To use gem, we need the rubygems package, which we install as follows:

  ```
  t@cookbook:~$ sudo apt-get install rubygems
  Reading package lists... Done
  Building dependency tree
  Reading state information... Done
  The following NEW packages will be installed:
    rubygems
  0 upgraded, 1 newly installed, 0 to remove and 0 not upgraded.
  Need to get 0 B/597 kB of archives.
  After this operation, 3,844 kB of additional disk space will be used.
  Selecting previously unselected package rubygems.
  (Reading database ... 30390 files and directories currently installed.)
  Unpacking rubygems (from .../rubygems_1.8.24-1_all.deb) ...
  Processing triggers for man-db ...
  Setting up rubygems (1.8.24-1) ...
  ```

- Now, use gem to install Puppet:

    t@cookbook:~$ sudo gem install puppet
    Successfully installed hiera-1.3.4
    Fetching: facter-2.3.0.gem (100%)
    Successfully installed facter-2.3.0
    Fetching: puppet-3.7.3.gem (100%)
    Successfully installed puppet-3.7.3
    Installing ri documentation for hiera-1.3.4
    Installing ri documentation for facter-2.3.0
    Installing ri documentation for puppet-3.7.3
    Done installing documentation for hiera, facter, puppet after 239 seconds

- Three gems are installed. Now, with Puppet installed, we can create a directory to contain our Puppet code:

    t@cookbook:~$ mkdir -p .puppet/manifests
    t@cookbook:~$ cd .puppet/manifests
    t@cookbook:~/.puppet/manifests$

- Within your manifests directory, create the site.pp file with the following content:

    node default {
      file { '/tmp/hello':
        content => "Hello, world!\n",
      }
    }

- Test your manifest with the puppet apply command. This tells Puppet to read the manifest, compare it to the state of the machine, and make any necessary changes to that state:

    t@cookbook:~/.puppet/manifests$ puppet apply site.pp
    Notice: Compiled catalog for cookbook in environment production in 0.14 seconds
    Notice: /Stage[main]/Main/Node[default]/File[/tmp/hello]/ensure: defined content as '{md5}746308829575e17c3331bbcb00c0898b'
    Notice: Finished catalog run in 0.04 seconds

- To see if Puppet did what we expected (create the /tmp/hello file with the Hello, world! content), run the following command:

    t@cookbook:~/.puppet/manifests$ cat /tmp/hello
    Hello, world!
    t@cookbook:~/.puppet/manifests$
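The same manifest can state its intent more explicitly. The sketch below adds ensure and mode parameters to the file resource; the mode value is an assumption chosen for illustration. Running puppet apply --noop site.pp first reports what Puppet would change without changing anything:

```puppet
node default {
  file { '/tmp/hello':
    ensure  => 'file',             # make sure it is a regular file
    content => "Hello, world!\n",  # double quotes so \n becomes a newline
    mode    => '0644',             # assumed permissions, quoted as a string
  }
}
```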


The puppet-lint tool will automatically check your code against the style guide. The next section explains how to use it.

Here's what you need to do to install Puppet-lint:

- We'll install Puppet-lint using the gem provider, because the gem version is much more up to date than the APT or RPM packages available. Create a puppet-lint.pp manifest as shown in the following code snippet:

    package {'puppet-lint':
      ensure => 'installed',
      provider => 'gem',
    }

- Run puppet apply on the puppet-lint.pp manifest, as shown in the following command:

    t@cookbook ~$ puppet apply puppet-lint.pp
    Notice: Compiled catalog for node1.example.com in environment production in 0.42 seconds
    Notice: /Stage[main]/Main/Package[puppet-lint]/ensure: created
    Notice: Finished catalog run in 2.96 seconds
    t@cookbook ~$ gem list puppet-lint
    *** LOCAL GEMS ***
    puppet-lint (1.0.1)

Follow these steps to use Puppet-lint:

- Choose a Puppet manifest file that you want to check with Puppet-lint, and run the following command:

    t@cookbook ~$ puppet-lint puppet-lint.pp
    WARNING: indentation of => is not properly aligned on line 2
    ERROR: trailing whitespace found on line 4

- As you can see, Puppet-lint found a number of problems with the manifest file. Correct the errors, save the file, and rerun Puppet-lint to check that all is well. If successful, you'll see no output:

    t@cookbook ~$ puppet-lint puppet-lint.pp
    t@cookbook ~$
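For reference, here is a version of puppet-lint.pp that should clear both problems reported above. The => arrows are aligned and no line has trailing whitespace. This is a sketch; depending on your puppet-lint version, other checks may still apply:

```puppet
# Arrows aligned under one another, no trailing whitespace.
package { 'puppet-lint':
  ensure   => 'installed',
  provider => 'gem',
}
```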

You can find out more about Puppet-lint at https://github.com/rodjek/puppet-lint.

Should you follow the Puppet style guide and, by extension, keep your code lint-clean? It's up to you, but here are a couple of things to think about:

- It makes sense to use some style conventions, especially when you're working collaboratively on code. Unless you and your colleagues can agree on standards for whitespace, tabs, quoting, alignment, and so on, your code will be messy and difficult to read or maintain.

- If you're choosing a set of style conventions to follow, the logical choice is the one issued by Puppet Labs and adopted by the community for use in public modules.

Having said that, it's possible to tell Puppet-lint to ignore certain checks if you've chosen not to adopt them in your codebase. For example, if you don't want Puppet-lint to warn you about code lines exceeding 80 characters, you can run Puppet-lint with the following option:

    t@cookbook ~$ puppet-lint --no-80chars-check puppet-lint.pp

Run puppet-lint --help to see the complete list of check configuration commands.
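You may only want to relax a check for a few specific lines rather than for the whole run. For that case, puppet-lint also understands control comments inside the manifest itself. This sketch assumes puppet-lint 1.0 or later, where control comments are supported and the line-length check is named 80chars. The resource is hypothetical:

```puppet
# Assumes puppet-lint >= 1.0: disable the 80chars check for this block only.
# lint:ignore:80chars
file { '/etc/motd':
  content => 'This deliberately long message line would otherwise trigger the 80chars check',
}
# lint:endignore
```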

- The Automatic syntax checking with Git hooks recipe in Chapter 2, Puppet Infrastructure

- The Testing your Puppet manifests with rspec-puppet recipe in Chapter 9, External Tools and the Puppet Ecosystem


Modules are self-contained bundles of Puppet code that include all the files necessary to implement a particular piece of functionality. Modules may contain flat files, templates, Puppet manifests, custom fact declarations, Augeas lenses, and custom Puppet types and providers.

Following are the steps to create an example module:

- We will use Puppet's module subcommand to create the directory structure for our new module:

    t@cookbook:~$ mkdir -p .puppet/modules
    t@cookbook:~$ cd .puppet/modules
    t@cookbook:~/.puppet/modules$ puppet module generate thomas-memcached
    We need to create a metadata.json file for this module. Please answer the following questions; if the question is not applicable to this module, feel free to leave it blank.
    Puppet uses Semantic Versioning (semver.org) to version modules.
    What version is this module? [0.1.0]
    -->
    Who wrote this module? [thomas]
    -->
    What license does this module code fall under? [Apache 2.0]
    -->
    How would you describe this module in a single sentence?
    --> A module to install memcached
    Where is this module's source code repository?
    -->
    Where can others go to learn more about this module?
    -->
    Where can others go to file issues about this module?
    -->
    ----------------------------------------
    {
      "name": "thomas-memcached",
      "version": "0.1.0",
      "author": "thomas",
      "summary": "A module to install memcached",
      "license": "Apache 2.0",
      "source": "",
      "issues_url": null,
      "project_page": null,
      "dependencies": [
        {
          "version_range": ">= 1.0.0",
          "name": "puppetlabs-stdlib"
        }
      ]
    }
    ----------------------------------------
    About to generate this metadata; continue? [n/Y]
    --> y
    Notice: Generating module at /home/thomas/.puppet/modules/thomas-memcached...
    Notice: Populating ERB templates...
    Finished; module generated in thomas-memcached.
    thomas-memcached/manifests
    thomas-memcached/manifests/init.pp
    thomas-memcached/spec
    thomas-memcached/spec/classes
    thomas-memcached/spec/classes/init_spec.rb
    thomas-memcached/spec/spec_helper.rb
    thomas-memcached/README.md
    thomas-memcached/metadata.json
    thomas-memcached/Rakefile
    thomas-memcached/tests
    thomas-memcached/tests/init.pp

This command creates the module directory and creates some empty files as starting points. To use the module, we'll create a symlink to the module name (memcached).

    t@cookbook:~/.puppet/modules$ ln -s thomas-memcached memcached

- Now, edit memcached/manifests/init.pp and change the class definition at the end of the file to the following. Note that puppet module generate created many lines of comments; in a production module, you would want to edit those default comments:

    class memcached {
      package { 'memcached':
        ensure => installed,
      }
      file { '/etc/memcached.conf':
        source  => 'puppet:///modules/memcached/memcached.conf',
        owner   => 'root',
        group   => 'root',
        mode    => '0644',
        require => Package['memcached'],
      }
      service { 'memcached':
        ensure  => running,
        enable  => true,
        require => [Package['memcached'], File['/etc/memcached.conf']],
      }
    }

- Create the modules/thomas-memcached/files directory and then create a file named memcached.conf with the following contents:

    -m 64
    -p 11211
    -u nobody
    -l 127.0.0.1

- Change your site.pp file to the following:

    node default {
      include memcached
    }

- We would like this module to install memcached. We'll need to run Puppet with root privileges, and we'll use sudo for that. We'll also need Puppet to be able to find the module in our home directory; we can specify this on the command line when we run Puppet, as shown in the following code snippet:

    t@cookbook:~$ sudo puppet apply --modulepath=/home/thomas/.puppet/modules /home/thomas/.puppet/manifests/site.pp
    Notice: Compiled catalog for cookbook.example.com in environment production in 0.33 seconds
    Notice: /Stage[main]/Memcached/File[/etc/memcached.conf]/content: content changed '{md5}a977521922a151c959ac953712840803' to '{md5}9429eff3e3354c0be232a020bcf78f75'
    Notice: Finished catalog run in 0.11 seconds

- Check whether the new service is running:

    t@cookbook:~$ sudo service memcached status
    [ ok ] memcached is running.

All manifest files (those containing Puppet code) live in the manifests directory. In our example, the memcached class is defined in the manifests/init.pp file, which Puppet loads automatically (autoloads) when the class is included.
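The same autoloading convention extends to subclasses. A class named memcached::config would be looked up in manifests/config.pp inside the module. Below is a sketch of such a hypothetical subclass, which the main class could then pull in with include memcached::config:

```puppet
# File: memcached/manifests/config.pp (hypothetical subclass)
# The autoloader maps the class name memcached::config to this file.
class memcached::config {
  file { '/etc/memcached.conf':
    source => 'puppet:///modules/memcached/memcached.conf',
    owner  => 'root',
    group  => 'root',
    mode   => '0644',
  }
}
```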

Inside the memcached class, we refer to the memcached.conf file:

    file { '/etc/memcached.conf':
      source => 'puppet:///modules/memcached/memcached.conf',
    }

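The preceding source parameter tells Puppet to look for the file in the following location, where <modulepath> is the directory given by --modulepath (here, /home/thomas/.puppet/modules):

    <modulepath>/memcached/files/memcached.conf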

If you need to use a template as a part of the module, place it in the module's templates directory and refer to it as follows:
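Here is a minimal sketch of such a reference, assuming a hypothetical template file named memcached.conf.erb saved in the module's templates directory. The template function takes a path of the form modulename/filename, relative to that templates directory, and returns the rendered text. That is why it goes in content rather than source:

```puppet
file { '/etc/memcached.conf':
  # Renders <modulepath>/memcached/templates/memcached.conf.erb (hypothetical file)
  content => template('memcached/memcached.conf.erb'),
}
```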

Modules can also contain custom facts, custom functions, custom types, and providers.

For more information about these, refer to Chapter 9, External Tools and the Puppet Ecosystem.

You can download modules provided by other people and use them in your own manifests just like the modules you create. For more on this, see the Using public modules recipe in Chapter 7, Managing Applications.

- The Creating custom facts recipe in Chapter 9, External Tools and the Puppet Ecosystem

- The Using public modules recipe in Chapter 7, Managing Applications

- The Creating your own resource types recipe in Chapter 9, External Tools and the Puppet Ecosystem

- The Creating your own providers recipe in Chapter 9, External Tools and the Puppet Ecosystem


When we generated the module, we named it thomas-memcached. The name before the hyphen is your username, or your username on the Puppet Forge (an online repository of modules). Since we want Puppet to be able to find the module by the name memcached, we create a symbolic link named memcached that points to thomas-memcached.


Choosing appropriate and informative names for your modules and classes will be a big help when it comes to maintaining your code. This is even truer if other people need to read and work on your manifests.

Here are some tips on how to name things in your manifests:

- Name modules after the software or service they manage, for example, apache or haproxy.

- Name classes within modules (subclasses) after the function or service they provide to the module, for example, apache::vhosts or rails::dependencies.

- If a class within a module disables the service provided by that module, name it disabled. For example, a class that disables Apache should be named apache::disabled.

- Create a roles and profiles hierarchy of modules. Each node should have a single role consisting of one or more profiles. Each profile module should configure a single service.

- The module that manages users should be named user.

- Within the user module, declare your virtual users within the class user::virtual (for more on virtual users and other resources, see the Using virtual resources recipe in Chapter 5, Users and Virtual Resources).

- Within the user module, subclasses for particular groups of users should be named after the group, for example, user::sysadmins or user::contractors.

- When using Puppet to deploy the config files for different services, name the file after the service, but with a suffix indicating what kind of file it is, for example:

  - Apache init script: apache.init
  - Logrotate config snippet for Rails: rails.logrotate
  - Nginx vhost file for mywizzoapp: mywizzoapp.vhost.nginx
  - MySQL config for standalone server: standalone.mysql


- If you need to deploy a different version of a file depending on the operating system release, for example, you can use a naming convention like the following:

    memcached.lucid.conf
    memcached.precise.conf

- You can have Puppet automatically select the appropriate version as follows; the lsbdistcodename fact holds the release codename, such as lucid or precise:

    source => "puppet:///modules/memcached/memcached.${::lsbdistcodename}.conf",

- If you need to manage, for example, different Ruby versions, name the class after the version it is responsible for, for example, ruby192 or ruby186.
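To illustrate the roles and profiles convention from the list above, here is a sketch with hypothetical class and node names. A profile wraps the memcached module, a role groups profiles, and the node includes exactly one role:

```puppet
# Profile: configures a single service (hypothetical name).
class profile::memcached {
  include memcached
}

# Role: the profiles that together describe what a node is for.
class role::cacheserver {
  include profile::memcached
}

# Each node gets exactly one role.
node 'cache01.example.com' {
  include role::cacheserver
}
```

In a real codebase, each class would normally live in its own file inside profile and role modules, following the autoloader layout described in the Using modules recipe.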

The Puppet community maintains a set of best practice guidelines for your Puppet infrastructure, which include some hints on naming conventions:

http://docs.puppetlabs.com/guides/best_practices.html

Some people prefer to include multiple classes on a node by using a comma-separated list, rather than separate include statements, for example:

    node 'server014' inherits 'server' {
      include mail::server, repo::gem, repo::apt, zabbix
    }

This is a matter of style, but I prefer to use separate include statements, one per line, because it makes it easier to copy and move class inclusions between nodes without having to tidy up the commas and indentation every time.
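For comparison, here is the same node in the one-include-per-line style. Node inheritance is left out to keep the sketch focused on the include statements:

```puppet
node 'server014' {
  include mail::server
  include repo::gem
  include repo::apt
  include zabbix
}
```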

I mentioned inheritance in a couple of the preceding examples; if you're not sure what this is, don't worry, I'll explain this in detail in the next chapter.

apache or haproxy.

apache::vhosts or rails::dependencies.

disabled. For example, a class that disables Apache should be named apache::disabled.

user.

user::virtual (for more on virtual users and other resources, see the Using virtual resources recipe in

- Chapter 5, Users and Virtual Resources).

- Within the user module, subclasses for particular groups of users should be named after the group, for example,

user::sysadminsoruser::contractors. - When using Puppet to deploy the config files for different services, name the file after the service, but with a suffix indicating what kind of file it is, for example:

- Apache init script:

apache.init - Logrotate config snippet for Rails:

rails.logrotate - Nginx vhost file for mywizzoapp:

mywizzoapp.vhost.nginx - MySQL config for standalone server:

standalone.mysql

- Apache init script:

- If you need to deploy a different version of a file depending on the operating system release, for example, you can use a naming convention like the following:

memcached.lucid.conf memcached.precise.conf

- You can have Puppet automatically select the appropriate version as follows:

source = > "puppet:///modules/memcached /memcached.${::lsbdistrelease}.conf", - If you need to manage, for example, different Ruby versions, name the class after the version it is responsible for, for example,

ruby192orruby186.

Puppet community maintains a set of best practice guidelines for your Puppet infrastructure, which includes some hints on naming conventions:

http://docs.puppetlabs.com/guides/best_practices.html

Some people prefer to include multiple classes on a node by using a comma-separated list, rather than separate include statements, for example:

node 'server014' inherits 'server' {
  include mail::server, repo::gem, repo::apt, zabbix
}

This is a matter of style, but I prefer to use separate include statements, one on a line, because it makes it easier to copy and move around class inclusions between nodes without having to tidy up the commas and indentation every time.

I mentioned inheritance in a couple of the preceding examples; if you're not sure what this is, don't worry, I'll explain this in detail in the next chapter.


Templates are a powerful way of using Embedded Ruby (ERB) to help build config files dynamically. You can also use ERB syntax directly, without a separate template file, by calling the inline_template function. ERB allows you to use conditional logic, iterate over arrays, and include variables.

Here's an example of how to use inline_template:

Pass your Ruby code to inline_template within a Puppet manifest, as follows:
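A minimal sketch of such a manifest follows; the chkrootkit job, its command, and the log path are hypothetical. It relies on Ruby's String#sum, which returns a simple checksum of the string's bytes, so each hostname maps to a stable hour between 0 and 23.

```puppet
# Hypothetical nightly job; the hour is derived from the node's hostname
cron { 'chkrootkit':
  command => '/usr/sbin/chkrootkit > /var/log/chkrootkit.log 2>&1',
  # String#sum returns a checksum of the hostname's bytes; % 24 maps it to 0-23
  hour    => inline_template('<%= @hostname.sum % 24 %>'),
  minute  => '00',
}
```

Because the checksum depends only on the hostname, a given node always gets the same hour, while different nodes tend to be spread across the day.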


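The string passed to inline_template is evaluated as if it were an ERB template. That is, anything inside the <%= and %> delimiters is executed as Ruby code and its result is inserted into the output; code inside <% and %> is also executed, but its result is not inserted; the rest is treated as a literal string.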

In this example, we use inline_template to compute a different hour for this cron resource (a scheduled job) for each machine, so that the same job does not run at the same time on all machines. For more on this technique, see the Distributing cron jobs efficiently recipe in Chapter 6, Managing Resources and Files.
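To illustrate the two kinds of ERB tags, here is a small sketch in which <% %> carries the conditional logic and <%= %> inserts a value. The $workers variable and the notify title are hypothetical.

```puppet
$workers = 4   # hypothetical value

# <% ... %> runs Ruby logic only; <%= ... %> emits the value into the string.
# With $workers = 4, the message becomes 'large pool of 4 workers'.
notify { 'erb-tags-demo':
  message => inline_template('<% if @workers.to_i > 2 %>large<% else %>small<% end %> pool of <%= @workers %> workers'),
}
```

Calling to_i keeps the comparison numeric whether the variable arrives in Ruby as a string or as an integer.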

In ERB code, whether inside a template file or an inline_template string, you can access your Puppet variables directly by name using an @ prefix, if they are in the current scope or the top scope (facts):
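A short sketch of both cases, assuming a hypothetical $service_name variable in the current scope alongside the hostname fact from top scope:

```puppet
# A variable in the current scope (hypothetical)
$service_name = 'myapp'

notify { 'erb-vars-demo':
  # @service_name comes from this scope; @hostname is a fact from top scope
  message => inline_template('<%= @service_name %> runs on <%= @hostname %>'),
}
```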

To reference variables in another scope, use scope.lookupvar, as follows:
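A minimal sketch, assuming a hypothetical myapp::params class that defines a $port variable. The class is included first so that its variable exists when the template is evaluated.

```puppet
# Hypothetical class that defines a variable in its own scope
class myapp::params {
  $port = '8080'
}

include myapp::params

notify { 'lookupvar-demo':
  # Look up the variable by its fully qualified name
  message => inline_template("myapp listens on <%= scope.lookupvar('myapp::params::port') %>"),
}
```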

You should use inline templates sparingly. If you really need to use some complicated logic in your manifest, consider using a custom function instead (see the Creating custom functions recipe in Chapter 9, External Tools and the Puppet Ecosystem).

- The Using ERB templates recipe in Chapter 4, Working with Files and Packages

- The Using array iteration in templates recipe in Chapter 4, Working with Files and Packages


Arrays are a powerful feature in Puppet; wherever you want to perform the same operation on a list of things, an array may be able to help. You can create an array just by putting its content in square brackets:
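For instance, a sketch using a hypothetical $lunch array; elements can also be read back by their zero-based index:

```puppet
# An array literal: three strings in square brackets
$lunch = ['franks', 'beans', 'mustard']

# Elements are indexed from zero, so this notice reads "Lunch starts with franks"
notify { "Lunch starts with ${lunch[0]}": }
```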


$packages = [ 'ruby1.8-dev',
              'ruby1.8',
              'ri1.8',
              'rdoc1.8',
              'irb1.8',
              'libreadline-ruby1.8',
              'libruby1.8',
              'libopenssl-ruby' ]

package { $packages: ensure => installed }


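When an array is used as the title of a resource, Puppet creates one resource for each element of the array. In the example, a package resource is created for each of the packages in the $packages array, with the same parameters (ensure => installed). This is a very compact way to instantiate many similar resources.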
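Put another way, the single array-titled declaration behaves roughly as though each element had been declared separately. A sketch of the expanded form for the first three packages:

```puppet
# Roughly what package { $packages: ensure => installed } expands to
package { 'ruby1.8-dev': ensure => installed }
package { 'ruby1.8':     ensure => installed }
package { 'ri1.8':       ensure => installed }
# ...and so on for the remaining elements of $packages
```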

Although arrays will take you a long way with Puppet, it's also useful to know about an even more flexible data structure: the hash.

A hash is like an array, but each of the elements can be stored and looked up by name (referred to as the key), for example (hash.pp):
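A sketch of what hash.pp might contain; the interface name, IP, and MAC address are hypothetical values:

```puppet
# A hash: each value is stored under a named key
$interface = {
  'name' => 'eth0',
  'ip'   => '192.168.0.1',
  'mac'  => '52:54:00:4a:60:07'
}

# Look up values by key inside an interpolated string
notify { "(${interface['ip']}) at ${interface['mac']} on ${interface['name']}": }
```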

When we run Puppet on this, we see the following notify in the output:

You can declare literal arrays using square brackets, as follows:
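A minimal sketch, assuming a hypothetical defined type named lunchprint that prints one notice per title:

```puppet
# Hypothetical defined type: one notify per instance
define lunchprint() {
  notify { "Lunch included ${name}": }
}

# A literal array used as a title declares one lunchprint per element
$lunch = ['egg', 'beans', 'chips']
lunchprint { $lunch: }
```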

Now, when we run Puppet on the preceding code, we see the following notice messages in the output:

However, Puppet can also create arrays for you from strings, using the split function, as follows:
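A sketch with a hypothetical space-separated $menu string; the second argument to split is treated as a regular expression, here a single space:

```puppet
# A space-separated string (hypothetical menu)
$menu = 'egg beans chips'

# split() returns ['egg', 'beans', 'chips']
$items = split($menu, ' ')

# One notify resource per element; each message defaults to its title
notify { $items: }
```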

Running puppet apply against this new manifest, we see the same messages in the output:


Puppet's if statement allows you to change the manifest behavior based on the value of a variable or an expression. With it, you can apply different resources or parameter values depending on certain facts about the node, for example, the operating system or the memory size.
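A minimal sketch of an if statement that tests the operatingsystem fact; the notify message is hypothetical:

```puppet
# Declare this resource only on Ubuntu nodes
if $::operatingsystem == 'Ubuntu' {
  notify { 'This node runs Ubuntu': }
}
```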

Optionally, you can add an else branch, which will be executed if the expression evaluates to false.
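For example, a sketch that chooses between two notices based on the timezone fact (the messages are illustrative):

```puppet
# The else branch runs whenever the expression is false
if $::timezone == 'UTC' {
  notify { 'Universal Time Coordinated': }
} else {
  notify { "${::timezone} is not UTC": }
}
```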


Puppet treats whatever follows the if keyword as an expression and evaluates it. If the expression evaluates to true, Puppet will execute the code within the curly braces.


Another kind of expression you can test in if statements and other conditionals is the regular expression. A regular expression is a powerful way to compare strings using pattern matching.
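A sketch of a regular expression match against the architecture fact, using the =~ operator with the pattern between forward slashes:

```puppet
# Matches any architecture name containing "64" (amd64, x86_64, ...)
if $::architecture =~ /64/ {
  notify { '64-bit OS installed': }
} else {
  notify { 'Upgrade to a 64-bit operating system': }
}
```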

Puppet treats the text supplied between the forward slashes as a regular expression, specifying the text to be matched. If the match succeeds, the if expression is true, so the code between the first set of curly braces is executed. In this example, we used a regular expression because different distributions have different names for 64-bit architectures; some use amd64, while others use x86_64. The only thing we can count on is the presence of the number 64 within the fact.

Some facts that have version numbers in them are treated as strings by Puppet, for instance, $::facterversion. On my test system, this is 2.0.1, but when I try to compare that with 2, Puppet fails to make the comparison, because it cannot compare a string with a number.
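One way around this, sketched below, is to match the version string with a regular expression rather than comparing it numerically. The pattern assumes you care only about Facter 2.x and is purely illustrative.

```puppet
# $::facterversion is a string such as '2.0.1'; match the major version instead
if $::facterversion =~ /^2\./ {
  notify { 'Facter 2.x detected': }
}
```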

If you want to do something when the text does not match, use !~ rather than =~:
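A short sketch using the kernel fact; the notify message is hypothetical:

```puppet
# Runs only when the kernel fact does NOT match "Linux"
if $::kernel !~ /Linux/ {
  notify { 'This node is not running Linux': }
}
```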

You can not only match text using a regular expression, but also capture the matched text and store it in a variable:
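A minimal sketch, assuming a hypothetical $machine value; the two bracketed groups capture the letters and the digits of the first hostname label:

```puppet
$machine = 'web03.example.com'   # hypothetical value

# Two capture groups: letters (the role) and digits (the instance number)
if $machine =~ /^([a-z]+)(\d+)\./ {
  # $0 is the whole match, $1 and $2 are the groups;
  # the notice reads: Matched 'web03.': role web, number 03
  notify { "Matched '${0}': role ${1}, number ${2}": }
}
```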

The preceding code produces this output:

The variable $0 stores the whole matched text (assuming the overall match succeeded). If you put brackets around any part of the regular expression, it creates a group, and any matched groups will also be stored in variables. The first matched group will be $1, the second $2, and so on, as shown in the preceding example.
