You have probably heard the terms Machine Learning (ML), Artificial Intelligence (AI), and especially Deep Learning (DL) frequently in recent years. That may be the reason you decided to invest in this book and learn more. Given some new, exciting developments in the area of neural networks, DL has become a hot area in ML. Today, it is difficult to imagine a world without quick text translation between languages or fast song identification. These, and many other things, are just the tip of the iceberg when it comes to the potential of DL to change your world. When you finish this book, we hope you will get on board and ride along with amazing new applications and projects based on DL.

This chapter briefly introduces the field of ML and how it is used to solve common problems. Throughout this chapter, you will be driven to understand...

Diving into the ML ecosystem

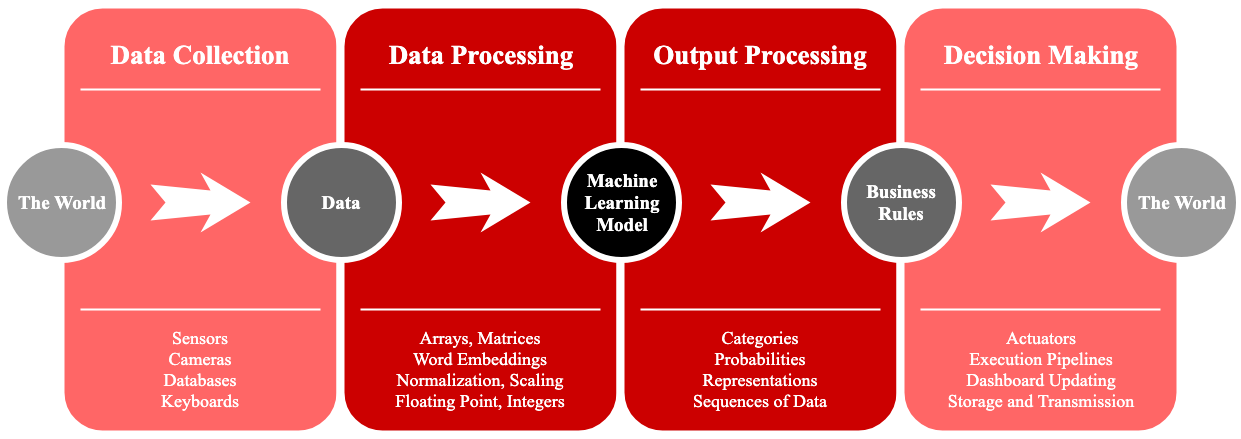

From the typical ML application process depicted in Figure 1.1, you can see that ML has a broad range of applications. However, ML algorithms are only a small part of a bigger ecosystem with many moving parts, and yet ML is transforming lives around the world today.

Deployed ML applications usually start with a process of data collection. This can use sensors of different types, such as cameras, lasers, or spectroscopes, or other direct sources of data, including local and remote databases, big or small. In the simplest cases, input can be gathered through a computer keyboard or smartphone screen taps. At this stage, the collected or sensed data is considered raw data.

Raw data is usually preprocessed before it is presented to an ML model; it is rarely the actual input to ML algorithms...
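Preprocessing can take many forms, and the text does not yet say which ones it has in mind. One common illustration is standardizing numerical features to zero mean and unit variance, using statistics computed on the training data only. This is our assumption, not the book's prescribed pipeline. The minimal NumPy sketch below uses a hypothetical helper name, `standardize`:

```python
import numpy as np

def standardize(X_train, X_test):
    """Hypothetical helper: z-score features using training-set statistics only."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    mean = X_train.mean(axis=0)   # per-feature mean
    std = X_train.std(axis=0)     # per-feature standard deviation
    std[std == 0] = 1.0           # avoid division by zero for constant features
    return (X_train - mean) / std, (X_test - mean) / std

# Toy raw data: each row is (height in cm, weight in kg)
raw_train = np.array([[180.0, 75.0], [160.0, 60.0], [170.0, 65.0]])
raw_test = np.array([[175.0, 70.0]])
Z_train, Z_test = standardize(raw_train, raw_test)
print(Z_train.mean(axis=0), Z_train.std(axis=0))
```

The statistics come from the training split alone, so no information about the test data leaks into the model.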

Training ML algorithms from data

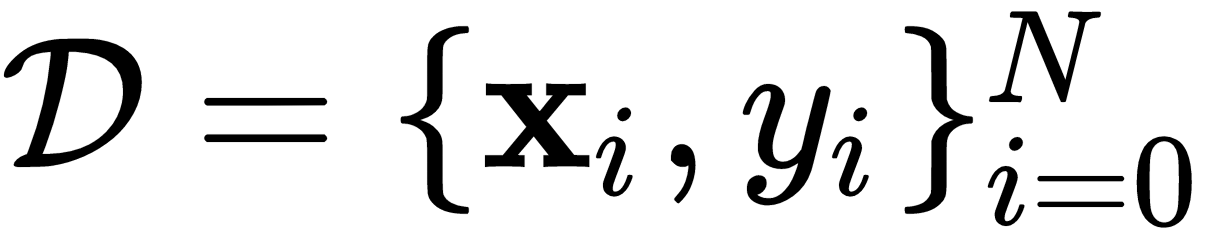

A typical preprocessed dataset is formally defined as follows:

$$\mathcal{D} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{N}$$

where y is the desired output corresponding to the input vector x, and N is the number of data points. The motivation of ML, then, is to use the data in one of two broad ways. One is to find linear and non-linear transformations over x using highly complex tensor (vector) multiplications and additions. The other is simply to find ways to measure similarities or distances among data points. Either way, the ultimate purpose is to predict y given x.
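The "distances among data points" idea can be made concrete with a minimal sketch. It assumes the dataset is stored as an N-by-d NumPy array `X_train` of input vectors with a matching array `y_train` of desired outputs. It predicts y for a new x by copying the output of the closest training point, using 1-nearest neighbor with Euclidean distance. That is one illustrative choice among many, and the function name is hypothetical:

```python
import numpy as np

def nearest_neighbor_predict(X_train, y_train, x):
    """Hypothetical helper: return the output of the training point closest to x."""
    dists = np.linalg.norm(X_train - x, axis=1)  # Euclidean distance to every x_i
    return y_train[np.argmin(dists)]

X_train = np.array([[0.0, 0.0], [5.0, 5.0]])  # input vectors x_i
y_train = np.array([0, 1])                    # their desired outputs y_i
print(nearest_neighbor_predict(X_train, y_train, np.array([1.0, 0.5])))  # 0
print(nearest_neighbor_predict(X_train, y_train, np.array([4.0, 6.0])))  # 1
```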

A common way of thinking about this is that we want to approximate some unknown function over x:

$$f(\mathbf{x}) = \mathbf{w}^{T}\mathbf{x} + b \approx y$$

where w is an unknown vector that, along with b, transforms x. This formulation is very basic and linear; it is simply an illustration of what a simple learning model might look like. In this simple case, ML algorithms revolve around finding the w and b that yield the closest (if not perfect) approximation to y, the desired output. Very simple algorithms such as the perceptron (Rosenblatt, F. 1958) try different...
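The search for w and b described above can be illustrated with the classic perceptron learning rule. This minimal sketch assumes labels in {-1, +1} and a fixed cap on the number of passes over the data. The function names and the `max_epochs` parameter are our own, not from the text:

```python
import numpy as np

def perceptron_train(X, y, max_epochs=100):
    """Classic perceptron rule; y must contain only -1 and +1."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            # Misclassified when the label and w.x + b disagree in sign (or w.x + b == 0)
            if yi * (np.dot(w, xi) + b) <= 0:
                w += yi * xi   # move w toward x_i if yi = +1, away from it if yi = -1
                b += yi
                mistakes += 1
        if mistakes == 0:      # every point is strictly on the correct side
            break
    return w, b

def perceptron_predict(X, w, b):
    return np.where(X @ w + b >= 0, 1, -1)

# Linearly separable toy data
X = np.array([[2.0, 2.0], [3.0, 1.0], [-1.0, -2.0], [-2.0, -1.0]])
y = np.array([1, 1, -1, -1])
w, b = perceptron_train(X, y)
print(w, b, perceptron_predict(X, w, b))
```

Each update only changes w and b in response to a mistake, so on linearly separable data like this the loop ends once a separating line is found.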

Introducing deep learning

A more detailed discussion of learning algorithms appears in Chapter 4, Learning from Data. In this section, we will deal with the fundamental concept of a neural network and the developments that led to deep learning.

The model of a neuron

A neuron in the human brain receives input from other neurons through connections (synapses) that deliver stimuli in the form of electric charges. Depending on how strongly these inputs stimulate it, the cell body, which contains the nucleus, may trigger the neuron's activation. The output signal then travels along the axon and is propagated to other neurons, thus forming a network of neurons.

The analogy of the human neuron is depicted in Figure 1.3, where the input is represented by the vector x, the activation of the neuron is given by some function z(.), and the output is y. The parameters of the neuron are w and b:

$$y = z(\mathbf{w}^{T}\mathbf{x} + b)$$

The trainable parameters of a neuron are w and b, and they are unknown...
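The neuron model can be sketched in a few lines, assuming the common form y = z(wᵀx + b). The text leaves z(.) generic, so the sigmoid default and the step function used in the second call are illustrative choices, and the helper names are hypothetical:

```python
import numpy as np

def sigmoid(a):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-a))

def step(a):
    """Threshold activation: outputs 1.0 when a >= 0, otherwise 0.0."""
    return np.where(a >= 0, 1.0, 0.0)

def neuron(x, w, b, z=sigmoid):
    """Weighted sum of the inputs plus the bias, passed through activation z(.)."""
    return z(np.dot(w, x) + b)

x = np.array([1.0, 2.0])
print(neuron(x, w=np.array([0.0, 0.0]), b=0.0))            # 0.5
print(neuron(x, w=np.array([1.0, -1.0]), b=0.5, z=step))  # 0.0
```

Here w and b are fixed by hand. Training, as discussed in the text, is the process of finding values for them from data.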

Why is deep learning important today?

Today, we enjoy the benefits of algorithms and strategies that we did not have 20 or 30 years ago, which enable us to have amazing applications that are changing lives. Allow me to summarize some of the great and important things about deep learning today:

- Training in mini-batches: This strategy allows us to use very large datasets and train a deep learning model little by little. In the past, we would have to load the entire dataset into memory, making training computationally impossible for some large datasets. Today, training may take a little longer, but at least we can actually perform it in finite time.

- Novel activation functions: Rectified linear units (ReLUs), for example, are a relatively new kind of activation that solved many of the problems of large-scale training with backpropagation strategies. These new activations enable training algorithms to converge on deep architectures when, in the past, we would get stuck on non-converging...
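The two ideas above can be combined in a small NumPy sketch. It trains a one-hidden-layer network with ReLU activations by gradient descent on mini-batches, so only `batch_size` examples are processed per update. The architecture, the mean squared error loss, the learning rate, and the toy target y = |x| are all our illustrative assumptions, not the book's setup. Every helper name is hypothetical:

```python
import numpy as np

def relu(a):
    """ReLU activation: max(0, a), element-wise."""
    return np.maximum(0.0, a)

def relu_grad(a):
    """Derivative of ReLU (taken as 0 at a == 0)."""
    return (a > 0).astype(float)

def iterate_minibatches(X, y, batch_size, rng):
    """Shuffle once per epoch, then yield consecutive mini-batches."""
    idx = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        batch = idx[start:start + batch_size]
        yield X[batch], y[batch]

def predict(params, X):
    W1, b1, W2, b2 = params
    return (relu(X @ W1 + b1) @ W2 + b2).ravel()

def train(X, y, hidden=16, batch_size=32, epochs=100, lr=0.05, seed=0):
    """Mini-batch gradient descent on the mean squared error."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    W1 = rng.normal(0.0, np.sqrt(2.0 / d), size=(d, hidden))  # He-style init
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, np.sqrt(2.0 / hidden), size=(hidden, 1))
    b2 = np.zeros(1)
    for _ in range(epochs):
        for Xb, yb in iterate_minibatches(X, y, batch_size, rng):
            m = len(Xb)
            A1 = Xb @ W1 + b1                     # hidden pre-activations
            H = relu(A1)                          # hidden activations
            err = (H @ W2 + b2) - yb.reshape(-1, 1)
            g_out = 2.0 * err / m                 # d(MSE)/d(output) for this batch
            gW2 = H.T @ g_out
            gb2 = g_out.sum(axis=0)
            g_A1 = (g_out @ W2.T) * relu_grad(A1) # backpropagate through ReLU
            gW1 = Xb.T @ g_A1
            gb1 = g_A1.sum(axis=0)
            W1 -= lr * gW1                        # update only after all gradients exist
            b1 -= lr * gb1
            W2 -= lr * gW2
            b2 -= lr * gb2
    return W1, b1, W2, b2

rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(256, 1))
y = np.abs(X[:, 0])                               # non-linear toy target
params = train(X, y)
mse = np.mean((predict(params, X) - y) ** 2)
print(f"training MSE: {mse:.4f}")
```

Memory use per update depends on `batch_size`, not on the dataset size. In practice, this is what lets large datasets be streamed through a model piece by piece.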

Summary

This introductory chapter presented an overview of ML. It introduced the motivation behind ML and the terminology that is commonly used in the field. It also introduced deep learning and how it fits in the realm of artificial intelligence. At this point, you should feel confident that you know enough about what a neural network is to be curious about how big it can be. You should also feel intrigued by the area of deep learning and all the new things that are coming out every week.

At this point, you must be eager to begin your deep learning coding journey, so the next logical step is to go to Chapter 2, Setup and Introduction to Deep Learning Frameworks. In that chapter, you will get ready for action by setting up your system and making sure you have access to the resources you will need to be a successful deep learning practitioner. But before you go there, please try to quiz yourself with the following questions.

Questions and answers

- Can a perceptron and/or a neural network solve the problem of classifying data that is linearly separable?

Yes, both can.

- Can a perceptron and/or a neural network solve the problem of classifying data that is non-separable?

Yes, both can. However, the perceptron will go on forever unless we specify a stopping condition such as a maximum number of iterations (updates), or stopping if the number of misclassified points does not decrease after a number of iterations.
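The two stopping conditions mentioned in this answer can be sketched as follows, assuming labels in {-1, +1}. The function name and the `max_epochs` and `patience` parameters are hypothetical. On the XOR pattern, which is not linearly separable, a pass never ends with zero mistakes, so one of the two stopping conditions ends the loop:

```python
import numpy as np

def perceptron_with_stopping(X, y, max_epochs=1000, patience=5):
    """Perceptron that stops on zero mistakes, after max_epochs passes, or after
    `patience` consecutive passes without fewer mistakes than the best so far."""
    w = np.zeros(X.shape[1])
    b = 0.0
    best = len(X) + 1   # fewest mistakes made in any pass so far
    stalled = 0         # consecutive passes without improving on `best`
    for epoch in range(1, max_epochs + 1):
        mistakes = 0    # number of updates (mistakes) made during this pass
        for xi, yi in zip(X, y):
            if yi * (np.dot(w, xi) + b) <= 0:
                w += yi * xi
                b += yi
                mistakes += 1
        if mistakes == 0:
            break
        if mistakes < best:
            best, stalled = mistakes, 0
        else:
            stalled += 1
            if stalled >= patience:
                break
    return w, b, epoch, mistakes

# XOR: no straight line separates the +1 points from the -1 points
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([-1, 1, 1, -1])
w, b, epochs_run, last_mistakes = perceptron_with_stopping(X, y)
print(epochs_run, last_mistakes)
```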

- What are the changes in the ML field that have enabled us to have deep learning today?

(A) backpropagation algorithms, batch training, ReLUs, and so on;

(B) computing power, GPUs, cloud, and so on.

- Why is generalization a good thing?

Because deep neural networks are most useful when they perform as expected on data they have not seen before, that is, data on which they have not been trained.
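One common way to check generalization is to hold out part of the data during training and measure performance only on that unseen part. This minimal sketch assumes a toy dataset of two well-separated clusters and a 1-nearest-neighbor classifier, chosen purely for brevity. The helper names and the 25% test fraction are our assumptions:

```python
import numpy as np

def train_test_split(X, y, test_fraction=0.25, seed=0):
    """Hypothetical helper: randomly hold out a fraction of the data for testing."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(len(X) * test_fraction)
    test, train = idx[:n_test], idx[n_test:]
    return X[train], X[test], y[train], y[test]

def predict_nearest(X_train, y_train, X_new):
    """Label each new point with the label of its closest training point."""
    dists = np.linalg.norm(X_new[:, None, :] - X_train[None, :, :], axis=2)
    return y_train[np.argmin(dists, axis=1)]

rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 1.0, size=(50, 2)),    # class 0 around (0, 0)
               rng.normal(10.0, 1.0, size=(50, 2))])  # class 1 around (10, 10)
y = np.array([0] * 50 + [1] * 50)
X_tr, X_te, y_tr, y_te = train_test_split(X, y)
accuracy = np.mean(predict_nearest(X_tr, y_tr, X_te) == y_te)
print(f"accuracy on unseen data: {accuracy:.2f}")
```

Only `X_tr` and `y_tr` are used to make predictions. The reported accuracy therefore reflects performance on data the model never saw.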

References

- Hecht-Nielsen, R. (1992). Theory of the backpropagation neural network. In Neural networks for perception (pp. 65-93). Academic Press.

- Kane, F. (2017). Hands-On Data Science and Python Machine Learning. Packt Publishing Ltd.

- LeCun, Y., Bottou, L., Orr, G., and Müller, K. (1998). Efficient backprop. In Neural networks: Tricks of the trade (Orr, G. and Müller, K., eds.). Lecture Notes in Computer Science, 1524(98), 111.

- Ojeda, T., Murphy, S. P., Bengfort, B., and Dasgupta, A. (2014). Practical Data Science Cookbook. Packt Publishing Ltd.

- Rosenblatt, F. (1958). The perceptron: a probabilistic model for information storage and organization in the brain. Psychological Review, 65(6), 386.

- Rumelhart, D. E., Hinton, G. E., and Williams, R. J. (1985). Learning internal representations by error propagation (No. ICS-8506). California Univ San Diego La Jolla Inst for Cognitive Science.
