In this chapter, we will cover the following recipes:

Ceph – the beginning of a new era

RAID – the end of an era

Ceph – the architectural overview

Planning the Ceph deployment

Setting up a virtual infrastructure

Installing and configuring Ceph

Scaling up your Ceph cluster

Using Ceph clusters with a hands-on approach

Ceph is currently the hottest Software Defined Storage (SDS) technology and is shaking up the entire storage industry. It is an open source project that provides unified software defined solutions for Block, File, and Object storage. The core idea of Ceph is to provide a distributed storage system that is massively scalable and high performing with no single point of failure. From the ground up, it has been designed to be highly scalable (up to the exabyte level and beyond) while running on general-purpose commodity hardware.

Ceph is acquiring most of the traction in the storage industry due to its open, scalable, and reliable nature. This is the era of cloud computing and software defined infrastructure, where we need a storage backend that is purely software defined, and more importantly, cloud ready. Ceph fits in here very well, regardless of whether you are running a public, private, or hybrid cloud.

Today's software systems are very smart and make the best use of commodity hardware to run gigantic scale infrastructure. Ceph is one of them; it intelligently uses commodity hardware to provide enterprise-grade robust and highly reliable storage systems.

Ceph has been raised and nourished with an architectural philosophy that includes the following:

Every component must scale linearly

There should not be any single point of failure

The solution must be software-based, open source, and adaptable

Ceph software should run on readily available commodity hardware

Every component must be self-managing and self-healing wherever possible

The foundation of Ceph lies in objects, which are its building blocks, and object storage such as Ceph is well suited to current and future needs for unstructured data storage. Object storage has advantages over traditional storage solutions; we can achieve platform and hardware independence using object storage. Ceph handles objects carefully and replicates them across the cluster to provide reliability; in Ceph, objects are not tied to a physical path, which makes them location independent. Such flexibility enables Ceph to scale linearly from the petabyte to the exabyte level.

Ceph provides great performance, enormous scalability, power, and flexibility to organizations. It helps them get rid of expensive proprietary storage silos. Ceph is indeed an enterprise class storage solution that runs on commodity hardware; it is a low-cost yet feature rich storage system. The Ceph universal storage system provides Block, File, and Object storage under one hood, enabling customers to use storage as they want.

Ceph is being developed and improved at a rapid pace. On July 3, 2012, Sage announced the first LTS release of Ceph with the code name Argonaut. Since then, we have seen seven new releases come up. Ceph releases are categorized as LTS (Long Term Support) releases and development releases, and every alternate Ceph release is an LTS release. For more information, please visit https://Ceph.com/category/releases/.

| Ceph release name | Ceph release version | Released On |
|---|---|---|
| Argonaut | V0.48 (LTS) | July 3, 2012 |
| Bobtail | V0.56 (LTS) | January 1, 2013 |
| Cuttlefish | V0.61 | May 7, 2013 |
| Dumpling | V0.67 (LTS) | August 14, 2013 |
| Emperor | V0.72 | November 9, 2013 |
| Firefly | V0.80 (LTS) | May 7, 2014 |
| Giant | V0.87.1 | Feb 26, 2015 |
| Hammer | V0.94 (LTS) | April 7, 2015 |
| Infernalis | V9.0.0 | May 5, 2015 |
| Jewel | V10.0.0 | Nov, 2015 |

Data storage requirements have grown explosively over the last few years. Research shows that data in large organizations is growing at a rate of 40 to 60 percent annually, and many companies are doubling their data footprint each year. IDC analysts estimated that worldwide, there were 54.4 exabytes of total digital data in the year 2000. By 2007, this reached 295 exabytes, and by 2020, it's expected to reach 44 zettabytes worldwide. Such data growth cannot be managed by traditional storage systems; we need a system like Ceph, which is distributed, scalable and most importantly, economically viable. Ceph has been designed especially to handle today's as well as the future's data storage needs.

SDS is what is needed to reduce TCO for your storage infrastructure. In addition to reduced storage cost, an SDS can offer flexibility, scalability, and reliability. Ceph is a true SDS solution; it runs on commodity hardware with no vendor lock-in and provides low cost per GB. Unlike traditional storage systems where hardware gets married to software, in SDS, you are free to choose commodity hardware from any manufacturer and are free to design a heterogeneous hardware solution for your own needs. Ceph's software-defined storage on top of this hardware provides all the intelligence you need and will take care of everything, providing all the enterprise storage features right from the software layer.

One of the drawbacks of a cloud infrastructure is the storage. Every cloud infrastructure needs a storage system that is reliable, low-cost, and scalable, and that integrates tightly with its other cloud components. There are many traditional storage solutions in the market that claim to be cloud ready, but today we need not only cloud readiness but a lot more beyond that. We need a storage system that is fully integrated with cloud systems and can provide lower TCO without any compromise on reliability and scalability. Cloud systems are software defined and are built on top of commodity hardware; similarly, they need a storage system that follows the same methodology, that is, being software defined on top of commodity hardware, and Ceph is the best choice available for cloud use cases.

Ceph has been rapidly evolving and bridging the gap of a true cloud storage backend. It is grabbing center stage with every major open source cloud platform, namely OpenStack, CloudStack, and OpenNebula. Moreover, Ceph has succeeded in building up beneficial partnerships with cloud vendors such as Red Hat, Canonical, Mirantis, SUSE, and many more. These companies are favoring Ceph big time and including it as an official storage backend for their cloud OpenStack distributions, thus making Ceph a red hot technology in cloud storage space.

The OpenStack project is one of the finest examples of open source software powering public and private clouds. It has proven itself as an end-to-end open source cloud solution. OpenStack is a collection of programs, including Cinder, Glance, and Swift, which provide storage capabilities to OpenStack. These OpenStack components require a reliable, scalable, all-in-one storage backend like Ceph. For this reason, the OpenStack and Ceph communities have been working together for many years to develop a fully compatible Ceph storage backend for OpenStack.

Cloud infrastructure based on Ceph provides much needed flexibility to service providers to build Storage-as-a-Service and Infrastructure-as-a-Service solutions, which they cannot achieve from other traditional enterprise storage solutions as they are not designed to fulfill cloud needs. By using Ceph, service providers can offer low-cost, reliable cloud storage to their customers.

The definition of unified storage has changed lately. A few years ago, the term "unified storage" referred to providing file and block storage from a single system. Now, because of recent technological advancements, such as cloud computing, big data, and Internet of Things, a new kind of storage has been evolving, that is, object storage. Thus, all the storage systems that do not support object storage are not really unified storage solutions. A true unified storage is like Ceph; it supports blocks, files, and object storage from a single system.

In Ceph, the term "unified storage" is more meaningful than what existing storage vendors claim to provide. Ceph has been designed from the ground up to be future ready, and it's constructed such that it can handle enormous amounts of data. When we call Ceph "future ready", we mean to focus on its object storage capabilities, which are a better fit for today's mix of unstructured data than blocks or files. Everything in Ceph relies on intelligent objects, whether it's block storage or file storage. Rather than managing blocks and files underneath, Ceph manages objects and supports block-based and file-based storage on top of them. Objects provide enormous scaling with increased performance by eliminating metadata operations. Ceph uses an algorithm to dynamically compute where the object should be stored and retrieved from.

The traditional storage architecture of SAN and NAS systems is very limited. Basically, they follow the tradition of controller high availability, that is, if one storage controller fails, data is served from the second controller. But what if the second controller fails at the same time, or even worse, the entire disk shelf fails? In most cases, you will end up losing your data. This kind of storage architecture, which cannot sustain multiple failures, is definitely what we do not want today. Another drawback of traditional storage systems is their data storage and access mechanism. They maintain a central lookup table to keep track of metadata, which means that every time a client sends a request for a read or write operation, the storage system first performs a lookup in the huge metadata table, and only after receiving the real data location does it perform the client operation. For a smaller storage system, you might not notice performance hits, but in a large storage cluster you would definitely be bound by performance limits with this approach. This would even restrict your scalability.

Ceph does not follow such traditional storage architecture; in fact, the architecture has been completely reinvented. Rather than storing and manipulating metadata, Ceph introduces a newer way: the CRUSH algorithm. CRUSH stands for Controlled Replication Under Scalable Hashing. Instead of performing a lookup in the metadata table for every client request, the CRUSH algorithm computes on demand where the data should be written to or read from. Because the location is computed, there is no longer any need to manage a centralized metadata table. Modern computers are amazingly fast and can perform a CRUSH lookup very quickly; moreover, this computing load, which is generally not too much, can be distributed across cluster nodes, leveraging the power of distributed storage. In addition to this, CRUSH has a unique property, which is infrastructure awareness. It understands the relationship between various components of your infrastructure and stores your data in a unique failure zone, such as a disk, node, rack, row, and datacenter room, among others. CRUSH stores all the copies of your data such that it is available even if a few components fail in a failure zone. It is due to CRUSH that Ceph can handle multiple component failures and provide reliability and durability.

The CRUSH algorithm makes Ceph self-managing and self-healing. In the event of a component failure in a failure zone, CRUSH senses which component has failed and determines the effect on the cluster. Without any administrative intervention, CRUSH self-manages and self-heals by performing a recovery operation for the data lost due to the failure. CRUSH regenerates the data from the replica copies that the cluster maintains. If you have configured the Ceph CRUSH map correctly, it makes sure that at least one copy of your data is always accessible. Using CRUSH, we can design a highly reliable storage infrastructure with no single point of failure. It makes Ceph a highly scalable and reliable storage system that is future ready.
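The idea of computing a location instead of looking it up can be illustrated with a short sketch. The following Python code is not the CRUSH algorithm; it uses rendezvous (highest-random-weight) hashing as a simplified stand-in, and the cluster map, host names, and OSD names are hypothetical. It only aims to show two properties discussed above: any client can derive an object's location from the object name and the cluster map alone, and replicas land on distinct hosts (failure zones).

```python
import hashlib

# Hypothetical cluster map: host (failure zone) -> OSDs on that host.
CLUSTER_MAP = {
    "ceph-node1": ["osd.0", "osd.1", "osd.2"],
    "ceph-node2": ["osd.3", "osd.4", "osd.5"],
    "ceph-node3": ["osd.6", "osd.7", "osd.8"],
}


def score(object_name: str, item: str) -> int:
    """Deterministic pseudo-random score for an (object, item) pair."""
    digest = hashlib.sha256(f"{object_name}/{item}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, cluster_map: dict, replicas: int = 3) -> list:
    """Pick one OSD on each of the `replicas` best-scoring hosts."""
    hosts = sorted(cluster_map, key=lambda h: score(object_name, h), reverse=True)
    chosen = []
    for host in hosts[:replicas]:
        osds = cluster_map[host]
        chosen.append(max(osds, key=lambda o: score(object_name, o)))
    return chosen


def without_osd(cluster_map: dict, failed: str) -> dict:
    """Return a copy of the map with one failed OSD removed."""
    return {h: [o for o in osds if o != failed] for h, osds in cluster_map.items()}


if __name__ == "__main__":
    before = place("my-object", CLUSTER_MAP)
    after = place("my-object", without_osd(CLUSTER_MAP, before[0]))
    print("placement before failure:", before)
    print("placement after failure: ", after)
```

In this simplified model, removing a failed OSD from the map changes only the replica that lived on it; the other replicas stay where they were, and no central table has to be updated.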

RAID technology has been the fundamental building block for storage systems for years. It has proven successful for almost every kind of data that has been generated in the last 3 decades. But all eras must come to an end, and this time, it's RAID's turn. These systems have started showing limitations and are incapable of delivering to future storage needs. In the course of the last few years, cloud infrastructures have gained a strong momentum and are imposing new requirements on storage and challenging traditional RAID systems. In this section, we will uncover the limitations imposed by RAID systems.

The most painful thing in RAID technology is its super-lengthy rebuild process. Disk manufacturers are packing more and more storage capacity into each disk and are producing very large disk drives at a fraction of the earlier price. We no longer talk about 450 GB, 600 GB, or even 1 TB disks, as larger capacity disks are available today. Newer enterprise disk specifications offer drives of 4 TB, 6 TB, and even 10 TB, and the capacities keep increasing year by year.

Think of an enterprise RAID-based storage system that is made up of numerous 4 or 6 TB disk drives. Unfortunately, when such a disk drive fails, RAID will take several hours and even up to days to repair a single failed disk. Meanwhile, if another drive fails from the same RAID group then it would become a chaotic situation. Repairing multiple large disk drives using RAID is a cumbersome process.
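A rough back-of-the-envelope calculation shows why rebuild times grow with disk size. The sketch below assumes a sustained rebuild rate of 50 MB/s; this is an illustrative figure and not a measurement, since real rates depend on the controller, the RAID level, and the client load during the rebuild.

```python
def rebuild_hours(disk_tb: float, rebuild_mb_per_s: float) -> float:
    """Hours needed to rewrite a whole disk at a constant rebuild rate."""
    disk_mb = disk_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return disk_mb / rebuild_mb_per_s / 3600


if __name__ == "__main__":
    for size_tb in (1, 4, 6, 10):
        hours = rebuild_hours(size_tb, rebuild_mb_per_s=50)  # assumed rate
        print(f"{size_tb:>2} TB disk: about {hours:.1f} hours")
```

Under that assumption, a single 4 TB disk already needs roughly 22 hours, and the time scales linearly with capacity because one replacement disk has to absorb all of the rebuilt data.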

The RAID system requires a few disks as hot spare disks. These are just free disks that will be used only when a disk fails, else they will not be used for data storage. This adds extra cost to the system and increases TCO. Moreover, if you're running short of spare disks and immediately a disk fails in the RAID group, then you will face a severe problem.

RAID requires a set of identical disk drives in a single RAID group; you would face penalties if you change the disk size, rpm, or disk type. Doing so would adversely affect the capacity and performance of your storage system. This makes RAID highly choosy about hardware.

Also, enterprise RAID-based systems often require expensive hardware components, such as RAID controllers, which significantly increases the system cost. These RAID controllers will become the single points of failure if you do not have many of them.

RAID can hit a dead end when it's not possible to grow the RAID group size, which means that there is no scale out support. After a point, you cannot grow your RAID-based system, even though you have money. Some systems allow the addition of disk shelves, but up to a very limited capacity; however, these new disk shelves put a load on the existing storage controller. So, you can gain some capacity, but with a performance tradeoff.

RAID can be configured with a variety of different types; the most common types are RAID5 and RAID6, which can survive the failure of one and two disks respectively. RAID cannot ensure data reliability after a two-disk failure. This is one of the biggest drawbacks with RAID systems.

Moreover, at the time of a RAID rebuild operation, client requests are most likely to starve for IO until the rebuild completes. Another limiting factor with RAID is that it only protects against disk failure; it cannot protect against a failure of the network, server hardware, OS, power, or other datacenter disasters.

After discussing RAID's drawbacks, we can come to the conclusion that we now need a system that can overcome all these drawbacks in a performance and cost effective way. The Ceph storage system is one of the best solutions available today to address these problems. Let's see how.

For reliability, Ceph makes use of the data replication method, which means it does not use RAID, thus overcoming all the problems that can be found in a RAID-based enterprise system. Ceph is software-defined storage, so we do not require any specialized hardware for data replication; moreover, the replication level is highly customizable by means of commands, which means that the Ceph storage administrator can set the replication factor anywhere from a minimum of one to a higher number, depending entirely on the underlying infrastructure.

In the event of one or more disk failures, Ceph's replication is a better process than RAID. When a disk drive fails, all the data that was residing on that disk at that point in time starts recovering from its peer disks. Since Ceph is a distributed system, all the data copies are scattered across the entire cluster of disks in the form of objects, such that no two copies of an object reside on the same disk and each copy resides in a different failure zone defined by the CRUSH map. The good part is that all the cluster disks participate in data recovery. This makes the recovery operation amazingly fast with the least performance problems. Further to this, the recovery operation does not require any spare disks; the data is simply replicated to other Ceph disks in the cluster. Ceph uses a weighting mechanism for its disks, so different disk sizes are not a problem.

In addition to the replication method, Ceph also supports another advanced way of data reliability: using the erasure-coding technique. Erasure coded pools require less storage space as compared to replicated pools. In erasure coding, data is recovered or regenerated algorithmically by erasure code calculation. You can use both the techniques of data availability, that is, replication as well as erasure coding, in the same Ceph cluster but over different storage pools. We will learn more about the erasure coding technique in the coming chapters.
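The space saving can be made concrete with a little arithmetic. In the sketch below, k and m denote the number of data chunks and coding chunks of an erasure-coded pool; the specific values used (three replicas, k=4, m=2, 100 TB of user data) are illustrative assumptions, not recommendations.

```python
def replicated_raw(usable_tb: float, replicas: int) -> float:
    """Raw capacity consumed when every object is stored `replicas` times."""
    return usable_tb * replicas


def erasure_coded_raw(usable_tb: float, k: int, m: int) -> float:
    """Raw capacity consumed when data is split into k data + m coding chunks."""
    return usable_tb * (k + m) / k


if __name__ == "__main__":
    usable = 100.0  # TB of user data (illustrative)
    print("3x replication:", replicated_raw(usable, 3), "TB raw")
    print("EC k=4, m=2:   ", erasure_coded_raw(usable, 4, 2), "TB raw")
```

With these numbers, replication consumes 300 TB of raw capacity while the erasure-coded layout consumes 150 TB and can still lose any two chunks of an object. The price is the extra computation needed to regenerate data during recovery.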

The Ceph internal architecture is pretty straightforward, and we will learn it with the help of the following diagram:

Ceph Monitors (MON): Ceph monitors track the health of the entire cluster by keeping a map of the cluster state. They maintain a separate map of information for each component, which includes an OSD map, MON map, PG map (discussed in later chapters), and CRUSH map. All the cluster nodes report to the monitor nodes and share information about every change in their state. The monitor does not store actual data; this is the job of the OSD.

Ceph Object Storage Device (OSD): As soon as your application issues a write operation to the Ceph cluster, data gets stored in the OSD in the form of objects. This is the only component of the Ceph cluster where actual user data is stored, and the same data is retrieved when the client issues a read operation. Usually, one OSD daemon is tied to one physical disk in your cluster. So, in general, the total number of physical disks in your Ceph cluster is the same as the number of OSD daemons working underneath to store user data on each physical disk.

Ceph Metadata Server (MDS): The MDS keeps track of the file hierarchy and stores metadata only for the CephFS filesystem. The Ceph block device and RADOS gateway do not require metadata; hence, they do not need the Ceph MDS daemon. The MDS does not serve data directly to clients, thus removing the single point of failure from the system.

RADOS: The Reliable Autonomic Distributed Object Store (RADOS) is the foundation of the Ceph storage cluster. Everything in Ceph is stored in the form of objects, and the RADOS object store is responsible for storing these objects irrespective of their data types. The RADOS layer makes sure that data always remains consistent. To do this, it performs data replication, failure detection and recovery, as well as data migration and rebalancing across cluster nodes.

librados: The librados library is a convenient way to gain access to RADOS, with support for the PHP, Ruby, Java, Python, C, and C++ programming languages. It provides a native interface for the Ceph storage cluster (RADOS), as well as a base for other services such as RBD, RGW, and CephFS, which are built on top of librados. librados also supports direct access to RADOS from applications with no HTTP overhead.

RADOS Block Devices (RBDs): RBDs, which are now known as the Ceph block device, provide persistent block storage that is thin-provisioned and resizable, and that stores data striped over multiple OSDs. The RBD service has been built as a native interface on top of librados.

RADOS Gateway interface (RGW): RGW provides object storage service. It uses librgw (the Rados Gateway Library) and librados, allowing applications to establish connections with the Ceph object storage. The RGW provides RESTful APIs with interfaces that are compatible with Amazon S3 and OpenStack Swift.

CephFS: The Ceph File system provides a POSIX-compliant file system that uses the Ceph storage cluster to store user data on a filesystem. Like RBD and RGW, the CephFS service is also implemented as a native interface to librados.
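To make the striping of a block device over objects more concrete, here is a minimal sketch that maps a byte offset in an image to the object that holds it. It assumes a fixed object size of 4 MiB and the simplest possible layout (one object after another); the function name is hypothetical, and the sketch deliberately ignores any advanced striping options.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # assumed object size: 4 MiB


def locate(offset: int, object_size: int = OBJECT_SIZE) -> tuple:
    """Map a byte offset in a block image to (object index, offset in object)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    return divmod(offset, object_size)


if __name__ == "__main__":
    for off in (0, 4 * 1024 * 1024 - 1, 4 * 1024 * 1024, 10 * 1024 * 1024):
        index, inner = locate(off)
        print(f"image offset {off:>9} -> object #{index}, offset {inner}")
```

Because each of these objects is placed in the cluster independently, a large image ends up spread over many OSDs, and objects that have never been written do not need to exist, which is what makes thin provisioning possible.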

A Ceph storage cluster is created on top of the commodity hardware. This commodity hardware includes industry standard servers loaded with physical disk drives that provide storage capacity and some standard networking infrastructure. These servers run standard Linux distributions and Ceph software on top of them. The following diagram helps you understand a basic view of a Ceph cluster:

As explained earlier, Ceph does not have a very specific hardware requirement. For the purpose of testing and learning, we can deploy a Ceph cluster on top of virtual machines. In this section and in the later chapters of this book, we will be working on a Ceph cluster which is built on top of virtual machines. It's very convenient to use a virtual environment to test Ceph, as it's fairly easy to set up and can be destroyed and recreated any time. It's good to know that a virtual infrastructure for the Ceph cluster should not be used for a production environment, and you might face serious problems with this.

To set up a virtual infrastructure, you will require open source software such as Oracle VirtualBox and Vagrant to automate virtual machine creation for you. Make sure you have the following software installed and working correctly on your host machine. The installation processes of the software are beyond the scope of this book; you can follow their respective documentation in order to get them installed and working correctly.

You will need the following software to get started:

Oracle VirtualBox: This is an open source virtualization software package for host machines based on x86 and AMD64/Intel64. It supports Microsoft Windows, Linux, and Apple Mac OS X host operating systems. Make sure it's installed and working correctly. More information can be found at https://www.virtualbox.org. Once you have installed VirtualBox, run the following command to verify the installation:

# VBoxManage --version

Vagrant: This is software meant for creating virtual development environments. It works as a wrapper around virtualization software such as VirtualBox, VMware, KVM, and so on. It supports the Microsoft Windows, Linux, and Apple Mac OS X host operating systems. Make sure it's installed and working correctly. More information can be found at https://www.vagrantup.com/. Once you have installed Vagrant, run the following command to verify the installation:

# vagrant --version

Git: This is a distributed revision control system and the most popular and widely adopted version control system for software development. It supports Microsoft Windows, Linux, and Apple Mac OS X operating systems. Make sure it's installed and working correctly. More information can be found at http://git-scm.com/. Once you have installed Git, run the following command to verify the installation:

# git --version

Once you have installed the mentioned software, we will then proceed with virtual machine creation:

Git clone the ceph-cookbook repository to your VirtualBox host machine:

$ git clone https://github.com/ksingh7/ceph-cookbook.git

Under the cloned directory, you will find Vagrantfile, which is our Vagrant configuration file that basically instructs VirtualBox to launch the VMs that we require at different stages of this book. Vagrant will automate the VM's creation, installation, and configuration for you; it makes the initial environment easy to set up:

$ cd ceph-cookbook ; ls -l

Next, we will launch three VMs using Vagrant; they are required throughout this chapter:

$ vagrant up ceph-node1 ceph-node2 ceph-node3

Check the status of your virtual machines:

$ vagrant status ceph-node1 ceph-node2 ceph-node3

Vagrant will, by default, set up hostnames as ceph-node<node_number>, IP address subnet as 192.168.1.X, and will create three additional disks that will be used as OSDs by the Ceph cluster. Log in to each of these machines one by one and check if the hostname, networking, and additional disks have been set up correctly by Vagrant:

$ vagrant ssh ceph-node1
$ ip addr show
$ sudo fdisk -l
$ exit

Vagrant is configured to update the hosts file on the VMs. For convenience, update the /etc/hosts file on your host machine with the following content:

192.168.1.101 ceph-node1
192.168.1.102 ceph-node2
192.168.1.103 ceph-node3

Generate root SSH keys for ceph-node1 and copy the keys to ceph-node2 and ceph-node3. The password for the root user on these VMs is vagrant. Enter the root user password when it's asked by the ssh-copy-id command and proceed with the default settings:

$ vagrant ssh ceph-node1
$ sudo su -
# ssh-keygen
# ssh-copy-id root@ceph-node2
# ssh-copy-id root@ceph-node3

Once the ssh keys are copied to ceph-node2 and ceph-node3, the root user from ceph-node1 can do an ssh login to the VMs without entering the password:

# ssh ceph-node2 hostname
# ssh ceph-node3 hostname

Enable ports that are required by the Ceph MON, OSD, and MDS on the operating system's firewall. Execute the following commands on all VMs:

# firewall-cmd --zone=public --add-port=6789/tcp --permanent
# firewall-cmd --zone=public --add-port=6800-7100/tcp --permanent
# firewall-cmd --reload
# firewall-cmd --zone=public --list-all

Disable SELinux on all the VMs:

# setenforce 0
# sed -i 's/SELINUX.*=.*enforcing/SELINUX=disabled/g' /etc/selinux/config

Install and configure ntp on all VMs:

# yum install ntp ntpdate -y
# ntpdate pool.ntp.org
# systemctl restart ntpdate.service
# systemctl restart ntpd.service
# systemctl enable ntpd.service
# systemctl enable ntpdate.service

Add repositories on all nodes for the Ceph Giant version and update yum:

# rpm -Uhv http://ceph.com/rpm-giant/el7/noarch/ceph-release-1-0.el7.noarch.rpm
# yum update -y

To deploy our first Ceph cluster, we will use the ceph-deploy tool to install and configure Ceph on all three virtual machines. The ceph-deploy tool is a part of the Ceph software-defined storage, which is used for easier deployment and management of your Ceph storage cluster. In the previous section, we created three virtual machines with CentOS7, which have connectivity with the Internet over NAT, as well as private host-only networks.

We will configure these machines as Ceph storage clusters, as mentioned in the following diagram:

We will first install Ceph and configure ceph-node1 as the Ceph monitor and the Ceph OSD node. Later recipes in this chapter will introduce ceph-node2 and ceph-node3.

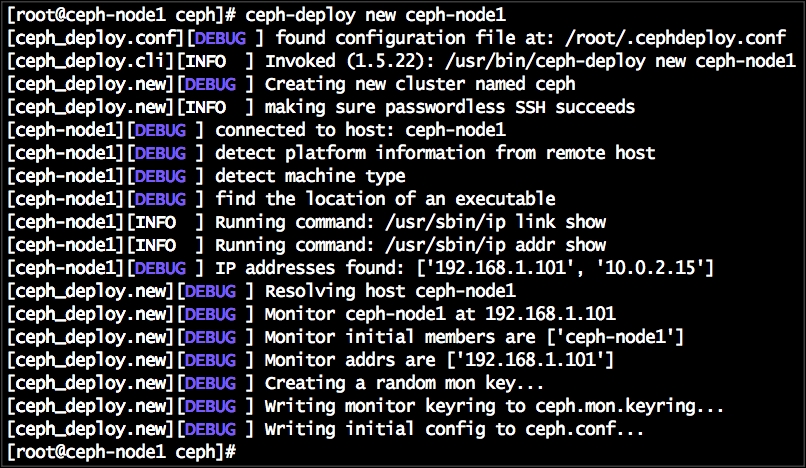

Install ceph-deploy on ceph-node1:

# yum install ceph-deploy -y

Next, we will create a Ceph cluster using ceph-deploy by executing the following commands from ceph-node1:

# mkdir /etc/ceph ; cd /etc/ceph
# ceph-deploy new ceph-node1

The new subcommand in ceph-deploy deploys a new cluster with ceph as the cluster name, which is the default; it generates a cluster configuration and keyring files. List the present working directory; you will find the ceph.conf and ceph.mon.keyring files:

To install Ceph software binaries on all the machines using

ceph-deploy, execute the following command fromceph-node1:# ceph-deploy install ceph-node1 ceph-node2 ceph-node3The

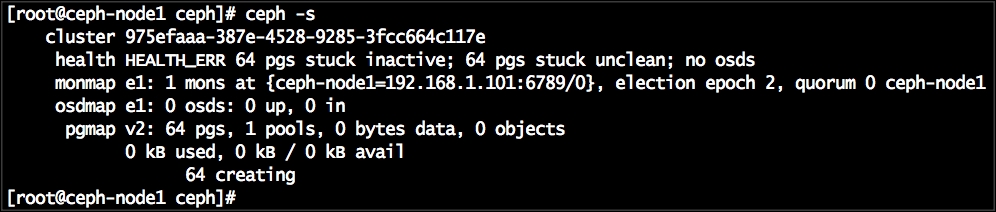

ceph-deploytool will first install all the dependencies followed by the Ceph Giant binaries. Once the command completes successfully, check the Ceph version and Ceph health on all the nodes, as shown as follows:# ceph -vCreate first the Ceph monitor in

ceph-node1:# ceph-deploy mon create-initialOnce the monitor creation is successful, check your cluster status. Your cluster will not be healthy at this stage:

# ceph -s

Create OSDs on ceph-node1:

List the available disks on ceph-node1:

# ceph-deploy disk list ceph-node1

From the output, carefully select the disks (other than the OS partition) on which we should create the Ceph OSD. In our case, the disk names are sdb, sdc, and sdd.

The disk zap subcommand will destroy the existing partition table and content from the disk. Before running this command, make sure that you are using the correct disk device name:

# ceph-deploy disk zap ceph-node1:sdb ceph-node1:sdc ceph-node1:sdd

The osd create subcommand will first prepare the disk, that is, format the disk with a filesystem (xfs by default), and then it will activate the disk's first partition as the data partition and its second partition as the journal:

# ceph-deploy osd create ceph-node1:sdb ceph-node1:sdc ceph-node1:sdd

Check the Ceph status and notice the OSD count. At this stage, your cluster will not be healthy; we need to add a few more nodes to the Ceph cluster so that it can replicate objects three times (by default) across the cluster and attain a healthy status. You will find more information on this in the next recipe:

# ceph -s

At this point, we have a running Ceph cluster with one MON and three OSDs configured on ceph-node1. Now, we will scale up the cluster by adding ceph-node2 and ceph-node3 as MON and OSD nodes.

A Ceph storage cluster requires at least one monitor to run. For high availability, a Ceph storage cluster relies on an odd number of monitors greater than one, for example, 3 or 5, to form a quorum. It uses the Paxos algorithm to maintain quorum majority. Since we already have one monitor running on ceph-node1, let's create two more monitors for our Ceph cluster:

Add a public network address to the /etc/ceph/ceph.conf file on ceph-node1:

public network = 192.168.1.0/24

From ceph-node1, use ceph-deploy to create a monitor on ceph-node2:

# ceph-deploy mon create ceph-node2

Repeat this step to create a monitor on ceph-node3:

# ceph-deploy mon create ceph-node3

Check the status of your Ceph cluster; it should show three monitors in the MON section:

# ceph -s
# ceph mon stat
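The preference for an odd number of monitors follows from simple majority arithmetic, which the following sketch spells out. It models only the counting behind a strict majority, not the Paxos protocol itself, and the function names are hypothetical.

```python
def quorum_size(monitors: int) -> int:
    """Smallest number of monitors that forms a strict majority."""
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """Monitors that can fail while a majority remains."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} monitors: quorum {quorum_size(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Three monitors tolerate one failure and five tolerate two, whereas four monitors still tolerate only one; an even count adds a daemon to run without adding any failure tolerance.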

You will notice that your Ceph cluster is currently showing HEALTH_WARN; this is because we have not configured any OSDs other than those on ceph-node1. By default, the data in a Ceph cluster is replicated three times, on three different OSDs hosted on three different nodes. Now, we will configure OSDs on ceph-node2 and ceph-node3:

Use ceph-deploy from ceph-node1 to perform a disk list, disk zap, and OSD creation on ceph-node2 and ceph-node3:

# ceph-deploy disk list ceph-node2 ceph-node3
# ceph-deploy disk zap ceph-node2:sdb ceph-node2:sdc ceph-node2:sdd
# ceph-deploy disk zap ceph-node3:sdb ceph-node3:sdc ceph-node3:sdd
# ceph-deploy osd create ceph-node2:sdb ceph-node2:sdc ceph-node2:sdd
# ceph-deploy osd create ceph-node3:sdb ceph-node3:sdc ceph-node3:sdd

Since we have added more OSDs, we should tune the pg_num and pgp_num values for the rbd pool to achieve a HEALTH_OK status for our Ceph cluster:

# ceph osd pool set rbd pg_num 256
# ceph osd pool set rbd pgp_num 256

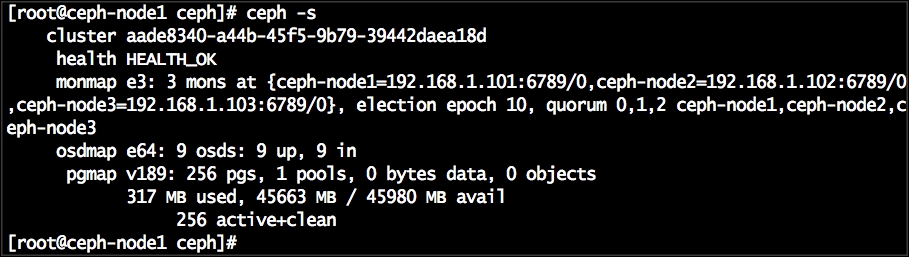

Check the status of your Ceph cluster; at this stage, your cluster will be healthy.
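The value 256 set for pg_num and pgp_num is consistent with a commonly cited rule of thumb for sizing placement groups: aim for roughly 100 PGs per OSD, divide by the replica count, and round to a power of two. The sketch below encodes one variant of that rule (keep the lower power of two unless it falls more than 25 percent short of the target). Both the target of 100 and the rounding rule are assumptions made for illustration and are not prescribed by this recipe; check the Ceph documentation for your release before sizing a real pool.

```python
def suggested_pg_num(osds: int, replicas: int, target_per_osd: int = 100) -> int:
    """Rule-of-thumb PG count for a single pool, rounded to a power of two."""
    raw = osds * target_per_osd / replicas
    lower = 1
    while lower * 2 <= raw:
        lower *= 2
    # Keep the lower power of two unless it is more than 25% below the target.
    return lower if lower >= 0.75 * raw else lower * 2


if __name__ == "__main__":
    # Nine OSDs (three per node) and three replicas, as in this chapter.
    print(suggested_pg_num(osds=9, replicas=3))
```

For nine OSDs and three replicas, the raw target is 300, and the rule settles on 256.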

Now that we have a running Ceph cluster, we will perform some hands-on practice to gain experience with Ceph, using some basic commands.

Check the status of your Ceph installation:

# ceph -s

or

# ceph status

Watch the cluster health:

# ceph -w

Check the Ceph monitor quorum status:

# ceph quorum_status --format json-pretty

Dump the Ceph monitor information:

# ceph mon dump

Check the cluster usage status:

# ceph df

Check the Ceph monitor, OSD, and placement group stats:

# ceph mon stat
# ceph osd stat
# ceph pg stat

List the placement groups:

# ceph pg dump

List the Ceph pools:

# ceph osd lspools

Check the CRUSH map view of OSDs:

# ceph osd tree

List the cluster authentication keys:

# ceph auth list

These were some basic commands that we learned in this section. In the upcoming chapters, we will learn advanced commands for Ceph cluster management.