In this chapter, we will introduce the basic principles of clustering and show how to set up two Linux servers as members of a cluster, step by step.

As part of this process, we will install the CentOS 7 Linux distribution from scratch, along with the necessary packages, and finally configure key-based authentication for SSH access from one computer to the other. Unless noted otherwise, all commands must be run as root and are indicated by a leading $ sign throughout this book.

In computer science, a cluster consists of a group of computers (with each computer referred to as a node or member) that work together so that the set is seen as a single system from the outside.

Enterprise and scientific environments often require both redundancy and high computing power to analyze the massive amounts of data produced every day. To keep results always available to the people who use or manage those services, we rely on the high availability and performance of computer systems. Internet websites, such as those of banks and other commercial institutions, which must perform well under significant load, are a clear example of the advantages of using clusters.

There are two typical cluster setups. The first one involves assigning a different task to each node, thus achieving higher performance than a single member performing several tasks on its own. Another classic use of clustering is to help ensure high availability by providing failover capabilities, where one node automatically replaces a failed member to minimize the downtime of one or several critical services. In either case, clustering implies not only taking advantage of the computing capacity of each member alone, but also maximizing it by complementing it with the others.

This type of cluster setup is called high availability (HA), and it aims to minimize system downtime by failing services over from one node to another when one of them experiences an issue that renders it inoperative. As opposed to a switchover, which requires human intervention, a failover is performed automatically by the cluster, with little or no downtime. Ideally, the operation is transparent to end users and to clients outside the cluster.

The other setup, called a high-performance cluster (HPC), uses its nodes to perform operations in parallel in order to enhance the performance of one or more applications. HPCs are typically seen in scenarios involving applications and processes that work with large collections of data.

As mentioned earlier, we will build a cluster with two machines running Linux. This choice is driven by the low cost and stability of such a setup: there are no paid operating system or software licenses, and Linux can run on systems with modest resources (such as a Raspberry Pi or relatively old hardware). Thus, we can set up a cluster with very few resources and little money.

We will begin our own journey toward clustering by setting up the separate nodes that will make up our system. Our operating system of choice is Linux, with CentOS 7 as the distribution, the latest CentOS release at the time of writing. Its binary compatibility with Red Hat Enterprise Linux © (one of the most widely used distributions in enterprise and scientific environments), along with its well-proven stability, is the reason behind this decision.

Note

CentOS 7 is available for download, free of charge, from the project's web site at http://www.centos.org/. In addition to this, specific details about the release can always be consulted in release notes available through the CentOS wiki, http://wiki.centos.org/Manuals/ReleaseNotes/CentOS7.

To download CentOS, go to http://www.centos.org/download/ and click on one of the three options outlined in the following screenshot:

- DVD ISO: This is an .iso file (~4 GB) that can be written to regular DVD optical media and includes the common tools. Download this file if you have permanent access to a reliable Internet connection that you can use to download other packages and utilities later.
- Everything ISO: This is an .iso file (~7 GB) with the complete set of packages available in the base repository of CentOS 7. Download this file if you do not have permanent Internet access or if you plan to install or populate a local or network mirror.
- Alternative downloads: This link will take you to a public directory within an official nearby CentOS mirror where the previous options are available along with others, including different desktop versions (GNOME or KDE) and the minimal .iso file (~570 MB), which contains the core or essential packages of the distribution.

Although all three download options will work, we will use the minimal install, as it is sufficient for our purpose, and we can later install any other needed packages from public software repositories with yum, the standard CentOS package manager. The recommended .iso file is the latest one available from the download page, which at the time of writing is CentOS-7.0-1406-x86_64-Minimal.iso.
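Before using the downloaded image, it is a good idea to check that it arrived intact. CentOS mirrors publish checksum lists next to the ISO files; the sketch below assumes you have downloaded the SHA-256 list (typically named sha256sum.txt, but check the mirror directory you used). verify_iso is a hypothetical helper name, not part of CentOS.

```shell
# Verify a downloaded ISO image against a list of SHA-256 checksums.
# Assumption: the list uses sha256sum's "<hash>  <file>" (or "<hash> *<file>") format.
verify_iso() {
    local iso="$1" sums="$2" name dir
    name="$(basename "$iso")"
    dir="$(dirname "$iso")"
    # Keep only the line for this ISO, so other images listed in the file
    # (which we did not download) are not reported as missing.
    awk -v f="$name" '$2 == f || $2 == "*" f' "$sums" |
        (cd "$dir" && sha256sum -c -)
}

# Example:
#   verify_iso ~/Downloads/CentOS-7.0-1406-x86_64-Minimal.iso ~/Downloads/sha256sum.txt
```

A matching image prints `<name>: OK` and returns 0; a corrupted one prints `FAILED` and returns a non-zero status.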

If you do not have dedicated hardware for the nodes of your cluster, you can still create them as virtual machines with virtualization software such as Oracle VirtualBox © or VMware ©. Using virtualization will allow us to easily set up the second node by cloning the first. The only limitation in this case is that we will not have a STONITH device available. Shoot The Other Node In The Head (STONITH) is a mechanism that aims to prevent two nodes from acting as the primary node in an HA cluster, thus avoiding the possibility of data corruption.

The following setup will be performed on a VirtualBox VM with 1 GB of RAM, 30 GB of disk space, and two network interface cards. The first one will allow us to reach the Internet to download other packages, whereas the second will be needed to create a shared IP address to reach the cluster as a whole.

The reason why I have chosen VirtualBox over VMware is that the former is free of cost and is available for Microsoft Windows, Linux, and MacOS, while a full version of the latter costs money.

To download and install VirtualBox, go to https://www.virtualbox.org/ and choose the version for your operating system. For the installation instructions, you can refer to https://www.virtualbox.org/manual/UserManual.html, especially sections 1.7 Creating your first virtual machine, and 1.13 Cloning virtual machines.

Other than that, you will also need to ensure that your virtual machine has two network interface cards. The first one is enabled by default, while the second one has to be enabled manually.

To display the current network configuration for a VM, click on it in Virtualbox's main interface and then on the Settings button. A popup window will appear with the list of the different hardware categories. Choose Network and configure Adapter 1 to Bridged Adapter, as shown in the following screenshot:

Click on Adapter 2, enable it by checking the corresponding checkbox and configure it as part of an Internal Network named intnet, as shown in the following screenshot:
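If you prefer the command line, the same two adapter settings can be applied with VBoxManage, the command-line front end that ships with VirtualBox. This is a minimal sketch, assuming the VM is powered off and that you pass the name of the host interface to bridge (for example eth0 or en0; `VBoxManage list bridgedifs` shows the valid names on your host). The function name configure_nics is our own.

```shell
# Configure both NICs of a powered-off VirtualBox VM.
# Assumptions: VM name and host interface are supplied by you; "intnet" is the
# internal network name used in the GUI steps above.
configure_nics() {
    local vm="$1" host_if="$2"
    # Adapter 1: bridged to the host's physical interface (Internet and SSH access)
    VBoxManage modifyvm "$vm" --nic1 bridged --bridgeadapter1 "$host_if"
    # Adapter 2: attached to the internal network "intnet" (cluster traffic)
    VBoxManage modifyvm "$vm" --nic2 intnet --intnet2 intnet
}

# Example (on the host):
#   configure_nics node01 eth0
```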

We will use the default partitioning scheme (LVM) suggested by the installation process.

We will start by creating the first node step by step and then use the cloning feature in VirtualBox to create an identical node. This reduces the installation time, as the clone only requires slight modifications to its hostname and network settings. Follow these steps to install CentOS 7 in a virtual machine:

The splash screen shown in the following screenshot is the first step in the installation process after loading the installation media on boot. Highlight Install CentOS 7 using the up and down arrows and press Enter:

Select English (or your preferred installation language) and click on Continue:

In the next screen, you can set the current date and time, choose a keyboard layout and language support, pick a hard drive destination for the installation along with a partitioning method, connect the main network interface, and assign a unique hostname for the node. We will name the current node node01 and leave the rest of the settings at their defaults (we will configure the extra network card later). Then, click on the Begin installation button.

While the installation continues in the background, we will be prompted to set the password for the root account and create an administrative user for the node. Once these steps have been confirmed, the corresponding warnings will no longer appear:

When the process is completed, click on Finish configuration and the installation will finish configuring the system and the devices. When the system is ready to boot on its own, you will be prompted to do so. Remove the installation media and click on Reboot.

After successfully restarting the computer and booting into a Linux prompt, our first task will be to update our system. However, before we can do this, we first have to set up our basic network adapter to access the Internet to download and update packages. Then, we will be able to proceed with setting up our network interfaces.

Since our nodes will communicate between each other over the network, we will first define our network addresses and configuration. Our rather basic network infrastructure will consist of two CentOS 7 boxes with static IP addresses and host names node01 [192.168.0.2] and node02 [192.168.0.3], and a gateway router called simply gateway [192.168.0.1].

In CentOS, all network interfaces are configured using scripts in the /etc/sysconfig/network-scripts directory. If you followed the steps outlined earlier to create a second network interface, you should have an ifcfg-enp0s3 and an ifcfg-enp0s8 file inside that directory. The first one is the configuration file for the network card that we will use to access the Internet and to connect via SSH from an outside client, whereas the second will be used in a later chapter as part of a cluster resource. Note that the exact names of the network interfaces may differ slightly, but it is safe to assume that they will follow the ifcfg-enp0sX format, where X is an integer.

This is the minimum content needed in the /etc/sysconfig/network-scripts/ifcfg-enp0s3 file for our purposes on the first node (when you set up the second node later, just change the IP address (IPADDR) to 192.168.0.3):

HWADDR="08:00:27:C8:C2:BE"
TYPE="Ethernet"
BOOTPROTO="static"
NAME="enp0s3"
ONBOOT="yes"
IPADDR="192.168.0.2"
NETMASK="255.255.255.0"
GATEWAY="192.168.0.1"
PEERDNS="yes"
DNS1="8.8.8.8"
DNS2="8.8.4.4"

Note that the UUID and HWADDR values will be different in your case as they are assigned as part of the underlying hardware. For this reason, it is safe to leave the default values for those settings. In addition to this, beware that cluster machines need to be assigned a static IP address—never leave that up to DHCP! In the configuration file used previously, we are using Google's DNS but if you wish to, feel free to use another DNS.

When you are done making changes, save the file and restart the network service in order to apply them. Since CentOS, beginning with version 7, uses systemd instead of SysVinit for service management, we will use the systemctl command instead of the /etc/init.d scripts to restart the services throughout this book, as follows:

$ systemctl restart network.service # Restart the network service

You can check that the network service restarted without errors using the following command:

$ systemctl status network.service # Display the status of the network service

To confirm that the new IP address has actually been assigned to the interface, run the following command:

$ ip addr | grep 'inet' # Display the IP addresses

You can disregard any error messages related to the loopback interface, as shown in the preceding screenshot. However, you will need to carefully examine any error messages related to enp0s3 and resolve them before proceeding further.
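To check the result non-interactively (for example, from a script run on both nodes), the one-line output of ip (`ip -o`) is easier to parse than the grep above. A small sketch; the helper names ipv4_addrs and expect_ip are hypothetical:

```shell
# Print each IPv4 address (CIDR form) found in `ip -o -4 addr show` output on stdin.
ipv4_addrs() {
    # With -o, each address is one line: index, device, "inet", address, ...
    awk '$3 == "inet" { print $4 }'
}

# Succeed if the given device has the expected address/prefix among its IPv4 addresses.
expect_ip() {
    local dev="$1" want="$2"
    ip -o -4 addr show dev "$dev" | ipv4_addrs | grep -qxF "$want"
}

# Example (on node01):
#   expect_ip enp0s3 192.168.0.2/24 && echo "enp0s3 OK"
```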

The second interface will be called enp0sX, where X is typically 8, as it is in our case. You can verify this with the following command, whose output is shown in the next screenshot:

$ ip link show

As for the configuration file of enp0s8, you can create it by copying the contents of ifcfg-enp0s3. Do not forget, however, to change the hardware (MAC) address to the one reported for this NIC by the ip link show enp0s8 command, to set NAME to enp0s8, and to leave the IP address field blank for now. Use the following commands:

$ ip link show enp0s8
$ cp /etc/sysconfig/network-scripts/ifcfg-enp0s3 /etc/sysconfig/network-scripts/ifcfg-enp0s8
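The edits to the copied file can also be made with sed. This sketch assumes the copied file contains NAME, HWADDR and IPADDR lines like the ones shown earlier; it renames the connection (NAME, and DEVICE if present) so the two files do not describe the same interface, and drops any UUID line, since two connections should not share one. Reading the MAC address from /sys/class/net/enp0s8/address is our choice; it is the same value ip link show enp0s8 reports. derive_ifcfg is a hypothetical helper.

```shell
# Create the enp0s8 config from a copy of the enp0s3 one.
# Usage: derive_ifcfg SRC DST NEW_DEVICE MAC
# Keys missing from SRC (e.g. DEVICE) are simply not added.
derive_ifcfg() {
    local src="$1" dst="$2" dev="$3" mac="$4"
    sed -e "s/^NAME=.*/NAME=\"${dev}\"/" \
        -e "s/^DEVICE=.*/DEVICE=\"${dev}\"/" \
        -e "s/^HWADDR=.*/HWADDR=\"${mac}\"/" \
        -e 's/^IPADDR=.*/IPADDR=""/' \
        -e '/^UUID=/d' \
        "$src" > "$dst"
}

# Example (as root on node01):
#   cd /etc/sysconfig/network-scripts
#   derive_ifcfg ifcfg-enp0s3 ifcfg-enp0s8 enp0s8 "$(cat /sys/class/net/enp0s8/address)"
```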

Next, restart the network service as explained earlier.

Note that you will also need to set up at least a basic name resolution method. Since our cluster will have only two nodes, we will use /etc/hosts on both of them for this purpose.

Add the following entries to /etc/hosts on both nodes:

192.168.0.2 node01
192.168.0.3 node02
192.168.0.1 gateway

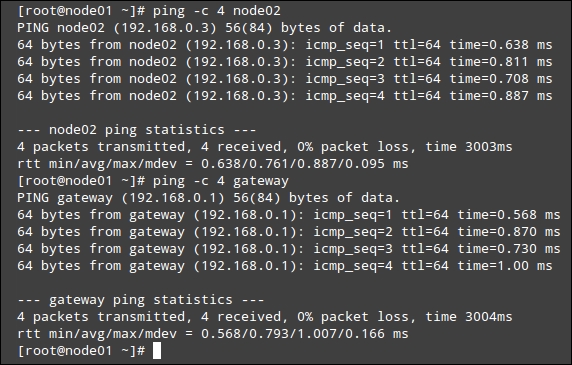

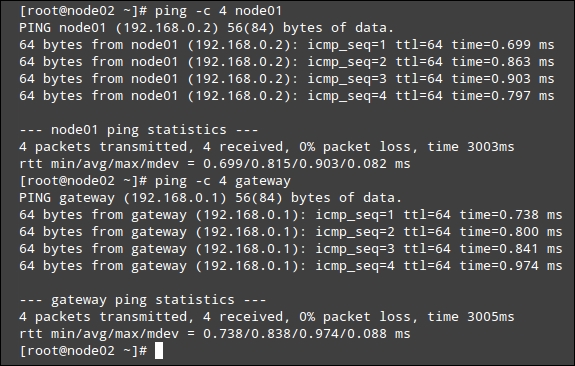
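If you script the node setup, appending the entries only when they are missing keeps the step safe to rerun. A minimal sketch; add_host_entry is a hypothetical helper that treats a name as present if it appears as a hostname or alias on any non-comment line:

```shell
# Append "IP<TAB>NAME" to a hosts file unless NAME is already listed.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Look for NAME in fields 2..NF; lines whose first field starts with # are skipped.
    if awk -v n="$name" '
        $1 ~ /^#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        echo "$name already present in $file, skipping"
        return 0
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$file"
}

# Example (as root, on both nodes):
#   add_host_entry /etc/hosts 192.168.0.2 node01
#   add_host_entry /etc/hosts 192.168.0.3 node02
#   add_host_entry /etc/hosts 192.168.0.1 gateway
```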

Once you have set up both nodes as explained in the following sections, and before proceeding further, you can run ping as a basic connectivity test to ensure that the two hosts can reach each other.

To begin, execute in node01:

$ ping -c 4 node02

Next, do the same in node02:

$ ping -c 4 node01
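The two ping tests can be wrapped in a loop that first checks name resolution, so that a typo in /etc/hosts is not mistaken for a network problem. A sketch, assuming the iputils ping shipped with CentOS (whose -W option sets the reply timeout in seconds); check_peers is a hypothetical name:

```shell
# Check that each given host resolves and answers ping; return 1 if any fails.
check_peers() {
    local host rc=0
    for host in "$@"; do
        # Resolution first: a failure here points at /etc/hosts, not the network
        if ! getent hosts "$host" > /dev/null; then
            echo "$host: does not resolve (check /etc/hosts)"
            rc=1
            continue
        fi
        # Four echo requests; -W 2 waits up to 2 seconds for a reply
        if ping -c 4 -W 2 "$host" > /dev/null 2>&1; then
            echo "$host: reachable"
        else
            echo "$host: unreachable"
            rc=1
        fi
    done
    return "$rc"
}

# Example: on node01 run `check_peers node02 gateway`; on node02, `check_peers node01 gateway`.
```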

Once we have finished installing the operating system and configuring the basic network infrastructure, we are ready to install the packages that will provide the clustering functionality to each node. Let's emphasize here that without these core components, our two nodes would become simple standalone servers that would not be able to support each other in the event of a system crash or another major issue in one of them.

Each node will need the following software components in order to work as a member of the cluster. These packages are fully supported in CentOS 7 as part of a cluster setup, as opposed to alternatives that have been deprecated (such as the cman and rgmanager stack used in earlier releases):

Pacemaker: This is a cluster resource manager that runs scripts at boot time, when individual nodes go up or down or when related resources fail. In addition, it can be configured to periodically check the health status of each cluster member. In other words, pacemaker will be in charge of starting and stopping services (such as a web or database server, to name a classic example) and will implement the logic to ensure that all of the necessary services are running in only one location at the same time in order to avoid data failure or corruption.

Corosync: This is a messaging service that will provide a communication channel between nodes. As you can guess, corosync is essential for pacemaker to perform its job.

PCS: This is a corosync and pacemaker configuration tool that will allow you to easily view, modify, and create pacemaker-based clusters. It is optional rather than strictly necessary, but we install it because it will come in handy at a later stage.

To install the three preceding software packages, run the following command:

$ yum update && yum install pacemaker corosync pcs

Yum will update all the installed packages to their most recent version in order to better satisfy dependencies, and it will then proceed with the actual installation.

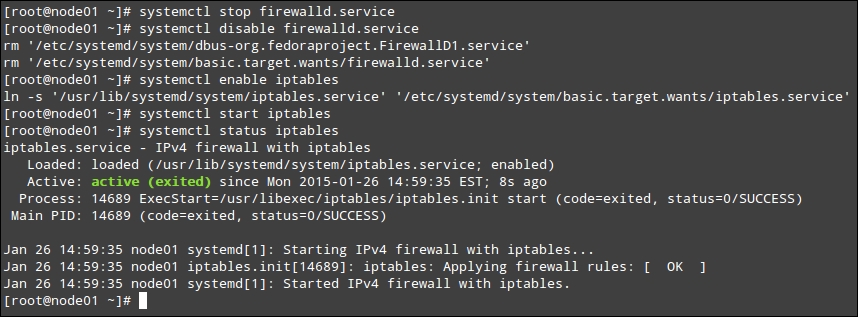

In addition to installing the preceding packages, we will replace firewalld, the default firewall in CentOS 7, with iptables. We choose iptables over firewalld because it is far more widely used, so you are more likely to be familiar with it than with the relatively new firewalld. We will install the necessary packages here and leave the configuration for the next chapter.

In order to manage iptables via systemd utilities, you will need to install (if it is not already installed) the iptables-services package using the following command:

$ yum update && yum install iptables-services

Now, you can stop and disable firewalld using the following commands:

$ systemctl stop firewalld.service
$ systemctl disable firewalld.service

Next, enable iptables to both initialize on boot and start during the current session:

$ systemctl enable iptables.service
$ systemctl start iptables.service

You can refer to the following screenshot for a step-by-step example of this process:
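Once the services have been switched, a quick check confirms that firewalld is no longer running and that iptables is both enabled at boot and active. A minimal sketch built only on systemctl's is-active and is-enabled queries; check_firewall is our own name:

```shell
# Report whether the firewalld -> iptables switch took effect.
# Returns 0 only if firewalld is inactive and iptables is enabled and active.
check_firewall() {
    local rc=0
    if systemctl is-active --quiet firewalld.service; then
        echo "firewalld is still running"
        rc=1
    fi
    if ! systemctl is-enabled --quiet iptables.service; then
        echo "iptables is not enabled at boot"
        rc=1
    fi
    if ! systemctl is-active --quiet iptables.service; then
        echo "iptables is not running"
        rc=1
    fi
    if [ "$rc" -eq 0 ]; then
        echo "firewalld stopped; iptables enabled and running"
    fi
    return "$rc"
}
```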

Once the installation of the first node (node01) has been completed successfully, clone it following section 1.13 of the VirtualBox manual (Cloning virtual machines). Once you're done cloning the virtual machine, make the following minor changes to the second virtual machine:

- Name the machine node02. When you start this newly created virtual machine, its hostname will still be set to node01. To change it, issue the following commands, which set the new hostname and reboot the machine to apply it:

$ hostnamectl set-hostname node02
$ systemctl reboot

- In the configuration file for enp0s3 in node02, enter 192.168.0.3 as the IP address and the correct HWADDR value (the clone's own MAC address, as reported by ip link show enp0s3).

Ensure that both virtual machines are running and that each node can ping the other and the gateway, as shown in the next two screenshots.
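For reference, both the cloning and the per-node changes above can also be done from the command line. The sketch below assumes the VBoxManage CLI on the host (clonevm assigns fresh MAC addresses to the clone unless told to keep them) and GNU sed inside node02; the helper names clone_node and retarget_ifcfg are hypothetical. If the clone received new MAC addresses, the same HWADDR update also applies to ifcfg-enp0s8.

```shell
# On the VirtualBox host, with node01 powered off: full clone registered as node02.
# clonevm reinitializes the clone's MAC addresses unless --options keepallmacs is given.
clone_node() {
    VBoxManage clonevm "$1" --name "$2" --register
}

# Inside node02: set the new IP and MAC address in an ifcfg file (GNU sed -i).
retarget_ifcfg() {
    local file="$1" ip="$2" mac="$3"
    sed -i -e "s/^IPADDR=.*/IPADDR=\"${ip}\"/" \
           -e "s/^HWADDR=.*/HWADDR=\"${mac}\"/" "$file"
}

# Example:
#   clone_node node01 node02                                   # on the host
#   retarget_ifcfg /etc/sysconfig/network-scripts/ifcfg-enp0s3 \
#       192.168.0.3 "$(cat /sys/class/net/enp0s3/address)"     # on node02
#   hostnamectl set-hostname node02 && systemctl reboot
```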

First, we will ping node02 and gateway from node01, and we will see the following output:

Then, we will ping node01 and gateway from node02:

If any of the pings do not return the expected result, as shown in the preceding screenshot, check the network interface configuration in both Virtualbox and in the configuration files, as outlined earlier in this chapter.

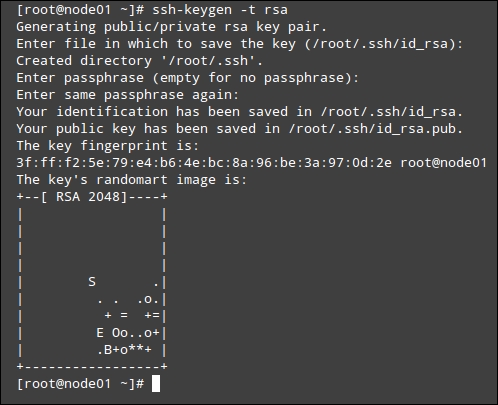

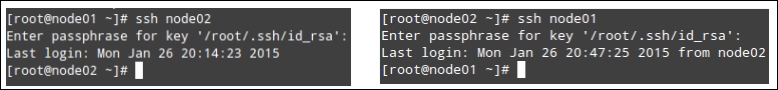

While not strictly required, we will also set up public key-based authentication for SSH so that we can access each host from the other without entering the account's password every time. This will come in handy if, for some reason, we need to perform a system administration task on one of the nodes from the other. Note that you will need to repeat this operation on both nodes.

To increase security, you may also enter a passphrase while creating the RSA key, as shown in the following screenshot. This step is optional; in fact, I advise you to leave the passphrase empty to make things easier down the road, but it's up to you.

Run the following command to create an RSA key:

$ ssh-keygen -t rsa

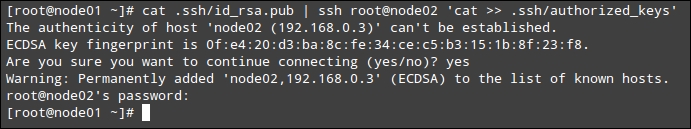

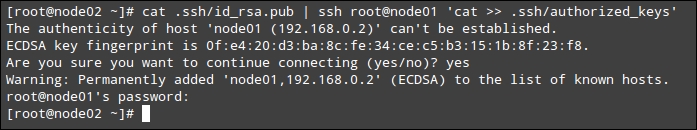
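The key pair can also be created non-interactively, which is handy when preparing both nodes from a script. A sketch, assuming the default key location; the 4096-bit key size is our choice rather than a requirement, and ensure_key is a hypothetical helper that leaves an existing key alone:

```shell
# Create an RSA key pair at the given path (default ~/.ssh/id_rsa) unless one exists.
# Usage: ensure_key [KEY_PATH] [PASSPHRASE]
ensure_key() {
    local key="${1:-$HOME/.ssh/id_rsa}" passphrase="${2:-}"
    if [ -f "$key" ]; then
        echo "$key already exists, leaving it untouched"
        return 0
    fi
    # Creates the key's directory with mode 700 if it does not exist yet
    mkdir -p -m 700 "$(dirname "$key")"
    # -N sets the passphrase (empty by default, as suggested above); -q keeps it quiet
    ssh-keygen -t rsa -b 4096 -N "$passphrase" -f "$key" -q
}
```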

To enable passwordless login, we will copy the newly created public key from node01 to node02, and vice versa (on node02, use node01 as the target in the command), as shown in the next two figures.

$ cat ~/.ssh/id_rsa.pub | ssh root@node02 'mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys'

Copy the key from node01 to node02:

Copy the key from node02 to node01:

Next, we need to verify that we can connect from each cluster member to the other without a password (you will still be asked for the key's passphrase if you entered one previously):
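The copy and the check can be scripted as well. push_key below is a sketch of the copy pipe shown earlier that also creates ~/.ssh on the peer if needed (OpenSSH's `ssh-copy-id root@node02` does the same job and is usually simpler). check_key_login uses the BatchMode option, which stops ssh from prompting for passwords or passphrases, so it only succeeds when key-based login works without interaction, that is, with an empty passphrase or a key loaded in ssh-agent. Both helper names are hypothetical.

```shell
# Append a public key (default ~/.ssh/id_rsa.pub) to root's authorized_keys on PEER.
push_key() {
    local peer="$1" pub="${2:-$HOME/.ssh/id_rsa.pub}"
    # The quoted command runs on the peer; umask 077 makes a newly created
    # ~/.ssh mode 700 and a new authorized_keys mode 600.
    ssh "root@${peer}" 'umask 077; mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys' < "$pub"
}

# Succeed only if root@PEER accepts key-based login without any prompt.
check_key_login() {
    ssh -o BatchMode=yes "root@$1" true
}

# Example (on node01): push_key node02 && check_key_login node02 && echo "key login OK"
```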

Finally, if passwordless login is not successful, you may want to ensure that the SSH daemon is running on both hosts:

$ systemctl status sshd

If it is not running, start it using the following command:

$ systemctl start sshd

You may want to check the status of the service again after attempting to restart it. If there have been any errors, the output of systemctl status sshd will give you indications as to what is wrong with the service and why it is refusing to start properly. Following those directions, you will be able to troubleshoot the problem without much hassle.
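The check-then-start sequence above can be sketched as a small function; the name ensure_sshd is our own, and it simply prints the output of systemctl status when the daemon still refuses to start:

```shell
# Make sure the SSH daemon is running; print its status if it will not start.
ensure_sshd() {
    if systemctl is-active --quiet sshd; then
        echo "sshd is running"
        return 0
    fi
    # Not running: try to start it, then confirm it stayed up
    if systemctl start sshd && systemctl is-active --quiet sshd; then
        echo "sshd started"
        return 0
    fi
    # Still down: the status output usually explains why
    systemctl status sshd
    return 1
}
```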

In this chapter, we reviewed how to install the operating system and installed the necessary software components to implement the basic cluster functionality. Ensure that you have installed your nodes, the basic clustering software as outlined earlier in this chapter, and configured the network and SSH access before proceeding with Chapter 2, Installing Cluster Services and Configuring Network Components, where we will configure the resource manager, the messaging layer, and the firewall service in order to actually start building our cluster.