Chapter 1: Understanding AWS Cloud Principles and Key Characteristics

You would be hard-pressed to talk to someone today who has not heard of the cloud. For most of its history, Amazon did not advertise, but when it did begin advertising on television, the first commercials were for Amazon Web Services (AWS) and not for its e-commerce division.

Even though the term cloud is pervasive today, not everyone understands what the cloud is. One reason this is the case is that the cloud can be different things for different people. Another reason is that the cloud is continuously evolving.

In this chapter, we will put our best foot forward and attempt to define the cloud, and then we will try to define the AWS cloud more specifically. We will also cover the vast and ever-growing influence and adoption of the cloud in general and AWS in particular. After that, we'll start introducing some elementary cloud and AWS terms to start getting our feet wet with the lingo.

We will then try to understand why cloud computing is so popular. Assuming you buy the premise that the cloud is taking the world by storm, we will then learn how we can take a slice of the cloud pie and build our credibility by becoming certified. Finally, toward the end of the chapter, we will look at some tips and tricks you can use to simplify your journey to obtain AWS certifications, and we will look at some frequently asked questions about AWS certifications.

In this chapter, we will cover the following topics:

- What is cloud computing?

- What is AWS cloud computing?

- The market share, influence, and adoption of AWS

- Basic cloud and AWS terminology

- Why is cloud computing so popular?

- The five pillars of a well-architected framework

- Building credibility by becoming certified

- Learning tips and tricks to obtain AWS certifications

- Some frequently asked questions about AWS certifications

Let's get started, shall we?

What is cloud computing?

Here's a dirty little secret that the cloud providers may not want you to know. Cloud providers use cryptic acronyms and fancy terms such as Elastic Compute Cloud (EC2) instances and S3 services (in the case of AWS), or Azure Virtual Machines (VMs) and blobs (in the case of Azure), but at its most basic level, the cloud is just a bunch of servers and other computing resources managed by a third-party provider in a data center somewhere.

But we had data centers and third-party-managed servers long before the term cloud became popular. So, what makes the cloud different from your run-of-the-mill data center?

Before we try to define cloud computing, let's analyze some of the characteristics that are common to many of the leading cloud providers.

Cloud elasticity

One important characteristic of the leading cloud providers is the ability to quickly and frictionlessly provision resources. These resources could be a single instance of a database or a thousand copies of the same server, used to handle your web traffic. These servers can be provisioned within minutes.

Contrast that with how performing the same operation may play out in a traditional on-premises environment. Let's use an example. Your boss comes to you and asks you to set up a cluster of computers to host your latest service. Your next actions probably look something like this:

- You visit the data center and realize that the current capacity is not enough to host this new service.

- You map out a new infrastructure architecture.

- You size the machines based on the expected load, adding a few more terabytes here and a few gigabytes there to make sure that you don't overwhelm the service.

- You submit the architecture for approval to the appropriate parties.

- You wait. Most likely for months.

Once the approvals finally come through, it is not uncommon to find that the market opportunity for this service is gone, or that demand has grown and the capacity you initially planned will no longer suffice.

It is difficult to overemphasize how important it is to deliver a solution quickly, and cloud technologies make that speed possible.

What do you think your boss would say if after getting everything set up in the data center and after months of approvals, you told them you made a mistake and you ordered an X213 server instead of an X312, which means you won't have enough capacity to handle the expected load and getting the right server will take a few more months? What do you think their mood would be like?

In a cloud environment, this is not necessarily a problem, because instead of needing potentially months to provision your servers, they can be provisioned in minutes.

Correcting the size of the server may be as simple as shutting down the server for a few minutes, changing a drop-down box value, and restarting the server again.
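Done programmatically rather than through the console, the same resize is a stop, a change of instance type, and a start. The following is a minimal sketch, assuming the AWS SDK for Python (boto3) and an EBS-backed instance. The function name `resize_instance` is our own invention for illustration, and the `ec2` argument is assumed to be a boto3 EC2 client.

```python
def resize_instance(ec2, instance_id, new_type):
    """Stop an EC2 instance, change its instance type, and start it again.

    `ec2` is assumed to be a boto3 EC2 client, for example boto3.client("ec2").
    """
    # The instance type can only be changed while the instance is stopped.
    ec2.stop_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[instance_id])

    # The programmatic equivalent of picking a new value in the drop-down box.
    ec2.modify_instance_attribute(
        InstanceId=instance_id,
        InstanceType={"Value": new_type},
    )

    # Bring the server back up on the new hardware size.
    ec2.start_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
```

With valid credentials configured, a call such as `resize_instance(boto3.client("ec2"), "i-0123456789abcdef0", "m5.xlarge")` would perform the resize. The instance ID and instance type shown here are placeholders.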

Hopefully, the unhappy boss example here drives our point home about the power of the cloud and the pattern that is emerging. The cloud exponentially improves time to market. And being able to deliver quickly may not just mean getting there first. It may be the difference between getting there first and not getting there at all.

Another powerful characteristic of a cloud computing environment is the ability to quickly shut down resources and, importantly, not be charged for that resource while it is down. Being able to shut down resources and not paying for them while they are down is not exclusive to AWS. Many of the most popular cloud providers offer this billing option.

In our continuing on-premises example, if we shut down one of our servers, do you think we can call the company that sold us the server and politely ask them to stop charging us because we shut the server down? That would be a very quick conversation and depending on how persistent we were, it would probably not be a very pleasant one. They are probably going to say, "You bought the server; you can do whatever you want to do with it, including using it as a paperweight." Once the server is purchased, it is a sunk cost for the duration of the useful life of the server.

In contrast, whenever we shut down a server in a cloud environment, the cloud provider can quickly detect that and put that server back into the pool of available servers for other cloud customers to use that newly unused capacity.

Cloud virtualization

Virtualization is the process of running multiple virtual instances on top of a physical computer system using an abstraction layer that sits on top of the actual hardware.

More commonly, virtualization refers to the practice of running multiple operating systems on a single computer at the same time. Applications that are running on VMs are oblivious to the fact that they are not running on a dedicated machine.

These applications are not aware that they are sharing resources with other applications on the same physical machine.

A hypervisor is a computing layer that enables multiple operating systems to execute on the same physical compute resource. The operating systems running on top of a hypervisor are VMs: components that emulate a complete computing environment using only software, as if they were running on bare metal.

Hypervisors, also known as Virtual Machine Monitors (VMMs), manage these VMs while running side by side. A hypervisor creates a logical separation between VMs, and it provides each of them with a slice of the available compute, memory, and storage resources.

This prevents VMs from clashing and interfering with each other. If one VM crashes and goes down, it will not take other VMs down with it. Also, if there is an intrusion in one VM, that VM is isolated from the rest.
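To make the idea of slicing a host concrete, here is a toy model in Python. It is only an illustration of the bookkeeping described above, not how any real hypervisor is implemented: it ignores CPU scheduling, overcommitment, and storage, and the class and method names (`ToyHypervisor`, `create_vm`, `crash_vm`) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyHypervisor:
    """Toy model of one physical host whose resources are sliced among VMs."""

    total_vcpus: int
    total_memory_gb: int
    vms: dict = field(default_factory=dict)  # VM name -> (vcpus, memory_gb)

    def free(self):
        """Return the (vcpus, memory_gb) not yet assigned to any VM."""
        used_cpu = sum(cpu for cpu, _ in self.vms.values())
        used_mem = sum(mem for _, mem in self.vms.values())
        return self.total_vcpus - used_cpu, self.total_memory_gb - used_mem

    def create_vm(self, name, vcpus, memory_gb):
        """Give a new VM its own slice, refusing requests that do not fit."""
        if name in self.vms:
            raise ValueError(f"a VM named {name!r} already exists")
        free_cpu, free_mem = self.free()
        if vcpus > free_cpu or memory_gb > free_mem:
            raise ValueError("not enough free capacity on this host")
        self.vms[name] = (vcpus, memory_gb)

    def crash_vm(self, name):
        """Remove one VM; only its own slice is released, the rest are untouched."""
        del self.vms[name]


host = ToyHypervisor(total_vcpus=16, total_memory_gb=64)
host.create_vm("web", vcpus=4, memory_gb=16)
host.create_vm("db", vcpus=8, memory_gb=32)
host.crash_vm("web")   # the "db" VM keeps running with its slice intact
print(host.vms)
```

Each VM sees only the slice it was given, which is why an application inside it cannot tell that it is sharing the physical machine.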

Definition of the cloud

Let's now attempt to define cloud computing.

The cloud computing model is one that offers computing services such as compute, storage, databases, networking, software, machine learning, and analytics over the internet and on demand. You generally only pay for the time and services you use. The majority of cloud providers can provide massive scalability for many of their services and make it easy to scale services up and down.

Now, as much as we tried to nail it down, this is still a pretty broad definition. For example, in our definition, we specify that the cloud can offer software. That's a pretty broad term. Does the term software in our definition include the following?

- Code management

- Virtual desktops

- Email services

- Video conferencing

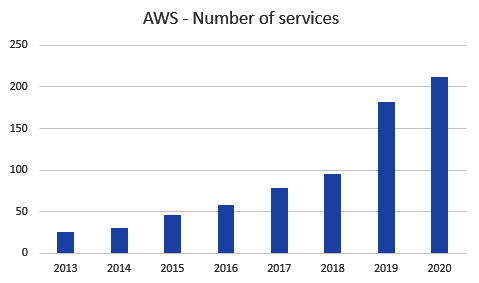

These are just a few examples of what may or may not be included as available services in a cloud environment. When it comes to AWS and other major cloud providers, the answer is yes. When AWS started, it only offered a few core services, such as compute (Amazon EC2) and basic storage (Amazon S3). As of 2020, AWS offers 212 services, including compute, storage, networking, databases, analytics, developer and deployment tools, and mobile apps, among others. For the individual examples given here, AWS offers the following:

- Code management: AWS CodeCommit, AWS CodePipeline, and AWS CodeDeploy

- Virtual desktops: Amazon WorkSpaces

- Email services: Amazon SES and Amazon WorkMail

- Video conferencing: Amazon Chime

As we will see throughout the book, this is a tiny sample of the many services that AWS offers. Additionally, since it was launched, AWS services and features have grown exponentially every year, as shown in the following figure:

Figure 1.1 – AWS – number of services

There is no doubt that the number of offerings will continue to grow at a similar rate for the foreseeable future. Having had the opportunity to work in the AWS offices in Seattle, I can report that AWS is hard at work creating these new services and eating their own dog food by using their existing services to create these new services.

Private versus public clouds

Up until a few years ago, one of the biggest and most common objections to hamper cloud adoption on a massive scale was the refrain that the cloud is not secure. To address this objection, cloud providers started offering private clouds: a whole infrastructure setup that only one company can access and is completely private to them. However, this privacy and security come at a price. One of the reasons why the cloud is so popular and affordable is that any resources that you are currently not using can be used by other customers that do need the capacity at that time, meaning they share the cost with you. Whoever uses the resources the most, pays the most. This cost-sharing disappears with private clouds.

Let's use an analogy to gain further understanding. The gig economy has great momentum. Everywhere you look, people are finding employment as contract workers. There are Uber drivers, people setting up Airbnbs, people doing contract work for Upwork. One of the reasons contract work is getting more popular is that it enables consumers to contract services that they may otherwise not be able to afford. Could you imagine how expensive it would be to have a private chauffeur? But with Uber or Lyft, you almost have a private chauffeur who can be at your beck and call within a few minutes of you summoning them.

A similar economy of scale happens with a public cloud. You can have access to infrastructure and services that would cost millions of dollars if you bought them on your own. Instead, you can have access to the same resources for a small fraction of the cost.

A private cloud just becomes a fancy name for a data center managed by a trusted third party, and all the elasticity benefits wither away.

Even though AWS, Azure, Google Cloud Platform (GCP), and the other popular cloud providers are considered mostly public clouds, there are some actions you can take to make them more private. As an example, AWS offers Amazon EC2 dedicated instances, which are EC2 instances that ensure that you will be the only user for a given physical server. Again, this comes at a cost.

Dedicated instance costs are significantly higher than on-demand EC2 instances. On-demand instances may be shared with other AWS users. As mentioned earlier in the chapter, you will never know the difference because of virtualization and hypervisor technology. One common use case for choosing dedicated instances is government regulations and compliance policies that require certain sensitive data to not be in the same physical server with other cloud users.

Truly private clouds are expensive to run and maintain, and for that reason, many of the resources and services offered by the major cloud providers reside in public clouds. But just because you are using a private cloud does not mean that it cannot be set up insecurely, and conversely, if you are running your workloads and applications on a public cloud, you can use security best practices and sleep well at night knowing that you are using state-of-the-art technologies to secure your sensitive data.

Additionally, most of the major cloud providers' clients use public cloud configurations, but there are a few exceptions even in this case. For example, the United States government intelligence agencies are a big AWS customer. As you can imagine, they have deep pockets and are not afraid to spend. In many cases with these government agencies, AWS will set up the AWS infrastructure and services on the premises of the agency itself. You can find out more about this here:

https://aws.amazon.com/federal/us-intelligence-community/

Now that we have gained a better understanding of cloud computing in general, let's get more granular and learn about how AWS does cloud computing.

What is AWS cloud computing?

Put simply, AWS is the undisputed market leader in cloud computing today, and even though there are a few worthy competitors, it doesn't seem like anyone will push them off the podium for a while. Why is this, and how can we be sure that they will remain a top player for years to come? Because this pattern has occurred in the history of the technology industry repeatedly. Geoffrey A. Moore, Paul Johnson, and Tom Kippola explained this pattern best a long time ago in their book The Gorilla Game: Picking Winners in High Technology.

Some important concepts covered in their book are listed here:

- There are two kinds of technology markets: Gorilla Games and Royalty Markets. In a Gorilla Game, the players are dubbed gorillas and chimps. In a Royalty Market, the participants are kings, princes, and serfs.

- Gorilla Games exist because the market leaders possess proprietary technology that makes it difficult for competitors to compete. This proprietary technology creates a moat that can be difficult to overcome.

- In Royalty Markets, the technology has been commoditized and standardized. In a Royalty Market, it's difficult to become a leader and it's easy to fall off the number one position.

- The more proprietary features a gorilla creates in their product and the bigger the moat they establish, the more difficult and expensive it becomes to switch to a competitor and the stronger the gorilla becomes.

- This creates a virtuous cycle for the market leader or gorilla. The market leader's product or service becomes highly desirable, which means that they can charge more for it and sell more of it. They can then reinvest that profit to make the product or service even better.

- Conversely, a vicious cycle is created for second-tier competitors or chimps. Their product or service is not as desirable, so even if they charge as much money as the market leader, because they don't have as many sales, their research and development budget will not be as large as the market leader's.

- The focus of this book is on technology, but if you are interested in investing in technology companies, the best time to invest in a gorilla is when the market is about to enter a period of hypergrowth. At this point, the gorilla might not be fully determined and it's best to invest in gorilla candidates and sell stock as it becomes obvious that they won't be a gorilla and reinvest the proceeds of that sale into the emerging gorilla.

- Once a gorilla is established, most often, the way that a gorilla is vanquished is by a complete change in the game, where a new disruptive technology creates a brand new game.

To get a better understanding, let's look at an example of a Royalty Market and an example of a Gorilla Game.

Personal computers and laptops – Back in the early 1980s when PCs burst onto the scene, many players emerged that sold personal computers, such as these:

- Dell

- Gateway

- IBM

- Hewlett Packard

I don't know about you, but whenever I buy a computer, I go to the store, see which computer is the cheapest and has the features I want, and pull the trigger regardless of the brand. This is the perfect example of a Royalty Market. It is difficult to differentiate yourself and stand out and there is little to no brand loyalty among consumers.

Personal computer operating systems – Whenever I buy a new computer, I make sure of one thing: that the computer comes with Microsoft Windows, the undisputed market leader in the space. Yes, the Macintosh operating system has been around for a long time, Linux has been around for a while making some noise, and the Google Chrome operating system is making some inroads, especially in the educational market. But ever since it was launched in November 1985, Microsoft Windows has kept the lion's share of the market (or should we say the gorilla's share?).

Of course, this is a subjective opinion, but I believe we are witnessing the biggest Gorilla Game in the history of computing with the advent of cloud computing. This is the mother of all competitive wars. Cloud vendors are not only competing to provide basic services, such as compute and storage, but are continuing to build more services on top of these core services to lock in their customers further and further. Vendor lock-in is not necessarily a bad thing. Lock-in, after all, is a type of golden handcuffs. Customers stay because they like the services they are being offered. But customers also realize that as they use more and more services, it becomes more and more expensive to transfer their applications and workloads to an alternate cloud provider.

Not all cloud services are highly intertwined with their cloud ecosystems. Take these scenarios, for example:

- Your firm may be using AWS services for many purposes, but it may be using WebEx, Microsoft Teams, Zoom, or Slack for its video conference needs instead of Amazon Chime. These services have little dependency on other underlying core infrastructure cloud services.

- You may be using Amazon SageMaker for artificial intelligence and machine learning projects, but you may be using the TensorFlow package in SageMaker as your development kernel, even though TensorFlow is maintained by Google.

- If you are using Amazon RDS and you choose MySQL as your database engine, you should not have too much trouble porting your data and schemas over to another cloud provider that also supports MySQL, if you decide to switch over.

With some other services, it will be a lot more difficult to switch. Here are some examples:

- Amazon DynamoDB is a NoSQL proprietary database only offered by AWS. If you want to switch over to another NoSQL database, porting it may not be a simple exercise.

- If you are using CloudFormation to define and create your infrastructure, it will be difficult, if not impossible, to use your CloudFormation templates to create infrastructure in other cloud provider environments. If the portability of your infrastructure scripts is important to you and you are planning on switching cloud providers, Terraform by HashiCorp may be a better alternative since Terraform is cloud-agnostic.

- If you have a graph database requirement and you decide to use Amazon Neptune (which is the native Amazon graph database offering), you may have a difficult time porting out of Amazon Neptune, since the development language and format can be quite dissimilar if you decide to use another graph database solution such as Neo4j or TigerGraph.

As far as we have come in the last 15 years with cloud technologies, I believe, and I think vendors realize, that these are still the early innings, and locking customers in now, while they are still deciding who their vendor is going to be, will be a lot easier than trying to do so after they pick a competitor.

A good example of one of those make-or-break decisions is the awarding of the Joint Enterprise Defense Infrastructure (JEDI) cloud computing contract by the Pentagon. JEDI is a $10 billion 10-year contract. As big as that dollar figure is, even more important is the fact that it would be nearly impossible for the Pentagon to switch to another vendor once the 10-year contract is up.

For that reason, even though Microsoft was initially awarded the contract, Amazon has sued the US government to potentially get them to change their mind and use AWS instead.

Let's delve a little deeper into how influential AWS currently is and how influential it has the potential to become.

The market share, influence, and adoption of AWS

It is hard to argue that AWS is not the gorilla in the cloud market. For the first 9 years of AWS's existence, Amazon did not break down its AWS sales and profits. As of January 2020, Microsoft does not fully break down its Azure revenue and profit. As of 2019, its quarterly reports disclosed the Azure revenue growth rate without reporting the actual revenue number, instead burying Azure revenues in a bucket called Commercial Cloud, which also includes items such as Office 365 revenue. Google, for a long time, was cagey about breaking down its GCP revenue. Google finally broke down its GCP revenue in February 2020.

The reason cloud providers are careful about reporting these raw numbers is precisely because of the Gorilla Game. Initially, AWS did not want to disclose numbers because they wanted to become the gorilla in the cloud market without other competitors catching wind of it. And if Microsoft and Google disclosed their numbers, it would reveal the exact size of the chasm that exists between them and AWS.

Even though AWS is the gorilla now, and it's quite difficult to dethrone the gorilla, it appears the growth rates for GCP and Azure are substantially higher than AWS's current growth rate. Analysts have pegged the growth rate for GCP and Azure at about 60% year on year, whereas AWS's recent year-on-year revenue growth is closer to 30% to 40%. But the revenue for Azure and GCP is from a much smaller base.

This practice of most cloud providers leaves the rest of us guessing as to what the exact market share and other numbers really are. But, analysts being analysts, they still try to make an educated guess.

For example, one recent analysis from Canalys Cloud Channels in 2019 puts AWS's share of the market at around 33% and the market share for its closest competitor, Azure, at around 17%.

Up until this point, AWS has done a phenomenal job of protecting their market share by adding more and more services, adding features to existing services, building higher-level functionality on top of the core services they already offer, and educating the masses on how to best use these services. It is hard to see how they could lose the pole position. Of course, anything is possible, including the possibility of government intervention and regulation, as occurred in the personal computer chip market and in the attempt the government made to break up Microsoft and their near monopoly on the personal operating system market.

We are in an exciting period when it comes to cloud adoption. Up until just a few years ago, many C-suite executives were leery of adopting cloud technologies to run their mission-critical and core services. A common concern was that they felt having on-premises implementations was more secure than running their workloads on the cloud.

It has become clear to most of them that running workloads on the cloud can be just as secure, if not more secure, than running them on-premises. There is no perfectly secure environment, and it seems that almost every other day we hear about sensitive information being left exposed on the internet by yet another company. But having an army of security experts on your side, as is the case with the major cloud providers, will often beat any security team that most companies can procure on their own.

The current state of the cloud market for most enterprises is a state of Fear Of Missing Out (FOMO). Chief executives are watching their competitors jumping on the cloud and they are concerned that they will be left behind if they don't take the leap as well.

Additionally, we are seeing an unprecedented level of disruption in many industries propelled by the power of the cloud. Let's take the example of Lyft and Uber. Both companies rely heavily on cloud services to power their infrastructure and old-guard companies in the space, such as Hertz and Avis, that rely on older on-premises technology are getting left behind. In fact, on May 22, 2020, Hertz filed for bankruptcy protection. Part of the problem is the convenience that Uber and Lyft offer by being able to summon a car on demand. Also, the pandemic that swept the world in 2020 did not help. But the inability to upgrade their systems to leverage cloud technologies no doubt played a role in their diminishing share of the car rental market.

Let's continue and learn some of the basic cloud terminology in general and AWS terminology in particular.

Basic cloud and AWS terminology

There is a constant effort by technology companies to offer common standards for certain technologies while providing exclusive and proprietary technology that no one else offers.

An example of this can be seen in the database market. The Structured Query Language (SQL) and the ANSI-SQL standard have been around for a long time. In fact, the American National Standards Institute (ANSI) adopted SQL as the SQL-86 standard in 1986.

Since then, database vendors have continuously been supporting this standard while offering a wide variety of extensions to this standard in order to make their products stand out and to lock in customers to their technology.

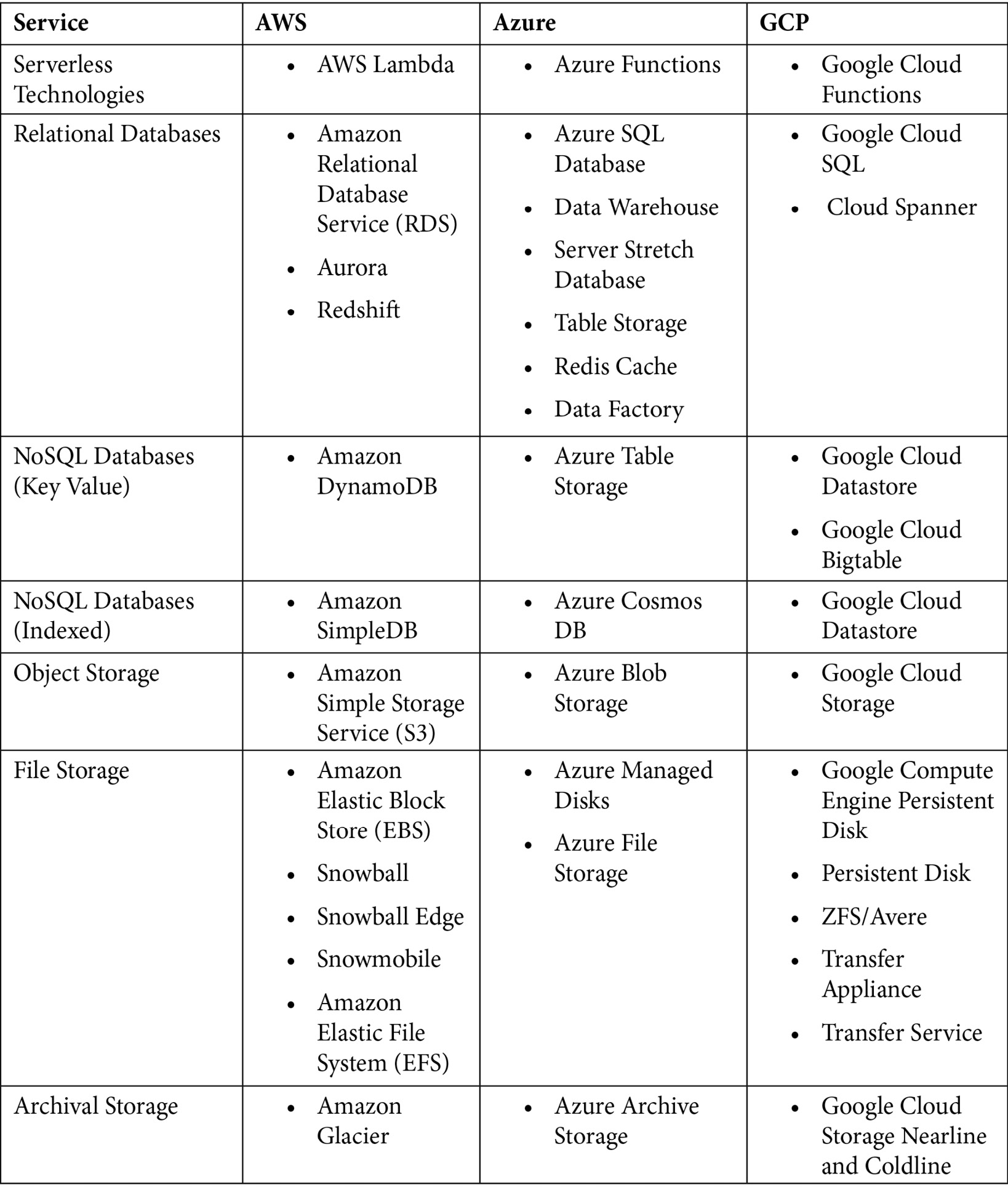

The cloud is no different. Cloud providers provide the same core functionality for a wide variety of customer needs, but they all feel compelled to name these services differently, no doubt in part to try to separate themselves from the rest of the pack and make it more difficult to switch out of their environments once companies commit to using them.

As an example, every major cloud provider offers compute services. In other words, it is simple to spin up a server with any provider, but they all refer to this compute service differently:

- AWS uses EC2 instances.

- Azure uses Azure VM.

- GCP uses Google Compute Engine.

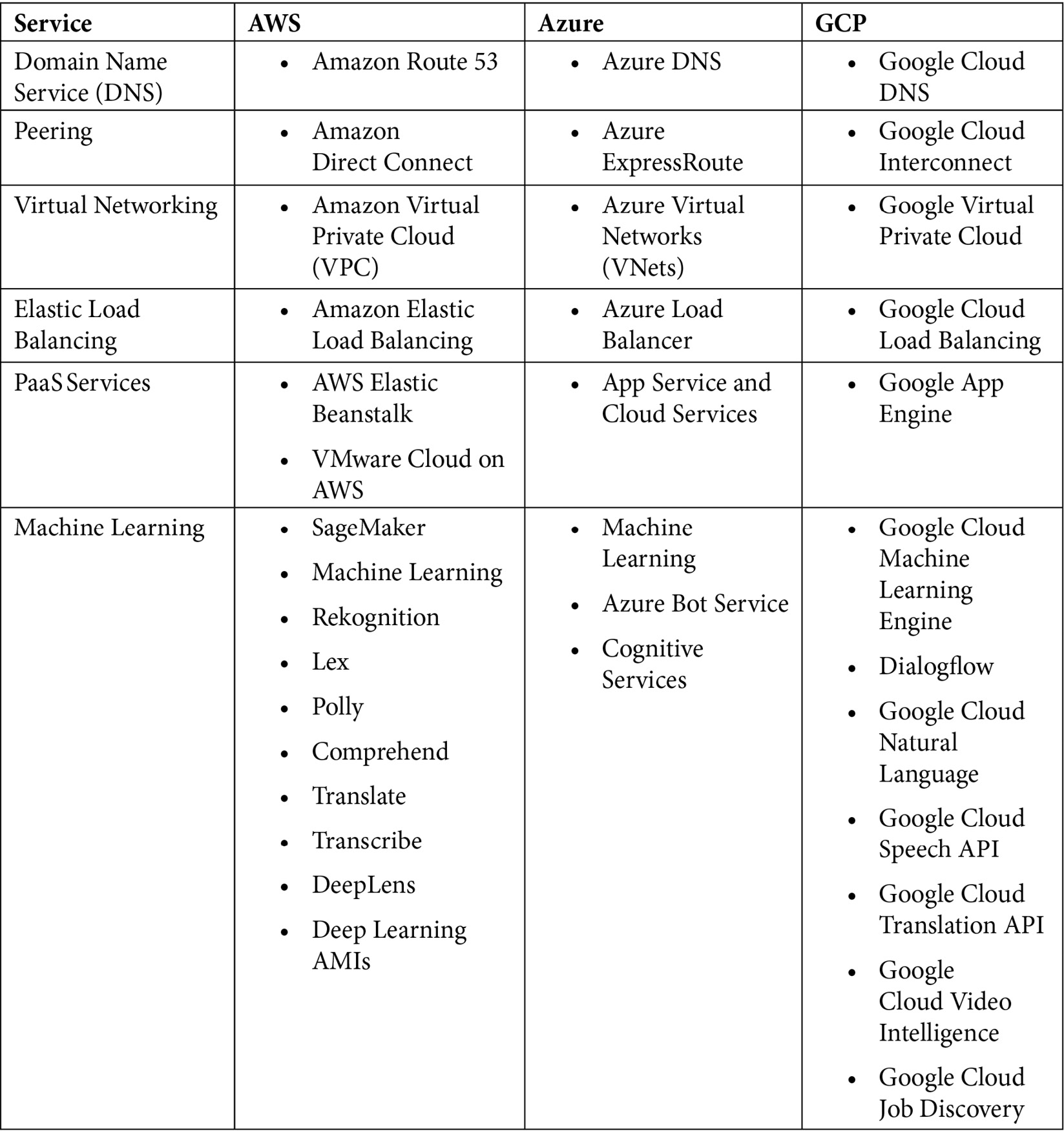

The following tables give a non-comprehensive list of the different core services offered by AWS, Azure, and GCP and the names used by each of them:

Table 1.1 – Cloud provider terminology and comparison (part 1)

These are some of the other services, including serverless technology services and database services:

Table 1.2 – Cloud provider terminology and comparison (part 2)

These are additional services:

Table 1.3 – Cloud provider terminology and comparison (part 3)

If you are confused by all the terms in the preceding tables, don't fret. We will learn about many of these services throughout the book and when to use each of them.

In the next section, we are going to learn why cloud services are becoming so popular and in particular why AWS adoption is so prevalent.

Why is cloud computing so popular?

Depending on who you ask, some estimates peg the global cloud computing market at around USD 370 billion in 2020, growing to about USD 830 billion by 2025. This implies a Compound Annual Growth Rate (CAGR) of around 18% for the period.
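The growth rate follows directly from the two market-size estimates. As a quick check, here is a small Python sketch of the standard CAGR formula, (end / start) raised to the power 1 / years, minus 1. The function name `cagr` is ours.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.18 means 18%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market-size estimates quoted in the text, in billions of USD.
rate = cagr(370, 830, 2025 - 2020)
print(f"Implied CAGR: {rate:.1%}")
```

The result is about 17.5% a year, consistent with the figure of around 18% quoted above.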

There are multiple reasons why the cloud market is growing so fast. Some of them are listed here:

- Elasticity

- Security

- Availability

- Faster hardware cycles

- System administration staff

- Faster time to market

Let's look at the most important one first.

Elasticity

Elasticity may be one of the most important reasons for the cloud's popularity. Let's first understand what it is.

Do you remember the feeling of going to a toy store as a kid? There is no feeling like it in the world. Puzzles, action figures, games, and toy cars are all at your fingertips, ready for you to play with them. There was only one problem: you could not take the toys out of the store. Your mom or dad always told you that you could only buy one toy. You always had to decide which one you wanted and invariably, after one or two weeks of playing with that toy, you got bored with it and the toy ended up in a corner collecting dust, and you were left longing for the toy you didn't choose.

What if I told you about a special, almost magical, toy store where you could rent toys for as long or as little as you wanted, and the second you got tired with the toy you could return it, change it for another toy, and stop any rental charges for the first toy? Would you be interested?

The difference between the first traditional store and the second magical store is what differentiates on-premises environments and cloud environments.

The first toy store is like having to set up infrastructure on your own premises. Once you purchase a piece of hardware, you are committed to it and will have to use it until you decommission it or sell it at a fraction of what you paid for it.

The second toy store is analogous to a cloud environment. If you make a mistake and provision a resource that's too small or too big for your needs, you can transfer your data to a new instance, shut down the old instance, and importantly, stop paying for that instance.

More formally defined, elasticity is the ability of a computing environment to adapt to changes in workload by automatically provisioning or shutting down computing resources to match the capacity needed by the current workload.

In AWS, as with the other main cloud providers, resources can be shut down without having to completely terminate them, and the billing for those resources will stop while they are shut down.

This distinction cannot be emphasized enough. Computing costs in a cloud environment on a per-unit basis may even be higher when comparing them with on-premises prices, but the ability to shut resources down and stop getting charged for them makes cloud architectures cheaper in the long run, often in a quite significant way. The only time when absolute on-premises costs may be lower than cloud costs is if workloads are extremely predictable and consistent. Let's look at exactly what this means by reviewing a few examples.

Web storefront

A popular use case for cloud services is to use them to run an online storefront. Website traffic in this scenario will be highly variable depending on the day of the week, whether it's a holiday, the time of day, and other factors.

This kind of scenario is ideally suited for a cloud deployment. In this case, we can set up load balancers, together with auto scaling policies, that automatically start and shut down compute resources as needed. Additionally, we can set up policies that allow database storage to grow as needed.
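The scaling decision for a storefront like this can be sketched in a few lines of Python. This is a toy stand-in for what a scaling policy does, not the algorithm any cloud provider actually uses. The function name, the per-server capacity of 250 requests per second, and the traffic figures are all made-up assumptions.

```python
import math


def desired_instances(requests_per_second, capacity_per_instance,
                      min_instances=1, max_instances=20):
    """Number of servers needed for the current load, clamped to a range."""
    needed = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Hypothetical storefront traffic, in requests per second.
traffic = [("overnight", 40), ("weekday afternoon", 900), ("holiday sale", 4200)]
for label, load in traffic:
    print(label, desired_instances(load, capacity_per_instance=250))
```

With these numbers, the fleet runs one server overnight, four on a weekday afternoon, and seventeen during the holiday sale. The minimum keeps the site reachable when traffic is near zero, and the maximum caps the bill if traffic spikes unexpectedly.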

Apache Spark and Hadoop workloads

The popularity of Apache Spark and Hadoop continues to increase. Many Spark clusters don't necessarily need to run consistently. They perform heavy batch computing for a period and then can be idle until the next batch of input data comes in. A specific example would be a cluster that runs every night for 3 or 4 hours and only during the working week.

In this instance, the shutdown of resources may be best managed on a schedule rather than by using demand thresholds. Or, we could set up triggers that automatically shut down resources once the batch jobs are completed.
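A schedule-based rule of this kind is simple to express. The sketch below assumes a hypothetical batch window of 01:00 to 05:00, Monday through Friday. The window, the constant names, and the function name `cluster_should_run` are illustrative choices, not taken from any AWS service.

```python
from datetime import datetime, time

# Hypothetical batch window: 01:00 to 05:00, Monday through Friday.
WINDOW_START = time(1, 0)
WINDOW_END = time(5, 0)


def cluster_should_run(now):
    """True if `now` falls inside the nightly weekday batch window."""
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and WINDOW_START <= now.time() < WINDOW_END


hours_needed = 4 * 5        # four hours a night, five nights a week
hours_in_week = 24 * 7
print(f"Cluster is needed {hours_needed / hours_in_week:.0%} of the week")
```

Under these assumptions, the cluster is needed for only about 12% of the week, so a scheduler that calls something like `cluster_should_run(datetime.now())` and shuts the cluster down outside the window avoids paying for the remaining 88%.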

Online storage

Another common use case in technology is file and object storage. Some storage services may grow organically and consistently. The traffic patterns can also be consistent.

This may be one example where using an on-premises architecture may make sense economically. In this case, the usage pattern is consistent and predictable.

Elasticity is by no means the only reason that the cloud is growing in leaps and bounds. Having the ability to easily enable world-class security for even the simplest applications is another reason why the cloud is becoming pervasive. Let's understand this at a deeper level.

Security

The perception of on-premises environments being more secure than cloud environments was a common reason that companies big and small would not migrate to the cloud. More and more enterprises are now realizing that it is extremely hard and expensive to replicate the security features that are provided by cloud providers such as AWS. Let's look at a few of the measures that AWS takes to ensure the security of its systems.

Physical security

You probably have a better chance of getting into the Pentagon without a badge than getting into an Amazon data center.

AWS data centers are continuously upgraded with the latest surveillance technology. Amazon has had decades to perfect the design, construction, and operation of its data centers.

AWS has been providing cloud services for over 15 years, and it has an army of technologists, solution architects, and some of the brightest minds in the business. It leverages this experience and expertise to create state-of-the-art data centers. These centers are in nondescript facilities. You could drive by one and never know what it is. If you do find out where one is, it will be extremely difficult to get in. Perimeter access is heavily guarded. Visitor access is strictly limited, and visitors must always be accompanied by an Amazon employee.

Every corner of the facility is monitored by video surveillance, motion detectors, intrusion detection systems, and other electronic equipment.

Amazon employees with access to the building must authenticate themselves four times to step on the data center floor.

Only Amazon employees and contractors who have a legitimate right to be in a data center can enter. Any other employee is restricted. As soon as an employee no longer has a business need to enter a data center, their access is revoked, even if they have only moved to another Amazon department and remain with the company.

Lastly, audits are routinely performed and are part of the normal business process.

Encryption

AWS makes it extremely simple to encrypt data at rest and data in transit. It also offers a variety of options for encryption. For example, for encryption at rest, data can be encrypted on the server side, or it can be encrypted on the client side. Additionally, the encryption keys can be managed by AWS, or you can use keys that are managed by you.
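As an illustration of the server-side options, the following sketch builds the parameters for an Amazon S3 upload request. The helper name, bucket, object key, and KMS key ID are hypothetical placeholders. The `ServerSideEncryption` and `SSEKMSKeyId` parameter names are the ones accepted by the S3 PutObject operation, but treat the snippet as a sketch rather than a complete upload routine.

```python
def put_object_params(bucket, key, body, kms_key_id=None):
    """Build keyword arguments for an S3 PutObject request with
    server-side encryption enabled.

    With no kms_key_id, keys managed by S3 are requested (SSE-S3);
    with one, a KMS key that you control is used (SSE-KMS).
    """
    params = {"Bucket": bucket, "Key": key, "Body": body}
    if kms_key_id is None:
        params["ServerSideEncryption"] = "AES256"
    else:
        params["ServerSideEncryption"] = "aws:kms"
        params["SSEKMSKeyId"] = kms_key_id
    return params

# Hypothetical bucket, object key, and KMS key ID.
aws_managed = put_object_params("example-bucket", "report.csv", b"data")
customer_managed = put_object_params(
    "example-bucket", "report.csv", b"data",
    kms_key_id="1234abcd-12ab-34cd-56ef-1234567890ab")

# With boto3 installed and credentials configured, either dictionary
# could be passed as: boto3.client("s3").put_object(**aws_managed)
print(aws_managed["ServerSideEncryption"])
print(customer_managed["ServerSideEncryption"])
```

Client-side encryption follows a different path: the data is encrypted before it leaves your application, so the service only ever receives ciphertext.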

Compliance standards supported by AWS

AWS has robust controls in place to allow users to maintain security and data protection. We'll be talking more about how AWS shares security responsibilities with its customers, but the same is true with how AWS supports compliance. AWS provides many attributes and features that enable compliance with many standards established by different countries and standards organizations. By providing these features, AWS simplifies compliance audits. AWS enables the implementation of security best practices and many security standards, such as these:

- ITAR

- SOC 1/SSAE 16/ISAE 3402 (formerly SAS 70)

- SOC 2

- SOC 3

- FISMA, DIACAP, and FedRAMP

- PCI DSS Level 1

- DOD CSM Levels 1-5

- ISO 9001/ISO 27001/ISO 27017/ISO 27018

- MTCS Level 3

- FIPS 140-2

- HITRUST

In addition, AWS enables the implementation of solutions that can meet many industry-specific standards, such as these:

- Criminal Justice Information Services (CJIS)

- Family Educational Rights and Privacy Act (FERPA)

- Cloud Security Alliance (CSA)

- Motion Picture Association of America (MPAA)

- Health Insurance Portability and Accountability Act (HIPAA)

Another important thing that can explain the meteoric rise of the cloud is how you can stand up high-availability applications without having to pay for the additional infrastructure needed to provide these applications. Architectures can be crafted in such a way that additional resources are started when other resources fail. This ensures that we only bring additional resources when they are necessary, keeping costs down. Let's analyze this important property of the cloud in a deeper fashion.

Availability

When we deploy infrastructure in an on-premises environment, we have two choices. We can purchase just enough hardware to service the current workload, or we can make sure that there is enough excess capacity to account for any failures that may occur. This extra capacity and the elimination of single points of failure is not as simple as it may first seem. There are many places where single points of failure may exist and need to be eliminated:

- Compute instances can go down, so we need to have a few on standby.

- Databases can get corrupted.

- Network connections can be broken.

- Data centers can flood or burn down.

This last one may seem like a hypothetical example, but a fire was reported in the suburb of Tama in Tokyo, Japan, at what was apparently an AWS data center under construction. Here is a report of the incident:

https://www.datacenterdynamics.com/en/news/aws-building-site-burns-in-fatal-tokyo-fire-reports-say/

Using the cloud simplifies the "single point of failure" problem. We already determined that provisioning software in an on-premises data center can be a long and arduous process. In a cloud environment, spinning up new resources can take just a few minutes. So, we can configure minimal environments knowing that additional resources are a click away.

AWS data centers are built in different regions across the world. All data centers are "always on" and delivering services to customers. AWS does not have "cold" data centers. Their systems are extremely sophisticated and automatically route traffic to other resources if a failure occurs. Core services are always installed in an N+1 configuration. In the case of a complete data center failure, there should be the capacity to handle traffic using the remaining available data centers without disruption.
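The N+1 idea can be expressed as a simple capacity check: after losing any one site, the remaining sites must still be able to carry the peak load. The sketch below is a simplified model with invented capacity figures, not a description of how AWS performs its own capacity planning.

```python
def survives_single_failure(zone_capacities, peak_load):
    """Return True if the remaining zones can carry peak_load after
    the largest single zone is lost (a simple N+1 check)."""
    if len(zone_capacities) < 2:
        # With a single zone, there is nothing left to fail over to.
        return False
    remaining = sum(zone_capacities) - max(zone_capacities)
    return remaining >= peak_load

# Hypothetical capacities, in requests per second.
print(survives_single_failure([500, 500, 500], peak_load=900))  # True
print(survives_single_failure([500, 500], peak_load=900))       # False
```

The same check is worth applying to your own deployments: spreading a workload across three zones rather than two means each zone needs less spare capacity to absorb a failure.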

AWS enables customers to deploy instances and persist data in more than one geographic region and across various data centers within a region.

Data centers are deployed in fully independent zones. They are constructed with enough separation between them that the likelihood of a natural disaster affecting two of them at the same time is very low. Additionally, data centers are not built in flood zones.

To increase resilience, data centers have discrete Uninterruptible Power Supplies (UPSes) and onsite backup generators. They are also connected to multiple electric grids from multiple independent utility providers. Data centers are connected redundantly to multiple tier-1 transit providers. Doing all this minimizes single points of failure.

Faster hardware cycles

When hardware is provisioned on-premises, it starts becoming obsolete from the instant that it is purchased. Hardware prices have been on an exponential downtrend since the first computer was invented, so the server you bought a few months ago may now be cheaper, or a new version of the server may be out that's faster and still costs the same. However, waiting until hardware improves or becomes cheaper is not an option. At some point, a decision and a purchase need to be made.

Using a cloud provider instead eliminates all these problems. For example, whenever AWS offers new and more powerful processor types, using them is as simple as stopping an instance, changing its instance type, and starting the instance again. In many cases, AWS may keep the price the same even when better and faster processors and technology become available.
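The stop, change, and start sequence can be sketched with the AWS SDK for Python (boto3). The function name, instance ID, and target type below are placeholders, and the sketch assumes an instance whose type can be changed while it is stopped; it omits error handling and compatibility checks between the old and new types.

```python
def resize_instance(ec2, instance_id, new_type):
    """Stop an instance, change its type, and start it again.

    `ec2` is expected to behave like boto3.client("ec2").
    """
    ec2.stop_instances(InstanceIds=[instance_id])
    # The type can only be changed once the instance is fully stopped.
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[instance_id])
    ec2.modify_instance_attribute(
        InstanceId=instance_id, InstanceType={"Value": new_type})
    ec2.start_instances(InstanceIds=[instance_id])

# Example usage (requires boto3 and AWS credentials; the instance ID
# and target type are placeholders):
#   import boto3
#   resize_instance(boto3.client("ec2"), "i-0123456789abcdef0", "m5.large")
```

Passing the client in as a parameter keeps the function easy to exercise with a stand-in object before pointing it at a real account.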

System administration staff

An on-premises implementation may require full-time system administration staff and a process to ensure that the team remains fully staffed. By using cloud services, many of these tasks can be handled by the cloud providers, allowing you to focus on core application maintenance and functionality and not have to worry about infrastructure upgrades, patches, and maintenance.

By offloading this task to the cloud provider, costs can come down because instead of having a dedicated staff, the administrative duties can be shared with other cloud customers.

The five pillars of a well-architected framework

That all leads us nicely into this section. The reason the cloud in general and AWS in particular are so popular is that they simplify the development of well-architected frameworks. If there is one must-read white paper from AWS, it is the paper titled AWS Well-Architected Framework, which spells out the five pillars of a well-architected framework. The full paper can be found here:

https://d1.awsstatic.com/whitepapers/architecture/AWS_Well-Architected_Framework.pdf

In this section, we will summarize the main points about those five pillars.

First pillar – security

In both on-premises and cloud architectures, security should always be a high priority. All aspects of security should be considered, including data encryption and protection, access management, infrastructure security, monitoring, and breach detection and inspection.

To enable system security and to guard against nefarious actors and vulnerabilities, AWS recommends these architectural principles:

- Always enable traceability.

- Apply security at all levels.

- Implement the principle of least privilege.

- Secure the system at all levels: application, data, operating system, and hardware.

- Automate security best practices.
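The principle of least privilege is easiest to see in an access policy. The sketch below generates an IAM policy document that allows reading objects from a single S3 bucket and nothing else. The helper name and bucket name are hypothetical; the document layout follows the standard IAM policy JSON format.

```python
import json

def read_only_bucket_policy(bucket_name):
    """Return an IAM policy document that only allows reading objects
    from one bucket. Everything not listed is implicitly denied."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }

# Hypothetical bucket name used for illustration.
policy = read_only_bucket_policy("example-reports-bucket")
print(json.dumps(policy, indent=2))
```

A broader policy, such as one using `s3:*` on every resource, would be quicker to write but would grant far more access than a reporting job needs.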

Almost as important as security is the next pillar – reliability.

Second pillar – reliability

Another characteristic of a well-architected framework is the minimization or elimination of single points of failure. Ideally, every component should have a backup, and the backup should be able to come online as quickly as possible and in an automated manner, without the need for human intervention. Another applicable concept to support reliability is the idea of self-healing systems. An example of this is how Amazon S3 handles data replication. At any given time, there are at least six copies of any object stored in Amazon S3. If, for some reason, one of the resources storing one of these copies fails, AWS will automatically recover from this failure, mark that resource as unavailable, and create another copy of the object using a healthy resource to keep the number of copies at six. When using AWS services that are not managed by AWS and are instead managed by you, make sure that you are following a similar pattern to avoid data loss and service interruption.
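If you manage replication yourself, the self-healing pattern boils down to a reconciliation loop: compare the copies you have with the copies you want, and re-create whatever is missing on healthy resources. The toy model below illustrates that loop; the node names and the target of three copies are invented, and it says nothing about how Amazon S3 is implemented internally.

```python
def heal(replicas, healthy_nodes, target_copies):
    """Drop copies held on failed nodes and re-create them on healthy
    nodes until target_copies is reached (if enough nodes exist)."""
    surviving = [n for n in replicas if n in healthy_nodes]
    spare = [n for n in sorted(healthy_nodes) if n not in surviving]
    missing = target_copies - len(surviving)
    return surviving + spare[:max(missing, 0)]

# Hypothetical cluster: node-2 has failed and is no longer healthy.
replicas = ["node-1", "node-2", "node-3"]
healthy = {"node-1", "node-3", "node-4", "node-5"}
print(heal(replicas, healthy, target_copies=3))
# ['node-1', 'node-3', 'node-4']
```

Running such a check on a schedule, or in response to health events, is what removes the need for human intervention after a component failure.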

The well-architected framework paper recommends these design principles to enhance reliability:

- Continuously test backup and recovery processes.

- Design systems so that they can automatically recover from a single component failure.

- Leverage horizontal scalability whenever possible to enhance overall system availability.

- Use automation to provision and shut down resources depending on traffic and usage to minimize resource bottlenecks.

- Manage change with automation.

Whenever possible, changes to the infrastructure should occur in an automated fashion.

Third pillar – performance efficiency

In some respects, over-provisioning resources is just as bad as not having enough capacity to handle your workloads. Launching an instance that is constantly idle or almost idle is a sign of bad design. Resources should not be at full capacity, but they should be efficiently utilized. AWS provides a variety of features and services to assist in the creation of architectures with high efficiency. However, we still have a responsibility to ensure that the architectures we design are suitable and correctly sized for our applications.

When it comes to performance efficiency, the recommended design best practices are as follows:

- Democratize advanced technologies.

- Take advantage of AWS's global infrastructure to deploy your application globally with minimal cost and to provide low latency.

- Leverage serverless architectures wherever possible.

- Deploy multiple configurations to see which one delivers better performance.

Efficiency is closely related to the next pillar – cost optimization.

Fourth pillar – cost optimization

This pillar is related to the third pillar. If your architecture is efficient, accurately handles varying application loads, and can downshift when traffic slows down, your costs will be minimized.

Additionally, your architecture should be able to identify when resources are not being used at all and allow you to stop them or, even better, stop these unused compute resources for you. In this department, AWS also provides you with the ability to turn on monitoring tools that will automatically shut down resources if they are not being utilized. We strongly encourage you to adopt a mechanism to stop these resources once they are identified as idle. This is especially useful in development and test environments.
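The logic behind such a mechanism is straightforward. The sketch below flags instances whose average CPU utilization falls under a threshold; the instance names, the sample values, and the 5% threshold are made-up assumptions, and in practice the samples would come from a monitoring service rather than a hard-coded dictionary.

```python
def find_idle(cpu_samples_by_instance, threshold_percent=5.0):
    """Return the IDs of instances whose average CPU utilization is
    below threshold_percent. Instances with no samples are skipped."""
    idle = []
    for instance_id, samples in cpu_samples_by_instance.items():
        if samples and sum(samples) / len(samples) < threshold_percent:
            idle.append(instance_id)
    return sorted(idle)

# Hypothetical CPU utilization samples (percent) per instance.
usage = {
    "dev-box-1": [1.0, 2.0, 0.5],
    "web-1": [45.0, 60.0, 38.0],
    "test-runner": [0.0, 0.0, 3.0],
}
print(find_idle(usage))  # ['dev-box-1', 'test-runner']
```

The flagged instances are candidates for being stopped automatically, which is where most of the savings in development and test environments come from.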

To enhance cost optimization, these principles are suggested:

- Use a consumption model.

- Leverage economies of scale whenever possible.

- Reduce expenses by limiting the use of company-owned data centers.

- Constantly analyze and account for infrastructure expenses.

Whenever possible, use AWS-managed services instead of services that you need to manage yourself. This should lower your administration expenses.

Fifth pillar – operational excellence

The operational excellence of a workload should be measured across these dimensions:

- Agility

- Reliability

- Performance

The ideal way to optimize these metrics is to standardize and automate the management of these workloads. To achieve operational excellence, AWS recommends these principles:

- Provision infrastructure through code (for example, via CloudFormation).

- Align operations and applications with business requirements and objectives.

- Change your systems by making incremental and regular changes.

- Constantly test both normal and abnormal scenarios.

- Record lessons learned from operational events and failures.

- Write down and keep the standard operations procedures manual up to date.
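As an example of the first principle, infrastructure can be described in a template that lives in version control alongside application code. The sketch below builds a minimal CloudFormation template in Python and prints it as JSON. The logical resource name `ExampleBucket` and the description are arbitrary choices, and the template is intentionally tiny.

```python
import json

# A minimal CloudFormation template describing one versioned S3 bucket.
# "ExampleBucket" is a hypothetical logical name chosen for this sketch.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Minimal example stack with one S3 bucket",
    "Resources": {
        "ExampleBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"}
            },
        }
    },
}

print(json.dumps(template, indent=2))
```

Because the template is plain text, changes to it can be reviewed, tested, and rolled out incrementally, which ties in with several of the other principles in the list.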

AWS users need to constantly evaluate their systems to ensure that they are following the recommended principles of the AWS Well-Architected Framework paper and that they comply with and follow architecture best practices.

Building credibility and getting certified

It is hard to argue that the cloud is not an important technology shift. We have established that AWS is the clear market and thought leader in the cloud space.

Comparing the cloud to an earthquake, we could say that it started slowly as a small rumbling that started getting louder and louder, and we are now at a point where the walls are shaking and it's only getting stronger.

In The market share, influence, and adoption of AWS section, we introduced the concept of FOMO. There, we mentioned that enterprises are now eager to adopt cloud technologies because they do not want to fall behind their competition and become obsolete.

Hopefully, by now you are excited to learn more about AWS and other cloud providers, or at the very least, you're getting a little nervous and catching a little FOMO yourself.

We will devote the rest of this chapter to showing you the path of least resistance for how to become an AWS guru and someone that can bill themselves as an AWS expert.

As with other technologies, it is hard to become an expert without hands-on experience, and it's hard to get hands-on experience if you can't demonstrate that you're an expert. The best method, in my opinion, that you can use to crack this chicken-and-egg problem is to get certified.

Fortunately, AWS offers a wide array of certifications that will demonstrate to your potential clients and employers your deep AWS knowledge and expertise.

As AWS creates more and more services, it continues to offer new certifications (and render some of them obsolete) aligned with these new services.

Let's review the certifications available as of August 2020.

AWS Certified Cloud Practitioner – Foundational

This is the most basic certification offered by AWS. It is meant to demonstrate a broad-strokes understanding of the core services and foundational knowledge of AWS. It is also a good certification for non-technical people who need to be able to communicate using the AWS lingo but are not necessarily going to be configuring or developing in AWS.

This certification is ideal to demonstrate a basic understanding of AWS technologies for people such as salespeople, business analysts, marketing associates, executives, and project managers.

AWS Certified Solutions Architect – Associate

Important note

There is a new exam version (SAA-C02) as of March 22, 2020.

This is the most popular certification offered by AWS. Many technically minded developers and administrators skip taking the Cloud Practitioner certification and start by taking this certification instead. If you are looking to demonstrate technical expertise in AWS, obtaining this certification is a good start and the bare minimum to demonstrate AWS proficiency.

AWS Certified Developer – Associate

Obtaining this certification will demonstrate your ability to design, develop, and deploy applications in AWS. Even though this is a Developer certification, do not expect to see any code in any of the questions during the exam. However, having knowledge of at least one programming language supported by AWS will help you to achieve this certification. Expect to see many of the same concepts and similar questions to what you would see in the Solutions Architect certification.

AWS Certified SysOps Administrator – Associate

Obtaining this certification will demonstrate to potential employers and clients that you have experience in deploying, configuring, scaling up, managing, and migrating applications using AWS services. You should expect the difficulty level of this certification to be a little bit higher than the other two Associate-level certifications, but also expect quite a bit of overlap in the type of questions that will be asked with this certification and the other two Associate-level certifications.

AWS Certified Solutions Architect – Professional

This certification, together with the DevOps Engineer – Professional certification, is at least two or three times harder than the Associate-level certifications. Getting this certification will demonstrate to employers that you have a deep and thorough understanding of AWS services, best practices, and optimal architectures based on the particular business requirements for a given project. Obtaining this certification shows potential employers that you are an expert in the design and creation of distributed systems and applications on the AWS platform. It used to be that having at least one of the Associate-level certifications was a prerequisite in order to sit the Professional-level certifications, but AWS has eliminated that requirement.

AWS Certified DevOps Engineer – Professional

This advanced AWS certification validates knowledge on how to provision, manage, scale, and secure AWS resources and services. Obtaining this certification will demonstrate to potential employers that you can run their DevOps operations and that you can proficiently develop solutions and applications in AWS.

AWS Certified Advanced Networking – Specialty

This AWS specialty certification demonstrates that you possess the skills to design and deploy AWS services as part of a comprehensive network architecture and that you know how to scale using best practices. Together with the Security – Specialty certification, this is one of the hardest certifications to obtain.

AWS Certified Security – Specialty

Possessing the AWS Certified Security – Specialty certification demonstrates to potential employers that you are well versed in the ins and outs of AWS security. It shows that you know security best practices for encryption at rest, encryption in transit, user authentication and authorization, penetration testing, and generally being able to deploy AWS services and applications in a secure manner that aligns with business requirements.

AWS Certified Machine Learning – Specialty

This is a good certification to have in your pocket if you are a data scientist or a data analyst. It shows to potential employers that you are familiar with many of the core machine learning concepts, as well as the AWS services that can be used to deliver machine learning and artificial intelligence projects, such as these:

- Amazon SageMaker

- Amazon Rekognition

- Amazon Comprehend

- Amazon Translate

- Amazon Lex

- Amazon Kinesis

- Amazon DynamoDB

AWS Certified Alexa Skill Builder – Specialty

This is a focused certification. It tests for a small subset of services that are used to deliver Alexa skills.

AWS Certified Database – Specialty

Having this certification under your belt demonstrates to potential employers your mastery of the persistence services in AWS and your deep knowledge of the best practices needed to manage them.

Important note

This is a brand new certification as of April 6, 2020.

Some of the services tested are these:

- Amazon RDS

- Amazon Neptune

- Amazon DynamoDB

- Amazon Kinesis

- Amazon DocumentDB

AWS Certified Data Analytics – Specialty

Completing this certification demonstrates to employers that you have a good understanding of the concepts needed to perform data analysis on petabyte-scale datasets.

Important note

This has a new certification name and exam version as of April 13, 2020 (formerly AWS Certified Big Data – Specialty).

This certification shows your ability to design, implement, and deploy analytics solutions that can deliver insights by enabling the visualization of data and implementing the appropriate security measures. Some of the services covered are listed here:

- Amazon QuickSight

- Amazon Kinesis

- Amazon DynamoDB

Learning tips and tricks to obtain AWS certifications

Now that we have learned about the various certifications offered by AWS, let's learn about some of the strategies we can use to get these certifications with the least amount of work possible and what we can expect as we prepare for these certifications.

Focus on one cloud provider

Some enterprises are trying to adopt a cloud-agnostic or multi-cloud strategy. The idea behind this strategy is to not have a dependency on only one cloud provider. In theory, this seems like a good idea, and some companies such as Databricks, Snowflake, and Cloudera offer their wares so that they can run using the most popular cloud providers.

However, this agnosticism comes with some difficult choices. One way to implement this strategy is to choose the least common denominator, for example, only using compute instances so that workloads can be deployed on various cloud platforms. Implementing this approach means that you cannot use the more advanced services offered by cloud providers. For example, using AWS Glue in a cloud-agnostic fashion is quite difficult, if not impossible.

Another way that a multi-cloud strategy can be implemented is by using the more advanced services, but this means that your staff will have to know how to use these services for all the cloud providers you decide to use. To use the common refrain, you will end up being a jack of all trades and a master of none.

Similarly, it is difficult to be a cloud expert across vendors at an individual level. It is recommended to pick one cloud provider and try to become an expert on that one stack. AWS, Azure, and GCP, to name the most popular options, offer an immense amount of services that continuously change and get enhanced, and they keep adding more services. Keeping up with one of these providers is not an easy task. Keeping up with all three, in my opinion, is close to impossible.

Pick one and dominate it.

Focus on the Associate-level certifications

As we mentioned before, there's quite a bit of overlap between the Associate-level certifications. In addition, the jump in difficulty between the Associate-level certifications and the Professional-level ones is quite steep.

I highly recommend sitting at least two, if not all three, of the Associate-level certifications before attempting the Professional-level certifications. Not only will this method prepare you for the Professional certifications, but having multiple Associate certifications will make you stand out against others that only have one Associate-level certification.

Get experience wherever you can

AWS recommends having 1 year of experience before taking the Associate-level certifications and 2 years of experience before you sit the Professional-level certifications. This may seem like a catch-22 situation. How can you get experience if you are not certified?

There are a couple of loopholes in this recommendation. First, it's a recommendation and not a mandatory requirement. Second, they mention that experience is required, but not necessarily work experience. This means that you can get experience as you are training and studying for the exam.

I can tell you from personal experience that work experience is not required. I personally passed the two Professional certifications before I engaged in my first AWS project.

Let's spend some time now addressing some of the questions that frequently come up while preparing to take these certifications.

Some frequently asked questions about the AWS certifications

I have had the opportunity to take and pass 9 of the 12 certifications offered by AWS. In addition, as part of my job, I have had the good fortune of being able to help hundreds of people to get certified. The next section will have a list of frequently asked questions that you will not find in the AWS FAQ section.

What is the best way to get certified?

Before we get to the best way to get certified, let's look at the worst way. Amazon offers extremely comprehensive documentation. You can find this documentation here:

This is a great place to help you troubleshoot issues you may encounter when you are directly working with AWS services or perhaps to correctly size the services that you are going to be using. It is not, however, a good place to study for the exams. It will get overwhelming quickly and much of the material you will learn about will not be covered in the exams.

The better way to get certified is to use the training materials that AWS specifically provides for certification, starting with the roadmaps of what will be covered in each individual certification. These roadmaps are a good first step to understanding the scope of each individual exam.

You can begin to learn about all these roadmaps, or learning paths, as AWS likes to call them, here: https://aws.amazon.com/training/learning-paths/.

In these learning paths, you will find a combination of free online courses as well as paid intensive training sessions. While the paid classes may be helpful, in my opinion, they are not mandatory for you to pass the exam.

Before you look at the learning paths, the first place to go to find out the scope of each certification is the study guides available for each certification. In these study guides, you will learn at a high level what will and what won't be covered for each individual exam.

For example, the study guide for the AWS Cloud Practitioner certification can be found here:

Now, while the training provided by AWS may be sufficient to pass the exams, and I know plenty of folks who have passed the certifications using only those resources, there are plenty of third-party companies that specialize in training people with a special focus on the certifications. The choices are almost endless, but there are a few companies that I can recommend and that have a great reputation in this space.

Acloud.guru

Acloud.guru has been around since 2015, which is a long time in cloud years. Acloud.guru has courses for most of the AWS certifications. In addition, it also offers courses for Azure and GCP certifications. Finally, it has a few other courses unrelated to certifications that are also quite good.

It constantly updates and refreshes its content, which is a good thing because AWS is also constantly changing its certifications to align with new services and features.

The company was started in Melbourne, Australia, by Sam and Ryan Kroonenburg, two Australian brothers. Initially, Ryan was the instructor for all the courses, but they now have many other experts on their staff to help with the course load.

They used to charge by course, but a few years back they changed their model to a monthly subscription, and signing up for it gives you access to the whole site.

The training can be accessed here: https://acloud.guru/.

Acloud.guru is the site that I have used the most to prepare for my certifications. This is how I recommend tackling the training:

- Unless you have previous experience with the covered topics, watch all the training videos at least once. If it's a topic you feel comfortable with, you can play the videos at a higher speed, and then you will be able to watch the full video faster.

- For video lessons that you find difficult, watch them again. You don't have to watch all the videos again – only the ones that you found difficult.

- Make sure to take any end-of-section quizzes.

- Once you are done watching the videos, the next step is to attempt some practice exams. One of my favorite features of Acloud.guru is the exam simulator. Keep on taking practice exams until you feel confident and you are consistently correctly answering a high percentage of the questions (anywhere between 80 and 85%, depending on the certification).

The questions provided in the exam simulator will not be the same as the ones from the exam, but they will be of a similar difficulty level and they will all be in the same domains and often about similar concepts and topics.

By using the exam simulator, you will achieve a couple of things. First, you will be able to gauge your progress and determine whether you are ready for the exam. My suggestion is to keep on taking the exam simulator tests until you are consistently scoring 85% or above. Most of the real certifications require you to answer 75% of the questions correctly, so consistently scoring a little higher than that should give you a comfortable margin to pass the exam.

Some of the exams, such as the Security – Specialty exam, require a higher percentage of correct answers, so you should adjust accordingly.

Using the exam simulator will also enable you to figure out which domains you are weak on. After taking a whole exam in the simulator, you will get a list detailing exactly which questions you got right and which questions were wrong, and they will all be classified by domain.

So, if you get a low score on a certain domain, you know that's the domain that you need to focus on when you go back and review the videos again.

Lastly, you will be able to learn new concepts by simply taking the tests in the exam simulator. Let's now learn about another popular site that I highly recommend for your quest toward certification.

Linux Academy

Linux Academy was created even earlier than Acloud.guru. It was founded in 2012. It is currently headquartered in Texas and it also frequently refreshes its courses and content to accommodate the continuously changing cloud landscape. It also offers courses for the Azure and GCP certifications. True to its name and roots, it also offers courses for Linux.

It claims to get over 50,000 reviews a month from its students with a user satisfaction rating of over 95%.

Acloud.guru bought it in 2019, but it still provides different content and training courses. The content often does not overlap, so having access to both sites will improve your chances of passing the certification exams.

I don't necessarily recommend signing up for multiple training sites for the lower-level certifications such as Cloud Practitioner and the Associate-level certifications. However, it may not be a bad idea to do it for the harder exams. The more difficult exams are for the Professional-level certifications and, depending on your background, some of the Specialty certifications. I found the Security and Advanced Networking certifications especially difficult, but my background does not necessarily align with these topics, so that may have been part of the problem.

Whizlabs

Whizlabs was founded by CEO Krishna Srinivasan after spending 15 years in other technology ventures. In addition to AWS, it also offers certification courses for the following technologies:

- Microsoft Azure

- GCP

- Salesforce

- Alibaba Cloud

Whizlabs divides the charges for its training between its online courses and its practice tests.

One disadvantage of Whizlabs is that its practice exams are fixed. Unlike the Acloud.guru exam simulator, which draws randomly from a bank of questions, Whizlabs does not shuffle its questions to create a different exam each time.

It also has a free version of its practice exams for most of the certifications, with 20 free questions.

The same strategy as mentioned before can be used with Whizlabs. You don't need to sign up for multiple vendors for the easier exams, but you can combine a couple when it comes to the harder exams.

Jon Bonso's Udemy courses

Jon Bonso on Udemy can be considered the new kid on the block, but his content is excellent, and he has a passionate and growing following. For example, as of August 2020, his Solutions Architect Associate practice exams have over 75,000 students with over 14,000 ratings and a satisfaction rating of 4.5 stars out of a possible 5 stars.

The pricing model used is also similar to Whizlabs. The practice exams are sold separately from the online courses.

As popular as his courses are, it is worth noting that Jon Bonso is a one-man band and does not offer courses for all the available AWS certifications, but he does focus exclusively on AWS technologies.

How long will it take to get certified?

A question that I am frequently asked is how many months you should study before sitting the exam. I always answer in hours instead of months.

As you can imagine, you will be able to take the exam a lot sooner if you study 2 hours every day instead of only studying 1 hour a week. If you decide to take some AWS-sponsored intensive full-day or multi-day training, that may go a long way toward shortening the cycle.

I had the good fortune of having the opportunity to take some AWS training. To be honest, even though the teachers were knowledgeable and were great instructors, I found that taking such courses was a little like drinking out of a firehose. I much prefer the online AWS and third-party courses, where I could watch the videos and take the practice exams whenever it was convenient.

In fact, sometimes, instead of watching the videos, I would listen to them in my car or while on the train going into the city. Even though watching them is much more beneficial, I felt like I was still able to embed key concepts while listening to them, and that time would have been dead time anyway.

You don't want to leave too much time between study sessions. If you do, you may find yourself forgetting what you have already learned.

The number of hours it will take you will also depend on your previous experience. If you are working with AWS for your day job, that will shorten the number of hours needed to complete your studies.

The following subsections will give you an idea of the amount of time you should spend preparing for each exam.

Cloud Practitioner certification

Be prepared to spend anywhere from 15 to 25 hours preparing to successfully complete this certification.

Associate-level certifications

If you don't have previous AWS experience, plan to spend between 70 and 100 hours preparing. Also keep in mind that there is considerable overlap between the Associate certifications, so once you pass one of them, it will not take another 70 to 100 hours to obtain the second and third. As mentioned previously in this chapter, it is highly recommended to take the two other Associate-level certifications soon after you pass the first one.

Expect to spend another 20 to 40 hours studying for the two remaining certifications if you don't wait too long to take them after you pass the first one.
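To see why hours are a better unit than months, here is a rough sketch that converts the 70 to 100 hour Associate-level estimate into calendar weeks for the two study paces mentioned earlier. The function name `weeks_needed` is made up for this example, and the sketch assumes a perfectly steady pace, which real life rarely allows.

```python
def weeks_needed(total_hours, hours_per_week):
    """Convert a study-hour estimate into calendar weeks at a steady pace."""
    if hours_per_week <= 0:
        raise ValueError("hours_per_week must be positive")
    return total_hours / hours_per_week


# Estimate from the text for a first Associate-level certification.
ASSOCIATE_HOURS = (70, 100)

# 2 hours every day is 14 hours a week; compare with 1 hour a week.
for label, hours_per_week in [("2 hours a day", 14), ("1 hour a week", 1)]:
    low, high = (weeks_needed(hours, hours_per_week) for hours in ASSOCIATE_HOURS)
    print(f"{label}: roughly {low:.0f} to {high:.0f} weeks")
```

At 2 hours a day, the same material takes a little over a month; at 1 hour a week, it stretches to well over a year, by which point much of the early material will have faded.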

Professional-level certifications

There is quite a leap between the Associate-level certifications and the Professional-level certifications. The domain coverage will be similar, but you will need to know how to use the AWS services covered in much more depth, and the questions will certainly be harder.

Assuming you took at least one of the Associate-level certifications, expect to spend another 70 to 100 hours watching videos, reading, and taking practice tests to pass this exam.

AWS removed the requirement of having to take the Associate-level certifications before being able to sit the Professional-level certifications, but it is still probably a good idea to take at least some of the Associate exams before taking the Professional-level exams.

As is the case with the Associate-level exams, once you pass one of the Professional-level exams, it should take much less study time to pass the other Professional exam as long as you don't wait too long to take the second exam and forget everything.

To give you an example, I was able to pass both Professional exams a week apart from each other. I spent the week taking practice exams and that was enough, but your mileage may vary.

Specialty certifications

I am lumping all the Specialty certifications under one subheading, but there is great variability in the level of difficulty between all the Specialty certifications. If you have a background in networking, you are bound to be more comfortable with the Advanced Networking certification than with the Data Science certification.

When it comes to these certifications, unless you are collecting all certifications, you may be better off focusing on your area of expertise. For example, if you are a data scientist, the Machine Learning Specialty certification and the Alexa Skill Builder certification may be your best bet.

In my personal experience, the Security certification was the most difficult one. It didn't help that AWS sets a higher bar for this certification than for the other certifications.

Depending on your experience, expect to spend about these amounts of time:

- Security – Specialty – 40 to 70 hours

- Alexa Skill Builder – Specialty – 20 to 40 hours

- Machine Learning – Specialty – 40 to 70 hours

- Data Analytics – Specialty (previously Big Data – Specialty) – 30 to 60 hours

- Database – Specialty – 30 to 60 hours

- Advanced Networking – Specialty – 40 to 70 hours

What are some last-minute tips for the day of the exam?

I am a runner. I mention that not to boast but because I found a lot of similarities between preparing for races and preparing for the AWS exams. A decent half marathon time is about 90 minutes, which is the time you get to take the Associate-level exams, and a good marathon time is about 3 hours, which is how long you get to take the Professional-level exams.

Keeping focus for that amount of time is not easy. For that reason, you should be well rested when you take the exam. It is highly recommended to take the exam on a day when you don't have too many other responsibilities; I would not take it after working a full day. You will be too burned out.

Make sure you have a light meal before the exam – enough so that you are not hungry during the test and feel energetic, but not so much that you actually feel sleepy from digesting all that food.

Just as you wouldn't want to get out of the gate too fast or too slow in a race, make sure to pace yourself during the exam. The first time I took a Professional exam, I had almost an hour left when I completed the last question, but when I went back to review the questions, my brain was completely fried and I could not concentrate and review my answers properly, as I had rushed through the exam to begin with. Needless to say, I did not pass. The second time I took it, I had a lot less time left but I was much more careful and thoughtful with my answers, so I didn't need as much time to review and I was able to pass.

You also don't want to be beholden to the clock, checking it constantly. The clock will always appear in the top-right part of the exam, but you want to avoid looking at it most of the time. I recommend writing down on the three sheets you will receive where you should be after every 20 questions and checking the clock against these numbers only when you have answered a set of 20 questions. This way, you will be able to adjust if you are going too fast or too slow but you will not spend an inordinate amount of time watching the clock.
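The checkpoint numbers are easy to work out before exam day. The sketch below assumes a hypothetical exam of 75 questions in 180 minutes; the question count is an assumption made for illustration, so substitute the real figures for your exam. It also spreads the time evenly, so subtract a few minutes from each target if you want to leave time for review at the end.

```python
def pacing_checkpoints(total_questions, total_minutes, step=20):
    """Return (question_number, minutes_elapsed) targets for every `step` questions."""
    return [
        (question, round(question * total_minutes / total_questions))
        for question in range(step, total_questions + 1, step)
    ]


# Hypothetical exam: 75 questions in 180 minutes (question count is an assumption).
for question, minutes in pacing_checkpoints(75, 180):
    print(f"After question {question}: about {minutes} minutes elapsed")
```

For this hypothetical exam, the targets come out to 48, 96, and 144 minutes after questions 20, 40, and 60.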

Let's now summarize what we have learned in this chapter.

Summary

In this chapter, we were able to piece together many of the technologies, best practices, and AWS services we covered in the book. We wove it all together into a generic architecture that you should be able to use for your own projects.

As fully featured as AWS has become, it is all but certain that AWS will continue to provide more and more services to help enterprises, large and small, simplify their information technology infrastructure.

You can rest assured that Amazon and its AWS division are hard at work creating new services and improving the existing services by making them better, faster, easier, more flexible, and more powerful, as well as adding more features.

As of 2020, AWS offers a total of 212 services. That's a big jump from the two services it offered in 2004. The progress that AWS has made in the last 16 years has been nothing short of monumental. I personally cannot wait to see what the next 16 years will bring for AWS and what kind of solutions we will be able to deliver with its new offerings.

We also covered some reasons that the cloud in general and AWS in particular are so popular. As we learned, one of the main reasons for the cloud's popularity is the concept of elasticity, which we explored in detail.

After reviewing the cloud's popularity, we have hopefully convinced you to hop aboard the cloud train. Assuming you want to get on the ride, we covered the easiest way to break into the business. We learned that one of the easiest ways to build credibility is to get certified. We learned that AWS offers 12 certifications. We learned that the most basic one is AWS Cloud Practitioner and that the most advanced certifications are the Professional-level certifications. In addition, we learned that, as of 2020, there are six Specialty certifications for a variety of different domains. We also covered some of the best and worst ways to obtain these certifications.

Finally, we hope you became curious enough about potentially getting at least some of the certifications that AWS offers. I hope you are as excited as I am about the possibilities that AWS can bring.

In the next chapter, we will cover in broad strokes how the AWS infrastructure is organized, as well as understanding how you can use AWS and cloud technologies to lead a digital transformation.