In this chapter, we will discuss how Service-oriented Architecture (SOA) as a design approach allows us to achieve certain goals and the characteristics that have to be maintained to make these benefits feasible. The practical ways of attaining these characteristics are based on a concrete balance of very well-defined principles, and we will closely look at each one of them. This balance is maintained in specific areas of relevance and is formed in a structure of frameworks. Here, we will discuss issues that are frequently encountered within and across these frameworks, and the common patterns employed as a publicly approved way of solving these recurring problems. One of the main purposes of this chapter is to give developers and architects a matrix of the design rules (patterns) in relation to the corresponding frameworks, all based on SOA principles.

As an evolutionary approach, comprising the best of the architectural and technical solutions designed in the last forty years (arguably even more), SOA nowadays in many ways is quite well standardized with a well laid out vocabulary of meanings of technical terms. Along the course of the entire book, we will stick to the definition of SOA, summarized by Thomas Erl in Service-Oriented Architecture: Concepts, Technology, and Design, Prentice Hall / PearsonPTR Publishing.

This is also available at http://serviceorientation.com/whatissoa/service_oriented_architecture. The complete SOA Manifesto that was developed as a result of systematic collaboration of many experts' groups can be found at http://serviceorientation.com/soamanifesto/annotated.

Still, it's quite fascinating to see that debates about the proper terms and their meanings still rage worldwide. We are not going to judge or participate in these discussions in any form; that's not the purpose of this book. The best way to avoid them is to stay focused on the practical targets that SOA helps us achieve. These goals and benefits are quite well defined, and they are the very reasons why the SOA approach was proposed in the first place. Any practicing architect who has been through several projects (even if not defined as SOA-based) could easily recollect the common requirements stated by both sides, Business and IT. Let's quickly recollect them. Any concrete solution should have the following properties:

1. They should be kept as simple as possible while still meeting the business needs [R 1]
2. They should be kept flexible and consistent to support the changing enterprise-wide business needs and enable the evolution of the company [R 2]
3. They should be based on open industry standards [R 3]
4. Systems and components within the proposed IT domain (architecture) will be viewed as a set of independent and reusable assets that can be composed to provide a solution for the company [R 4]
5. They should be based on clearly defined, well-partitioned, and loosely-coupled components, processes, and roles [R 5]
6. They should be designed for ease of testing [R 6]
7. They should be based on a proven, reliable technology that is used as originally intended [R 7]
8. They should be designed and developed, focusing on nonfunctional requirements right from the start [R 8]
9. They should be secure; able to protect confidentiality and the privacy of all underlying resources and communications [R 9]
10. They should be resilient to faults, that is, capable of staying operational even in the event of catastrophic failure of the internal components [R 10]

We could go on, but in general these are the top ten points of any requirements list, and it is hard to go further without repeating them; a list like this rarely holds more than 15 unique statements. We suggest keeping these points up your sleeve, because at the end of the chapter we will do some practical exercises in matching the listed declarations to the capabilities of SOA. Quite often, these requirements are based on pure common sense, and some people declare them to be design principles. It is hard to argue that real design principles should at least be based on common sense, but that alone is not enough to call the elements of the previous list principles. At the moment, they are just declarations of good intentions, and after several implementations of complex projects, we know quite well which road is paved with them. We will talk about the definition of principles in considerable detail a bit later; for now, it's important to analyze these wishes, find what they have in common, and see how that is relevant to the service-oriented approach. It is quite simple to see that the whole list (with one small exception, which only confirms the general rule) can be divided into two categories: effort (the first, third, fourth, fifth, sixth, seventh, and eighth items) and time (the second and seventh items), with the seventh item equally relevant to both.

Standing a bit aside, the ninth item, generally described as compliance with security policies, is nothing more than pure money, as almost no one these days seeks fun in simple informational vandalism. Security breaches aim to steal your information, that is, money, and so put you out of business. Needless to say, time and effort can essentially be converted to money as well. So, unsurprisingly, everything boils down to the same logical end: money. We have learned this many times when talking to the bosses (CIO, CEO, project manager, and so on). As stated previously, in IT, money comes in two general ways: either we consistently shorten the delivery cycle or we reduce operational costs.

Firstly, we would like to place strong emphasis on the word consistently; otherwise, all delivered solutions will tend to be quick and dirty, with rocketing operational costs. The two ways mentioned previously don't need to be exactly inversely proportional, and a proper balance can be found, as we will see later.

A shortened delivery cycle simply means that we will strive to employ the existing reusable components if feasible. Also, every new component or element of the infrastructure that we will add to our inventory will be designed, keeping reusability features in mind. The good rate of return from previous investments (that is, ROI) is the main direction of implementation for new products. At the same time, a higher level of reuse denotes a lower number of heterogeneous components and elements in the infrastructure.

A less diverse technical infrastructure with more standardized components tends to be more predictable and consequently more manageable, so lowering the Total Cost of Ownership (TCO), which is the key part of operational costs, becomes more attainable. With higher ROI and lower TCO, an organization becomes more adaptable to market changes. This is because we maintain a more transparent and understandable application portfolio with a high level of reuse and, at the same time, reserve more money for creating new, best-of-breed products in the areas of our business expansion.

How can these strategic business benefits be achieved from a component development standpoint? We have already mentioned that to make our components more interoperable, we should reduce the level of disparity, but at what level? By building components on the same platforms using the same languages, versions, protocols, and so on? This would be unrealistic even within a single department, not to mention a decent-sized enterprise. Instead, our development and implementation processes must focus on reducing the integration effort between components by standardizing their interfaces. Taking this standardization to higher levels, we can achieve a level of abstraction that is comprehensible to business analysts yet presents enough technical detail for IT personnel. The long-term benefits promised (which may not be entirely directed) by object-oriented programming (OOP) are quite rarely achieved due to the complexity of the inheritance and encapsulation concepts.

The Agile development approach is a solid basis on which business analysts and technical leads can find common ground for fostering reusable components with minimal interaction cycles. Still, having the Agile methodology in place is not the main prerequisite for achieving this; if correctly maintained, the level of interface abstraction allows people from both business and technology fields to speak the same language. The main outcome of this exercise should be a description of a component's interface in terms of business-related capabilities, at the level desirable for the expected reuse. What is inside the component, that is, its technical implementation, is completely out of the discussion, and it's up to the technical lead to decide which way to go.

Thus, various technical platforms can leverage their best sides where it's needed (or where it's inevitable due to specific skill sets in place of physical implementation) by staying interconnected without affecting each other's premises and project deadlines. Finally, the federated approach gives the opportunity to choose the best products from various vendors and assemble them in the business flows, abstracted and architected in the previous steps. Of course, these products must stay in compliance with the interface specifications and the operational requirements that we put in place. The opposite is also true, that is, setting standards from our business standpoint will help vendors to adjust their products and offerings in such a way that integration efforts will be minimal.

So, it's all about money, as the logical sequence mentioned earlier demonstrates. Have you noticed that in that logical exercise, we didn't use the abbreviation SOA at all? So far, we are just trying to convert the previously presented list of intentions, derived from various project-design documents such as requests for information (RFIs) and requests for proposals (RFPs), into a concise list of benefits. Our next step will be to assess how attainable they are. Although that will be the purpose of the entire book, the key criterion will be defined here shortly. Before proceeding, we would like to stress again that the basic terminology around business benefits and design characteristics is based on the widely accepted structure presented by Thomas Erl, as mentioned earlier. We do not want to reinvent the wheel for the thousandth time and then participate in terminology wars, which will lead us nowhere. Thomas Erl has described the obvious benefits that we would like to achieve in a logical sequence, and you can see the proposed sequence for implementing the listed goals in the following table:

| Goals and benefits | Common solutions' requirements |
|---|---|
| | 1, 8 |
| Reduced IT burden (low TCO) | 1, 3 |
| Increased organizational agility (shorter time to market) | 4, 8, 10 |
| Increased intrinsic interoperability (reduce integration efforts) | 2, 4, 6 |
| | 3 |
| Increased federation | 5, 6 |
| Increased business and technology alignment | 1, 2 |

It is also obvious from the previous requirements list that some of the requirements are very contradictory, and in most practical implementations, there could be quite a few natural enemies present, such as:

- Security versus performance (always blood enemies).
- Reliability versus highly reusable components (for example, a widely reused component becoming a single point of failure).
- Resilience achieved by Redundant Implementation versus IT costs, and independent reusable assets versus governance costs (for example, preventing the component logic from getting scattered over several implementations).
- Flexibility and reuse-by-design versus development costs (for example, in the initial phase of development). Higher flexibility means more execution paths, which in turn require more testing.
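To put a number on the last point, here is a minimal arithmetic sketch. It assumes, purely for illustration, that flexibility is exposed as independent on/off options; the class and method names are hypothetical. Under that assumption, the number of distinct configurations that may need a test doubles with every option added.

```java
/** Hypothetical helper illustrating how test effort can grow with flexibility. */
class ExecutionPaths {

    /** Upper bound on distinct configurations for n independent on/off options: 2^n. */
    static long maxCombinations(int independentOptions) {
        if (independentOptions < 0 || independentOptions > 62) {
            throw new IllegalArgumentException("options must be between 0 and 62");
        }
        return 1L << independentOptions;
    }

    public static void main(String[] args) {
        System.out.println(maxCombinations(3));   // 8
        System.out.println(maxCombinations(10));  // 1024
    }
}
```

Real components rarely need every combination tested, but the bound shows why each extra degree of freedom has to earn its place.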

This list can go on as the previously mentioned points are just the obvious ones. Thus, the benefits summarized in the table are comparably more consistent as the most contradicting parts are abstracted. However, we must keep in mind that they are still there, and we will focus on them in more detail while discussing the implementation of design principles. What is important now is to distill the most common characteristics that any architectural approach will ensure in every application to attain these benefits.

It is clear that one of the primary requirements for reducing time to market is to improve communication between the technical leads and business analysts. If the ways of expressing the business and technical requirements are kept abstracted from the analysts, and at the same time, the essential technical specifications are kept in place for the developers, then this architectural model could be truly business-driven. The other way around is also valid. If IT provides a managed collection of reusable business-related services, then it's quite possible that new business opportunities can be spotted and proposed by business analysts; this is because new workflows are composed out of the existing services. The response time to the new challenges will be lowered as the change in the implementation's task force will be business-driven and IT will be resource-oriented at the same time.

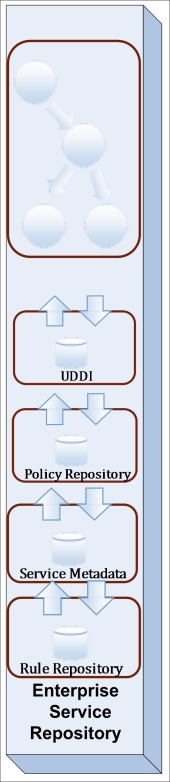

Components, especially developed with a business recomposition option in mind, will gradually form some kind of components library. With strong sponsorship from architects, this library will become attractive for more and more extensive reuse in various business domains, depending on the business context of the components of course. This library has a name. Traditionally, it's called repository, and we will spend a lot of time discussing its purpose and architecture a bit further. However, from the characteristics standpoint, let's depict it as a technical platform that is capable of hosting these components and providing runtime and design-time visibility, which will be discussed further. Simplistically, this will be any application server with a management console, available for all enterprise developers and architects; it will present all reusable components as the sole enterprise-centric assets.

This second characteristic is possible only when the presented components are designed with the highest level of composability in mind. This means that integration efforts, including regression test requirements, platform performance enforcements, and activity monitoring, are tamed to a level where reuse becomes so attractive to all the technical and business teams that reinventing the wheel never looks like a plausible option. Surely, this characteristic could have more governance effort in the background than purely technical effort. Still, with proper planning based on an honest and realistic maturity assessment, and by avoiding the big-bang, "all-or-nothing" approach, in which SOA becomes more of a religion than a practical "one step at a time" method, it's quite achievable.

Components developed as reusable assets should follow commonly accepted standards; otherwise, reusability will be severely limited to one technology domain. The alternative would be to reinvent the already existing standards, which is always a waste of time. This doesn't mean that any published standard must be followed blindly; the adoption of standards must be carefully planned. An enterprise's maturity analysis, combined with market research on the top products in a particular area, will guide an architect towards common models that describe the component's behavior and implementation technique with minimal integration efforts. Thus, by achieving the first three characteristics, we open the highly desirable option of maintaining a hot-pluggable infrastructure in which best-of-breed products from various vendors can be combined into a well-turned fabric based on common standards. It is an architect's responsibility to stay watchful, analyze the standards' specifications, deduce the crucial parts, and stay focused on increasing the desirable characteristics.

This design characteristic of making it possible for all components in a repository to stay vendor-neutral has an extra significance for us in the context of this book; it is dedicated to the realization of certain design patterns on the Oracle platform. Actually, there is no contradiction here. This is because we will strive to present concrete solutions in a vendor-neutral way first, if possible, and then demonstrate how Oracle tools could address the same issue. We will do this here and try to demonstrate the maturity of the Oracle platform, which is capable of delivering hot-pluggable solutions that could potentially pose fewer burdens for the enterprise IT domain.

So, now it's a good time to sum up the short descriptions given previously in the table of architectural characteristics, in the way they have been defined by Thomas Erl. Here, we will again repeat our exercise, trying to map the supporting core characteristics to most of our requirements (if not all).

| Characteristics | Requirements [R 1] to [R 8] |
|---|---|
| Business-driven | 2 |
| Composition-centric | 1, 5, 8 |
| Enterprise-centric | 4 |
| Vendor-neutral | 7 |

We have selected the most obvious characteristics, which directly support the most common requirements, summarized at the beginning of this chapter. There is no need to elaborate on this further, as we can easily see that other requirements are supported directly or indirectly. However, we would strongly recommend that you repeat this exercise every time you analyze the requirements for new products, systems, or components in the RFI scope or at any other stage of the project.
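As a small aid for repeating this exercise, the mapping can be kept as data and checked mechanically. The following is a minimal sketch, not a prescribed tool: the class and method names are hypothetical, and the numbers are simply copied from the characteristics table above. It lists the requirements that no characteristic supports directly, which are the ones to argue for indirectly.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical helper: a tiny traceability matrix from characteristics to requirements. */
class RequirementCoverage {

    /** The direct mapping, copied from the characteristics table. */
    static Map<String, List<Integer>> characteristicsTable() {
        Map<String, List<Integer>> table = new LinkedHashMap<>();
        table.put("Business-driven", List.of(2));
        table.put("Composition-centric", List.of(1, 5, 8));
        table.put("Enterprise-centric", List.of(4));
        table.put("Vendor-neutral", List.of(7));
        return table;
    }

    /** Returns the requirement numbers in 1..total that no characteristic maps to directly. */
    static Set<Integer> notDirectlyMapped(Map<String, List<Integer>> table, int total) {
        Set<Integer> remaining = new TreeSet<>();
        for (int r = 1; r <= total; r++) {
            remaining.add(r);
        }
        for (List<Integer> supported : table.values()) {
            remaining.removeAll(supported);
        }
        return remaining;
    }

    public static void main(String[] args) {
        // Requirements [R 1] to [R 10] from the list at the start of the chapter.
        System.out.println(notDirectlyMapped(characteristicsTable(), 10)); // [3, 6, 9, 10]
    }
}
```

An empty result is not the goal in itself; the point is that every gap in the direct mapping is a conscious decision rather than an oversight.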

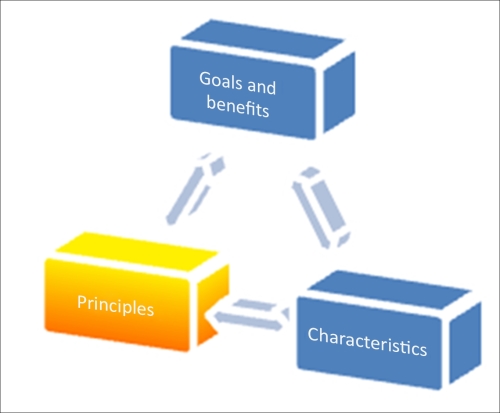

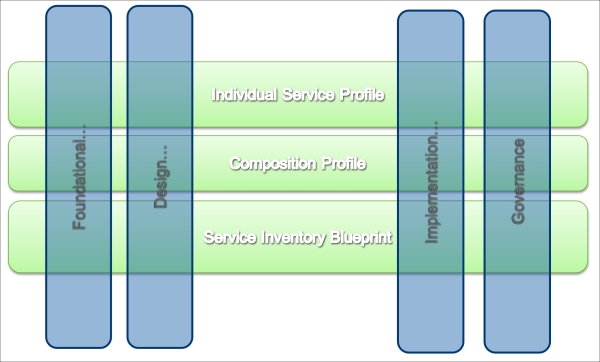

The following figure summarizes all that we have learned so far:

Until now, we have intentionally avoided mentioning the term SOA during this short exercise of outlining the keystones of the requirements analysis. The purpose is quite straightforward: if you can clearly define your goals in a very precise way and declare concise characteristics in support of these objectives, it really doesn't matter what the name of your design approach is. Some can call it common sense, and that's perfectly fine. Nothing could be better than a design approach based on common sense, which is easily comprehensible to both business and IT. However, something else is apparently needed, and that is the design principles as a strong foundation for the first two keystones.

In the following paragraphs, we will outline this foundation again using the classification provided by a best-selling SOA author and founder of the SOA School, Thomas Erl, which is accepted by Oracle. This time, we will strongly focus on SOA and Service-Oriented Computing (SOC) as the best technical implementation of common sense depicted earlier. There is no reason to evade it further—if it looks like a duck, swims like a duck, and quacks like a duck, then it is probably a duck. Goals and characteristics mentioned previously are exactly how the SOA declares them. How they will be achieved and supported is a matter of the principles' implementation. They are all interconnected, so the balance is also part of common sense, and as is usually put, it must be applied in a meaningful context. The extent of this is the level of realization of tactical and strategic goals and technical capabilities of the principles' implementation.

Tip

Please be forewarned: the following example is a recipe for a perfect disaster. We have to add this disclaimer because some could take it as direct architectural advice. It is also sadly realistic, since everything described next was taken from real implementations. We will use this example in the later chapters.

So, what are the tactical goals? The essence here is time, usually limited by a timeframe of a project or several milestones of non-correlated projects. It is always good to stay on budget and deliver on time what was promised. This is a common scenario for a component or a single application development process. Isolation, focus on performance, and reliability as primary targets have their obvious benefits. As a solution architect, do not bother your team much with interoperability, as you probably have another enterprise application integration (EAI) team that is especially dedicated to this purpose; they are somewhere nearby and are capable of performing the tricks. Skillful EAI means that some integration platforms are in place already, providing hub-and-spoke capabilities with all the necessary transformations, translations, protocol bridges, and so on. Honestly, nothing's wrong with that. At least, not yet.

All that you need is a capable integration team and enough luck not to be last in the queue for the endless regression tests. Also, it would be prudent to maintain a very thorough events/error log for your product, just in case you need to identify where all your inbound/outbound messages have gone. You must be able to prove that you (your application) have sent all the required outgoing messages and that they are definitely now on the integration platform's side (just search better); alternatively, if you haven't received what you need, the flaw is definitely in the EAI's design.

As time is of the essence, you can take the liberty of defining all your APIs and XSDs as close to your technical implementation as possible, based on the DB structure and the logic of the classes. Modern development platforms and SDKs/XDKs are truly advanced, so this task can really be done in no time with a right-click. Following this path, you can provide newer versions of your application almost instantly after receiving new requirements, and it's purely EAI's responsibility to maintain the concurrent APIs published on the integration platform. Again, just be first in the list of regression tests.

As performance is declared to be one of the primary objectives, always demand direct access to the resources you need. Direct DB access or Remote Method Invocation (RMI) is much faster than the hub-and-spoke integration approach. At the same time, do not let anyone access your internal resources, as it could potentially affect the third characteristic declared as primary, that is, reliability. On second thoughts, it would be good to hide all your implementation details and keep them hidden from everyone. This will prevent any unauthorized access to your backend resources.

Strengthening security is a positive side effect of this isolation. The obvious fact that all technical details and data structures are already exposed via your autogenerated Web Services Description Language (WSDL) files is just Web Services (WS) collateral and must be handled, again, by EAI. This is because we follow the common principle of separation of concerns by delegating security operations to the middleware. To help the middleware handle error situations, you could provide a full stack trace from your entire web-based API, leaving the standard SOAP Fault message as the default. The EAI team will have to find all the necessary details and handle them according to their understanding of the business logic, as we will keep our internal logic secluded for the reasons explained previously (security and resource protection).

As you already have direct connections to the resources in other systems, you could potentially implement some extra logic on your side in order to speed up the external processes and get the necessary results without waiting. Why not? We are endorsing distributed computing! You can go even further and include capabilities from other systems in your public API, as you already have access to them. So, it's a mashup, isn't it? For the sake of clarity, you just inform the EAI team that these new capabilities are foreign and not covered by your original SLA, as you cannot guarantee that the design of the other systems is any good; however, you welcome everyone to use them. Speaking of SLAs, quite soon you will spot that the autogenerated XSDs are a bit elaborate, causing some latency on the API side and extra processing overhead on the EAI platform. As an architect, you would propose quite a simple workaround (remember that performance is the essence): switch off XSD validation at the EAI platform's end. It will certainly help a bit, but not enough. Later, you will discover the original cause, that is, the standard JAXB library responsible for message marshaling/unmarshaling is way too slow.

The implementation of custom marshaling would certainly be helpful not only for your application, but also for others' as well. Why not help other teams by supplying them with a more robust and reliable XDK? In parallel, you can make some improvements to the XML structure, presenting custom elements within the message body for parsing acceleration. For instance, if you have several addresses in the message (billing, postal, and corporate), you could implement special predicates to indicate which one is to be used in a particular business case and when others should be suppressed. You can really dedicate some time to these tasks so that developing and adapting your components is not so burdensome.

Our tactical goals have been well achieved. All that's left is to explain to your CIO why the consolidated IT costs after three years of tactical architecting are almost equal to corporate revenues.
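One shortcut in the scenario above was switching off XSD validation on the integration platform. As a counterpoint, here is a minimal sketch of what that check provides when the schema is small and hand-written. It uses the standard `javax.xml.validation` API from the JDK; the `order` schema, the class name, and the `isValid` helper are hypothetical and exist only for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import javax.xml.XMLConstants;
import javax.xml.transform.stream.StreamSource;
import javax.xml.validation.Schema;
import javax.xml.validation.SchemaFactory;
import org.xml.sax.SAXException;

/** Hypothetical boundary check: reject messages that break the agreed schema. */
class BoundaryValidation {

    // Assumed, hand-written contract: an order carries exactly one positive quantity.
    static final String ORDER_XSD =
          "<xs:schema xmlns:xs='http://www.w3.org/2001/XMLSchema'>"
        + "<xs:element name='order'><xs:complexType><xs:sequence>"
        + "<xs:element name='quantity' type='xs:positiveInteger'/>"
        + "</xs:sequence></xs:complexType></xs:element></xs:schema>";

    /** Returns true if the XML is well formed and conforms to ORDER_XSD. */
    static boolean isValid(String xml) {
        try {
            SchemaFactory factory = SchemaFactory.newInstance(XMLConstants.W3C_XML_SCHEMA_NS_URI);
            Schema schema = factory.newSchema(new StreamSource(new StringReader(ORDER_XSD)));
            schema.newValidator().validate(new StreamSource(new StringReader(xml)));
            return true;
        } catch (SAXException e) {
            return false; // malformed XML or a schema violation
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(isValid("<order><quantity>3</quantity></order>"));  // true
        System.out.println(isValid("<order><quantity>-1</quantity></order>")); // false
    }
}
```

With validation switched off, the second message would travel on to every downstream consumer, each of which would have to discover the problem on its own.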

If you think the presented scenario is a bit artificial, think again. On the contrary, some unnecessary technical details have been omitted to make it less chilling. However, we would like to make one thing clear: we are not against tactical goals at all; they are chunks of iterative development and essential parts of Scrum sprints. We just believe that tactical goals and benefits must be a native part of some bigger strategy; otherwise, you could win a battle or two but lose the war very badly. The temptation to achieve your target instantly by buying another magic pill is always high, but it usually leads to a spaghetti-like infrastructure. In the best-case scenario, you will get a lasagna-style infrastructure if your integration efforts are consistent (another term for expensive). So now, we are going to discuss the principles that could make our strategy capable of supporting the declared goals and characteristics.

Don't worry, we will not be reinventing the terms here again. After more than ten years of implementation, the principles are quite well declared and explained. The consolidation done by Thomas Erl has been accepted de facto by most of the top market players, and what is most important for this exercise is that it has been accepted even by Oracle. You can refer to it at http://serviceorientation.com/serviceorientation/index.

Here, we will mostly focus on the relation between the principles and the characteristics, and on the consequences that follow if the principles are neglected. Jumping ahead, it would be right to say that patterns are really needed when principles are not implemented as intended. The reasons for this vary:

- The already existing burden of legacy systems prevents us from implementing more reusable solutions immediately. We really do not want any revolutions.
- There are obvious political reasons of all kinds, usually caused by a strong focus on tactical goals and the temptation to pick the low-hanging fruit and show quick results, even if they are based on another silo in the application stack.
- Most interestingly, patterns are required to resolve the conflicts that arise during the implementation of different principles from the same technological area. Yes, principles can contradict each other and must be applied in a meaningful context.

It is also important to recognize that all these architectural principles are generic, common, and universal for the selected technological area (SOA in this case). Principles are also tangible, well recognized, and limited in number.

Some may say that our top-ten requirements can be perceived as principles as well. Even all the -ilities could be principles because of their universality and simplicity. Unfortunately, simplicity cannot help here. We, as architects, should give strict, precise, and, most importantly, tangible guidance to developers, and be able to follow our own recommendations.

The measurable outcome is the result of proper guidance, and the principles here are closest to the physical implementation and must be understood and followed. Some principles could be less tangible than others and could simply present the combined result of implementing less abstract principles, but the results of their implementation can still be measured. Let's take the most common -ilities, such as reliability or flexibility, and try to explain to your developers (or yourself) in just a few words how to code your components in order to achieve them.

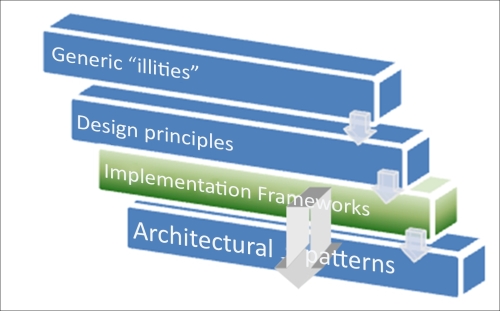

Depending on the technology platform, the explanation could take anything from a couple of pages to several chapters. Still, the -ilities should seem obvious and even quite measurable. (Reliability is usually expressed as the Mean Time Between Failures (MTBF), and flexibility is also a time-based characteristic that shows how fast a system can be reconfigured for other business requirements.) So, NFRs are also precise technical requirements and not a guide in technical terms. Looking forward, let's propose a logical hierarchy of the terms that are, one way or another, related to principles and their areas of application. By the end of this chapter, we will cover all of them. Your benefit from this exercise will be a clear outline that will guide you on how to analyze requirements and apply design rules for most of your SOA-related projects. The following table illustrates the principles and patterns discussed:

| Principles and patterns | Quantity |
|---|---|
| Even being highly generic, the characteristics of generic -ilities have certain practical implications and materialize in at least six architectural frameworks. | 7 |
| Technology stack's architectural principles (for SOA design principles) state that every application consists of several technology areas, the sharing or reuse of components, and composites. For every application, an individual and balanced combination of the universal principles is the key for successful implementation. | 8 |
| Architectural patterns form a pattern catalog, commonly approved as open standard ( | >85 |

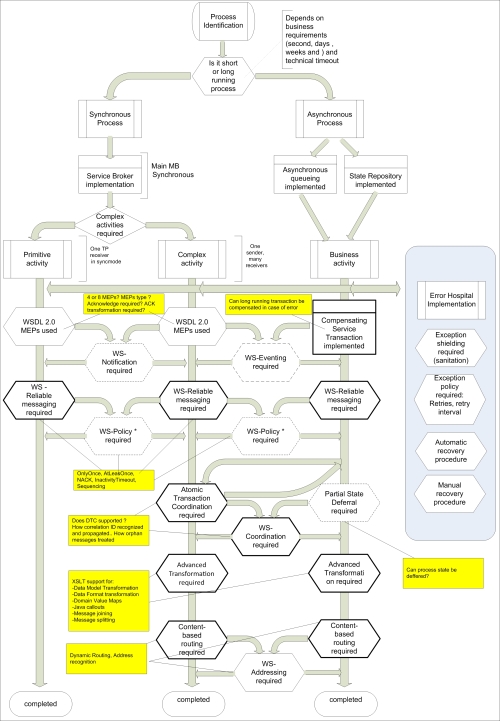

The following figure explains the preceding principles and patterns:

So let's start with the obvious ones that were already mentioned earlier.

In the Standardized Service Contract principle, we really believe that the word service is a bit of an overkill. Services today are strongly associated with technical web service implementations, so naturally, the first thing that comes to mind is a WSDL with schemas and, optionally, policies. Nothing's wrong with that; it's truly the most common service implementation (or REST, maybe), but the fact that any WSDL and XSD can easily be autogenerated compromises this idea. Autogeneration doesn't turn a contract into a standard. An autogenerated service contract is nothing but trouble, and if you haven't got that after the first exercise dedicated to tactical goals, we will have plenty of opportunities to convince you.

By doing so, you are just forcing everyone to adapt to your specifications, which include existing applications. Surely, if you are an architect in a big bank, this approach might work; we're hesitant about the stub's implementation time at the remote end.

The second point here is that we can build a very robust service-oriented system without a single web service. The Oracle PL/SQL will do it beautifully, and we will demonstrate it in the next chapters. So, the contract could be anything that declares public operations, available protocols, and data structures such as the PL/SQL package header .pks, C++ class header .h, and the Java interfaces (also called contracts). Component-based development is a completely valid approach in SOA if it's approved by all the members of the implementation domain. Interactions between different domains will require some integration efforts even if the technology is the same, but that would be true for web services as well, so that's not a major drawback of using components as SOA's building blocks.

The problem here is that all components' contracts are nondetachable compared to WSDLs. The true beauty of WS interfaces is that we can sit in a quiet corner along with developers and business analysts and by using just a pen and a napkin describe the prototype of service compositions, go back to our stations, and start coding right away. Talking seriously, by describing the detachable contract as WSDL, we can really provide a parallel development process and work on an iterative development in a reasonably painless manner. Simply speaking, you can compile the service logic (Java) without WSDL and try to do the same with a PL/SQL package body without the package specification. Finally, the most important thing is that this contract-first approach allows us to generate the code based on an initially defined and mutually accepted contract, and Oracle is really good at it. Practically, you can generate skeletons on any platform that you want to be your logic carrier, such as Java, BPEL, and Mediators.
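The contract-first idea can be illustrated with a minimal Python sketch. It is not WSDL and not Oracle-generated code; the `InvoiceContract`, `InvoiceStub`, and `InvoiceService` names and the `get_invoice` operation are hypothetical and exist only to show how a mutually accepted contract lets the consumer and the service logic be developed in parallel.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    """Data structure declared by the contract (hypothetical)."""
    invoice_id: str
    amount: float


class InvoiceContract(ABC):
    """The agreed contract: public operations only, no implementation."""

    @abstractmethod
    def get_invoice(self, invoice_id: str) -> Invoice:
        ...


class InvoiceStub(InvoiceContract):
    """Stub that consumers can code against while the real logic is built."""

    def get_invoice(self, invoice_id: str) -> Invoice:
        return Invoice(invoice_id=invoice_id, amount=0.0)


class InvoiceService(InvoiceContract):
    """Service logic written later against the same contract."""

    def __init__(self, storage: dict) -> None:
        self._storage = storage  # maps invoice_id to amount

    def get_invoice(self, invoice_id: str) -> Invoice:
        return Invoice(invoice_id=invoice_id, amount=self._storage[invoice_id])


def describe_invoice(service: InvoiceContract, invoice_id: str) -> str:
    """Consumer logic depends on the contract, not on a concrete class."""
    invoice = service.get_invoice(invoice_id)
    return f"{invoice.invoice_id}: {invoice.amount:.2f}"
```

The consumer function works unchanged with the stub and with the real implementation, which is the practical payoff of agreeing on the contract before writing the logic.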

A standard contract is the primary means of presenting your service as a corporate asset to maintain at least two main SOA characteristics: Composition-centric and Enterprise-centric. With the WS-based approach, you will achieve vendor neutrality as well.

This principle is probably the most well-comprehended principle. Everybody knows that tight coupling is bad. Is it really? To discuss this, let's first describe what kind of couplings we could get. You'll be able to understand this from the realization of service anatomy. Basically, we have the following:

Service resources, presented as DB, file structures, and so on

Technology platform (Java, .NET)

Service logic implementation

Parent service logic

Anything that links your contract or, even worse, your consumer to one of those service resources can obstruct the core SOA characteristics we are trying to maintain. So, all links going from contract to service resources or bypassing the contract are bad. The opposite direction is not much better because providing details of the technology platform or excessive resource demonstrations is not good, as it can provoke the service consumers to build their consumption logic based on these details. However, what about the contract-first principle? Yes, it's a positive thing, so coupling your service logic to the declared contract is a natural and decent way of implementing the service. However, neither the service logic nor the service contract has been set in stone—business is evolving, and so are our services. Quite soon, a new contract version will be published or the core service logic will be patched. It will eventually turn out that this positive coupling also has its deficiencies. No reason to despair though; it's life. All of us are evolving, and customers connected to our contract are always welcome. How to deal with this situation using various Oracle SOA patterns will be discussed further.

In addition to this, we would like to emphasize that coupling from consumer to contract is the second positive coupling, although it is susceptible to the same problem of contract evolution. All other couplings must be prohibited if possible. This statement is not as strong as you would expect. We have touched upon the reason for this earlier in the tactical goal's architecture example, that is, performance. A standard contract brings message processing overhead: some milliseconds (or more) added to the total processing time, plus extra CPU utilization and memory consumption. Is it worth jumping over the service contract and utilizing service resources directly? Only you know what these milliseconds of overhead mean for your business, and the decision on what to sacrifice is yours. In general, the answer is no. Please look at your contract first. Is it truly standard? Assess your needs using the following logic:

Do you clearly define your data structures with the required elements only?

Do you avoid autogeneration, especially for operations with CLOB fields without CDATA or <any> elements? (Memory leaks during marshaling are a common outcome of this approach.)

Can a concurrent contract with more lightweight technology (REST instead of SOAP) possibly solve the problem? (Concurrent contracts will be discussed further in Chapter 4, From Traditional Integration to Composition – Enterprise Business Services.)

How about a platform-specific SOAP/XML acceleration? Oracle's WLS T3 protocol could be useful, as it has proven many times.

Have you tuned the execution environment and set up proactive monitoring?

If platform-neutral contracts (WSDL / REST-based) do not help, could we employ a component-based concurrent contract?

Always think about the price you will pay for breaking this principle to gain ten or fifty milliseconds of processing time. This principle directly supports the composition-centric and vendor-neutral SOA characteristics.

The logical outcome from the implementation of the first two principles is standard, preferably (but not mandatory) a detachable service contract as a declaration of our capabilities, processing requirements, and input expectations. Still, the word standard is a bit vague. Let's put the discussion about existing standards aside for a moment and focus on the areas of standardization. The bottom line is that standardization is the way of generalizing information, a process of making it more abstract in order for it to be more multipurpose in predefined technical boundaries. Some of the elements of abstraction in service-orientation boundaries that we have already mentioned during the discussion of Loose Coupling are as follows:

Do not reveal in your service contract the specifications of your technical platform (such as the coding language, SDK's details, and XDK properties)

Do not expose details regarding your underlying resources (such as the DB structure, constraints, and especially the foreign keys)

Be reasonably reserved regarding the service-composition members that comprise your service

Why would you do that? It is because of the same reasons we mentioned while discussing the previous principle. Excessive information can provoke negative coupling to service resources, making the service less adaptive and reducing its reusability options.
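The abstraction rules above can be sketched in a few lines of Python. The `CustomerRow` layout, its key columns, and the `CustomerMessage` shape are invented for illustration; the point is only that the message exposed through the contract carries business data and leaves storage details (such as foreign keys) behind the contract.

```python
from dataclasses import asdict, dataclass


@dataclass
class CustomerRow:
    """Internal storage record (hypothetical table layout)."""
    cust_pk: int      # primary key, internal
    region_fk: int    # foreign key, internal and not for consumers
    full_name: str
    email: str


@dataclass(frozen=True)
class CustomerMessage:
    """What the contract exposes: business data only."""
    customer_id: str
    name: str
    email: str


def to_contract_message(row: CustomerRow) -> CustomerMessage:
    """Map the internal record to the contract message, dropping DB details."""
    return CustomerMessage(
        customer_id=f"C{row.cust_pk:06d}",  # public identifier, not the raw key
        name=row.full_name,
        email=row.email,
    )
```

If the table is later split or the foreign key is renamed, only the mapping function changes; consumers bound to `CustomerMessage` are not affected.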

For example, you have a lot of useful functions in your service logic. Obviously, you can fall into the trap of promising extra features in addition to the already agreed one. (Okay, not you, your new project manager.) It literally costs almost nothing at the beginning. Most probably, it will not even affect the level of standardization of your contract at first glance, which is shown as follows:

Who can give you a guarantee that the business logic encapsulated in your service will not change tomorrow, and that an auxiliary declared operation will not become a burden or even an unwanted shortcut in the business process? How about the number of consumers who become dependent on your extra feature? Migration in SOA is not an easy task, even with certain SOA patterns applied.

On the other hand, even in a relatively static business ecosystem, this new feature could become so popular that all of the hardware power dedicated to your service scope will be consumed by only this one operation.

So, do not promise anything and keep everything for ourselves? Let's not blow this out of proportion. SOA is full of promises; it was designed in this way, and luckily, we have enough methods to keep these promises. If service capabilities (that is, operations) are correctly planned from the beginning and used unevenly, then maybe we have put too much on a single service's plate. What is the functional scope of this service? If this service handles one single business entity (such as invoice), then all our operations should be bound to its functional context, which is abstracted to the level of a functionally completed environment. You would hardly keep salt, sugar, and flour in one jar in your kitchen just because all of them are white. Still, it's rather amazing how this simple thing called granularity is neglected in the real life of service development.

Functional granularity is based on the understanding of service models. Entity services that are already mentioned are the first and closest abstracts to the atomic data representations in an enterprise, for example, invoice, order, cargo unit, and customer. All operations would be naturally based on the DB CRUD model but not limited by them. The number of truly unique entity services is rarely more than 20 in any enterprise. The functional granularity here is usually based on the OLAP/OLTP segregation:

Online transaction processing (OLTP) as very short, real-time, CRUD-like operations with high demands for response time are naturally the primary capabilities of the entity services, and their operational time slot is frequently within the standard business hours (that is, 08:00-17:00)

Online analytical processing (OLAP) operations are not that demanding when it comes to response times, but data volumes are usually higher and operational time slots are either evenly distributed around the clock or tend to be close to the regular nightly batch-operations time.

As you can see, mixing them together would not be a good idea if we have an overlapping operational time slot. The possible conflict between high volumes and high throughput will require your attention at the very early stages of service modeling. Should we abstract OLAP operations to DWH-specific services?
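A quick way to spot the conflict during service modeling is to compare the planned operational time slots. The sketch below assumes slots that start and end on the same day (a nightly batch crossing midnight would need extra handling); the slot values are examples, apart from the 08:00-17:00 business hours mentioned above.

```python
from datetime import time


def slots_overlap(a_start: time, a_end: time, b_start: time, b_end: time) -> bool:
    """Return True if two same-day time slots share any time (ends exclusive)."""
    return a_start < b_end and b_start < a_end


# OLTP operations during standard business hours.
OLTP_SLOT = (time(8, 0), time(17, 0))

# Two hypothetical OLAP slots: an afternoon report and an evening batch.
AFTERNOON_REPORT = (time(15, 0), time(19, 0))
EVENING_BATCH = (time(20, 0), time(23, 0))

report_conflicts = slots_overlap(*OLTP_SLOT, *AFTERNOON_REPORT)
batch_conflicts = slots_overlap(*OLTP_SLOT, *EVENING_BATCH)
```

Under these assumptions, the afternoon report competes with OLTP traffic and is a candidate for a separate service, while the evening batch is not.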

The second service model is the utility that usually presents the most reusable and supplemental logic, consumed by all other services. The level of functional abstraction here is really high and business-independent. Your transformation, translation, or measure-unit conversion services are typical representatives of this model. The level of functional granularity can be easily defined and operations can be tuned for high-usage demands. Migration issues are not that frequent here, so functional abstraction is fairly simple.

The last model or task service is what we usually know as workflow, which is the composition of other services combined together in order to fulfill one single task such as OrderProcurement and BookingRequest.

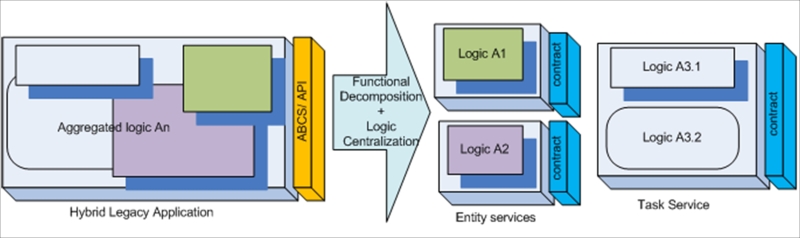

Distinctive properties of this service model comprise one task, one operation, and one business context. Functional abstraction should not be a big puzzle, but still we can see a lot of misinterpretation caused by the deceptive simplicity of modern development tools, providing neat visualization of service compositions, plus very mature resource adapter frameworks starting from order fulfillment (many thanks to Oracle for providing an extremely detailed Fusion Order Demo (FOD), available at http://www.oracle.com/technetwork/developer-tools/jdev/learnmore/fod1111-407812.html). An enthusiastic developer can soon include Invoice, Booking, and General Ledger flows into one monster. Entity services are commonly neglected, as we do not need them anymore; a DB adapter can provide us with the perfect result in five clicks. Additional interfaces that were constructed while composing this task service can be easily exposed to external consumers. The problem here is that this service is not a task anymore; it's a hybrid with the worst possible functional granularity, combining business-specific and business-agnostic capabilities. A thorough application of the abstraction principle from the very beginning could prevent this problem.

The deceptive simplicity of development can hoodwink developers, who are left alone without architectural guidance. This fact provokes developers to use it everywhere, whether it's appropriate or not. Some industry-specific forums and advisory boards quite often produce rather vague frameworks and business process specifications that are in fact not more than business heat maps. Following them too directly can easily result in such hybrid services with unclear abstraction levels.

Data granularity is the next level of granularity we should take into consideration when applying the abstraction principle. Processing one single order line or a complete bunch of orders received in one message makes a real difference in practice; however, in your design of an Order XSD, all that it takes is to set maxOccurs to greater than 1 for the Order node right under the OrderHeader element.

The data constraints granularity, or constraints granularity in general, is the next logical level of granularity. Again, talking about XSD, you could be really restrictive with your data type definitions where necessary by declaring a simple type using XSD patterns, as follows:

| Fine-grained | Coarse-grained | |
|---|---|---|
| `<xsd:simpleType name="imageType"> <xsd:restriction base="xsd:string"> <xsd:pattern value="(.)+\.(gif\|jpg\|jpeg\|bmp)"/> </xsd:restriction> </xsd:simpleType>` | xsd:string | xsd:any |

Here, you want to be sure that the image's filename provided in a message is safe (at least not executable). This could not be achieved by the most popular xsd:string data type alone in the service contract. The xsd:any element is at the upper level in this hierarchy; its OOP equivalent is Object. All these levels of abstraction have full rights to exist, but you must clearly realize which part of your SOA infrastructure should employ these different levels of granularity. The other means of controlling data granularity already mentioned are minOccurs, maxOccurs, and nillable, which are applicable for the elements and xsd: attributes. The terms usually used for different levels of detailing are fine-grained and coarse-grained granularity, and they are quite self-explanatory. The levels of declared granularity directly impact the location of the service-processing logic. This means that with a more fine-grained XSD, you will put more processing demands on the contract's message processing logic, that is, the XDK marshalers (serializers). With a more coarse-grained XSD, you inevitably push a big chunk of message parsing and validation logic into the service's component logic behind the contract. It could also make the service difficult to test, as a highly abstracted contract will not reflect any changes that are supposed to be presented to the consumer.
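To make the trade-off concrete, here is a small Python sketch of what happens when the contract only says xsd:string: the filename check has to live in the service logic. The regular expression is the one from the imageType pattern; the function name is hypothetical. XSD patterns match the whole value, so `re.fullmatch` is used as the closest equivalent (XSD and Python regex dialects differ in general, but this particular pattern behaves the same way in both).

```python
import re

# Same expression as the xsd:pattern of the fine-grained imageType.
IMAGE_NAME = re.compile(r"(.)+\.(gif|jpg|jpeg|bmp)")


def is_valid_image_name(value: str) -> bool:
    """Check a filename the way the fine-grained contract would."""
    return IMAGE_NAME.fullmatch(value) is not None


checks = {
    "logo.gif": is_valid_image_name("logo.gif"),
    "setup.exe": is_valid_image_name("setup.exe"),
    "photo.jpg.exe": is_valid_image_name("photo.jpg.exe"),
}
```

With the fine-grained type in the XSD, this check is performed by the message parser before the service logic is reached; with the coarse-grained type, every service that accepts the message has to repeat it.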

Other abstractions include the abstraction of technical details hidden behind the service contract, such as the programming language, which aims to increase the federation of our heterogeneous service infrastructure. Abstracting contract-related parts of the SLA, such as quality of service, availability information, and performance metrics, also helps to standardize service profiles within a service inventory.

We have deliberately put aside the security considerations related to the abstraction principle until now. By declaring more precise data types, you could reveal technical information necessary for data-oriented attacks. By abusing data type cast features, an attacker could trigger an error that reveals the internal data structures associated with the element (the point of the attack) and then exploit them. An element with the type <any> reveals nothing, but at the same time allows a consumer to send any type of data, including harmful code. With such a high level of abstraction, presenting the service contract with the operation Process and data model Any, you literally open the door for all kinds of parser-related attacks, memory leaks, and buffer overflows. Possible ways of balancing the granularity and abstraction levels for services that operate on different technology layers will be discussed further in Chapter 7, Gotcha! Implementing Security Layers.

The ultimate purpose of implementing the principles in SOA is to increase the service composability options as a direct method of increasing ROI. A less abstract service contract, where more information is revealed, tends to be more attractive for developers, as it is easier to interpret.

The implementation of this principle directly affects SOA characteristics such as composition centricity and vendor neutrality. This principle directly supports Loose Coupling. Abstraction from excessively expressed technical details will certainly increase the business value of the service (business-driven).

The first three fundamental principles combined together will lead us to the declaration of the first really tangible design principle, that is, reusability. One can say that this is the essence of all the SOA principles. Still, we will not crown it above all others, as it cannot be maintained alone without proper foundation of the first three. In the book dedicated to the Java EE enterprise architecture, Sun Certified Enterprise Architect for Java EE Study Guide (2nd Edition) by Mark Cade, Humphrey Sheil, Prentice Hall Publishing, this principle is not included in the requirements for the component's architecture. The first three include performance, scalability, and reliability, and that's absolutely true. No one needs reusable components that are unreliable and cannot perform as intended.

We just have to realize that these illities here are applied to the service logic, presented by Java components. If it's not reliable, and we would like to put that first, we must not present this logic as a public service. In traditional component architecture, the reusability support is delegated to the integration layer. In SOA, we strive to make services reusable by means of the following:

Defining the standard contract, exposing canonical data and canonical operations.

Making internal service logic more universal (another synonym for abstract) and suitable for reutilization by other services. As discussed earlier, only one service model is allowed to be highly specific, that is, task, as a composition of other services, fulfilling the specific operation.

Preventing negative couplings by promoting the technical contract as only one way of accessing the service logic.

The level of reusability is really easy to assess: just count the number of compositions where this service participates. The implementation of this principle directly promotes composability and the enterprise's centricity. Let's now look at the two pure technical principles that support reusability.
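The counting exercise can be sketched as follows. The composition names OrderProcurement and BookingRequest come from the earlier discussion of task services; InvoiceSettlement and the membership lists are invented for illustration.

```python
from collections import Counter

# Hypothetical inventory: each composition lists the services it invokes.
compositions = {
    "OrderProcurement": ["Order", "Customer", "Invoice", "UnitConversion"],
    "BookingRequest": ["Customer", "CargoUnit", "UnitConversion"],
    "InvoiceSettlement": ["Invoice", "Customer"],
}


def reuse_counts(inventory: dict) -> Counter:
    """Count in how many compositions each service participates."""
    counts = Counter()
    for members in inventory.values():
        counts.update(set(members))  # a service counts once per composition
    return counts


counts = reuse_counts(compositions)
```

In this made-up inventory, Customer participates in three compositions, Invoice and UnitConversion in two, and Order and CargoUnit in one each.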

A service can maintain the required level of reliability (measured by MTBF as time, or as an availability percentage) necessary for consistent reuse only if it can possess and control its own underlying resources. Database, file objects, physical realization of the service logic, and so on should not be shared or delegated to other services. The service should be perceived as an atomic unit of concrete logic, functioning in a dedicated technical environment. In this case, the service behavior will be predictable, fulfilling scalability requirements, and making it possible to relocate the service into a similar technical environment with reasonably low effort. This last illity is highly desirable for a cloud-based implementation.

Unfortunately, this principle is probably the hardest to implement. We all know that most commonly used databases are shared resources. License costs, bundles of legacy applications, common network infrastructure, and so on are the reasons why this principle is very hard to achieve without significant investment or considerable maintenance efforts.

This principle is quite often mistaken for Loose Coupling. Indeed, they are very similar with regard to the negative impact on service reusability, although we can draw a distinct line between them as follows:

Loose Coupling is the ratio between interactions passing through the service contract from the consumer to the service resources (relatively positive coupling) and interactions bypassing the contract (negative coupling). In fact, a service is always coupled if it's in use, positively and negatively. A service-oriented architecture based on components is more prone to negative coupling, as component APIs are more technology-specific.

Service autonomy is the measure of service independence. A business usually has quite a limited number of data warehouses (DWHs), usually one per business domain and ideally only one. Therefore, all analytical services (InvoiceHistory, OrderHistory, and so on) using a single DWH DB will not be autonomous. Present them as one service (this is not advice), move it into a private cloud, and you will get a perfectly autonomous service. Now the question is the price.
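Both measures can be approximated with very little code. The sketch below is one possible reading of the two definitions, not an established metric: the coupling ratio is taken as the share of interactions that pass through the contract, and a service is treated as non-autonomous when it shares a resource with another service. The interaction counts and the resource map are invented, except for InvoiceHistory and OrderHistory sharing one DWH.

```python
from collections import defaultdict


def contract_ratio(via_contract: int, bypassing: int) -> float:
    """Share of interactions that go through the service contract."""
    total = via_contract + bypassing
    if total == 0:
        raise ValueError("service has no recorded interactions")
    return via_contract / total


def shared_resources(resource_usage: dict) -> dict:
    """Return resources used by more than one service, with their users."""
    users = defaultdict(set)
    for service, resources in resource_usage.items():
        for resource in resources:
            users[resource].add(service)
    return {res: svc for res, svc in users.items() if len(svc) > 1}


usage = {
    "InvoiceHistory": {"DWH"},
    "OrderHistory": {"DWH"},
    "UnitConversion": {"ConversionTable"},
}
not_autonomous = shared_resources(usage)
```

Here the two history services show up as sharing the DWH, while the utility service with its own table does not appear in the result.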

The implementation of this principle directly promotes the composition and enterprise centricity and vendor neutrality.

There are no negative impacts on other principles, but as we have said, true service autonomy is the nirvana that is really hard to reach.

This is the second technical and very tangible design principle that supports service reusability.

It's defined as an ability of a service to maintain low-resource consumption when needed, namely between service activities, while waiting for a response, and so on.

At first glance, it's more applicable to the long running asynchronous services, which could run for days or weeks. The deferring service state is vital here. We have to store execution scope variables and preinvocation data in a special database with all necessary information for waking it up when the response arrives. In this Hibernation DB (dehydration store in Oracle terms), we will have a chain of defer-awake records that are equal to the number of asynchronous invocations. Moving further, we can defer the information at any stage of the long running process for legal or compensative activities. This type of storage is compulsory for all task-orchestrated services and is usually provided centrally by an orchestration platform.
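The defer-awake cycle can be sketched in Python as follows. This is only an in-memory illustration of the idea, not how Oracle's dehydration store is implemented; the `HibernationStore` class, the step names, and the order identifier are all hypothetical.

```python
import json


class HibernationStore:
    """In-memory stand-in for a state deferral (hibernation) database."""

    def __init__(self) -> None:
        self._records = {}

    def defer(self, correlation_id: str, state: dict) -> None:
        """Persist the execution scope so the instance can release memory."""
        self._records[correlation_id] = json.dumps(state)

    def awake(self, correlation_id: str) -> dict:
        """Restore the stored scope when the asynchronous response arrives."""
        return json.loads(self._records.pop(correlation_id))

    def pending(self) -> int:
        """Number of instances currently waiting for a response."""
        return len(self._records)


def start_order(store: HibernationStore, order_id: str) -> None:
    """Invoke a long-running partner asynchronously, then defer the state."""
    state = {"order_id": order_id, "step": "awaiting_credit_check"}
    store.defer(order_id, state)


def on_response(store: HibernationStore, order_id: str, approved: bool) -> dict:
    """Wake the instance up and continue from the stored state."""
    state = store.awake(order_id)
    state["step"] = "approved" if approved else "rejected"
    return state
```

The correlation identifier is what links the response to the deferred record; between `start_order` and `on_response`, the process holds no memory beyond the stored record.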

This fact makes all task-orchestrated services far less autonomous than other service models. Surely, you can implement individual partial state deferral for every task-orchestrated service (task service hosted within an orchestration platform), making them ultimately autonomous. In that case, we truly admire the grandiosity of your project's budget, not to mention the infrastructure and support.

Asynchronous services are not the only ones that need to maintain their state. A poorly designed synchronous service with a lot of global variables, excessive looping or branching logic, and a lot of calls to underlying storage resources will consume a lot of memory and CPU. There is no remedy for this scenario that is provided by the SOA technology platform; you can only rebuild it from scratch. You could technically turn this service into an asynchronous process and set the queues as transport means; however, that's not what consumers expect, and the level of potential reuse drops considerably. Only services with predictable state management will have predictable behavior and scale well when necessary.

This principle addresses the same benefits as autonomy but has negative impact on the autonomy itself.

Despite its obvious meaning, this is arguably the most-neglected principle. The main reason for such negligence is the misunderstanding of service governance boundaries. For some, governance starts after the service deployment process in production. Although it's been said many times before, we would like to repeat it again—governance starts long before the first line of code is written. You must plan for the following:

Which service trace records will be left in your audit/trace log under the different logging settings

How service activities will be perceived by different operational policies

How a service could be dynamically invoked by different consumers and controllers

These items among many others form the so-called runtime discoverability. It is in your best interests to expose your service to all who can potentially use it. This is possible if you follow these points:

Service operations and functional boundaries are well defined according to the service contract

Service particulars presented in the form of service metadata help everyone understand possible service runtime roles, model, composability potential, and limitations

Quality of service information describing service availability, reliability, and performance is guaranteed

Supplementary information regarding all test results and test conditions are in support of declared performance

Policy standards to which services adhere to mandatorily or optionally

All these items are elements of design-time discoverability. As part of governance efforts, special layers of service infrastructure will be established in order to support these two types of discoverability. We will dedicate a whole chapter to this challenge, as we reckon lack of discoverability is probably the main problem in the implementation of SOA. However, even if a technical platform is capable of supporting dynamic discovery and invocation, and the service metadata storage is full of service details down to the particular engines in use and rule types employed, we could still jeopardize potential reuse by keeping interpretability of discovered information below the comprehension level. Information that is too abstract (see the Implementation of Service Abstraction principle) will prevent the demonstration of full service potentials. A methodology in support of service taxonomy and metadata ontology (discussed in Chapter 5, Maintaining the Core – the Service Repository in great detail) will be not only established, but also conveyed to all individuals responsible for SOA governance from the very beginning to the end.

How do we measure service discoverability? What questions should you ask your developers and architects (including yourself) in order to understand the level of principle adoption? Refer to the following questions:

Do we have an inventory for all enterprise assets acting as service consumers and service providers? In other words, what are the enterprise/domain boundaries?

Do we have individual service profiles?

What are the key elements of service metadata available from the service profile that we will use for a service lookup?

From which service infrastructure layers will we perform the lookup and for what purpose? In other words, who is allowed to discover this information and when (security)?

Can we perform a reverse search, that is, find the metadata by service?

How will these metadata elements be presented in a service message, in which parts, and at what level of detail?

Are these metadata elements in the service messages covered by the existing SOA standards? Can we keep them vendor-neutral and minimalistic?

Believe it or not, a simple Excel spreadsheet will do the trick, and the explanation will be provided shortly. How many times have we witnessed the situation when without a well-structured and understandable taxonomy, even the most powerful harvesters (SOA artifacts introspection tools) with marvelous graphical representation of discovered relations just turn the situation from bad to worse?
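To show how little is needed for a first inventory, here is a minimal Python sketch of service profiles with a lookup by metadata and a reverse lookup by service. The profile fields, the tags, and the sample services are assumptions made for illustration; a real taxonomy would be agreed upon as part of governance.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ServiceProfile:
    """One row of the inventory (hypothetical set of metadata fields)."""
    name: str
    model: str                      # "entity", "utility", or "task"
    operations: list
    tags: set = field(default_factory=set)


inventory = [
    ServiceProfile("Invoice", "entity", ["create", "read", "update"], {"finance"}),
    ServiceProfile("UnitConversion", "utility", ["convert"], {"shared"}),
    ServiceProfile("OrderProcurement", "task", ["process"], {"finance", "orders"}),
]


def find_by_tag(profiles: list, tag: str) -> list:
    """Lookup: which services carry this metadata element?"""
    return [p.name for p in profiles if tag in p.tags]


def metadata_for(profiles: list, name: str) -> Optional[ServiceProfile]:
    """Reverse lookup: which metadata is registered for this service?"""
    for profile in profiles:
        if profile.name == name:
            return profile
    return None
```

The same two questions can be answered with spreadsheet filters; what matters is that the columns (the taxonomy) are agreed upon and understood before any tooling is introduced.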

This principle undoubtedly supports all four desirable SOA characteristics. This principle conflicts with the service abstraction and can negatively impact security when poorly implemented.

Finally, we come to the last principle that completes the foundation of SOA. This principle is in fact the paramount realization of SOA, as it's the closest thing to money, the universal entity that is understandable by any members of an enterprise.

Next, we compose and recompose the new business applications and processes out of the existing building blocks; the less we waste, the more we gain through reuse. However, is there any overlap between composability and the reusability principles discussed before? Yes, but only at first glance, as the key here is in the measure of "waste" we would like to prevent. Almost everything in IT could be reused; the question is how much effort (time) it could cost for doing this.

The composability principle defines the measure of how easily any service from a particular service inventory could be involved in a new composition, regardless of the composition's size or complexity. Of course, a service should be involved in the operations it was designed for and in the roles it can support. Thus, the common quantifier for this principle is time. When designing a service from the very beginning, you should speculate on how hard it would be to implement composed capabilities using your service along with others, and how many compositions a single instance of your service can support from the performance standpoint. Yes, the statement regardless of the composition's size or complexity has its own limits, and these limits will be thoroughly tested during the unit stress test and properly documented in the individual service profile.

The quantification of this principle is roughly similar to the measure of flexibility of hub-and-spoke integration platform. For hub-and-spoke, with all enterprise applications connected to it, you have the canonical data models for all entities presented by the application. When a new application with its own data representation arrives, all that you need is to perform the following:

Transform the newly received application data model to a canonical model

Establish routing rules for message flows in a hub for this application

For simplicity, we assume that a unified canonical protocol is in place. With this simplistic model, we can estimate that a new XSLT (actually, two of them) with a reasonable level of complexity could be built in four hours, and the routing rules in a static table can be maintained in another two. Allocate a day for testing and we are ready for production in less than 16 business hours! Theoretically. Let's now recall how reality typically bends our plans.
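The optimistic estimate above is simple arithmetic, spelled out here as a sketch. It assumes that the four hours cover both transformations and that one day of testing means eight business hours; the 160-hour business month used for comparison is also an assumption.

```python
HOURS_PER_BUSINESS_DAY = 8       # assumption
HOURS_PER_BUSINESS_MONTH = 160   # assumption: 20 business days

# Optimistic plan taken from the text.
optimistic_plan = {
    "transformations (XSLT)": 4,
    "routing rules": 2,
    "testing (one day)": 1 * HOURS_PER_BUSINESS_DAY,
}
optimistic_total = sum(optimistic_plan.values())

# A duration of two to six months, converted to business hours.
realistic_range_hours = (
    2 * HOURS_PER_BUSINESS_MONTH,
    6 * HOURS_PER_BUSINESS_MONTH,
)

# How many times longer than the optimistic plan such a duration is.
overrun_factors = tuple(h / optimistic_total for h in realistic_range_hours)
```

Under these assumptions, the plan adds up to 14 business hours, comfortably below the 16-hour mark, which is exactly why the estimate looks so convincing on paper.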

| No. | How do we see it | What it means |
|---|---|---|
| 1 | Yes, it's not a problem to build XSLT or XQuery in four hours. Obtaining the mapping instruction and understanding the meaning of field names and data types can take week(s). | Discoverability and Abstraction |
| 2 | Not all data elements needed for CDM and other applications in the farm are available via a public API. | Standardized contract |
| 3 | To extract necessary data, we have to bypass the API and reach out for internal resources. | Loose Coupling |
| 4 | The extraction of additional data inevitably implements call-back interchange patterns, which are not always positive. This could disrupt performance of the main app functions and put some logic outside the app boundaries. | Autonomy |
| 5 | Quite often, internal logic of a new application is more complex than what a simple request-response interchange pattern can provide, thus requiring the hub atomic transaction coordinator's (ATCs) capabilities. We have to put more logic on the hub. | Loose Coupling |

This shortlist of five items is only the tip of the iceberg; usually, it's more than a page long. The bottom line is that the total time required for making a new application composable via the hub-and-spoke approach is usually from two to six months.

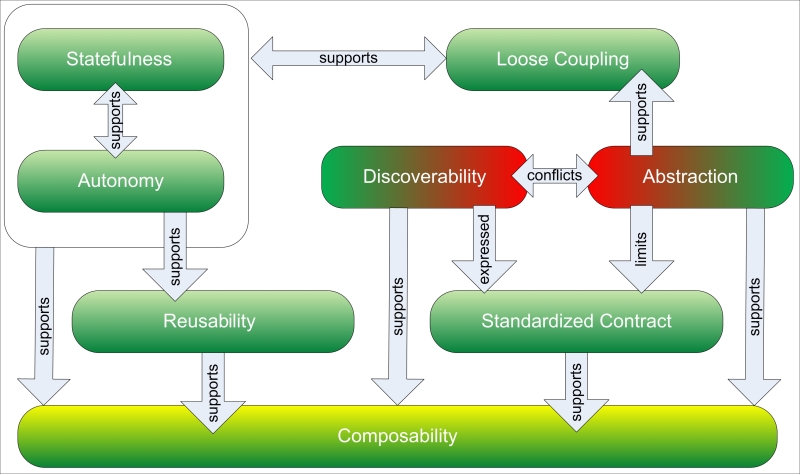

With the service-oriented approach, the result will be quite similar if any of the previously discussed principles are neglected or put off-balance. The following figure explains the general relations between principles and their importance for composability. Loose Coupling and Abstraction, together with Composability, are the regulatory principles, shaping and governing the implementation of the others. Of these, only Abstraction is difficult to quantify, although it can still be done by assessing the amount of message-processing logic in the marshaler/contract parser and in the core service logic.

Statefulness and Autonomy are purely technical principles and directly affect Reusability. It would be quite right to say that Reusability and Discoverability together have a major impact on Composability, but the other principles must also be accounted for.

We would like to advise you to keep this relation matrix in front of you every time you are given the task to analyze an existing design or propose a new one based on expressed illities, or analyze what's behind the illities, which is promoted as design guidance. It will prevent you from establishing rules that are too vague for understanding and following. For the final exercise dedicated to principles alone, let's get back to our list of top ten generic wishes and analyze the sixth item; refer to the following table:

| Designed for testing | Meaning | Requirement |
|---|---|---|
| Valid (we are testing what we are supposed to test) | Service / Component APIs can be easily exposed to any existing testing tool (JMeter, SoapUI, LoadUI, and so on) and all the test operations can be generated with low effort. | Standardized Contract |
| Verifiable (we must be able to recreate the results) | Test results achieved in one environment (JIT, for instance) can be easily recreated in any other environment in the testing hierarchy. This means no surprises in production! | Autonomy, Statefulness |
| Reliable (we will trust test results) | Acceptable service characteristics achieved initially must not be compromised by later amendments or implementations that break the service's technical consistency. | Autonomy, Statefulness, Loose Coupling |
| Comprehendible (we must be able to understand the results) | Test results, sometimes together with test suites, must be stored in a place where they can be reached, accessed, and comprehended by any concerned party, and re-implemented if necessary. | Discoverability (Beware of Abstraction) |

This simple exercise demonstrates that there's neither any need to invent new principles out of wishes or abstract illities, nor to multiply unnecessary entities:

Entia non sunt multiplicanda praeter necessitatem

-- Ockham

Principles here act as precise technical guidance. Elaborate lists of more than 50 items, produced as a collective effort of several departments in some enterprises, usually leave only one question: how are you supposed to enforce all of them? A principle is a direct order, and it is only good if you can control its fulfillment.

For good measure, may we suggest that you repeat this exercise for the remaining nine requirements in that list?

The deceptive simplicity of SOA as an architectural approach made it attractive twelve years ago, and this deception (actually, a misunderstanding) caused its downfall after two to three years of initial implementations. Initially, the idea was pure and simply brilliant; it rested on the following:

Quickly maturing XML, as the most universal standard and foundation for practically everything: messaging, transformations, protocols, data representation, you name it

Emergence of web services, as the next logical step in object-orientation and procedural programming with the highest level of encapsulation and a standard detachable API expressed via the WSDL contract

SOAP, presented in 1998, promised a transport-independent (to a certain extent) messaging protocol that was simple enough to gain popularity in the blink of an eye

In addition, the UDDI standard, employing lots of XML features, was also presented in order to support service discoverability. Thus, we got our first so-called Contemporary SOA representation in the shape of a triangle: Service Consumer (Sender), Service Provider (Receiver), and Service Registry (UDDI) shown as follows:

The message is always a SOAP-based synchronous request/response; the contract is a WSDL with the number of operations abstracted to a reasonable minimum. The Service Consumer is displayed in a different color in order to stress the fact that it doesn't have to be a web service. This means that a service can be called from any program, interface, or device, as long as the WSDL can be understood and the SOAP request-response can be supported.
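The three roles of the triangle can be sketched in a few lines of plain Java. This is only an illustration under loud assumptions: the in-memory map stands in for a UDDI registry, a `Function<String, String>` stands in for a WSDL-described operation with SOAP payloads, and the names `RegistrySketch`, `publish`, `find`, and `invoke` are hypothetical and do not belong to any real registry API.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// In-memory stand-in for the registry corner of the triangle (UDDI in the text).
final class RegistrySketch {
    // Service name -> provider operation (request payload in, response payload out).
    private final Map<String, Function<String, String>> providers = new ConcurrentHashMap<>();

    // Provider side: publish an operation under a discoverable name.
    void publish(String serviceName, Function<String, String> operation) {
        providers.put(serviceName, operation);
    }

    // Consumer side, step 1: find a provider by name.
    Optional<Function<String, String>> find(String serviceName) {
        return Optional.ofNullable(providers.get(serviceName));
    }

    // Consumer side, step 2: bind to the provider and invoke it synchronously.
    String invoke(String serviceName, String request) {
        return find(serviceName)
                .orElseThrow(() -> new IllegalStateException("No provider published for " + serviceName))
                .apply(request);
    }

    public static void main(String[] args) {
        RegistrySketch registry = new RegistrySketch();
        // The provider publishes; the consumer is just a plain program, not a service.
        registry.publish("OrderStatus", orderId -> "Order " + orderId + ": SHIPPED");
        System.out.println(registry.invoke("OrderStatus", "42"));
    }
}
```

In a real deployment the registry would return an endpoint address and a contract rather than the operation itself; the sketch collapses that step to keep the publish, find, and bind sequence visible.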

This model will work (and more importantly, it works) perfectly within designated boundaries for simple compositions or compositions based on multiple sequential invocations. We will discuss the obvious limitation of the contemporary model shortly; now, let's focus on the core technologies that underlie all SOA models.

As stated before, we do not intend to cover all SOA standards, as we simply have no room for this in a single chapter. We will briefly touch only on those that we will be using in the following chapters. The book Web Service Contract Design and Versioning for SOA, Prentice Hall Publishing, by Thomas Erl, could be a good reference in addition to the web resources from standard authorities for SAML, OAuth, and so on. This section is just a technical recap of the absolute bare minimum requirements to link previous paragraphs dedicated to SOA principles with the soon-to-arrive SOA design rules based on patterns applied in particular SOA frameworks.

As an experienced architect, you are certainly quite familiar with all the technologies we will briefly touch on now.

XML is the foundation of most standards and applications, not only SOA. Please note that you do not have to use XML to make your platform service-oriented and achieve the goals of service-orientation, but you will find it rather difficult not to do so. You would have to replace a sizeable number of movable and static elements of your infrastructure (configuration files, interchange messages, transformation mappings, contracts, and so on) with similar but older formats such as CSV, EDIFACT, and X12, with lots of unexpected consequences. Modern standards such as JSON are also not entirely XML-free. So, we would like to suggest something for your own architectural benefit. Please take the simple W3Schools XML quiz (http://www.w3schools.com/xml/xml_quiz.asp); it will only take five minutes. If your score is less than 100 percent, we suggest you refresh your knowledge by reading a good XML book.

If a service is the atomic building block of the whole SOA, then web services are the most popular variant of these building blocks. The reason for this lies in the object/XML serializer, which is a native part of any WS and the link between a detached WSDL-based service contract and the core service logic. For the Oracle platform (but not only there), which quite naturally employs Java marshaling/unmarshaling, the Java-WS (or JWS) technology would be based on one of the following serialization APIs:

Java Architecture for XML Binding (JAXB). It exists in multiple implementations, which are not always fully compatible.

A more advanced JiBX. This can inject the conversion code directly into Java classes during the post-compilation process, and by doing so, improve the performance considerably when compared to JAXB. Also, it has its own runtime-binding component.

Simplified mapping-free version of marshaler: XStream.

JAXB is still the most popular one because of the number of characteristics it offers:

Runtime message validation

XPath-oriented

No post compilation required for code injection

Can support very complex message structures

The JAXB API is part of Java SE and EE package bundles, but still it is better to check for the latest release if performance is an issue. If serialization performance is the major concern, look at the JiBX more closely as it could be up to five times (some claim more) faster.

Here again, the reliability and predictability of parser should be balanced with reasonable performance; otherwise, you will have to rebuild your services from scratch every time the XSD specifications change.

So, in the JWS specification, JAXB is responsible for mapping a Java class to the message's XSD using customizable annotations. Java API for XML Web Services (JAX-WS) is the technology that is responsible for mapping Java parameters to the WSDL declaration. Together, these two specifications define the service endpoint interface (SEI) as the representation of a standardized contract. Of course, just using them alone doesn't guarantee that the contract will be truly standardized, but they are the two essential technical WS specs. The last and the most important part of the WS spec is the web container; it is responsible for performing basic HTTP operations: POST and GET. It's related to the handling of transport protocols, and we will discuss it right away.

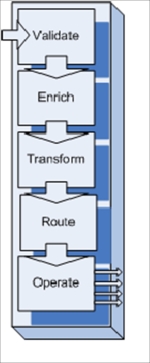

The XML-based SOAP messaging protocol is handled by the web container, typically by a servlet if you need to utilize the HTTP transport protocol, which is most common for JWS. SOAP is a type of XML structure, serving as a container for service message interactions. There are two mandatory parts: soap:Envelope as the root and soap:Body, which acts as the business payload container. Two optional elements can also be present: soap:Header and soap:Fault. Although the header element is optional, its role in providing transport and processing-related metadata is enormous. Most of the WS-* extensions that followed the first publication of the initial WS specs are related to the SOAP headers. Some of them can be equally distributed between the header and the body. For instance, WS-Security naturally relates to both the body and the header via encrypted and signed elements, and WS-MetadataExchange provides and distributes the WSDL-related data necessary for establishing service interactions, which can also be done via the SOAP body.

Most commonly, the Java web container uses a servlet as the front controller, which is responsible for parsing the header elements and invoking the JAXB mapping to Java objects to process the SOAP body.
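The envelope structure described above can be checked with nothing more than the DOM parser shipped with the JDK. The following is a minimal sketch, not what a web container or JAXB actually does: it assumes a SOAP 1.1 message (namespace `http://schemas.xmlsoap.org/soap/envelope/`), and the class name `SoapEnvelopeSketch`, its methods, and the `urn:example:orders` payload namespace are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

final class SoapEnvelopeSketch {
    // Namespace of the SOAP 1.1 envelope (assumption: the message is SOAP 1.1).
    static final String SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/";

    // Parses the message with a namespace-aware DOM parser.
    static Document parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            return factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Message is not well-formed XML", e);
        }
    }

    // Returns the direct soap:* child of the envelope with the given local name, or null.
    static Element soapChild(Document doc, String localName) {
        Element envelope = doc.getDocumentElement();
        if (!SOAP_NS.equals(envelope.getNamespaceURI()) || !"Envelope".equals(envelope.getLocalName())) {
            throw new IllegalArgumentException("Root element is not soap:Envelope");
        }
        NodeList children = envelope.getChildNodes();
        for (int i = 0; i < children.getLength(); i++) {
            Node child = children.item(i);
            if (child.getNodeType() == Node.ELEMENT_NODE
                    && SOAP_NS.equals(child.getNamespaceURI())
                    && localName.equals(child.getLocalName())) {
                return (Element) child;
            }
        }
        return null;
    }

    // Local name of the first element inside soap:Body (business payload or soap:Fault).
    static String payloadName(Document doc) {
        Element body = soapChild(doc, "Body");
        if (body == null) {
            throw new IllegalArgumentException("soap:Body is mandatory");
        }
        for (Node n = body.getFirstChild(); n != null; n = n.getNextSibling()) {
            if (n.getNodeType() == Node.ELEMENT_NODE) {
                return n.getLocalName();
            }
        }
        return null; // empty body
    }

    public static void main(String[] args) {
        String xml = "<soap:Envelope xmlns:soap=\"" + SOAP_NS + "\">"
                + "<soap:Header/>"
                + "<soap:Body><ord:GetOrder xmlns:ord=\"urn:example:orders\"/></soap:Body>"
                + "</soap:Envelope>";
        Document doc = parse(xml);
        System.out.println("Header present: " + (soapChild(doc, "Header") != null));
        System.out.println("Payload: " + payloadName(doc));
    }
}
```

A front controller would perform the same two steps in order: inspect the optional header for processing metadata first, then hand the body payload to the object/XML mapping layer.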

We will discuss UDDI-related protocols as part of contemporary SOA further when we approach the Service Registry architecture.

There are no drawbacks in the contemporary SOA model. Every single technical element is mature and proven to be reliable after a dozen years of evolution and improvements. Actually, it was pretty acceptable from the very beginning, but its broad usage was severely limited by the initial constraints set by the simplified service interaction model: synchronous request-response between a limited number of composition members (usually two). At the time of the first implementations, it was apparent that a substantial number of complex real-life requirements needed to be addressed by the SOA technology platform to make it capable of fulfilling its promises; they are as follows:

There is more than one simple message exchange pattern (MEP). We can count one-way, two-way, and callback MEP types, along with all possible types of acknowledgements (responses), such as mandatory, only on errors, and so on.

Asynchronous MEPs are equally popular and must be covered by the technology platform in a common way. The ability to maintain sync-async communication bridges for complex service interactions was the prerequisite for further SOA proliferation.

Even synchronous service compositions could be far more complex than the basic request-response method with all elements of distributed transactions and two-phase commits requiring a transparent level of transaction coordination.

Long-running transactions also need common and reliable methods of controlling process execution with a lot of callbacks and numerous activation-deactivation phases. First of all, this involves transparent coordination based on the correlation ID and correlation sets, and the ability to compensate unsuccessful transactions.

Services must be able to reliably communicate in cases where we have infrastructure breakdowns or slow responses from other parties.

Service messages must be equipped with information that is sufficient for supporting complex routings and distributions.

Services and service registries are extremely vulnerable to security breaches due to high exposure to potential consumers (implementation of the Discoverability principle). This issue must be addressed with minimal impact on the services' intrinsic interoperability.

These are only the most obvious requirements, which had to be fulfilled in order to make service orientation capable of serving its purpose and achieving the goals we discussed at the beginning of this chapter. It is apparent that all these issues must be addressed in a standard way; otherwise, proprietary implementations will put an end to the federation and vendor neutrality of service environments.
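Two of the requirements listed above, the sync-async bridge and coordination by correlation ID, can be illustrated together. The sketch below is an assumption-laden toy rather than a standard mechanism: the class `CorrelationBridgeSketch` and its methods are hypothetical, the correlation ID is a random UUID, and a timeout simply yields an empty result that the caller could use as a trigger for compensation.

```java
import java.util.Map;
import java.util.Optional;
import java.util.UUID;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

final class CorrelationBridgeSketch {
    // Requests that were sent asynchronously and still wait for their callback.
    private final Map<String, CompletableFuture<String>> pending = new ConcurrentHashMap<>();

    // Registers a pending request; the returned ID would travel in the message header.
    String register() {
        String correlationId = UUID.randomUUID().toString();
        pending.put(correlationId, new CompletableFuture<>());
        return correlationId;
    }

    // Invoked when a callback message arrives; false for unknown, expired, or duplicate IDs.
    boolean onCallback(String correlationId, String response) {
        CompletableFuture<String> future = pending.get(correlationId);
        return future != null && future.complete(response);
    }

    // Blocks the synchronous caller until the callback arrives or the timeout expires.
    Optional<String> await(String correlationId, long timeoutMillis) {
        CompletableFuture<String> future = pending.get(correlationId);
        if (future == null) {
            throw new IllegalArgumentException("Unknown correlation ID: " + correlationId);
        }
        try {
            return Optional.ofNullable(future.get(timeoutMillis, TimeUnit.MILLISECONDS));
        } catch (TimeoutException e) {
            return Optional.empty(); // slow or lost response: caller may compensate
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return Optional.empty();
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pending.remove(correlationId); // late callbacks are rejected from now on
        }
    }

    public static void main(String[] args) {
        CorrelationBridgeSketch bridge = new CorrelationBridgeSketch();
        String id = bridge.register();
        // Simulate the remote party answering on another thread.
        CompletableFuture.runAsync(() -> bridge.onCallback(id, "order accepted"));
        System.out.println(bridge.await(id, 1000).orElse("timed out"));
    }
}
```

The design choice worth noting is that the pending entry is removed once the wait ends, so a callback that arrives after the timeout is reported as rejected instead of being silently accepted.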

Most of the standards in the form of recommendations and profiles are provided by three main standardization committees, as shown in the following table:

| About | OASIS | W3C | WS-I |
|---|---|---|---|
| URL | | | |
| Established | 1993 as SGML Open | 1994 by Tim Berners-Lee | 2002 |
| Approximate membership | 600 | About 390 | 200 |
| Overall goal (as it relates to SOA) | OASIS promotes industry consensus and produces worldwide standards for security, Cloud computing, SOA, web services, Smart Grid, electronic publishing, emergency management, and other areas. OASIS's open standards offer the potential to lower the cost, stimulate innovation, grow global markets, and protect the right of free choice of technology. (From official site) | W3C's primary activity is to develop protocols and guidelines that ensure long-term growth for the Web. W3C's standards define key parts of what makes the World Wide Web work. (From official site) | The Web Services Interoperability Organization (WS-I) is an open industry organization chartered to establish best practices for the web services interoperability. It is for selected groups of web services standards across platforms, operating systems, and programming languages |

|

Delivered Standards/ Specifications |

UDDI, ebXML, SAML, XACML, WS-BPEL, WS-Security |