The technological industry is constantly changing. Although the internet was born only a quarter of a century ago, it has already transformed the way that we live. Every day, over a billion people visit Facebook; every minute, approximately 300 hours of video footage are uploaded on YouTube; and every second, Google processes approximately 40,000 search queries. Being able to handle such a staggering scale isn't easy. However, this book will provide you with a practical guide for deployment philosophy, tooling, or using the best practices of the companies. Through the use of Amazon Web Services (AWS), you will be able to build the key elements required to efficiently manage and scale your infrastructure, your engineering processes, and your applications, with minimal cost and effort. This first chapter will explain the new paradigms of the following topics:

- Thinking in terms of the cloud, and not infrastructure

- Adopting a DevOps culture

- Deploying in AWS

We will now describe a real incident that took place in a datacenter in late December, 2011, when dozens of alerts were received from our live monitoring system. This was a result of losing connectivity to the datacenter. In response to this, administrator rushed to the Network Operations Center (NOC), hoping that it was only a small glitch in the monitoring system. With so much redundancy, we may wonder how everything can gooffline. Unfortunately,the big monitoring screens in the NOC room were all red, which is not a good sign. This was the beginning of a very long nightmare.

As it happens, this was caused by an electrician who was working in the datacenter and mistakenly triggered the fire alarm. Within seconds of this occurring, the fire suppression system set off and released its aragonite on top of the server racks. Unfortunately, this kind of fire suppression system made so much noise when it released its gas that sound waves instantly killed hundreds of hard drives, effectively shutting down the data center facility. It took months to recover from this.

It wasn't long ago that tech companies, small and large, had to have a proper technical operations team, able to build infrastructures. The process went a little bit like this:

- Fly to the location where you want to set up your infrastructure. Here, take a tour of different datacenters and their facilities. Observe the floor considerations, power considerations, Heating, Ventilation, and Air Conditioning (HVAC), fire prevention systems, physical security, and so on.

- Shop for an internet service provider. Ultimately, you are considering servers and a lot more bandwidth, but the process is the same—you want to acquire internet connectivity for your servers.

- Once this is done, it's time to buy your hardware. Make the right decisions here, because you will probably spend a big portion of your company's money on selecting and buying servers, switches, routers, firewalls, storage, UPS (for when you have a power outage), KVM, network cables, labeling (which is dear to every system administrator's heart), and a bunch of spare parts, hard drives, raid controllers, memory, power cables, and so on.

- At this point, once the hardware has been purchased and shipped to the data center location, you can rack everything, wire all the servers, and power everything on. Your network team can kick in and establish connectivity to the new datacenter using various links, configuring the edge routers, switches, top of the rack switches, KVM, and firewalls (sometimes). Your storage team is next, and will provide the much-neededNetwork Attached Storage(NAS) orStorage Area Network(SAN). Next comes your sysops team, which will image the servers, upgrade the BIOS (sometimes), configure the hardware raid, and finally, put an OS on the servers.

Not only is this a full-time job for a big team, but it also takes a lot of time and money to even get there. As you will see in this book, getting new servers up and running with AWS only takes us a few minutes. In fact, you will soon see how to deploy and run multiple services in a few minutes, and just when you need it, with the pay-what-you-use model.

From the perspective of cost, deploying services and applications in a cloud infrastructure such as AWS usually ends up being a lot cheaper than buying your own hardware. If you want to deploy your own hardware, you have to pay for all of the hardware mentioned previously (servers, network equipment, storage, and so on) upfront as well as licensed software, in some cases. In a cloud environment,you pay as you go. You can add or remove servers in no time, and will only be charged for the duration in which the servers were running. Also, if you take advantage of PaaS and SaaS applications, you will usually end up saving even more money by lowering your operating costs, as you won't need as many administrators to administrate your servers, database, storage, and so on. Most cloud providers (AWS included) also offer tiered pricing and volume discounts. As your service grows, you will end up paying less for each unit of storage, bandwidth, and so on.

As you just saw, when deploying in the cloud, you only pay for the resources that you are provided with. Most cloud companies use this to their advantage, in order to scale their infrastructure up or down as the traffic to their site changes. This ability to add or remove new servers and services in no time and on demand is one of the main differentiators of an effective cloud infrastructure.

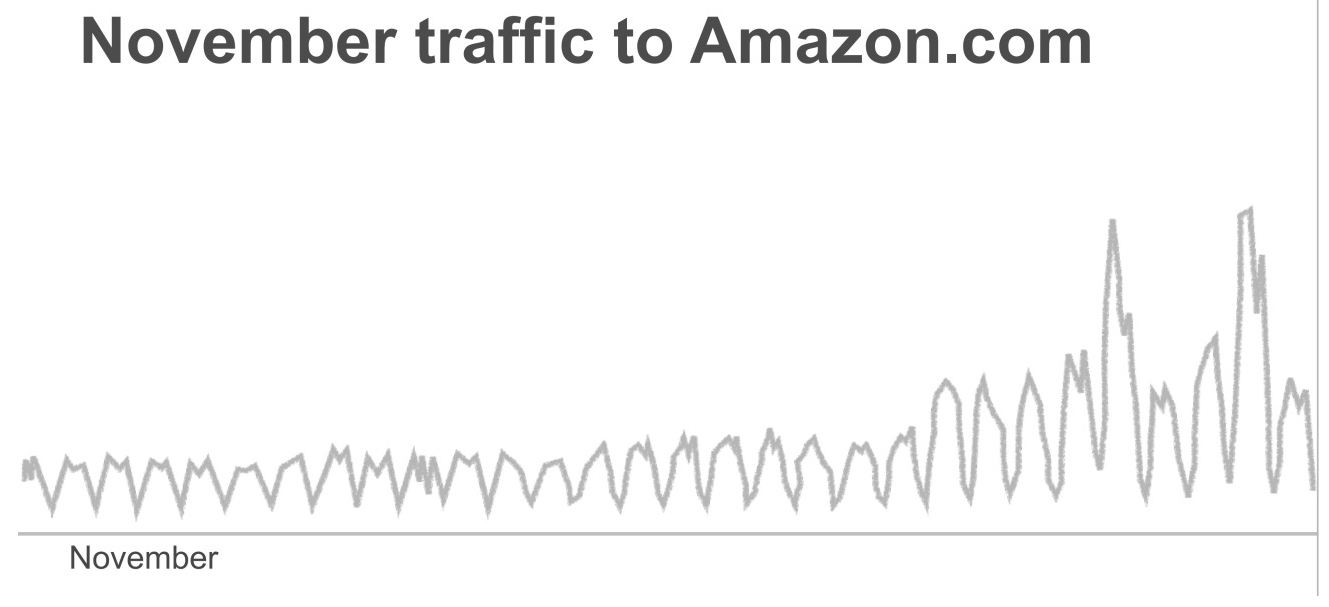

In the following example, you can see the amount of traffic at https://www.amazon.com/ during the month of November. Thanks to Black Friday and Cyber Monday, the traffic triples at the end of the month:

If the company were hosting their service in an old-fashioned way, they would need to have enough servers provisioned to handle this traffic, so that only 24% of their infrastructure would be used during the month, on average:

However, thanks to being able to scale dynamically, they can provide only what they really need, and then dynamically absorb the spikes in traffic that Black Friday and Cyber Monday trigger:

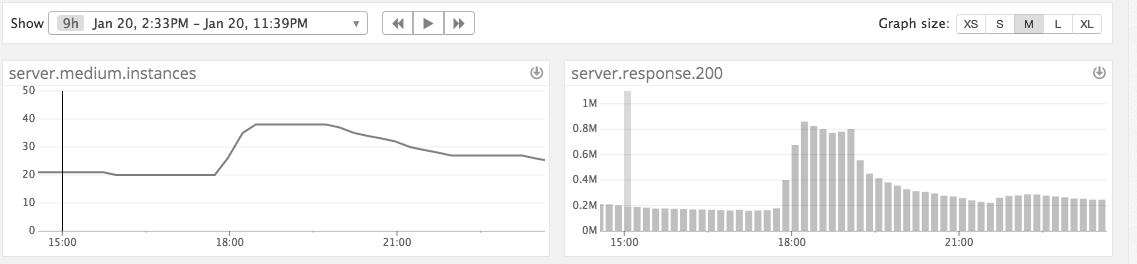

You can also see the benefits of having fast auto-scaling capabilities on a very regular basis, across multiple organizations using the cloud. This is again a real case study taken by the company medium, very often. Here, stories become viral, and the amount of traffic going on drastically changes. On January 21, 2015, the White House posted a transcript of the State of the Union minutes before President Obama began his speech: http://bit.ly/2sDvseP. As you can see in the following graph, thanks to being in the cloud and having auto-scaling capabilities, the platform was able to absorb five times the instant spike of traffic that the announcement made, by doubling the number of servers that the front service used. Later, as the traffic started to drain naturally, you automatically removed some hosts from your fleet:

Cloud computing is often broken down into three different types of services, generally called service models, as follows:

- Infrastructure as a Service (IaaS): This is the fundamental building block, on top of which everything related to the cloud is built. IaaS is usually a computing resource in a virtualized environment. This offers a combination of processing power, memory, storage, and network. The most common IaaS entities that you will find are Virtual Machines (VMs) and network equipment, such as load balancers or virtual Ethernet interfaces, and storage, such as block devices. This layer is very close to the hardware, and offers the full flexibility that you would get when deploying your software outside of a cloud. If you have any experience with datacenters, it will also apply mostly to this layer.

- Platform as a Service (PaaS): This layer is where things start to get really interesting with the cloud. When building an application, you will likely need a certain number of common components, such as a data store and a queue. The PaaS layer provides a number of ready-to-use applications, to help you build your own services without worrying about administrating and operating third-party services, such as database servers.

- Software as a Service (SaaS): This layer is the icing on the cake. Similar to the PaaS layer, you get access to managed services, but this time, these services are a complete solution dedicated to certain purposes, such as management or monitoring tools.

We would suggest that you go through the National Institute of Standard and Technology (NIST) Definition of Cloud Computing at https://nvlpubs.nist.gov/nistpubs/legacy/sp/nistspecialpublication800-145.pdf and the NIST Cloud Computing Standards Roadmap at https://www.nist.gov/sites/default/files/documents/itl/cloud/NIST_SP-500-291_Version-2_2013_June18_FINAL.pdf. This book covers a fair amount of services of the PaaS and SaaS types. While building an application, relying on these services makes a big difference, in comparison to the more traditional environment outside of the cloud. Another key element to success when deploying or migrating to a new infrastructure is adopting a DevOps mindset.

Running a company with a DevOps culture is all about adopting the right culture to allow developers and the operations team to work together. A DevOps culture advocates the implementation of several engineering best practices, by relying on tools and technologies that you will discover throughout this book.

DevOps is a new movement that officially started in Belgium in 2009, when a group of people met at the first DevOpsdays conference, organized by Patrick Debois, to discuss how to apply some agile concepts to infrastructure. Agile methodologies transformed the way software is developed. In a traditional waterfall model, the product team would come up with specifications; the design team would then create and define a certain user experience and user interface; the engineering team would then start to implement the requested product or feature, and would then hand off the code to the QA team, who would test and ensure that the code behaved correctly, according to the design specifications. Once all the bugs were fixed, a release team would package the final code, which would be handed off to the technical operations team, to deploy the code and monitor the services over time:

The increasing complexity of developing certain software and technologies showed some limitations with this traditional waterfall pipeline. The agile transformation addressed some of these issues, allowing for more interaction between the designers, developers, and testers. This change increased the overall quality of the product, as these teams now had the opportunity to iterate more on product development. However, apart from this, you would still be in a very classical waterfall pipeline, as follows:

All of the agility added by this new process didn't extend past the QA cycles, and it was time to modernize this aspect of the software development life cycle. This foundational change with the agile process which allows for more collaboration between the designers, developers, and QA teams, is what DevOps was initially after, but very quickly, the DevOps movement started to rethink how developers and operations teams could work together.

In a non-DevOps culture, developers are in charge of developing new products and features and maintaining the existing code, but ultimately, they are rewarded when their code is shipped. The incentive is to deliver as quickly as possible. On the other hand, the operations team, in general, is responsible for maintaining the uptime of the production environment. For these teams, change is a negative thing. New features and services increase the risk of having an outage, and therefore, it is important to move with caution. To minimize the risk of outages, operations teams usually have to schedule any deployments ahead of time, so that they can stage and test any production deployment and maximize their chances of success. It is also very common for enterprise software companies to schedule maintenance windows, and, in these cases, production changes can only be made a few times a quarter, half-yearly, or once a year. Unfortunately, many times, deployments won't succeed, and there are many possible reasons for that.

It is often the case that the code produced by developers works fine in a development environment, but not in production. A lot of the time, this is because the production environment is very different from other environments, and some unforeseen errors occur. The common mistakes involve the development environment, because services are collocated on the same servers, or there isn't the same level of security. As a consequence, services can communicate with one another in development, but not in production. Another issue is that the development environment might not run the same versions of a certain library/software, and therefore, the interface to communicate with them might differ. The development environment may be running a newer version of a service, which has new features that the production doesn't have yet; or it could be simply a question of scale. Perhaps the dataset used in development isn't as big as that of production, and scaling issues will crop up once the new code is out in production.

One of the biggest dilemmas in information technology is miscommunication.

The following is according to Conway's Law:

"Organizations which design systems are constrained to produce designs which are copies of the communication structures of these organizations." —Melvin Conway

In other words, the product that you are building reflects the communication of your organization. A lot of the time, problems don't come from the technology, but from the people and organizations surrounding the technology. If there is dysfunction among your developers and operations team in the organization, this will show. In a DevOps culture, developers and operations have a different mindset. They help to break down the silos that surround those teams, by sharing responsibilities and adopting similar methodologies to improve productivity. Together, they try to automate whatever is possible (not everything, as not everything can be automated in a single go) and use metrics to measure their success.

As we have noted, a DevOps culture relies on a certain number of principles. These principles are to source control (version control) everything, automate whatever is possible, and measure everything.

Revision control software has been around for many decades now, but too often, only the product code is checked. When practicing DevOps, not only is the application code checked, but configurations, tests, documentation, and all of the infrastructure automation needed to deploy the application in all environments, are also checked. Everything goes through the regular review process by theSource Code Manager(SCM).

Automated software testing predates the history of DevOps, but it is a good starting point. Too often, developers focus on implementing features and forget to add a test to their code. In a DevOps environment, developers are responsible for adding proper testing to their code. QA teams can still exist; however, similar to other engineering teams, they work on building automation around testing.

This topic could fill its own book, but in a nutshell, when developing code, keep in mind that there are four levels of testing automation to focus on, in order to successfully implement DevOps:

- Unit testing: This is to test the functionality of each code block and function.

- Integration testing: This is to make sure that services and components work together.

- User interface testing: This is often the most challenging component to successfully implement.

- System testing: This is end-to-end testing. For example, in a photo- sharing application, the end-to-end testing could be to open the home page, sign in, upload a photo, add a caption, publish the photo, and then sign out.

In the last few decades, the size of the average infrastructure and the complexity of the stack have skyrocketed. Managing infrastructure on an ad-hoc basis, as was once possible, is very error-prone. In a DevOps culture, the provisioning and configuration of servers, networks, and services in general, are performed through automation. Configuration management is often what the DevOps movement is known for. However, as you know, this is just a small piece of a big puzzle.

As you now, it is easier to write software in small chunks and deploy the new chunks as soon as possible, to make sure that they are working. To get there, companies practicing DevOps rely on continuous integration and continuous deployment pipelines. Whenever a new chunk of code is ready, the continuous integration pipeline kicks off. Through an automated testing system, the new code is run through all of the relevant, available tests. If the new code shows no obvious regression, it is considered valid and can be merged to the main code base. At that point, without further involvement from the developer, a new version of the service (or application) that includes those new changes will be created and handed off to a system called a continuous deployment system. The continuous deployment system will take the new builds and automatically deploy them to the different environments that are available. Depending on the complexity of the deployment pipeline, this might include a staging environment, an integration environment, and sometimes, a pre-production environment. Ultimately, if everything goes as planned (without any manual intervention), this new build will get deployed to production.

One aspect about practicing continuous integration and continuous deployment that often gets misunderstood is that new features don't have to be accessible to users as soon as they are developed. In this paradigm, developers heavily rely on feature flagging and dark launches. Essentially, whenever you develop new code and want to hide it from the end users, you set a flag in your service configuration to describe who gets access to the new feature, and how. At the engineering level, by dark launching a new feature this way, you can send production traffic to the service, but hide it from the UI, to see the impact it has on your database or on performance, for example. At the product level, you can decide to enable the new feature for only a small percentage of your users, to see if the new feature is working correctly and if the users who have access to the new feature are more engaged than the control group, for example.

Measuring everything is the last major principle that DevOps-driven companies adopt. As Edwards Deming said, you can't improve what you can't measure. DevOps is an ever-evolving process and methodology that feeds off those metrics to assess and improve the overall quality of the product and the team working on it. From a tooling and operating standpoint, the following are some of the metrics most organizations look at:

- How many builds are pushed to production a day

- How often you need to roll back production in your production environment (this is indicated when your testing didn't catch an important issue)

- The percentage of code coverage

- The frequency of alerts resulting in paging the on-call engineers for immediate attention

- The frequency of outages

- Application performance

- The Mean Time to Resolution (MTTR), which is the speed at which an outage or a performance issue can be fixed

At the organizational level, it is also interesting to measure the impact of shifting to a DevOps culture. While this is a lot harder to measure, you can consider the following points:

- The amount of collaboration across teams

- Team autonomy

- Cross-functional work and team efforts

- Fluidity in the product

- How often Dev and Ops communicate

- Happiness among engineers

- Attitudes towards automation

- Obsession with metrics

As you just learned, having a DevOps culture means, first of all, changing the traditional mindset that developers and operations are two separate silos, and making the teams collaborate more, during all phases of the software development life cycle.

In addition to a new mindset, DevOps culture requires a specific set of tools geared toward automation, deployment, and monitoring:

With AWS, Amazon offers a number of services of the PaaS and SaaS types that will let us do just that.

AWS is at the forefront of cloud providers. Launched in 2006, with SQS and EC2, Amazon quickly became the biggest IaaS provider. They have the biggest infrastructure and ecosystem, with constant additions of new features and services. In 2018, they passed more than a million active customers. Over the last few years, they have managed to change peoples mindsets about the cloud, and deploying new services to this is now the norm. Using AWS's managed tools and services is a way to drastically improve your productivity and keep your team lean. Amazon continually listens to its customer's feedback and looks at the market trends. Therefore, as the DevOps movement started to get established, Amazon released a number of new services tailored toward implementing some DevOps best practices. In this book, you will see how these services synergize with the DevOps culture.

Amazon services are like Lego pieces. If you can picture your final product, then you can explore the different services and start combining them, in order to build the stack needed to quickly and efficiently build your product. Of course, in this case, the if is a big if, and, unlike Lego, understanding what each piece can do is a lot less visual and colorful. That is why this book is written in a very practical way; throughout the different chapters, we are going to take a web application and deploy it like it's our core product. You will see how to scale the infrastructure supporting it, so that millions of people can use it, and also so that you can make it more secure. And, of course, we will do this following DevOps best practices. By going through that exercise, you will learn how AWS provides a number of managed services and systems to perform a number of common tasks, such as computing, networking, load balancing, storing data, monitoring, programmatically managing infrastructure and deployment, caching, and queuing.

As you saw earlier in this chapter, having a DevOps culture is about rethinking how engineering teams work together, by breaking the development and operations silos and bringing a new set of tools, in order to implement the best practices. AWS helps to accomplish this in many different ways. For some developers, the world of operations can be scary and confusing, but if you want better cooperation between engineers, it is important to expose every aspect of running a service to the entire engineering organization.

As an operations engineer, you can't have a gatekeeper mentality towards developers. Instead, it's better to make them comfortable by accessing production and working on the different components of the platform. A good way to get started with this is in the AWS console, as follows:

While a bit overwhelming, this is still a much better experience for people who are unfamiliar with navigating this web interface, rather than referring to constantly out-of-date documentation, using SSH and random plays in order to discover the topology and configuration of the service. Of course, as your expertise grows and your application becomes more complex, the need to operate it faster increases, and the web interface starts to show some weaknesses. To get around this issue, AWS provides a very DevOps-friendly alternative. An API is accessible through a command-line tool and a number of SDKs (including Java, JavaScript, Python, .NET, PHP, Ruby Go, and C++). These SDKs let you administrate and use the managed services. Finally, as you saw in the previous section, AWS offers a number of services that fit DevOps methodologies and will ultimately allow us to implement complex solutions in no time.

Some of the major services that you will use, at the computing level are Amazon Elastic Compute Cloud (EC2), the service to create virtual servers. Later, as you start to look into how to scale the infrastructure, you will discover Amazon EC2 Auto Scaling, a service that lets you scale pools on EC2 instances, in order to handle traffic spikes and host failures. You will also explore the concept of containers with Docker, through Amazon Elastic ContainerService (ECS). In addition to this, you will create and deploy your application using AWS Elastic Beanstalk, with which you retain full control over the AWS resources powering your application; you can access the underlying resources at any time. Lastly, you will create serverless functions through AWS Lambda, to run custom code without having to host it on our servers. To implement your continuous integration and continuous deployment system, you will rely on the following four services:

- AWS Simple Storage Service (S3): This is the object store service that will allow us to store our artifacts

- AWS CodeBuild: This will let us test our code

- AWS CodeDeploy: This will let us deploy artifacts to our EC2 instances

- AWS CodePipeline: This will let us orchestrate how our code is built, tested, and deployed across environments

To monitor and measure everything, you will rely on AWS CloudWatch, and later, on ElasticSearch/Kibana, to collect, index, and visualize metrics and logs. To stream some of our data to these services, you will rely on AWS Kinesis. To send email and SMS alerts, you will use the Amazon SNS service. For infrastructure management, you will heavily rely on AWS CloudFormation, which provides the ability to create templates of infrastructures. In the end, as you explore ways to better secure our infrastructure, you will encounter Amazon Inspector and AWS Trusted Advisor, and you will explore the IAM and the VPC services in more detail.

In this chapter, you learned that adopting a DevOps culture means changing the way that traditional engineering and operations teams operate. Instead of two isolated teams with opposing goals and responsibilities, companies with a DevOps culture take advantage of complementary domains of expertise to better collaborate through converging processes and using a new set of tools. These new processes and tools include not only automating whatever possible, from testing and deployment through to infrastructure management, but also measuring everything, so that you can improve each process over time. When it comes to cloud services, AWS is leading the catalogue with more services than any other cloud provider. All of these services are usable through APIs and SDKs, which is good for automation. In addition, AWS has tools and services for each key characteristic of the DevOps culture.

In Chapter 2, Deploying Your First Web Application, we will finally gets our hands dirty and start to use AWS. The final goal of the chapter will be to have a Hello World application, accessible to anyone on the internet.

You can explore more about AWS services at https://aws.amazon.com/products/.

Download code from GitHub

Download code from GitHub