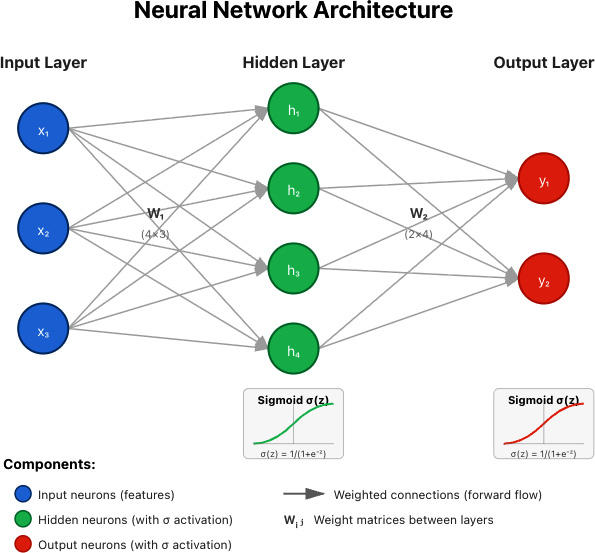

Machine learning with PyTorch

It is important to first understand the basics of neural networks (NNs). NNs originated from early efforts to understand how the human brain functions. However, the first NNs were not all that brain-like: they tended to contain linear components (the brain’s neurons are highly non-linear), and they were trained using backpropagation, which we’ll cover in a moment along with why it is not like human learning. Despite these differences, increased computational power has somewhat compensated for them and led to the breakthroughs we see today. NNs contain nodes, like neurons, that are connected to each other by weights, similar to dendrites in the human brain, and each node applies a transform called an activation function to the signals coming into it.
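To make the idea of a node concrete, here is a minimal sketch in plain Python (no PyTorch yet) of a single node that weights its incoming signals, sums them, and passes the result through an activation function. The names `node_output` and `sigmoid`, the optional `bias` term, and the example numbers are assumptions made for illustration; they do not come from any particular library.

```python
import math


def sigmoid(z: float) -> float:
    """A common non-linear activation that squashes any value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def node_output(inputs, weights, bias=0.0, activation=sigmoid):
    """Compute the output of one node (hypothetical helper for illustration).

    Each incoming signal is multiplied by the weight on its connection,
    the products are summed (plus an assumed optional bias), and the
    activation function is applied to that sum.
    """
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)


# Two incoming signals and the weights on their connections (made-up values).
inputs = [1.0, 2.0]
weights = [0.5, -0.25]

# Weighted sum is 0.5 * 1.0 + (-0.25) * 2.0 = 0.0, and sigmoid(0.0) = 0.5.
output = node_output(inputs, weights)
print(output)
```

Swapping in a different `activation` argument changes the node's behavior without touching the weighted sum, which is why the activation function is usually treated as a separate, interchangeable piece.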

Here is an overview of a simple NN:

Figure 17.1 – A standard NN

We first have an input layer, which takes in the input data to be processed. Next, there are one or...