2. Service tiers

Azure provides multiple service (performance) tiers for Azure SQL Database and SQL Managed Instance. There are two purchasing options, the Database Transaction Unit (DTU) model and the vCore model. Each purchasing option has multiple service tiers. The purchasing option and service tier define the performance and cost of an SQL managed database. In this chapter, we'll look at the different purchasing options and service tiers and learn how to choose an appropriate starting performance tier when migrating to an SQL managed database.

We will learn about:

- DTUs and the vCore purchasing model

- Different service tier options for SQL Database and SQL Managed Instance

- Using Data Migration Assistant (DMA) to get service tier recommendations when migrating an on-premises SQL Server workload to SQL Database and SQL Managed Instance

Let's get started with understanding the DTU model.

The DTU model

In the DTU purchasing option, the amount of resources (CPUs, I/O, RAM, and storage) to be assigned to an SQL database in a particular service tier is calculated in DTUs.

DTUs guarantee that an SQL database will always have a certain amount of resources and a certain level of performance (offered under a particular DTU model) at any given point in time, independent of other SQL databases on the same SQL server or across Microsoft Azure.

The ratio for the aforementioned resources was calculated by Microsoft by running an Online Transaction Processing (OLTP) benchmark. One DTU roughly equates to 1 transaction/sec as per the benchmark.

The DTU purchasing model measures performance in DTUs instead of CPU and memory. Each DTU level and service tier provides predictable performance. The higher the DTU, the better the performance.

Note

The DTU purchasing model is not available with SQL Managed Instance.

In the DTU purchasing model, the compute and storage are bundled and priced together. For example, the 10 DTU standard service tier has a fixed storage capacity of 250 GB included within the DTU cost. Any additional storage is charged separately.

DTU service tiers

There are three service tiers available in the DTU-based purchasing option:

- Basic service tier: The Basic tier is the lowest tier available and applies to small, infrequently used applications, usually supporting one single active transaction at any given point in time.

The Basic tier has a size limit of 2 GB, a performance limit of 5 DTUs, and costs $5/month:

Figure 2.1: Performance statistics for the Basic service tier

- Standard service tier: This is the most commonly used service tier and is best for web applications or workgroups with low to medium I/O performance requirements. Unlike the Basic service tier, it has nine different performance levels: S0, S1, S2, S3, S4, S6, S7, S9, and S12. Each performance level offers the same size (250 GB); however, they differ in terms of DTUs and cost. S0, S1, S2, S3, S4, S6, S7, S9, and S12 offer 10, 20, 50, 100, 200, 400, 800, 1,600, and 3,000 DTUs and cost $15, $30, $75, $150, $300, $600, $1,200, $2,400, and $4,500 per month, respectively:

Figure 2.2: Performance statistics for the Standard service tier

Figure 2.3: Performance statistics for the Standard service tier (continued)

Note

The Basic and Standard S0, S1, and S2 tiers have less than one vCore (CPU). For CPU-intensive workloads, S3 or higher is recommended.

- Premium service tier: The Premium service tier is used for mission-critical, high-transaction-volume applications. It supports a large number of concurrent users and has high I/O performance compared to the Basic and Standard service tiers. It provides 25 IOPS per DTU.

It has six different performance levels: P1, P2, P4, P6, P11, and P15. Each performance level offers different sizes and DTUs. P1, P2, P4, P6, P11, and P15 are priced at $465, $930, $1,860, $3,720, $7,001, and $16,003 per month, respectively:

Figure 2.4: Performance statistics for the Premium service tier

Note

The prices listed here are for a single database and not for an elastic database pool.

The Premium service tier supports read scale-out and zone redundancy.

Read scale-out, when enabled, routes read queries to a read-only secondary replica. The read-only secondary is of the same compute and storage capacity as the primary replica.

An Availability Zone in an Azure region is an isolated datacenter building. There can be more than one Availability Zone in an Azure region. When opting for the Premium service tier, you can choose for SQL Database to be zone-redundant. This will ensure that a copy of the database is available in another zone within the same region to facilitate high availability.

The zone redundancy feature is available for databases up to 1 TB in size.
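As a rough illustration of how the Standard performance levels trade DTUs against cost, the following Python sketch picks the smallest Standard level that covers a required DTU count. The DTU and price figures are the ones quoted earlier in this section; the function name is hypothetical, and actual prices vary by region and change over time.

```python
# DTUs and monthly prices (USD) for the Standard tier, as quoted in this section.
STANDARD_LEVELS = [
    ("S0", 10, 15), ("S1", 20, 30), ("S2", 50, 75), ("S3", 100, 150),
    ("S4", 200, 300), ("S6", 400, 600), ("S7", 800, 1200),
    ("S9", 1600, 2400), ("S12", 3000, 4500),
]


def smallest_standard_level(required_dtus):
    """Return (name, dtus, monthly_cost) for the cheapest Standard level
    offering at least required_dtus, or None if no level is large enough."""
    for name, dtus, cost in STANDARD_LEVELS:  # list is ordered by size
        if dtus >= required_dtus:
            return name, dtus, cost
    return None
```

For example, a workload estimated at 60 DTUs does not fit S2 (50 DTUs), so the function returns the S3 entry. A result of None suggests looking at the Premium tier or the vCore model instead.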

The vCore model

The vCore purchasing model decouples compute and storage. The compute is measured in terms of vCore. vCore characteristics such as physical/hyper-threading are defined by hardware generations.

Memory and I/O are defined per vCore and depend on the hardware generation and the service tier. vCore and storage are to be selected separately and are therefore priced separately. The vCore purchasing model also allows the use of existing SQL Server licenses at discounted rates for SQL managed databases under Azure Hybrid Benefit.

When configuring a vCore-based service tier, there are two license types available, BasePrice and LicenseIncluded.

BasePrice offers discounted rates for existing on-premises SQL Server licenses. You only pay for the underlying Azure infrastructure. This is the best option when migrating an on-premises database to SQL Database. LicenseIncluded includes the cost of the SQL Server license and Azure infrastructure.

For more details on Azure Hybrid Benefit, please visit https://docs.microsoft.com/azure/azure-sql/azure-hybrid-benefit?tabs=azure-powershell.

vCore service tiers

There are three service tiers available with the vCore pricing model: General Purpose, Business Critical, and Hyperscale. The Hyperscale service tier is only available with SQL Database, whereas General Purpose and Business Critical are available with both SQL Database and Managed Instance.

The General Purpose service tier

The General Purpose service tier provides balanced compute and memory options and is suitable for most business workloads. It separates compute and storage, and the data and log files are stored in Azure Blob Storage whereas tempdb is stored in a local SSD.

Figure 2.5 shows the architecture model of a General Purpose service tier:

Figure 2.5: General Purpose service tier architecture model

In Figure 2.5, the four active compute nodes and two redundant compute nodes are for illustration only; the actual number of redundant nodes is determined by Azure Service Fabric to always deliver 99.99% availability. The active compute nodes have SQL Server installed. They contain transient data, such as the plan cache, buffer pool, and columnstore pool. The compute nodes write to data and log files stored in Blob Storage (premium performance type). The built-in availability and redundancy of Blob Storage make sure that no data loss happens in the event of an SQL Server or compute node crash. Blob Storage provides storage latency of between 5 and 10 milliseconds.

If any of the active compute nodes fail or are being patched, the node fails over to an available redundant node. The data and log files are attached to the new active node, thereby providing 99.99% availability. The failover behavior is similar to what we have in a failover cluster instance configuration.

Azure Premium Storage characteristics

In the SQL Managed Instance General Purpose service tier, every database file gets dedicated IOPS and throughput based on the database file size. Larger files get more IOPS and throughput. Refer to the following table for file I/O characteristics:

Table 2.1: Premium storage characteristics

If you are noticing slow performance and high I/O latency in SQL Managed Instance, then increasing the size of individual files might improve performance, because larger files get more IOPS and throughput. In the General Purpose tier, you can only have 280 database files per instance. If you are hitting this limit, you might need to consider reducing the number of database files or moving to the Business Critical tier. Though all database files are placed on Azure Premium Storage, tempdb database files are stored on a local SSD for a faster response.

The following are some of the workload-related guidelines and best practices for SQL Managed Instance running on the General Purpose tier:

- Short transactions: Azure SQL Managed Instance runs in a cloud environment, where transient network errors and failovers can occur, so applications need to be prepared for them. It's best to keep transactions short, as they are quicker to recover.

- Batch updates: Always try to run updates in batches rather than running individual updates.

- Table/index partitioning: Use table partitioning for better I/O throughput and index partitioning to avoid long-running index maintenance. Partitioning may or may not benefit all workloads and therefore should be tested and then used.

- Compression/columnstore: In the General Purpose tier, there is latency between the compute and storage layers. The impact of this latency can be reduced by using data compression or columnstore indexes, which reduce the amount of data read from and written to storage.

The General Purpose service tier is suitable for generic workloads that require a 99.99% uptime SLA and storage latency between 5 and 10 milliseconds.
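The short-transaction and batch-update guidelines above can be combined: apply a large update in bounded chunks, each in its own short transaction. The following is a minimal Python sketch of that pattern. It uses an in-memory SQLite database purely as a stand-in so that it runs anywhere; the `items` table, the `processed` column, and the function name are hypothetical, and against SQL Managed Instance you would use your own driver and schema.

```python
import sqlite3


def update_in_batches(conn, ids, batch_size=500):
    """Mark the given ids as processed, one short transaction per batch.

    Returns the number of batches (transactions) that were committed.
    """
    batches = 0
    for start in range(0, len(ids), batch_size):
        chunk = ids[start:start + batch_size]
        placeholders = ",".join("?" * len(chunk))
        # "with conn" commits on success and rolls back if an error is raised,
        # so a failure only loses the current batch, not the whole update.
        with conn:
            conn.execute(
                f"UPDATE items SET processed = 1 WHERE id IN ({placeholders})",
                chunk,
            )
        batches += 1
    return batches
```

If a transient error or failover interrupts the run, only the in-flight batch is rolled back, and the job can resume from the first unprocessed id.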

The Business Critical service tier

The Business Critical service tier has integrated compute and storage. Figure 2.6 shows a Business Critical service tier architecture:

Figure 2.6: Business Critical service tier architecture model

It consists of four replicas in an Always On availability group. There is one primary replica and three secondary replicas. Each replica has local SSD storage to host data files, log files, and tempdb. This provides one to two milliseconds of storage latency.

There are two endpoints—the primary endpoint, which is used for read and write, and a secondary read-only endpoint. The read-only endpoint can be used to offload read-only queries to the secondary replica. The read-only endpoint is provided free of cost.

If the primary replica fails, one of the secondary replicas is promoted to the primary replica. Failover is faster than in the General Purpose service tier. When the primary replica recovers, it connects as a new secondary replica.

The Business Critical service tier with a zone-redundant configuration provides 99.995% uptime. It is suitable for workloads that require low I/O latency (one to two milliseconds) and highly available and highly resilient applications (faster failover).
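To put the availability figures in perspective, an uptime percentage can be converted into the maximum downtime it permits per year. The following Python sketch does that arithmetic for the 99.99% and 99.995% figures mentioned in this section; the function name is hypothetical, and the calculation ignores leap years and how the SLA is actually measured.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def max_downtime_minutes_per_year(sla_percent):
    """Upper bound on yearly downtime (in minutes) allowed by an uptime SLA."""
    return (100.0 - sla_percent) / 100.0 * MINUTES_PER_YEAR


for sla in (99.99, 99.995):
    print(f"{sla}% uptime allows about "
          f"{max_downtime_minutes_per_year(sla):.1f} minutes of downtime per year")
```

Under these assumptions, 99.99% corresponds to about 52.6 minutes per year and 99.995% to about 26.3 minutes per year.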

The Hyperscale service tier

The Hyperscale service tier decouples the compute, storage, and log into microservices to provide a highly scalable and highly available service tier.

Note

The Hyperscale service tier isn't available in SQL Managed Instance.

A traditional database server, as shown in Figure 2.7, consists of compute (CPU and memory) and storage (data files and log files):

Figure 2.7: Database server architecture

An SQL Server engine is run by three main components: the query processor, the storage engine, and the SQL operating system:

- The query processor does query parsing, optimization, and execution.

- The storage engine serves the data required by the queries and manages the data and log files.

- The SQL operating system is an abstraction over the Windows/Linux operating system that is mainly responsible for task scheduling and memory management.

The Hyperscale service tier takes the storage engine out of the database server and splits it into independent, scale-out sets of components: page servers and a log service, as shown in Figure 2.8.

Comparing it with the traditional database server, observe that the data and log files are no longer part of the database server:

Figure 2.8: Architecture of the Hyperscale service tier

A detailed architecture diagram for the Hyperscale service tier is shown here:

Figure 2.9: Detailed architecture of the Hyperscale service tier

The different Hyperscale service tier components are explained here:

- Compute nodes: A compute node is an SQL Server without the data files and the log files. Compute nodes are similar to the SQL Server query processor, responsible for query parsing, optimization, and execution. Users and applications connect and interact with the compute nodes.

Each compute node has a local, non-covering data cache called the Resilient Buffer Pool Extension (RBPEX).

Note

The RBPEX is an SQL Server feature that allows SSDs to be used as an extension of the buffer pool (server memory or RAM). With an RBPEX, data can be cached to extended buffers (SSDs), thereby decreasing physical disk reads and increasing I/O throughput.

The primary compute node takes user and application transactions and writes them to the log service landing zone. If the data requested by a query isn't available in the primary node's buffer pool or its local RBPEX cache, it reads or requests the missing data from the page servers.

The secondary compute nodes are used to offload reads from the primary compute node. The Hyperscale tier offers four secondary replicas for read scale-out, high availability, and disaster recovery. Each replica has the same vCore model as the primary replica and is charged separately. You connect to a secondary replica by specifying ApplicationIntent as ReadOnly in the connection string.

Each secondary replica, similar to the case with the primary node, has a local cache (RBPEX). When a read request is received by a secondary replica, it first checks for the data in the buffer pool, then the local RBPEX cache, and then the page servers.

When the primary compute node goes down, failover happens to a secondary node, and one of the secondary nodes promotes itself to a primary node and starts accepting read-write transactions. A replacement secondary node is provisioned and warms up.

No action needs to be taken at the storage level as the compute nodes are separate from the storage. This is contrary to the regular SQL Server architecture, where a database server hosts both the SQL Server engine and the storage, as explained earlier in this section. In that architecture, if the database server goes down, the storage (that is, the data files and the log files) also goes down.

- Page server node: The page server node is where the database data files are. Each page server node manages 1 TB of data and represents one data file. The data from each page server node is persisted on a standard storage account. This makes it possible to rebuild a page server from the data in a standard storage account in the event of a failure. Therefore, there's no loss of data.

The page servers get the data modifications from the log service and apply them to the data files. Each page server node has its own local cache (RBPEX). The data is fully cached in the page server local cache to avoid any data requests being forwarded to the standard storage account. A database can have one or more page server nodes depending on its size. As the database grows in size, a new page server is automatically added if the existing page server is 80% full. The Hyperscale service tier, for now, supports databases up to 100 TB in size.

- Log service node: The log service node is the new transaction log and is again separated from the compute nodes. The log service node gets the log records from the primary node, in the landing zone, which is an Azure Premium Storage account. An Azure Premium Storage account has built-in high availability, which prevents the loss of any log records. It persists log records from the landing zone to a durable log cache.

It also forwards log records to the secondary compute nodes and the page server nodes. It writes the log records to long-term log storage, which is an Azure Standard Storage account. The long-term log storage is used for point-in-time recovery. When the log records are written to long-term storage, they are deleted from the landing zone to free up space.

The log records are kept in long-term log storage for the duration of the backup retention period that has been configured for the database. No transaction log backups are needed.

There's no hot standby for a log service node because it's not required. The log records are persisted first in an Azure Premium Storage account, which has its own high-availability provision, and then in an Azure Standard Storage account.
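The read path described for compute nodes (buffer pool first, then the local RBPEX cache, then the page servers) can be pictured with a toy model. The following Python sketch is an illustration only and is not how the service is implemented: the class and method names are hypothetical, plain dictionaries stand in for memory, SSD, and remote page servers, and populating the caches on a miss is a simplifying assumption.

```python
class ComputeNode:
    """Toy model of a Hyperscale compute node's read path (illustration only)."""

    def __init__(self, page_servers):
        self.buffer_pool = {}             # stands in for the in-memory buffer pool
        self.rbpex = {}                   # stands in for the local SSD cache (RBPEX)
        self.page_servers = page_servers  # dict standing in for remote page servers

    def read_page(self, page_id):
        """Return (data, source), checking local caches before the page servers."""
        if page_id in self.buffer_pool:
            return self.buffer_pool[page_id], "buffer pool"
        if page_id in self.rbpex:
            data = self.rbpex[page_id]
            self.buffer_pool[page_id] = data  # assumption: promote to memory
            return data, "RBPEX"
        data = self.page_servers[page_id]     # a remote request in the real service
        self.rbpex[page_id] = data            # assumption: cache on the way back
        self.buffer_pool[page_id] = data
        return data, "page server"
```

The first read of a page in this model is served by a page server, while repeated reads are served locally, which is the reason the local caches reduce remote requests.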

The Hyperscale service tier, with this improved architecture, offers the following benefits:

- Nearly instantaneous backups. A backup is taken by taking a snapshot of the file in an Azure Standard Storage account. The snapshot process is fast and takes less than 10 minutes to back up a 50 TB database.

- Similar to database backups, database restores are also based on file snapshots and are a lot faster than in any other performance tier.

- Higher log throughput and faster transaction commits, regardless of data volumes:

- The primary replica does not need to wait for an acknowledgment-of-transaction commit from the secondary replica. This is because the transaction log is managed by a log service.

- Supports up to 100 TB database size.

- Rapid read scale-out by creating read replicas.
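As mentioned earlier, a read replica is reached by setting ApplicationIntent to ReadOnly in the connection string. The following Python sketch builds such a string in the ODBC keyword style. The helper name is hypothetical, the driver name assumes ODBC Driver 18 for SQL Server is installed, and authentication settings are deliberately left out.

```python
def build_connection_string(server, database, read_only=False):
    """Build an ODBC-style connection string, optionally targeting a read replica."""
    parts = [
        "Driver={ODBC Driver 18 for SQL Server}",  # assumption: this driver is installed
        f"Server=tcp:{server},1433",
        f"Database={database}",
        "Encrypt=yes",
    ]
    if read_only:
        # Routes the session to a read-only secondary replica when one exists.
        parts.append("ApplicationIntent=ReadOnly")
    return ";".join(parts) + ";"


print(build_connection_string("toyfactory.database.windows.net", "toystore", read_only=True))
```

Reporting or analytics queries can use the read-only string, while the application's write path keeps using the default read-write connection.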

Note

For details on resource limits for different service tiers, please visit https://docs.microsoft.com/azure/azure-sql/database/service-tiers-vcore?tabs=azure-portal.

The Hyperscale service tier is suitable for applications with large databases (over 4 TB in size and up to 100 TB), storage latency requirements of 1 to 10 milliseconds, and instant backup and restore requirements. It also suits applications with smaller databases that require fast vertical and horizontal compute scaling.

vCore hardware generations

Hardware generations apply only to the vCore purchasing option and define the compute and memory resources. There are three hardware generations for different types of workloads:

- Gen5 offers up to 80 logical CPUs, based on Intel E5-2673 v4 (Broadwell) 2.3 GHz processors, with 5.1 GB of memory per core and fast NVMe SSD. Gen5 offers more compute scalability with 80 logical CPUs.

- Fsv2-series is for high-compute workloads and provides a faster CPU with a clock speed of 3.4 GHz to 3.7 GHz. The maximum memory is limited to 136 GB with 1.9 GB of memory per vCore.

- M-series is for high-memory workloads with a max memory of 3.7 TB and 29 GB of memory per vCore. M-series is available only in the Business Critical service tier.

For details on compute and memory specifications, please visit https://docs.microsoft.com/azure/azure-sql/database/service-tiers-vcore?tabs=azure-portal.

Note

SQL Managed Instance only supports Gen5 hardware generation at the time of writing this book.

An SQL workload can be categorized as a balanced, compute, or memory-optimized workload. Hardware generation makes it easier to map an on-premises workload to Azure SQL Database during migration. We can find out which category the on-premises workload belongs in and then choose the relevant hardware generation in Azure SQL.
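The mapping from workload category to hardware generation can be sketched as a small lookup. In the following Python sketch, the memory-per-vCore figures are the ones quoted in the list above, while pairing the balanced category with Gen5 is an assumption based on the other two generations being described as compute- and memory-oriented. The function name is hypothetical, and the estimate ignores per-generation vCore and memory limits.

```python
# Memory per vCore (GB) as quoted in this section.
HARDWARE = {
    "balanced": {"name": "Gen5", "memory_gb_per_vcore": 5.1},  # assumed pairing
    "compute": {"name": "Fsv2-series", "memory_gb_per_vcore": 1.9},
    "memory": {"name": "M-series", "memory_gb_per_vcore": 29.0},
}


def suggest_hardware(workload, vcores):
    """Return (hardware_generation, estimated_memory_gb) for a workload category."""
    hw = HARDWARE[workload]
    return hw["name"], round(hw["memory_gb_per_vcore"] * vcores, 1)
```

For instance, a compute-bound workload sized at 8 vCores maps to Fsv2-series with roughly 15.2 GB of memory, which is worth comparing against the memory the on-premises workload actually uses before committing to that generation.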

Determining an appropriate performance tier

As an SQL Server DBA, when migrating to Azure SQL Database, you will need to have an initial estimate of DTUs so as to assign an appropriate service tier to Azure SQL Database. An appropriate service tier will ensure that you meet most of your application performance goals. Estimating a lower or a higher service tier will result in decreased performance or increased cost, respectively.

This section teaches you how to use DMA to make an appropriate initial estimate of the service tier. You can, at any time, change your service tier by monitoring SQL Database's performance once it's up and running.

DMA SKU recommendation

DMA is a free tool from Microsoft to facilitate migration from SQL Server (on-premises or IaaS) to SQL Database. It can assess the source database to list out the compatibility issues between SQL Server and SQL Database. Once you fix the compatibility issues, you can use it to migrate the schema and data to SQL Database.

It also helps with recommendations to select a starting service tier and SKU. To get recommendations, we first need to run a PowerShell script to collect the required performance counters. It's advised to run the script for at least two hours at different times and ensure we collect counters at peak business hours.

The activity requires DMA to be installed on your machine. You can download it here: https://www.microsoft.com/download/details.aspx?id=53595.

To get recommendations using DMA for the toystore database, perform the following steps:

- Open ~/Chapter02/DMA/RunWorkload.bat in Notepad. You should see the following code:

CD "C:\Program Files\Microsoft Corporation\RMLUtils"
ostress -SXENA\sql2016 -E -dtoystore -Q"Execute usp_Workload" -n10 -r100000 -q
@echo off
Pause

Modify the RMLUtils directory location if required. Change the ostress parameters to point to the toystore database in your local environment. Save and close the file.

Double-click the file to run the workload.

- Open ~/Chapter02/DMA/RunSKURecommendation.bat. You should see the following code:

cd "C:\Program Files\Microsoft Data Migration Assistant\"
powershell.exe -File .\SkuRecommendationDataCollectionScript.ps1 -ComputerName XENA -OutputFilePath "C:\Professional-Azure-SQL-Database-Administration-Third-Edition\Chapter02\DMA\Counter.csv" -CollectionTimeInSeconds 7200 -DbConnectionString "Server=XENA\SQL2016;Initial Catalog=master;Integrated Security=SSPI;"

The preceding command runs the DMA SkuRecommendationDataCollectionScript.ps1 PowerShell script to collect the required counters. The script is available at the DMA installation location. Modify the parameter values to point the script to your SQL Server environment.

Save and close the file.

Double-click RunSKURecommendation.bat to run the SKU counter collection script. The script will run for the time specified by the CollectionTimeInSeconds parameter and will write the counter values to the file specified by the OutputFilePath parameter. To get more appropriate recommendations, it's advised you collect counters for at least two hours. You can also collect counters at different times of the day and generate recommendations to get the best results.

When the SKU collection script completes successfully, a file named counter.csv is generated at the ~/Chapter02/DMA location.

- Open ~/Chapter02/DMA/GetSKURecommendation.batch. You should see the following code:

cd "C:\Program Files\Microsoft Data Migration Assistant"
.\DmaCmd.exe /Action=SkuRecommendation /SkuRecommendationInputDataFilePath="C:\Professional-Azure-SQL-Database-Administration-Third-Edition\Chapter02\DMA\Counter.csv" /SkuRecommendationOutputResultsFilePath="C:\Professional-Azure-SQL-Database-Administration-Third-Edition\Chapter02\DMA\SKURecommedation.html" /SkuRecommendationPreventPriceRefresh=true /SkuRecommendationTsvOutputResultsFilePath="C:\Professional-Azure-SQL-Database-Administration-Third-Edition\Chapter02\DMA\SKURecommedation.tsv"
@echo off
Pause

The preceding command uses the DMA CLI command to generate recommendations. Provide the path to the counter.csv file generated in step 2 as the value of the SkuRecommendationInputDataFilePath parameter. Copy and save the results.

When run, the command will generate HTML and TSV recommendation output files.

Double-click the GetSKURecommendation.batch file to generate the recommendations. The recommendation script will generate skurecommendation_SQL_DB HTML and TSV files with recommendations for Azure SQL Database. It also generates similar files for SQL Managed Instance.

Figure 2.10 is a snapshot of the skurecommendation_sql_db.html file:

Figure 2.10: DMA SKU recommendations for SQL Managed Instance

Observe that it recommends using the General Purpose pricing tier with 8 vCores. You can select the pricing tier from the Pricing Tier drop-down menu.

- The tsv file contains the reasons for considering or not considering a particular performance tier:

Figure 2.11: DMA SKU recommendation—tsv file

The DMA makes it easy to choose a starting service tier when migrating an existing on-premises SQL Server workload to an SQL managed database. Once we migrate the database to the selected service tier, we need to further test the application performance against the service tier and scale up or scale down as per the required performance.

Azure SQL Database compute tiers

There are two compute tiers, provisioned and serverless.

In the provisioned compute tier, the resources (vCores) are pre-allocated and can be changed by manually scaling to a different service tier as and when required. The provisioned compute tier cost is calculated per hour based on the number of vCores configured. The provisioned compute tier is suitable for scenarios with consistent and regular workloads.

In the serverless compute tier, compute resources for databases are automatically scaled based on workload demand and are billed based on the amount of compute used per second. The serverless compute tier also provides an option to automatically pause the database during inactive usage periods, when only storage is billed, and then automatically resume databases when activity returns. The serverless compute tier is price performance-optimized for single databases with intermittent, unpredictable usage patterns that can afford some delay in compute warm-up after low or idle usage periods.
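The difference between the two billing models can be made concrete with a little arithmetic. The following Python sketch compares an hourly, per-vCore charge with a per-second charge on compute actually used. Both function names and all prices are hypothetical placeholders rather than Azure list prices, and storage is left out because it is billed in both tiers.

```python
def provisioned_cost(vcores, hours, price_per_vcore_hour):
    """Provisioned tier: pay for the configured vCores for every hour."""
    return vcores * hours * price_per_vcore_hour


def serverless_cost(vcore_seconds_used, price_per_vcore_second):
    """Serverless tier: pay only for the vCore-seconds actually consumed.
    While the database is paused, no compute is consumed."""
    return vcore_seconds_used * price_per_vcore_second


# Hypothetical month: 2 vCores configured, but the database is busy only 100 hours.
always_on = provisioned_cost(vcores=2, hours=730, price_per_vcore_hour=0.25)
on_demand = serverless_cost(vcore_seconds_used=2 * 100 * 3600,
                            price_per_vcore_second=0.0001)
print(f"provisioned: {always_on:.2f}, serverless: {on_demand:.2f}")
```

With these made-up numbers the intermittent workload is cheaper on serverless even though its per-second rate is higher than the equivalent hourly rate; a workload that is busy most of the month would tip the comparison the other way.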

Scaling up the Azure SQL Database service tier

In this section, we'll learn how to scale up the SQL Database service tier for better performance. Let's go back to our example of Mike, who observes that there is an increase in the load on the SQL database. To overcome this problem, he plans to change the service tier for the database so that it can handle the overload. This can be achieved via the following steps:

- Open a new PowerShell console. In the PowerShell console, execute the following command to create a new SQL database from a bacpac file:

C:\Code\Chapter02\ImportAzureSQLDB.ps1

- Provide the SQL server name, SQL database name, SQL Server administrator user and password, bacpac file path, and sqlpackage.exe path, as shown in Figure 2.12:

Figure 2.12: The Windows PowerShell window

The script will use sqlpackage.exe to import the bacpac file as a new SQL database on the given SQL server. The database is created in the Basic service tier, as specified in the PowerShell script. It may take 10 to 15 minutes to import the SQL database.

- Open C:\Code\Chapter02\ExecuteQuery.bat in Notepad. It contains the following commands:

ostress -Sazuresqlservername.database.windows.net -Uuser -Ppassword -dazuresqldatabase -Q"SELECT * FROM Warehouse.StockItems si join Warehouse.StockItemholdings sh on si.StockItemId=sh.StockItemID join Sales.OrderLines ol on ol.StockItemID = si.StockItemID" -n25 -r20 -q

- Replace azuresqlservername, user, password, and azuresqldatabase with the appropriate values. For example, if you are running the preceding command against SQL Database with toystore hosted on the toyfactory SQL server with the username sqladmin and the password Packt@pub2, then the command will be as follows:

ostress -Stoyfactory.database.windows.net -Usqladmin -PPackt@pub2 -dtoystore -Q"SELECT * FROM Warehouse.StockItems si join Warehouse.StockItemholdings sh on si.StockItemId=sh.StockItemID join Sales.OrderLines ol on ol.StockItemID = si.StockItemID" -n25 -r20 -q

The command will run 25 concurrent sessions (specified by the -n25 parameter), and each session will execute the query (specified by the -Q parameter) 20 times (specified by the -r20 parameter).

- Open the RML command prompt, enter the following command, and press Enter:

C:\Code\Chapter02\ExecuteQuery.bat

This will run the OSTRESS command. Wait for the command to finish executing. Record the execution time:

Figure 2.13: RML command prompt

As you can see, it took around 1 minute and 52 seconds to run 25 concurrent connections against the Basic service tier.

- The next step is to scale up the service tier from Basic to Standard S3. In the PowerShell console, execute the following command:

C:\Code\Chapter02\ScaleUpAzureSQLDB.ps1

Provide the parameters as shown in Figure 2.14:

Figure 2.14: Scaling up the service tier

Observe that the database edition has been changed to standard.

- Open a new RML command prompt and run the same OSTRESS command as in step 5. You should see a faster query execution time in the Standard S3 tier than in the Basic tier.

Here's the output from the ExecuteQuery.bat command:

Figure 2.15: Output from the ExecuteQuery.bat command

It took around 42 seconds to run 25 concurrent connections against the Standard S3 service tier, compared with 1 minute and 52 seconds on the Basic tier. That is roughly 62% less execution time. You get the performance improvement just by scaling up the service tier, without any query or database optimization.
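The comparison between the two runs can be checked with a few lines of arithmetic on the timings recorded above. The following Python sketch is only a worked calculation; the function name is hypothetical, and your own timings will differ.

```python
def percent_time_reduction(before_seconds, after_seconds):
    """Percentage by which the execution time dropped after scaling up."""
    return (before_seconds - after_seconds) / before_seconds * 100


basic = 1 * 60 + 52   # 1 minute 52 seconds on the Basic tier = 112 seconds
standard_s3 = 42      # seconds on the Standard S3 tier

print(percent_time_reduction(basic, standard_s3))  # 62.5
print(round(basic / standard_s3, 2))               # 2.67 times faster
```

Recording both numbers for each tier you test makes it easier to judge whether a further scale-up is worth its extra cost.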

Changing a service tier

You can scale up or scale down SQL Database at any point in time. This gives the flexibility to save money by scaling down to a lower service tier in off-peak hours and scaling up to a higher service tier for better performance in peak hours.

You can change a service tier either manually or automatically. Service tier change is performed by creating a replica of the original database at the new service tier performance level. The time taken to change the service tier depends on the size as well as the service tier of the database before and after the change.

Once the replica is ready, the connections are switched over to the replica. This ensures that the original database is available for applications during the service tier change. This also causes all in-flight transactions to be rolled back during the brief period when the switch to the replica is made. The average switchover time is four seconds, and it may increase if there are a large number of in-flight transactions.

You may have to add retry logic in the application to manage connection disconnect issues when changing a service tier.
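One common way to add such retry logic is to wrap database calls in a helper that retries with an increasing delay. The following Python sketch shows the idea under stated assumptions: the helper name is hypothetical, the built-in ConnectionError stands in for whatever exception your database driver raises on a dropped connection, and the attempt count and delays are arbitrary starting points.

```python
import time


def run_with_retry(operation, attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call operation(); on ConnectionError, wait and retry with exponential backoff.

    The delay doubles after each failure (1s, 2s, 4s, ...). The last failure
    is re-raised so the caller can still see the error.
    """
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

Because in-flight transactions are rolled back during the switchover, the operation passed to the helper should contain the whole transaction, so that a retry replays it from the start.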

Exercise: Provisioning a Hyperscale SQL database using PowerShell

In this section, we'll provision a Hyperscale SQL database using PowerShell:

- Open a new PowerShell console window and change the working directory to C:\Code\Chapter02. Enter and execute the following PowerShell command:

.\ProvisionAzureSQLDatabase.ps1 -ResourceGroup RGPackt -Location "East US 2" -SQLServer sshsserver -SQLDatabase toystore -Edition Hyperscale

The preceding command calls the ProvisionAzureSQLDatabase.ps1 script to provision a new Hyperscale SQL database, toystore.

Note

Change the SQLServer and SQLDatabase parameter values to avoid getting a Server/Database already exists error.

- Once the script completes, log in to the Azure portal and click All resources in the left navigation pane.

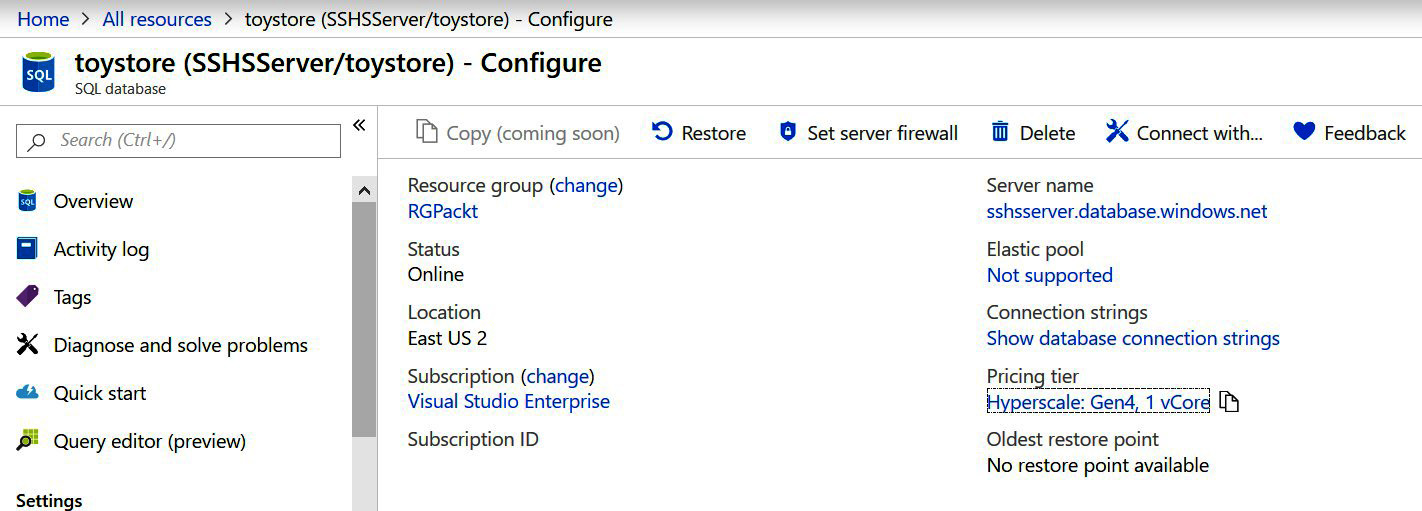

- Click toystore to open the details window:

Figure 2.16: The All resources panel

The pricing tier is Hyperscale, Gen4, 1 vCore:

Figure 2.17: The Configure pane for the toystore SQL database

In this exercise, we provisioned an SQL database with the Hyperscale service tier. The Hyperscale service tier is costly and it's advised to delete the database if it's created as part of the exercise.

Choosing between vCore and DTU-based purchasing options

When choosing between vCore and DTU-based pricing tiers, consider the following:

Licensing

The vCore pricing model provides up to 30% cost savings by using existing on-premises SQL Server Standard or Enterprise licenses with software assurance. Therefore, if you are migrating an existing on-premises SQL Server infrastructure, consider opting for the vCore pricing model.

Flexibility

The DTU-based model bundles the compute, IOPs, and storage under DTUs and provides a pre-configured range of varying DTU amounts for different types of workloads. It's therefore best suited for when you need a simple pre-configured option.

The vCore model provides flexibility when selecting compute and storage options and is therefore best when you want more transparency and control over the compute and storage options.

Consider a scenario where you have a database with high compute requirements and low storage requirements; say, 125 DTUs with a database size of 200 GB. You'll have to opt for the Premium service tier and pay for the unused storage (300 GB):

Figure 2.18: Azure portal pricing feature

Figure 2.18 is from the Azure portal and shows the pricing options for a Premium DTU-based tier. Observe that the pricing is calculated per DTU. The storage cost is inclusive of the DTUs. Therefore, in this instance, you will pay for all 500 GB of storage, even if it's not used.

In a vCore model, the compute and storage costs are calculated independently. Therefore, you only pay for the storage you use, which is 200 GB, and the vCores used:

Note

The Premium service tier includes 500 GB of free storage. An additional cost of approximately $0.16 is applied to additional storage (beyond 500 GB) up to 1 TB.

Figure 2.19: General Purpose vCore pricing model


Figure 2.19 is from the Azure portal and shows the pricing options for the General Purpose vCore pricing model. Observe that the pricing is calculated per vCore and per GB of storage used. Therefore, you pay for the storage you use. You can, however, scale the storage up or down at any time, as per your requirements.

Consider another scenario, where a team is just starting up with a product and is looking for an SQL database pricing tier; a Standard S2 or S3 tier with 50 to 100 DTUs and a maximum of 250 GB would be a good option to go for. As the product matures and the scalability requirements become clear, the team can scale up accordingly.

Note

Once you move to the Hyperscale service tier, you can't move to any other service tier.

You can scale between vCore-based and DTU-based service tiers. When scaling from DTU- to vCore-based pricing tiers, consider the following rule of thumb for choosing the correct compute size: every 100 DTUs in the Standard tier correspond to roughly 1 vCore in the General Purpose tier, and every 125 DTUs in the Premium tier correspond to roughly 1 vCore in the Business Critical tier.
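The rule of thumb can be written as a small helper for a first estimate. In the following Python sketch, the two ratios come from the text above, while the function name, rounding up to a whole vCore, and the one-vCore minimum are assumptions made for illustration.

```python
import math

DTUS_PER_VCORE = {"Standard": 100, "Premium": 125}
TARGET_TIER = {"Standard": "General Purpose", "Premium": "Business Critical"}


def dtu_to_vcores(dtu_tier, dtus):
    """Return (vcore_service_tier, estimated_vcores) for a DTU-based database."""
    # Round up so the estimate never undersizes the compute (assumption).
    vcores = max(1, math.ceil(dtus / DTUS_PER_VCORE[dtu_tier]))
    return TARGET_TIER[dtu_tier], vcores
```

For example, a Standard S6 database (400 DTUs) maps to about 4 vCores in General Purpose. Treat the result as a starting point and validate it by monitoring the database after the move.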

Summary

Azure SQL Database and SQL Managed Instance have different purchasing options and service tiers to support varying workloads. SQL Database has two purchase options, DTU and vCore, while SQL Managed Instance is only available with the vCore model. The DTU purchasing option measures performance in DTUs. A DTU hides the complexity of measuring performance in terms of CPU and memory and provides a simple way of measuring performance. It's good for teams that don't have specialized DBAs and for new databases/applications where we don't have historical performance metrics for the database.

The vCore model is more similar to an on-premises SQL Server wherein we get to choose compute (vCore) and storage separately. It's best for teams with specialized DBAs and for migrating on-premises workloads to Azure (where we have historical performance metrics for the database).

We also looked at the different service tiers for each purchasing model, along with their underlying architecture differences and use cases.

We learned how to use DMA to get SKU recommendations when migrating an on-premises SQL workload to SQL Database or SQL Managed Instance.

In the next chapter, we'll learn about techniques and considerations to migrate an on-premises SQL Server database to Azure SQL managed database offerings.

Download code from GitHub
